Emotion plays a crucial role in our daily lives, manifesting through various forms such as auditory cues, facial expressions, and physiological signals (He L. et al., 2022). Among these, electroencephalogram (EEG) signals are particularly noteworthy due to their objective nature and resistance to falsification, making them a reliable indicator of different emotional states. With the rapid advancements in EEG acquisition technology and the evolution of machine learning techniques, the field of EEG-based emotion recognition has gained significant attention and made remarkable progress (Wang et al., 2024).

EEG signals exhibit distinct temporal, spatial, and spectral characteristics under different emotional states (Li et al., 2019; Mognon et al., 2011). For example, during states of happiness or sadness, EEG may show different wave amplitudes and frequency distributions (Alarcao and Fonseca, 2017). Specific EEG frequency bands such as alpha waves (8–12 Hz) and beta waves (12–30 Hz) are known to be associated with different emotional states. Alpha waves are generally linked to relaxation and calmness (Davidson et al., 2000), while beta waves often indicate alertness and tension (Ray and Cole, 1985). Beyond analyzing these features individually, recent studies have demonstrated that fusing spectral and spatial features can significantly enhance emotion recognition performance. For example, a spatio-temporal self-constructing graph neural network (ST-SCGNN) combines spectral information from EEG frequency bands with spatial activation patterns across brain regions, leveraging their complementary strengths for cross-subject emotion recognition (Pan et al., 2023). This combination gives a more comprehensive picture of how different frequency bands manifest across specific spatial areas of the brain, providing a richer representation of emotional states. In addition to frequency and spatial attributes, temporal features are crucial for capturing the dynamic changes in brain activity over time. For example, temporal models such as Temporal Convolutional Networks (TCN) have been used to encode these time-dependent characteristics, significantly improving the performance of EEG-based cross-domain emotion recognition (He Z. et al., 2022). This highlights the importance of temporal modeling for capturing the evolving nature of emotional responses in EEG signals.

Neuroscientific research suggests that emotional processing involves a closely integrated system across both hemispheres and the anterior-posterior regions of the brain, with significant overlap in their roles. The left hemisphere tends to be more involved in processing emotions related to approach behaviors and positive affect, such as happiness and satisfaction, whereas the right hemisphere may play a greater role in processing emotions related to withdrawal behaviors and negative affect, such as sadness and fear (Celeghin et al., 2017). Similarly, the anterior brain regions, particularly the frontal lobe, are associated with heightened activity in response to positive emotions, while posterior regions, including the occipital lobe, show increased activity when processing visual emotional stimuli (Kringelbach and Berridge, 2010; Abdel-Ghaffar et al., 2024).

In addition to these spatial dimensions, specific frequency bands are also tied to particular brain regions and emotional states. For instance, alpha waves (8–12 Hz) are typically dominant in posterior brain regions, particularly the occipital and parietal areas, and are associated with relaxation and calmness. In contrast, beta waves (12–30 Hz) are more prominent in frontal regions, reflecting alertness and cognitive processing. The strength of these bands also differs along the anterior-posterior and left-right axes, with left-right asymmetries reflecting emotional lateralization (Davidson et al., 2000).

This link between directional spatial organization and spectral characteristics is supported by the psychological understanding that the anterior-posterior axis is more involved in processing emotional stimuli, while the left-right axis helps differentiate positive and negative emotions (Davidson, 1992). Our proposed model leverages these insights by enabling the attention mechanism to focus on specific frequency bands according to brain region, with the aim of improving emotion recognition performance.

Emotion classification based on EEG signals has made significant strides in recent years, since EEG reflects human emotional states objectively and precisely through electrophysiological manifestations. Wang et al. (2011) used a support vector machine (SVM) classifier to differentiate emotional states, but their method struggled with the complex, high-dimensional nature of EEG data. Alhagry et al. (2017) employed a two-layer Long Short-Term Memory (LSTM) network that captured temporal dependencies in EEG signals and achieved satisfactory results. Despite this progress, features from the spatial, temporal, and frequency domains still need to be fused comprehensively. Tao et al. (2020) introduced an attention-based convolutional recurrent neural network (ACRNN) that employs a channel-wise attention mechanism with a CNN for adaptive spatial feature extraction and extended self-attention within an RNN to analyze temporal dynamics, thereby capturing crucial information across the spatial and temporal domains. To further exploit spectral information, Xiao et al. (2022) used a CNN with spectral and spatial attention mechanisms to dynamically adjust weights across brain regions and frequency bands, and incorporated a temporal attention mechanism within a bidirectional LSTM to analyze temporal dependencies in the data. Zhu et al. (2024) developed the Self-Organized Graph Pseudo-3D Convolution (SOGPCN), which uses a self-organizing graph convolution module to extract spatial features from each frequency band, 3D-CNN layers followed by dot-product attention layers to focus on valuable information, and an LSTM layer to model temporal dynamics. In our previous work (Liu et al., 2021), we developed a 3D convolutional network (3D-CNN) that integrates a parallel positional-spectral-temporal attention module to learn crucial information across different domains for EEG emotion recognition.
Although the aforementioned studies have effectively incorporated spatial, temporal, and spectral information, they typically viewed the EEG's spatial domain as a unified whole while ignoring the functional differences between anterior-posterior and left-right brain regions.

In this paper, we propose a Directional Spatial and Spectral Attention Network (DSSA Net) that enhances spatial, spectral, and temporal features, with particular emphasis on attention along complementary orientations of the brain. It is composed of Position Attention (PA), Spectral Attention (SA), and Temporal Attention (TA) modules. The PA module, consisting of a vertical attention (VA) branch and a horizontal attention (HA) branch, learns spatial attention along two orthogonal orientations, anterior-posterior and left-right, while the SA module learns attention over frequency bands. Together, the PA and SA modules produce a 3D attention map that enhances important frequency bands within their spatial context. Unlike conventional undirected models, which treat the spatial dimensions of EEG signals uniformly, DSSA Net exploits the directional nature of brain activity by modeling attention separately along the anterior-posterior and left-right axes. This directional approach aligns with established neuroscientific findings that different hemispheres and brain regions are differentially involved in emotional processing, allowing the network to capture emotion-specific patterns in EEG data more accurately. Subsequently, the TA module models the temporal dynamics of the enhanced feature sequence using a Transformer encoder. In this way, our framework captures critical features across the spatial, spectral, and temporal domains and highlights the contributions of brain regions along the anterior-posterior and left-right directions to emotion recognition. The primary contributions of this paper are as follows:

• In this paper, we propose the Directional Spatial and Spectral Attention Network (DSSA Net), a novel EEG emotion recognition framework that emphasizes the contributions of various brain regions to emotion recognition, specifically taking into account the functional differences between the anterior and posterior as well as the left and right brain regions, and the frequency bands associated with different emotional states.

• We conducted comprehensive experiments on benchmark datasets such as DEAP, SEED, and SEED-IV, which confirmed that DSSA Net significantly outperforms most existing methods in emotion recognition tasks. We attribute this performance largely to its analysis of EEG signals along distinct brain orientations, notably the anterior-posterior and left-right directions. Ablation studies further confirm the importance of jointly analyzing spatial directions and spectral features.

2 Related work

Emotion classification based on EEG signals is a meaningful research direction. As an electrophysiological manifestation of the central nervous system, EEG objectively and precisely reflects human emotional states. With the development of artificial intelligence technology, emotion recognition has become an active research topic in human-computer interaction.

2.1 Traditional machine learning based methods

In recent years, several studies have applied traditional machine learning techniques to EEG-based emotion recognition. Koelstra et al. (2011), Wang et al. (2011), and Bahari and Janghorbani (2013) employed Gaussian Naive Bayes, Support Vector Machines (SVM), and a combination of recurrence plot analysis with k-nearest neighbor classifiers, respectively, to analyze emotional states. Jiang et al. (2019) and Zhang et al. (2024) improved cross-subject emotion recognition with decision tree classifiers and a dynamically optimized Random Forest model, respectively, each combined with algorithmic adaptations for better performance. Xu X. et al. (2024) addressed the challenges of small EEG sample sizes and high feature dimensionality by leveraging both local and global label relevance for feature selection. Their method employs orthogonal regression to map EEG features into a low-dimensional space that captures local label correlations, and then integrates global label correlations from the original multi-dimensional emotional label space. Xu et al. (2021) optimized EEG emotion recognition by globally evaluating and reducing feature redundancy, selecting informative and non-redundant features to improve performance across various datasets.

2.2 Deep learning based methods

Compared with traditional methods, deep learning offers significant advantages in high-level representation learning and end-to-end training. Al-Nafjan et al. (2017) used Deep Neural Networks (DNN) with Power Spectral Density (PSD) features to identify human emotions. To account for the temporal characteristics of EEG, Alhagry et al. (2017) achieved satisfactory emotion recognition results by applying a two-layer Long Short-Term Memory (LSTM) network to EEG signals. Hefron et al. (2017) implemented LSTM-based Recurrent Neural Networks (RNNs) to model the time dependence of cognition-related EEG signals. However, these studies did not fully exploit the multi-dimensional information available in EEG signals. To address this limitation, some researchers have explored multi-dimensional features in EEG-based emotion recognition. Bashivan et al. (2015) proposed a deep recurrent-convolutional neural network (R-CNN) that incorporates spatial and temporal dimensions for EEG-based cognitive and mental load classification. Zhang et al. (2017) developed a deep CNN model to learn robust spatio-temporal feature representations of raw EEG data for motion intention classification. Despite considering multi-dimensional features, these studies still have limitations: they often overlook critical aspects such as the frequency domain or the dynamic changes in EEG signals over longer periods, which are essential for a more comprehensive understanding of emotional states. Wu et al. (2024) enhanced the adaptability of EEG emotion recognition by using a dual-graph structure to capture emotion-relevant and emotion-irrelevant features, and applying orthogonal purification to reduce redundancy and align feature spaces across subjects.

2.3 Attention based methods

To further enhance the analysis, attention mechanisms have been introduced to capture and emphasize the most relevant features across different dimensions, providing a more detailed and accurate basis for EEG-based emotion recognition. Building on this foundation, several advanced approaches have emerged. Tao et al. (2020) proposed an attention-based convolutional recurrent neural network (ACRNN) to extract more discriminative features and improve emotion recognition accuracy. The model employs a channel-wise attention mechanism and a CNN to adaptively extract spatial information, and integrates extended self-attention with an RNN to explore temporal information based on intrinsic similarities within the EEG signals. To exploit the differing importance of frequency band features, Xiao et al. (2022) introduced the four-dimensional attention-based neural network (4D-aNN) for EEG emotion recognition. The 4D-aNN employs spectral and spatial attention mechanisms to dynamically adjust the weights assigned to different brain regions and frequency bands, and a convolutional neural network (CNN) processes this spectral and spatial information. The model also integrates a temporal attention mechanism within a bidirectional Long Short-Term Memory (LSTM) network to explore temporal dependencies in the 4D EEG representations, aiming to make fuller use of the signal information for emotion recognition. Zhang et al. (2023) introduced an attention-based hybrid deep learning model for EEG emotion recognition. The model first extracts differential entropy features from EEG data, organized by electrode positions. It then uses a convolutional encoder to capture spatial features and a band attention mechanism to weight different frequency bands.
A long short-term memory (LSTM) network with a time attention mechanism then extracts and highlights key temporal features, enhancing classification accuracy. To further consider spatial correlations and temporal context information, Zhu et al. (2024) presented the Self-Organized Graph Pseudo-3D Convolution (SOGPCN). Unlike traditional methods that construct static graph structures for brain channels, SOGPCN dynamically addresses the varying spatial relationships between electrodes across different frequency bands. The process begins with creating a self-organizing map for each channel within each frequency band, identifying the 10 most relevant channels. Graph convolution then captures spatial relationships within these maps. Subsequently, pseudo-three-dimensional convolution paired with dot product attention is used to extract temporal features from the EEG sequences. Finally, an LSTM layer is utilized to learn contextual information between adjacent time-series data, aiming to improve the accuracy and reliability of emotion recognition from EEG signals.

Building on these advances in attention-based EEG emotion recognition, it is evident that incorporating attention mechanisms along different dimensions significantly improves feature extraction. However, many existing methods still do not integrate attention across all dimensions, potentially missing crucial features. Moreover, even when these dimensions are considered, existing methods rarely explore the interactions between specific frequency bands and directional spatial positions within critical brain regions, such as the left and right hemispheres and the anterior and posterior areas. These areas are essential for a nuanced understanding of emotional processing, yet they are frequently overlooked in the joint analysis of spatial and spectral data. To address these issues, we introduce DSSA Net, a framework that not only incorporates attention mechanisms across the spatial, spectral, and temporal dimensions but also explicitly links specific frequency bands to spatially distinct brain regions. It uses Position Attention (PA) to highlight key positions related to emotion processing, which are directly associated with distinct frequency bands through Spectral Attention (SA). By aligning spatial distinctions with spectral characteristics, the model improves the detection of emotional states. Temporal Attention (TA) further prioritizes significant temporal slices, collectively improving emotion recognition accuracy.

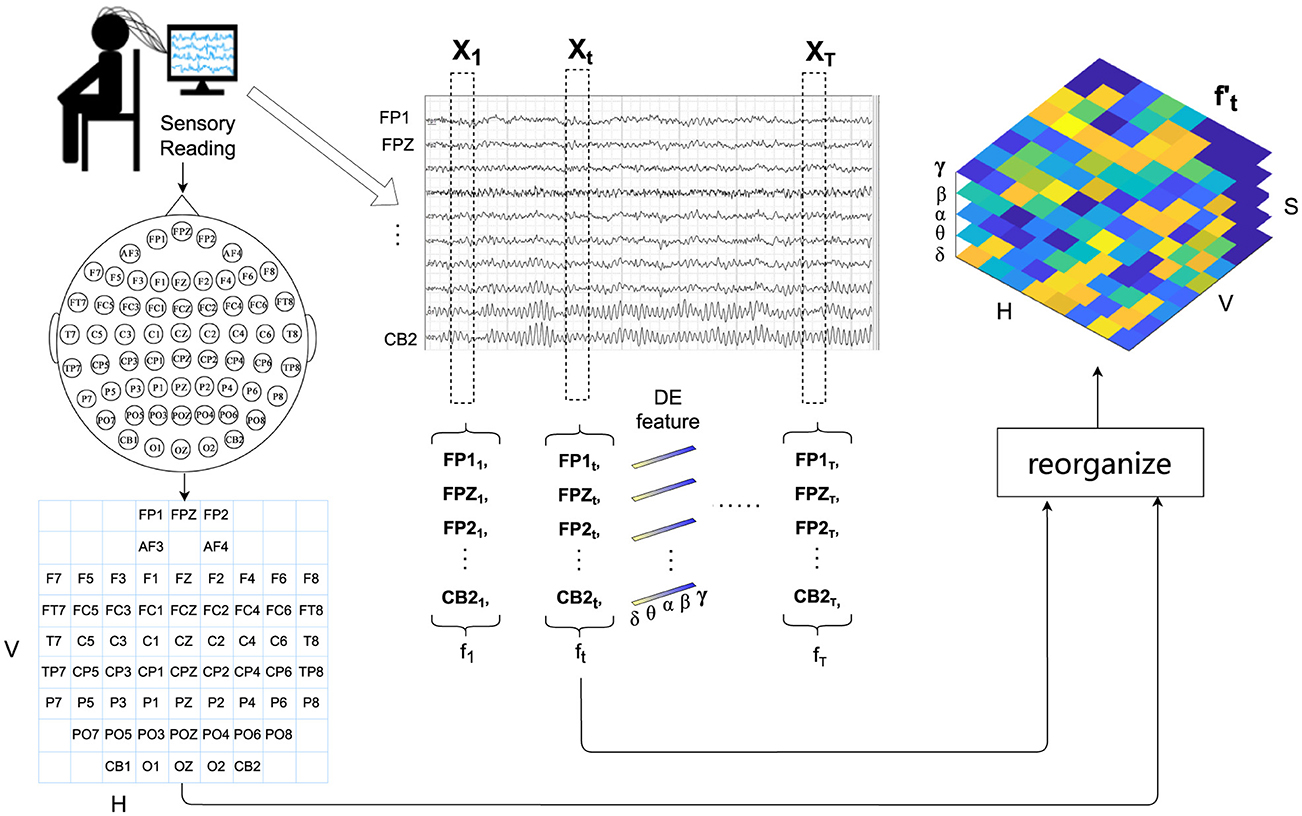

3 Methodology

3.1 Overview of model structure

Figure 1 illustrates the proposed DSSA Net framework, which comprises three modules. The signal processing and feature extraction module segments the EEG signals into non-overlapping samples. For each sample, it extracts differential entropy features across five frequency bands and reorganizes them into a 3D feature representation following the layout of the EEG electrodes.

Figure 1. Overview of the DSSA Net framework for EEG-based emotion recognition. The framework includes: (1) Signal Processing and Feature Extraction, which segments EEG signals and extracts differential entropy features across five frequency bands; (2) Local Feature Learning, featuring Position Attention (PA) with Vertical (VA) and Horizontal (HA) branches for spatial focus, and Spectral Attention (SA) for frequency-specific information; (3) Temporal Modeling (TA) using a Transformer encoder to capture temporal dynamics, followed by an MLP head for emotion prediction. The 3D attention map combines spatial and spectral insights to enhance feature representation.

The design of the Position Attention (PA) module, which includes vertical attention (VA) and horizontal attention (HA) branches, is grounded in neuroscientific evidence that different brain regions play specific roles in emotional processing. The VA branch focuses on anterior-posterior brain activity, capturing differences between frontal and occipital regions, which are known to be involved in cognitive and emotional responses. The HA branch focuses on left-right differences, reflecting the lateralization of emotion, in which the left hemisphere is more associated with positive emotions and the right hemisphere with negative emotions (Davidson, 1992). Together, these branches align the model with the spatial structure of brain activity outlined in the introduction.

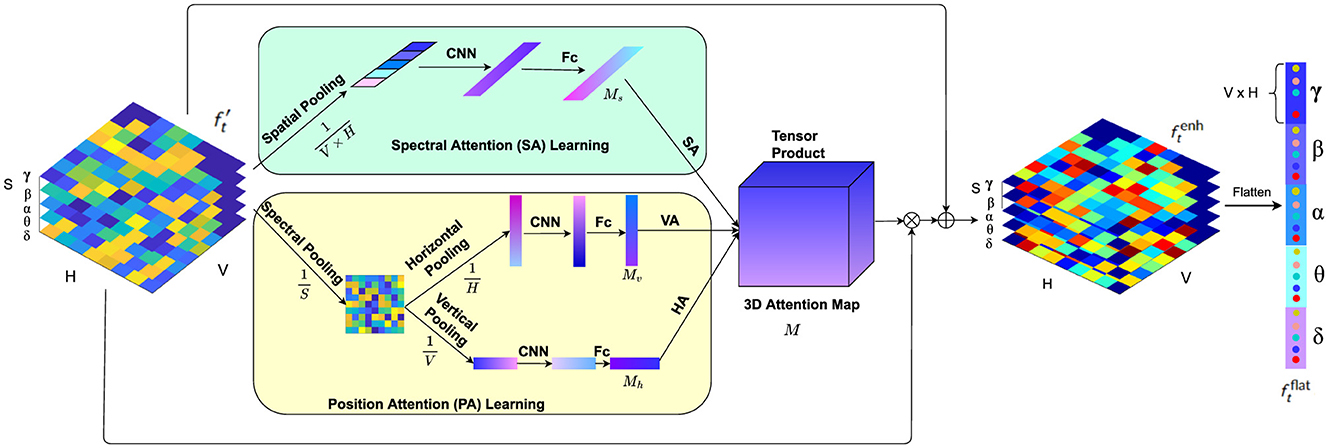

The local feature learning module contains a Position Attention (PA) branch with vertical attention (VA) and horizontal attention (HA) to emphasize the significant brain regions by analyzing comprehensive directional activation patterns, and a Spectral Attention (SA) branch to identify the frequency bands that are essential for emotion recognition.

The choice of the Spectral Attention (SA) branch is aimed at leveraging the frequency-specific emotional information, such as alpha and beta waves, which are linked to relaxation and alertness, respectively. This complements the spatial focus of VA and HA by allowing the model to focus on the most informative frequency bands.

The 3D attention map, learned from the VA, HA, and SA branches, is then applied to enhance the 3D feature representation via element-wise multiplication. The Temporal Modeling (TA) module leverages a Transformer encoder to capture the temporal dynamics among the enhanced feature representation sequence of the EEG signals. Finally, the attention-weighted EEG features are averaged along the temporal domain and fed into a multilayer perceptron (MLP) head to predict the emotional state.
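The temporal stage described above (Transformer encoding over the per-second sequence, temporal averaging, MLP head) can be sketched in NumPy as follows. This is a minimal, hedged illustration, not the authors' implementation: a single-head scaled dot-product self-attention layer with one residual connection stands in for the full Transformer encoder (no layer normalization, feed-forward sublayer, or multi-head split), and all sizes and weight names (`Wq`, `Wk`, `Wv`, `W1`, `W2`) are hypothetical. Each row of `X` is assumed to be the flattened, attention-weighted feature representation of one second.

```python
import numpy as np

def softmax(x, axis=-1):
    # Numerically stable softmax along the given axis
    z = x - x.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(X, Wq, Wk, Wv):
    # X: (T, D), one flattened attention-weighted feature vector per second
    Q, K, values = X @ Wq, X @ Wk, X @ Wv
    scores = Q @ K.T / np.sqrt(K.shape[-1])  # (T, T) scaled dot products between seconds
    return softmax(scores, axis=-1) @ values  # each second attends to all seconds

def predict(X, p):
    Z = X + self_attention(X, p["Wq"], p["Wk"], p["Wv"])  # residual connection
    pooled = Z.mean(axis=0)                                # average over the temporal axis
    hidden = np.maximum(0.0, pooled @ p["W1"])             # MLP head: hidden layer + ReLU
    return softmax(hidden @ p["W2"])                       # class probabilities

rng = np.random.default_rng(0)
T, D, d_k, n_hidden, n_classes = 6, 45, 16, 32, 3  # hypothetical sizes
p = {name: rng.normal(scale=0.1, size=shape) for name, shape in
     [("Wq", (D, d_k)), ("Wk", (D, d_k)), ("Wv", (D, D)),
      ("W1", (D, n_hidden)), ("W2", (n_hidden, n_classes))]}
X = rng.normal(size=(T, D))
probs = predict(X, p)
```

Because this sketch has no positional encoding, self-attention followed by temporal averaging is invariant to the order of the seconds: reversing `X` leaves `probs` unchanged. Transformer encoders usually add positional encodings when order matters; the text does not state whether DSSA Net does.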

3.2 Signal processing and feature organization

This section describes the EEG signal processing and feature representation. First, we partition the EEG signals into samples of T seconds, each assigned the same emotion label as its original EEG signal. For each sample, the EEG signal of each channel is passed through a Butterworth bandpass filter between 0.5 and 50 Hz and then sliced into 1-s non-overlapping segments. For each segment, differential entropy (DE) features are extracted in five frequency bands: δ (1–3 Hz), θ (4–7 Hz), α (8–13 Hz), β (14–30 Hz), and γ (31–50 Hz), resulting in a five-dimensional feature vector per channel.

Therefore, for all C channels in the t-th second of a sample, a 2D feature matrix $f_t \in \mathbb{R}^{C \times S}$ is obtained, where S = 5 is the number of spectral bands.
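A minimal NumPy sketch of the segmentation and per-second DE extraction, assuming NumPy only. To stay dependency-free it replaces the Butterworth filter with an ideal FFT band mask and skips the 0.5–50 Hz pre-filter (in practice one might use `scipy.signal.butter` with `scipy.signal.sosfiltfilt`). It uses the closed-form differential entropy of a Gaussian signal, 0.5 ln(2πeσ²), a common choice in the EEG literature that assumes each band-limited segment is approximately Gaussian. The helper names, sampling rate, and toy signals are hypothetical.

```python
import numpy as np

# Band edges in Hz from the text: delta, theta, alpha, beta, gamma (S = 5)
BANDS = [(1, 3), (4, 7), (8, 13), (14, 30), (31, 50)]

def split_seconds(sample, fs):
    # sample: (C, T*fs) -> (T, C, fs) non-overlapping 1-s segments
    C, n = sample.shape
    T = n // fs
    return sample[:, :T * fs].reshape(C, T, fs).transpose(1, 0, 2)

def band_limit(x, fs, lo, hi):
    # Ideal FFT band-pass: zero every frequency bin outside [lo, hi] Hz
    spec = np.fft.rfft(x, axis=-1)
    freqs = np.fft.rfftfreq(x.shape[-1], d=1.0 / fs)
    mask = (freqs >= lo) & (freqs <= hi)
    return np.fft.irfft(spec * mask, n=x.shape[-1], axis=-1)

def differential_entropy(x, eps=1e-12):
    # Gaussian DE per channel: 0.5 * ln(2 * pi * e * variance)
    return 0.5 * np.log(2 * np.pi * np.e * (np.var(x, axis=-1) + eps))

def de_features(segment, fs):
    # segment: (C, fs) -> f_t of shape (C, S)
    return np.stack([differential_entropy(band_limit(segment, fs, lo, hi))
                     for lo, hi in BANDS], axis=-1)

fs, T = 200, 3                                   # hypothetical sampling rate and sample length
t = np.arange(T * fs) / fs
sample = np.stack([np.sin(2 * np.pi * 10 * t),   # channel 0: 10 Hz (alpha)
                   np.sin(2 * np.pi * 20 * t)])  # channel 1: 20 Hz (beta)
segments = split_seconds(sample, fs)             # (3, 2, 200)
f_t = de_features(segments[0], fs)               # (2, 5)
```

For these toy sinusoids, the largest DE value per channel falls in the alpha band for channel 0 and the beta band for channel 1, since nearly all signal variance sits in that band.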

The extracted DE features in each band correspond to different physiological characteristics of brain activity. For example, α waves (8–13 Hz) are dominant in the posterior regions and are associated with states of relaxation and calmness, reflecting reduced cognitive load and increased internal focus. β waves (14–30 Hz), on the other hand, are prominent in the frontal regions during states of alertness, focus, and even stress, indicating active cognitive processing. δ waves (1–3 Hz) are often linked to deep sleep and restorative brain activities, which can affect the baseline emotional state. θ waves (4–7 Hz) play a role in memory and emotional regulation, and often become more pronounced during meditative states or drowsiness. γ waves (31–50 Hz) are associated with high-frequency cognitive processing and attention, particularly during intense emotional experiences. These band-specific characteristics align with the brain's horizontal and vertical differentiation in emotional processing, as described in the introduction. By reorganizing the EEG data into a 3D feature representation following the electrode layout, our model integrates both the spatial and spectral information in a manner that reflects these known neural patterns.

To fully utilize the positional information of EEG electrodes, we follow the H × V grid layout of EEG electrodes shown on the left of Figure 2 and reorganize $f_t \in \mathbb{R}^{C \times S}$ of the C EEG channels into a reshaped EEG feature representation $f_t' \in \mathbb{R}^{H \times V \times S}$. For empty grid cells that do not correspond to actual electrodes, we use bilinear interpolation to fill in the missing values, since the electrical activity of the brain is spatially continuous and signals from adjacent electrodes are usually highly correlated (Valk et al., 2020).

Figure 2. Detailed workflow of signal processing and feature organization for EEG-based emotion recognition. This includes filtering, segmentation, differential entropy feature extraction across five frequency bands, and the reorganization of extracted features into a 3D representation following the EEG electrode layout for enhanced spatial and spectral analysis.
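The grid reorganization can be sketched as follows. The electrode-to-grid mapping `positions` is hypothetical (real layouts depend on the montage), and, as a labeled simplification, empty cells are filled by iteratively averaging their already-filled 4-neighbours instead of the bilinear interpolation the text describes.

```python
import numpy as np

def to_grid(f_t, positions, H, V):
    # f_t: (C, S); positions[c] = (h, v) grid cell of channel c (hypothetical layout)
    grid = np.full((H, V, f_t.shape[1]), np.nan)
    for c, (h, v) in enumerate(positions):
        grid[h, v] = f_t[c]
    return grid

def fill_empty(grid, max_iter=100):
    # Fill empty cells with the mean of their filled 4-neighbours, repeating until full
    # (a simple stand-in for bilinear interpolation, not the authors' method)
    g = grid.copy()
    H, V, _ = g.shape
    for _ in range(max_iter):
        empty = np.isnan(g[..., 0])
        if not empty.any():
            break
        update = g.copy()
        for h, v in zip(*np.nonzero(empty)):
            neighbours = [g[a, b] for a, b in ((h - 1, v), (h + 1, v), (h, v - 1), (h, v + 1))
                          if 0 <= a < H and 0 <= b < V and not np.isnan(g[a, b, 0])]
            if neighbours:
                update[h, v] = np.mean(neighbours, axis=0)
        g = update
    return g

# Toy example: 4 channels on a 3 x 3 grid with S = 2 bands
f_t = np.array([[1.0, 10.0], [2.0, 20.0], [3.0, 30.0], [4.0, 40.0]])
grid = to_grid(f_t, [(0, 1), (1, 0), (1, 2), (2, 1)], H=3, V=3)
filled = fill_empty(grid)
```

Here the centre cell becomes the mean of its four electrode neighbours, `[2.5, 25.0]`, and each corner becomes the mean of its two filled neighbours.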

3.3 Local feature learning

In psychological and cognitive research, studies have consistently demonstrated differential activation patterns between the left and right hemispheres during various cognitive tasks and emotional processing. For instance, lateralization theory suggests that emotions may be processed differently in the left and right hemispheres, leading to distinct activation patterns (Davidson, 1992). Investigations of the anterior-posterior axis have also highlighted the significance of anterior and posterior brain areas in emotional processing (Harmon-Jones and Gable, 2018). These studies indicate that brain regions along both the horizontal (left-right) and vertical (anterior-posterior) dimensions play crucial roles in emotional processing, motivating attention mechanisms that can capture both anterior-posterior and left-right directional cues in neural data. In spectral analysis, research has likewise underscored the importance of different frequency bands in encoding emotional states: specific bands, such as alpha, beta, and gamma, exhibit distinct patterns of activity corresponding to different emotional experiences (Jenke et al., 2014). Capturing effective spectral information is therefore essential for accurately decoding emotional states from neural signals.

Motivated by these findings, we propose a novel local feature learning framework as depicted in Figure 3, which consists of two pivotal modules: the Position Attention (PA) module and the Spectral Attention (SA) module. The PA module captures vital cues from both the anterior-posterior and left-right brain regions, while the SA module emphasizes crucial spectral features relevant to emotional states. The outputs of these modules compose a 3D attention map, which is used to weight the EEG feature representation by element-wise product. This combined attention mechanism highlights more important spectral bands among the spatial regions in the anterior-posterior and left-right directions. The weighted feature representation is then integrated with the original feature to obtain a comprehensive representation encapsulating salient spatial and spectral information, which is then flattened into a feature vector following the grid layout within each frequency band.

Figure 3. The local feature learning framework, consisting of the PA module and the SA module. The PA module (faint yellow) highlights the more critical features among the left and right as well as the anterior and posterior brain regions, while the SA module (faint green) gives prominence to the key EEG spectral bands for emotion recognition.
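The text does not spell out how the three masks are combined, so the following NumPy sketch rests on two stated assumptions: the 3D attention map is the outer product of the horizontal, vertical, and spectral masks (called `M_h`, `M_v`, `M_s`, following the notation of the PA and SA subsections), and "integrating" the weighted features with the original ones is a residual addition. The final flattening follows the text: grid values are laid out band by band.

```python
import numpy as np

def apply_attention(f, M_h, M_v, M_s):
    # f: (H, V, S); M_h: (H,), M_v: (V,), M_s: (S,)
    A = np.einsum("h,v,s->hvs", M_h, M_v, M_s)  # 3D attention map (outer product, assumed)
    weighted = f * A                            # element-wise product
    fused = f + weighted                        # residual integration (assumed)
    # Flatten band by band, each band laid out in grid (H, V) order
    return fused.transpose(2, 0, 1).reshape(-1)

f = np.arange(12.0).reshape(2, 3, 2)  # toy grid: H=2, V=3, S=2
v = apply_attention(f, np.full(2, 0.5), np.full(3, 1 / 3), np.full(2, 0.5))
```

With uniform masks every cell receives the same weight (0.5 × 1/3 × 0.5 = 1/12), so each output value is the original value scaled by 1 + 1/12; with all-zero masks the function simply returns the band-by-band flattening of `f`.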

3.3.1 Position attention module

Studies have highlighted the significance of both anterior-posterior and left-right (hemispheric) brain regions in emotional processing (Harmon-Jones and Gable, 2018). Neuroscience research has also consistently demonstrated that different brain regions activate differently during various emotional tasks; in particular, lateralization refers to the distinct activation patterns that the left and right hemispheres exhibit when processing emotions (Davidson, 1992). These differential activation patterns suggest that capturing spatial attention along these dimensions is crucial for understanding and classifying emotions. Inspired by these insights, we propose a Position Attention (PA) module with two branches that learn spatial attention masks in the vertical and horizontal directions to highlight brain regions valuable for emotion classification: the vertical attention (VA) branch and the horizontal attention (HA) branch. The VA branch captures differential activation and highlights the more important features among anterior-posterior brain regions, while the HA branch highlights salient information in the left-right hemispheric regions. Unlike previous spatial attention methods (Tao et al., 2020), which use channel-wise attention to enhance feature representations by weighting each electrode independently, our method considers the significance of positions along the left-right and anterior-posterior axes through horizontal and vertical attention. This enables the model to capture spatial dependencies in both directions simultaneously, enhancing its ability to detect complex interactions between brain regions and improving emotion classification accuracy.

Specifically, the PA module operates as follows. Given the input EEG feature representation $f_t'$, the differential entropy (DE) features of all S frequency bands at each grid position are first pooled, yielding the average spectral distribution over the grid layout of the EEG electrodes. Horizontal pooling is then performed to obtain the average spectral feature $R_v(f_t')$ along the anterior-posterior direction, and vertical pooling is performed to obtain the average spectral feature $R_h(f_t')$ along the left-right hemispheric direction.

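$$R_v(f_t') = \frac{1}{S \times H} \sum_{i=1}^{S} \sum_{j=1}^{H} f'_{t,ij} \tag{1}$$

$$R_h(f_t') = \frac{1}{S \times V} \sum_{i=1}^{S} \sum_{k=1}^{V} f'_{t,ik} \tag{2}$$

where i, j, and k represent the indices for the spectral, horizontal, and vertical dimensions of the input tensor, respectively. $R_v(f_t')$ is therefore a V-dimensional vector and $R_h(f_t')$ an H-dimensional vector.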
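Equations (1) and (2) amount to averaging the H × V × S tensor over two of its three axes. A minimal NumPy sketch, assuming the axis order (H, V, S) of $f_t'$; the function name and toy tensor are illustrative only.

```python
import numpy as np

def directional_pooling(f):
    # f: (H, V, S) feature grid f_t'
    R_v = f.mean(axis=(0, 2))  # Eq. (1): average over horizontal and spectral axes -> (V,)
    R_h = f.mean(axis=(1, 2))  # Eq. (2): average over vertical and spectral axes -> (H,)
    return R_v, R_h

f = np.arange(12.0).reshape(2, 3, 2)  # toy grid: H=2, V=3, S=2
R_v, R_h = directional_pooling(f)
print(R_v, R_h)  # [3.5 5.5 7.5] [2.5 8.5]
```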

While pooling along these directions effectively summarizes spectral features, it can result in the loss of certain fine-grained spatial details. To address this, the attention mechanisms in the orthogonal directions (VA and HA branches) help to retain important spatial relationships that might be compressed during the pooling process. Additionally, the original feature maps, which contain the full spatial distribution, are reintroduced in later stages, ensuring that the overall spatial structure is preserved.

Next, fully connected layers and activation functions are applied to generate the attention masks:

$$M_v = \phi\left(\mathrm{ReLU}\left(fc\left(f_{1d}\left(R_v(f_t')\right)\right)\right)\right) \tag{3}$$

$$M_h = \phi\left(\mathrm{ReLU}\left(fc\left(f_{1d}\left(R_h(f_t')\right)\right)\right)\right) \tag{4}$$

where $f_{1d}$ denotes the 1D convolution operation and $fc$ denotes the fully connected layer, which combines the extracted features. To keep the dimensionality of the EEG features consistent throughout the convolutional layers, we use padding that preserves the dimensions between the input and output of each convolution. This keeps the attention masks aligned with the feature maps, so the recalibrated weights from the attention modules can be applied directly to the corresponding spatial and spectral positions without resizing, which could otherwise introduce artifacts or reduce the effectiveness of the attention mechanisms. ReLU denotes the rectified linear unit activation, which introduces non-linearity so that the model can learn non-linear feature transformations; it also mitigates the vanishing gradient problem and supports efficient gradient-based optimization.

$\phi$ denotes the Softmax function, applied to the output of the fully connected layer. Softmax normalizes its inputs into non-negative weights that sum to 1, so each element of the resulting mask expresses the relative importance of one position along the corresponding direction (rather than the probability of an emotion class). By combining ReLU for non-linear transformation with Softmax for normalization, the module can learn complex patterns while producing interpretable attention weights.

Through the position attention learning module, the learned $V$-dimensional vector $M_v$ and $H$-dimensional vector $M_h$ serve as attention masks that highlight brain regions along the vertical and horizontal directions, respectively.
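The two position-attention branches of Eqs. (3) and (4) can be sketched in NumPy as follows. This is an illustrative sketch, not the authors' implementation: it assumes the feature map $f_t'$ is laid out as $(V, H, S)$, that $R_v$ averages over the horizontal and spectral axes and $R_h$ over the vertical and spectral axes (the pooling operators are defined earlier in the paper and may differ), and it uses hypothetical sizes and randomly initialized weights.

```python
import numpy as np

def softmax(z):
    e = np.exp(z - z.max())      # subtract the max for numerical stability
    return e / e.sum()

def conv1d_same(x, kernel):
    # Stride-1 cross-correlation with zero padding that keeps len(x) unchanged (odd kernel).
    pad = (kernel.shape[0] - 1) // 2
    padded = np.pad(x, pad)
    return np.array([padded[i:i + kernel.shape[0]] @ kernel for i in range(x.shape[0])])

def attention_branch(pooled, kernel, w_fc, b_fc):
    # phi(ReLU(fc(f1d(pooled)))), mirroring the structure of Eqs. (3)-(4).
    return softmax(np.maximum(w_fc @ conv1d_same(pooled, kernel) + b_fc, 0.0))

V, H, S = 9, 9, 5                          # hypothetical grid size and band count
rng = np.random.default_rng(0)
f_t = rng.standard_normal((V, H, S))       # stand-in for f'_t, laid out as (V, H, S)

# Assumed pooling operators: R_v averages over (H, S), R_h averages over (V, S).
r_v = f_t.mean(axis=(1, 2))                # shape (V,)
r_h = f_t.mean(axis=(0, 2))                # shape (H,)

# Hypothetical, randomly initialized branch weights (kernel size 3).
m_v = attention_branch(r_v, rng.standard_normal(3), rng.standard_normal((V, V)), np.zeros(V))
m_h = attention_branch(r_h, rng.standard_normal(3), rng.standard_normal((H, H)), np.zeros(H))
print(m_v.shape, m_h.shape)                # (9,) (9,)
```

Each mask is non-negative and sums to 1, so it can be read as a distribution of emphasis over the $V$ rows or $H$ columns of the electrode grid.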

3.3.2 Spectral attention module

The spectral analysis of EEG signals has shown that different frequency bands play crucial roles in encoding emotional states. For instance, the delta (1–3 Hz) band is often associated with deep sleep and unconscious processes, the theta (4–7 Hz) band with meditation and memory retrieval, the alpha (8–13 Hz) band with relaxation and mental coordination, the beta (14–30 Hz) band with active thinking and focus, and the gamma (31–50 Hz) band with higher cognitive functions and information processing (Jenke et al., 2014). These distinct patterns of activity across frequency bands suggest that capturing spectral attention over these bands is essential for accurate emotion classification. Based on these findings, we propose a Spectral Attention (SA) module that learns a spectral attention mask emphasizing the frequency bands most significant for emotion classification. Unlike traditional statistical methods or Principal Component Analysis (PCA), which rely on fixed linear transformations to reduce dimensionality, the SA module highlights key spectral features by focusing on the differential energy spectrum of these bands when the brain is subjected to various emotional stimuli. This allows the model to capture discriminative EEG frequencies, thereby enhancing the performance of the emotion classifier.

Specifically, given the EEG feature representation $f_t'$, the SA module operates as follows. First, position pooling summarizes the spectral features over all grid positions of the layout:

$$R_s(f_t') = \frac{1}{V \times H} \sum_{k=1}^{V} \sum_{j=1}^{H} f'_{t,kj} \tag{5}$$

Next, the attention weight vector is computed:

$$M_s = \phi\Big(\mathrm{ReLU}\big(f_c(f_{1d}(R_s(f_t')))\big)\Big) \tag{6}$$

where $f_{1d}$ denotes the 1D convolution operation, $f_c$ the fully connected layer, ReLU the ReLU activation function, and $\phi$ the Softmax function. This process generates an $S$-dimensional vector that serves as the attention mask for the spectral features.
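Eqs. (5) and (6) can be checked with a short NumPy sketch. It assumes the feature map is stored as an array of shape $(V, H, S)$, so that $f'_{t,kj}$ is the $S$-dimensional spectral vector at grid cell $(k, j)$; the sizes, the kernel size of 3 and the random weights are hypothetical. The explicit double sum of Eq. (5) is compared against the equivalent vectorized mean.

```python
import numpy as np

def spectral_pool(f_t):
    """Eq. (5): average the S-dimensional spectral vector over all V x H grid cells."""
    V, H, S = f_t.shape
    r_s = np.zeros(S)
    for k in range(V):
        for j in range(H):
            r_s += f_t[k, j]              # f'_{t,kj}: spectral vector at cell (k, j)
    return r_s / (V * H)

def spectral_mask(r_s, kernel, w_fc, b_fc):
    """Eq. (6): M_s = softmax(ReLU(fc(f1d(R_s)))), with length-preserving padding."""
    pad = (kernel.shape[0] - 1) // 2
    padded = np.pad(r_s, pad)
    conv = np.array([padded[i:i + kernel.shape[0]] @ kernel for i in range(r_s.shape[0])])
    z = np.maximum(w_fc @ conv + b_fc, 0.0)   # fully connected layer + ReLU
    e = np.exp(z - z.max())                   # numerically stable softmax
    return e / e.sum()

rng = np.random.default_rng(1)
f_t = rng.standard_normal((9, 9, 5))          # hypothetical V = H = 9, S = 5 bands
r_s = spectral_pool(f_t)
assert np.allclose(r_s, f_t.mean(axis=(0, 1)))  # double sum matches the vectorized mean
m_s = spectral_mask(r_s, rng.standard_normal(3), rng.standard_normal((5, 5)), np.zeros(5))
print(m_s.shape)                              # (5,): one weight per frequency band
```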

3.3.3 3D attention map weighted local feature representation

After the attention masks ($M_v$, $M_h$, and $M_s$) are learned, they are composed into a 3D attention map that integrates attention across the horizontal (left-right), vertical (anterior-posterior), and spectral dimensions:

$$M = M_v \otimes M_h \otimes M_s \tag{7}$$

where $\otimes$ denotes the tensor (outer) product, so that $M$ is a $V \times H \times S$ tensor with entries $M_{kjl} = M_v(k)\,M_h(j)\,M_s(l)$. The 3D attention map $M$ captures salient spatial and spectral features by considering both the horizontal and vertical orientations of the brain as well as the most relevant frequency bands. As a result, the recalibrated features are enriched with region-specific and frequency-specific information.
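The outer product in Eq. (7) maps directly onto `numpy.einsum`. The small mask values below are hypothetical and chosen only so that each entry can be checked by hand.

```python
import numpy as np

def attention_map(m_v, m_h, m_s):
    """Eq. (7): M[k, j, l] = m_v[k] * m_h[j] * m_s[l], giving shape (V, H, S)."""
    return np.einsum("k,j,l->kjl", m_v, m_h, m_s)

# Hypothetical masks with V = 3, H = 2, S = 4; each sums to 1 like a softmax output.
m_v = np.array([0.2, 0.5, 0.3])
m_h = np.array([0.6, 0.4])
m_s = np.array([0.1, 0.2, 0.3, 0.4])
M = attention_map(m_v, m_h, m_s)
print(M.shape)     # (3, 2, 4)
print(M[1, 0, 3])  # approximately 0.5 * 0.6 * 0.4 = 0.12
print(M.sum())     # approximately 1.0, the product of the three mask sums
```

Since each factor sums to 1, the entries of $M$ also sum to 1, so every entry lies in $[0, 1]$.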

The attention map is then used to recalibrate the local feature representation as:

$$f_t^{\mathrm{rec}} = f_t' \times M \tag{8}$$

where $\times$ denotes element-wise multiplication. In this step, $f_t^{\mathrm{rec}}$ becomes an enhanced feature representation that integrates spatial attention along the horizontal and vertical brain axes with spectral attention over the frequency bands. This recalibration highlights the most informative regions and frequencies in the EEG data, i.e., the features most indicative of emotional states.

The recalibrated feature $f_t^{\mathrm{rec}}$ is then added to the original feature $f_t'$, generating an enhanced representation $f_t^{\mathrm{enh}}$ of the EEG signals:

$$f_t^{\mathrm{enh}} = f_t' + f_t^{\mathrm{rec}} \tag{9}$$

The enhanced feature $f_t^{\mathrm{enh}}$, which encapsulates the salient spatial and spectral information, is then flattened into a $(V \times H \times S)$-dimensional feature vector $f_t^{\mathrm{flat}}$, following the grid layout within each frequency band. For an EEG sample of $T$ seconds, the flattened outputs of the local feature learning module for all $T$ segments are assembled into a feature sequence $F' = \{f_1^{\mathrm{flat}}, f_2^{\mathrm{flat}}, \ldots, f_T^{\mathrm{flat}}\}$, which is then input into a Transformer encoder for temporal modeling.
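Eqs. (7) to (9) and the flattening step can be combined into a per-segment sketch that builds the sequence $F'$. The band-major flattening order (each band's $V \times H$ grid flattened in turn) is an assumption based on the phrase "following the grid layout within each frequency band"; the sizes, the stand-in segment features and the placeholder masks are hypothetical.

```python
import numpy as np

def attention_map(m_v, m_h, m_s):
    # Eq. (7): outer product of the three masks, shape (V, H, S).
    return np.einsum("k,j,l->kjl", m_v, m_h, m_s)

def local_feature_vector(f_t, m_v, m_h, m_s):
    """Recalibrate (Eq. 8), add the residual (Eq. 9), then flatten one segment."""
    M = attention_map(m_v, m_h, m_s)
    f_rec = f_t * M                      # Eq. (8): element-wise product
    f_enh = f_t + f_rec                  # Eq. (9): residual combination
    # Assumed band-major order: flatten the V x H grid of each band in turn.
    return f_enh.transpose(2, 0, 1).reshape(-1)

def normalized(x):
    return x / x.sum()

V, H, S, T = 9, 9, 5, 4                  # hypothetical grid, band and segment counts
rng = np.random.default_rng(3)
segments = rng.standard_normal((T, V, H, S))   # stand-ins for f'_1 ... f'_T
m_v, m_h, m_s = (normalized(rng.random(n)) for n in (V, H, S))  # placeholder masks

F = np.stack([local_feature_vector(seg, m_v, m_h, m_s) for seg in segments])
print(F.shape)  # (4, 405): one (V*H*S)-dimensional vector per segment
```

Because Eq. (9) adds $f_t'$ to $f_t' \times M$, it equals $f_t' \times (1 + M)$. With mask entries in $[0, 1]$, each feature is scaled by a factor between 1 and 2 rather than suppressed, so the residual path keeps the original signal intact.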

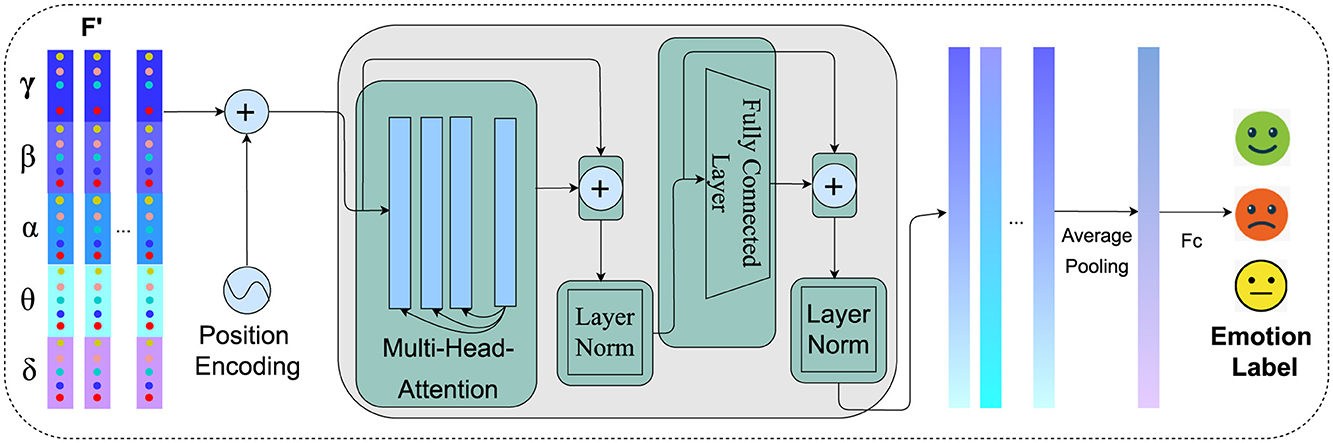

3.4 Temporal modeling

Studies have demonstrated that effectively learning the temporal dynamics of EEG is crucial for accurately interpreting neural responses (Tong et al., 2024; Ding and Chen, 2024). In our study, the feature vectors $f_t^{\mathrm{flat}}$ from the local feature learning module are fed into a Transformer encoder. Positional embeddings are added to the sequence to create the enhanced input vectors $X(t)$. Within each layer of the Transformer encoder, the enhanced vectors pass through the following steps. First, multi-head attention allows the model to focus on the discriminative parts of the sequence. The output of the attention is added to its input (residual connection) and normalized (Add & Norm). The result is then processed by a feedforward network, followed by another Add & Norm step, producing a series of hidden states that encapsulate the temporal and spatial-spectral relationships within the EEG data. These hidden states are average-pooled into a fixed-length vector, which is then fed into a multilayer perceptron (MLP) for the final emotion classification. The whole process is illustrated in Figure 4.

Figure 4. Temporal modeling of spatial-spectral EEG features using transformer encoder. The process integrates positional embeddings, multi-head attention, and feedforward networks to capture intricate temporal dynamics, resulting in enhanced feature representations for emotion recognition.
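The encoder pipeline in Figure 4 can be sketched in NumPy. This is a minimal sketch under stated assumptions, not the authors' configuration. It assumes post-norm layers (Add & Norm after each sublayer, as described above), a ReLU feedforward network, and layer normalization without learnable gain and bias. The model width `d`, the numbers of heads, layers and classes, and all weights are hypothetical, and a single linear layer stands in for the MLP.

```python
import numpy as np

rng = np.random.default_rng(4)

def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)     # numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def layer_norm(x, eps=1e-5):
    # Normalizes each time step's feature vector (learnable scale/shift omitted).
    mu = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mu) / np.sqrt(var + eps)

def multi_head_attention(x, p, n_heads):
    T, d = x.shape
    dh = d // n_heads
    def split(y):                                # (T, d) -> (n_heads, T, dh)
        return y.reshape(T, n_heads, dh).transpose(1, 0, 2)
    q, k, v = split(x @ p["wq"]), split(x @ p["wk"]), split(x @ p["wv"])
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(dh)          # (n_heads, T, T)
    heads = softmax(scores) @ v                               # (n_heads, T, dh)
    return heads.transpose(1, 0, 2).reshape(T, d) @ p["wo"]  # concatenate heads

def encoder_layer(x, p, n_heads):
    x = layer_norm(x + multi_head_attention(x, p, n_heads))  # attention, then Add & Norm
    ffn = np.maximum(x @ p["w1"] + p["b1"], 0.0) @ p["w2"] + p["b2"]
    return layer_norm(x + ffn)                                # feedforward, then Add & Norm

def init_layer(d, d_ff):
    s = 1.0 / np.sqrt(d)
    return {
        "wq": rng.standard_normal((d, d)) * s,
        "wk": rng.standard_normal((d, d)) * s,
        "wv": rng.standard_normal((d, d)) * s,
        "wo": rng.standard_normal((d, d)) * s,
        "w1": rng.standard_normal((d, d_ff)) * s,
        "b1": np.zeros(d_ff),
        "w2": rng.standard_normal((d_ff, d)) / np.sqrt(d_ff),
        "b2": np.zeros(d),
    }

T, d, n_heads, n_layers, n_classes = 4, 8, 2, 2, 3   # hypothetical sizes
x = rng.standard_normal((T, d))      # stand-in for X(t): features plus positional embeddings
h = x
for p in [init_layer(d, 16) for _ in range(n_layers)]:
    h = encoder_layer(h, p, n_heads)  # hidden states, shape (T, d)

pooled = h.mean(axis=0)              # average pooling over the T time steps
w_mlp = rng.standard_normal((d, n_classes)) * 0.1   # one linear layer standing in for the MLP
probs = softmax(pooled @ w_mlp)
print(h.shape, probs.shape)          # (4, 8) (3,)
```

Average pooling over time makes the classifier input a fixed-length `d`-dimensional vector regardless of the number of segments `T`.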

Before feeding the feature sequences into the Transformer model, positional embeddings are added to encapsulate the temporal information within the sequence. These embeddings are computed as follows:

$$PE_{(t,2i)} = \sin\left(\frac{t}{10000^{2i/d}}\right)$$

where $d$ denotes the embedding dimension.
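A minimal sketch of the positional embeddings, assuming the standard sinusoidal encoding of the original Transformer: sine on even dimensions and the matching cosine on odd dimensions, with wavelengths set by $10000^{2i/d}$. The embedding size `d`, the sequence length and the stand-in features are hypothetical.

```python
import numpy as np

def sinusoidal_pe(T, d):
    """Assumed standard encoding: PE[t, 2i] = sin(t / 10000**(2i/d)), PE[t, 2i+1] = cos(same angle)."""
    t = np.arange(T)[:, None]                 # segment positions, shape (T, 1)
    two_i = np.arange(0, d, 2)[None, :]       # even dimension indices 2i
    angle = t / np.power(10000.0, two_i / d)  # shape (T, ceil(d / 2))
    pe = np.zeros((T, d))
    pe[:, 0::2] = np.sin(angle)
    pe[:, 1::2] = np.cos(angle[:, : d // 2])  # drop the last angle when d is odd
    return pe

T, d = 4, 8
F = np.random.default_rng(5).standard_normal((T, d))   # stand-in for the feature sequence F'
X = F + sinusoidal_pe(T, d)                              # embeddings are added element-wise
print(sinusoidal_pe(T, d)[0])   # position 0 alternates 0 (sine) and 1 (cosine)
```

Adding rather than concatenating the embeddings keeps the input width equal to the feature width, so the encoder sees vectors of the same size as $f_t^{\mathrm{flat}}$.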
