Optimizing architectural spaces and performing 3D reconstruction are pivotal challenges in modern urban planning and building design (Wang et al., 2022). These tasks play a fundamental role in ensuring the efficient use of spatial resources, improving functionality, and minimizing costs, while also enabling dynamic reconfiguration to adapt to evolving demands (Wu et al., 2022). Beyond their direct benefits to building design, advances in these domains can significantly enhance energy efficiency and sustainability, making them critical for addressing global environmental goals. Additionally, 3D reconstruction facilitates accurate digital modeling of architectural structures, supporting detailed analysis, renovation, and automation (Liu et al., 2023). Despite their significance, current approaches to architectural space optimization face several key challenges. First, their ability to handle diverse and non-standard spatial configurations remains limited. Second, existing methods often lack adaptability to dynamic requirements or real-time adjustments. Third, high computational costs and the lack of transparency in complex models hinder practical applications (Wang et al., 2021).

Traditional solutions, such as rule-based and symbolic AI methods, have long dominated the field (Amice et al., 2022). These techniques codify expert knowledge into structured systems that optimize spatial layouts based on predefined criteria. While these approaches excel in ensuring compliance with architectural standards, they suffer from rigidity and a lack of scalability (Li et al., 2022). As spatial configurations grow more complex, symbolic methods require extensive manual adjustments, reducing their practicality for large-scale or rapidly evolving scenarios (Kästner et al., 2023). Machine learning and data-driven approaches introduced a new paradigm by leveraging statistical models to identify patterns in spatial data (Liu et al., 2020). Techniques such as clustering and regression improved the flexibility of layout optimization, while machine learning models like decision trees and support vector machines offered predictive capabilities based on historical data (Xie et al., 2020). However, these methods still heavily rely on high-quality labeled datasets, which are often scarce or expensive to obtain. Consequently, their generalization to unseen or unconventional layouts remains limited (Pan et al., 2022). To address these gaps, deep learning approaches such as convolutional neural networks (CNNs; Zheng et al., 2022) and generative adversarial networks (GANs; Liu et al., 2022) have been adopted for space optimization and 3D reconstruction. These models demonstrate significant potential in capturing complex spatial features and adapting to various tasks through pre-training (Beach et al., 2023). Despite these advancements, deep learning methods introduce new challenges, including high computational demands and limited interpretability. Their black-box nature restricts their application in domains requiring adherence to rigorous standards or transparency (Vieira et al., 2022).

To connect this work with recent advances in large language model (LLM) technology, we also review several recent studies and relate them to the proposed method. Chronis et al. (2024) proposed a robot task execution framework based on LLMs and scene graphs, demonstrating the strong capabilities of LLMs in scene understanding and dynamic task planning. Chugh et al. (2024) proposed dynamic path planning for autonomous robots based on dynamic graphs and breadth-first search, which provides an important reference for path planning optimization in dynamic architectural environments. In addition, Wang et al. (2023) used prompts generated with a large language model to guide robot gait task planning, highlighting the advantages of LLMs in complex task adaptability and semantic understanding.

Recognizing the limitations of these methods, this paper proposes a novel framework that leverages unsupervised learning, modular design principles, and adaptive spatial attention to address the key issues in architectural space optimization and 3D reconstruction. Unsupervised learning eliminates the need for labeled data, enabling the model to generalize across diverse scenarios. The modular design facilitates the integration of multiple optimization techniques, ensuring scalability and adaptability. Finally, the adaptive spatial attention mechanism dynamically focuses computational resources on the most relevant spatial features, reducing costs while improving accuracy.

The contributions of this work include:

• An adaptive spatial attention mechanism that dynamically prioritizes critical architectural regions, enhancing space utilization efficiency.

• A modular design, trained without labeled data, that supports adaptation to various contexts, ensuring high generalizability.

• Experimental results demonstrating superior performance in layout optimization and 3D reconstruction compared with state-of-the-art methods, along with significant reductions in computational cost.

2 Related work

2.1 Traditional space optimization techniques

Traditional approaches to space optimization in architectural planning often rely on manual methods or heuristic algorithms. Manual methods involve human experts who utilize architectural principles and spatial requirements to create optimal layouts (Zhang et al., 2021). While effective for simple projects, these methods become increasingly impractical as the complexity of architectural requirements grows. Heuristic algorithms, such as simulated annealing, genetic algorithms, and particle swarm optimization, have been used to automate parts of the design process (Atzori et al., 2016). These algorithms aim to find near-optimal solutions by iteratively refining the space configuration based on predefined objective functions. Although they can improve layout efficiency, heuristic methods still require careful tuning of parameters and a good understanding of the underlying problem, which can limit their adaptability to diverse and changing spatial requirements (Marcucci et al., 2022). Another drawback of traditional space optimization techniques is their limited ability to handle real-time or dynamic space modifications (Jin et al., 2024b). In scenarios where space usage evolves frequently, such as co-working spaces, hospitals, or smart homes, manual and heuristic approaches may struggle to adapt quickly enough to meet new requirements. Furthermore, these methods typically do not account for the spatial relationships and functional interactions between different regions, which can lead to suboptimal configurations in complex environments. The emergence of artificial intelligence (AI) techniques has introduced more sophisticated methods for addressing these limitations, laying the groundwork for the integration of machine learning and automated planning in architectural space optimization (Cauligi et al., 2020).

2.2 Supervised learning for space planning

In recent years, machine learning techniques, particularly supervised learning, have been applied to architectural space optimization. Supervised learning methods leverage large datasets of labeled examples to train models that can predict optimal space configurations based on input features such as building layouts, user preferences, and functional requirements. Techniques such as convolutional neural networks (CNNs) and recurrent neural networks (RNNs) have been used to process spatial data and generate layout proposals. These models learn spatial patterns from the training data and can be fine-tuned to meet specific objectives, such as maximizing natural light, improving accessibility, or minimizing energy consumption (Hu et al., 2023). However, supervised learning approaches have several limitations in the context of space optimization. The requirement for large amounts of labeled data can be a significant drawback, as gathering and annotating spatial datasets is time-consuming and expensive (Li et al., 2024). The models trained using supervised learning may not generalize well to new or unseen architectural scenarios, especially when the training data does not cover the full range of possible spatial configurations. As a result, supervised models may perform poorly in environments with highly variable or unconventional layout requirements. Furthermore, the reliance on labeled data makes it challenging for supervised methods to adapt in real time to evolving spatial needs, as new training data must be collected and labeled for the model to remain effective. These limitations have led researchers to explore unsupervised and semi-supervised learning techniques for more flexible and adaptive space optimization solutions (Jin et al., 2024a).

2.3 Unsupervised learning and self-organizing systems

Unsupervised learning approaches and self-organizing systems have gained attention in the field of architectural space optimization as a means to overcome the limitations associated with supervised learning. Unsupervised learning techniques do not require labeled data, making them suitable for scenarios where data collection and annotation are difficult. Methods such as clustering, dimensionality reduction, and generative models (Zhang et al., 2024) have been employed to learn underlying patterns in spatial data and generate optimal configurations. Clustering techniques, for instance, can be used to identify functional zones within a space based on user behavior or environmental factors, while dimensionality reduction can help visualize complex spatial relationships in a lower-dimensional space (Chang et al., 2023). Self-organizing systems, inspired by natural processes such as the growth of biological tissues or the behavior of ant colonies, offer another approach to space optimization. These systems utilize local rules or interactions between agents to achieve global spatial organization without centralized control. For example, agent-based modeling can simulate the behavior of occupants in a space to optimize room configurations based on predicted usage patterns. Similarly, self-organizing maps (SOMs) have been used to arrange spaces based on similarity criteria, enabling adaptive and emergent design solutions (Hewawasam et al., 2022). Despite their potential, unsupervised learning and self-organizing systems still face challenges in architectural applications. The quality of the generated solutions heavily depends on the choice of algorithm and parameters, which may require domain-specific knowledge. The interpretability of results can also be a concern, as these models do not explicitly learn to optimize predefined objectives as supervised methods do (Spahn et al., 2021). Nonetheless, these approaches offer significant advantages in terms of flexibility and adaptability, making them promising candidates for real-time and dynamic space optimization tasks (Jin et al., 2023).

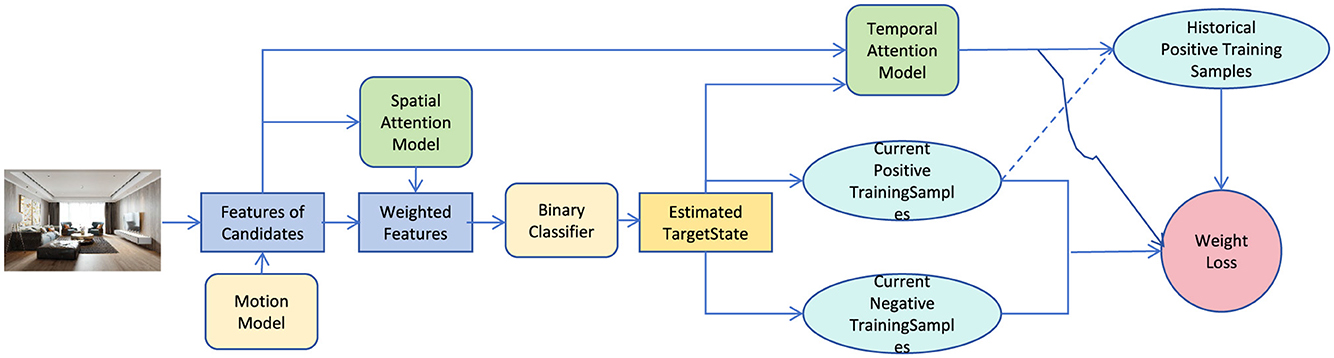

3 Methodology

3.1 Overview

The proposed architectural planning robot framework leverages unsupervised learning to optimize indoor spatial layouts, focusing on dynamic space utilization and adaptation to evolving requirements. The system integrates a Convolutional Neural Network (CNN) that extracts features from visual inputs, such as floor plans, to capture spatial features and functional zones. Using neural robotics technology, the robot autonomously adjusts the architectural design in real time based on user interactions and environmental changes, allowing for continuous space optimization. The unsupervised learning approach eliminates the need for labeled training data, making it suitable for a variety of planning scenarios in which spatial requirements change frequently. The framework incorporates multiple modules: feature extraction through CNNs, spatial reasoning based on dynamic attention mechanisms, and a feedback-driven design refinement process (as shown in Figure 1). Sub-section 3.2 formalizes the optimization problem, sub-sections 3.3 and 3.4 elaborate on the feature extraction mechanism and its multi-scale spatial attention, later sub-sections discuss the design refinement and optimization strategies, and sub-section 3.6 covers the incorporation of real-time user and environmental feedback for adaptive planning.

Figure 1. The proposed overall framework. Through the spatial attention model, binary classifier and temporal attention model, the current positive and negative training samples are generated, and the weights are optimized through historical samples.

This paper introduces unsupervised learning to substantially reduce the need for large-scale annotated data. Traditional deep learning methods rely on high-quality annotated data for training, which is often costly and time-consuming to obtain in complex scenarios. The proposed method automatically identifies and optimizes the spatial layout by learning latent spatial patterns from unlabeled data, which both improves the adaptability of the model and reduces its data requirements. In addition, the modular design allows the system to flexibly allocate computing resources among sub-modules, avoiding the waste incurred when every part of the scene is processed with equal effort, and thus improving computational efficiency. The adaptive spatial attention mechanism further reduces redundant computation by focusing on key areas. To address the problem of insufficient model interpretability, the modular and attention-based design also provides greater transparency. The modular design clarifies the role of each sub-module in the overall optimization; for example, the spatial attention mechanism highlights the key areas that need priority optimization, making the logic behind the model output easier to interpret. The experiments further illustrate the function of these modules through visualized attention distributions and performance analysis, providing intuitive support for understanding model behavior.

3.2 Preliminaries

The problem of architectural space optimization can be formulated as a dynamic optimization task, where the goal is to maximize the utility of an indoor space by adjusting its layout in response to varying constraints and objectives. Let the architectural space be represented by a set of spatial regions $S = \{s_1, s_2, \dots, s_n\}$, where each region $s_i$ is characterized by its geometric properties (dimensions, shape), functional requirements, and adjacency relationships with other regions. The layout configuration at a given time can be defined by a set of variables $X = \{x_1, x_2, \dots, x_n\}$, where each $x_i$ represents the position and orientation of region $s_i$.

The optimization task aims to find the optimal configuration X* that maximizes a utility function U(X) subject to a set of constraints. The utility function is defined as:

$$U(X) = \sum_{i=1}^{n} w_i \, f_i(x_i), \tag{1}$$

where $f_i(x_i)$ denotes the utility of region $s_i$ based on its configuration $x_i$, and $w_i$ is a weighting factor reflecting the importance of each region. The utility function can incorporate various criteria such as accessibility, natural lighting, privacy, and functionality, which are crucial for achieving an optimal layout.
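Eq. (1) is a plain weighted sum over regions. The following minimal Python sketch evaluates it; the $(x, y, \theta)$ encoding of $x_i$ and the two scoring functions `near_window` and `axis_aligned` are hypothetical stand-ins for criteria such as lighting or functionality, not functions from this work.

```python
from typing import Callable, Sequence, Tuple

# Hypothetical configuration of one region: (x, y, theta) = position and orientation.
Config = Tuple[float, float, float]


def layout_utility(configs: Sequence[Config],
                   utilities: Sequence[Callable[[Config], float]],
                   weights: Sequence[float]) -> float:
    """Eq. (1): U(X) = sum_i w_i * f_i(x_i)."""
    if not (len(configs) == len(utilities) == len(weights)):
        raise ValueError("configs, utilities and weights must have the same length")
    return sum(w * f(x) for x, f, w in zip(configs, utilities, weights))


# Hypothetical per-region criteria (illustrative only).
def near_window(x: Config) -> float:
    """Lighting proxy: higher when the region is close to a window at x = 0."""
    return 1.0 / (1.0 + abs(x[0]))


def axis_aligned(x: Config) -> float:
    """Layout proxy: full score for theta = 0, half score otherwise."""
    return 1.0 if x[2] == 0.0 else 0.5


X = [(0.0, 2.0, 0.0), (3.0, 1.0, 1.57)]
U = layout_utility(X, [near_window, axis_aligned], weights=[2.0, 1.0])
print(U)  # 2.0 * 1.0 + 1.0 * 0.5 = 2.5
```

Keeping each $f_i$ as a separate callable mirrors the per-region structure of Eq. (1): changing a criterion or a weight $w_i$ does not touch the rest of the sum.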

The constraints in this optimization problem can be divided into geometric constraints and functional constraints. Geometric constraints ensure that regions do not overlap and maintain appropriate distances, while functional constraints ensure compliance with spatial requirements, such as minimum room size or specific adjacency relationships. These constraints can be mathematically expressed as:

$$g_j(X) \le 0, \quad j = 1, \dots, m, \tag{2}$$

where $g_j(X)$ represents a constraint function that the layout must satisfy.
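One way to read Eq. (2) concretely is sketched below, assuming (hypothetically) that each region is an axis-aligned rectangle. The pairwise overlap area serves as a geometric constraint (it must be $\le 0$, that is, zero) and `min_area - width * height` serves as a functional minimum-size constraint; both the representation and the constraint choices are illustrative assumptions.

```python
from itertools import combinations
from typing import List, Tuple

# Hypothetical region geometry: axis-aligned rectangle (x, y, width, height).
Rect = Tuple[float, float, float, float]


def overlap_area(a: Rect, b: Rect) -> float:
    """Intersection area of two axis-aligned rectangles (0.0 if they are disjoint)."""
    dx = min(a[0] + a[2], b[0] + b[2]) - max(a[0], b[0])
    dy = min(a[1] + a[3], b[1] + b[3]) - max(a[1], b[1])
    return max(dx, 0.0) * max(dy, 0.0)


def constraint_values(rects: List[Rect], min_area: float) -> List[float]:
    """Collect every g_j(X); the layout is feasible iff all values are <= 0."""
    g = [overlap_area(a, b) for a, b in combinations(rects, 2)]  # geometric: no overlap
    g += [min_area - w * h for (_, _, w, h) in rects]            # functional: minimum room size
    return g


def is_feasible(rects: List[Rect], min_area: float) -> bool:
    return all(v <= 0.0 for v in constraint_values(rects, min_area))


rooms = [(0.0, 0.0, 4.0, 3.0), (4.0, 0.0, 3.0, 3.0)]  # two rooms sharing a wall
print(is_feasible(rooms, min_area=8.0))                                # True
print(is_feasible([(0.0, 0.0, 4.0, 3.0), (3.0, 0.0, 3.0, 3.0)], 8.0))  # False: 3 square units overlap
```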

To solve this optimization problem in a dynamic environment where spatial requirements may change, the framework employs a multi-objective optimization approach. The objectives can be adjusted in real-time based on user feedback and environmental conditions. The optimization is thus represented as:

$$\max_{X} \; U(X) \quad \text{subject to} \quad g_j(X) \le 0, \; j = 1, \dots, m. \tag{3}$$

To model the changing spatial requirements, we introduce a time-dependent component $X(t)$ that allows the configuration to evolve over time. The time evolution of the layout can be represented using a differential equation:

$$\frac{dX(t)}{dt} = F\big(X(t), t\big), \tag{4}$$

where $F(X(t), t)$ denotes the update function that adjusts the layout based on the current state and external influences.
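The update function $F$ in Eq. (4) is not specified further. The sketch below integrates the equation with the explicit Euler method under a hypothetical $F$ that relaxes each coordinate toward a target layout, as might be set by user feedback; the relaxation form and the `rate` parameter are assumptions for illustration.

```python
from typing import Callable, List

Vector = List[float]


def euler_evolve(x0: Vector, F: Callable[[Vector, float], Vector],
                 t0: float, t1: float, steps: int) -> Vector:
    """Integrate dX/dt = F(X, t) from t0 to t1 with the explicit Euler method."""
    dt = (t1 - t0) / steps
    x, t = list(x0), t0
    for _ in range(steps):
        dx = F(x, t)
        x = [xi + dt * di for xi, di in zip(x, dx)]
        t += dt
    return x


def make_relaxation(target: Vector, rate: float) -> Callable[[Vector, float], Vector]:
    """Hypothetical F: pull each coordinate toward a target layout."""
    def F(x: Vector, t: float) -> Vector:
        return [rate * (g - xi) for g, xi in zip(target, x)]
    return F


x_final = euler_evolve([0.0, 0.0], make_relaxation([5.0, 2.0], rate=1.0),
                       t0=0.0, t1=10.0, steps=1000)
print(x_final)  # close to [5.0, 2.0]
```

Explicit Euler is the simplest choice; it stays stable here because `rate * dt` is small, and a stiffer $F$ would call for smaller steps or an implicit scheme.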

The unsupervised learning component is employed to learn spatial patterns from unlabeled data. Given a set of spatial configurations over time, the learning objective is to capture the underlying distribution P(X) that characterizes optimal spatial arrangements. This can be achieved using clustering techniques or generative models, such as autoencoders, which learn to represent the data in a lower-dimensional space while preserving the essential spatial relationships.
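As an illustration of the clustering option mentioned above, here is a minimal k-means (Lloyd's algorithm) sketch in plain Python. The choice of k-means, and the idea of describing each observed layout by a small feature vector such as a region's centroid coordinates, are assumptions; the text does not state which clustering method or features are used.

```python
import math
import random
from typing import List, Sequence, Tuple

Point = Tuple[float, ...]


def kmeans(points: Sequence[Point], k: int, iters: int = 100, seed: int = 0):
    """Plain Lloyd's k-means: returns (centroids, labels)."""
    rng = random.Random(seed)
    centroids = [list(p) for p in rng.sample(list(points), k)]
    labels: List[int] = []
    for _ in range(iters):
        # Assignment step: each layout descriptor joins its nearest centroid.
        labels = [min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points]
        # Update step: move each centroid to the mean of its members.
        new_centroids = []
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                new_centroids.append([sum(col) / len(members) for col in zip(*members)])
            else:
                new_centroids.append(centroids[c])  # keep an empty cluster where it was
        if new_centroids == centroids:
            break
        centroids = new_centroids
    return centroids, labels


# Hypothetical descriptors: centroid coordinates of one workspace region in six observed layouts.
descriptors = [(0.0, 0.0), (0.1, 0.0), (0.0, 0.1), (10.0, 10.0), (10.1, 10.0), (10.0, 10.1)]
centroids, labels = kmeans(descriptors, k=2)
print(labels)
```

Each resulting centroid can be read as a prototypical arrangement; a generative model such as an autoencoder would replace these discrete prototypes with a continuous latent space.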

The framework also incorporates a feedback mechanism that continuously refines the learned spatial patterns based on user interactions and real-time sensor data. The feedback process can be formalized as:

$$X_{\text{new}} = X_{\text{prev}} + \alpha \, \nabla U(X_{\text{prev}}), \tag{5}$$

where $X_{\text{prev}}$ is the previous configuration, $\alpha$ is a learning rate, and $\nabla U(X_{\text{prev}})$ is the gradient of the utility function with respect to the configuration. This feedback loop enables the system to adapt to changes in spatial requirements and improve the layout iteratively.
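Eq. (5) is a single gradient-ascent step on $U$. The sketch below assumes a hypothetical quadratic utility that peaks at a user-preferred position and estimates $\nabla U$ by central finite differences, so it also works for a black-box utility. Like Eq. (5) itself, it does not enforce the constraints $g_j(X) \le 0$.

```python
from typing import Callable, List

Vector = List[float]


def numerical_grad(U: Callable[[Vector], float], x: Vector, h: float = 1e-6) -> Vector:
    """Central finite-difference estimate of the gradient of U at x."""
    grad = []
    for i in range(len(x)):
        x_plus, x_minus = list(x), list(x)
        x_plus[i] += h
        x_minus[i] -= h
        grad.append((U(x_plus) - U(x_minus)) / (2.0 * h))
    return grad


def feedback_step(U: Callable[[Vector], float], x_prev: Vector, alpha: float) -> Vector:
    """Eq. (5): X_new = X_prev + alpha * grad U(X_prev), i.e. one gradient-ascent step."""
    return [xi + alpha * gi for xi, gi in zip(x_prev, numerical_grad(U, x_prev))]


def utility(x: Vector) -> float:
    """Hypothetical utility peaking at a user-preferred position (3, -1)."""
    return -(x[0] - 3.0) ** 2 - (x[1] + 1.0) ** 2


x = [0.0, 0.0]
for _ in range(100):
    x = feedback_step(utility, x, alpha=0.1)
print(x)  # approaches [3.0, -1.0]
```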

3.3 Feature extraction with spatial attention

3.3.1 Adaptive visibility mapping

To enhance the robustness of spatial feature extraction, we introduce an Adaptive Visibility Mapping mechanism. This method aims to address occlusion and distortion issues that arise in candidate state representations during real-time architectural space optimization. By leveraging a multi-stage convolutional architecture, the method ensures precise visibility estimation and adaptability to dynamic spatial configurations.

The visibility map $V(y_l^m) \in \mathbb{R}^{A \times B}$ for a candidate state $y_l^m$ is generated through a hierarchical process designed to capture fine-grained spatial features. The visibility estimation is computed as:

$$V(y_l^m) = g_{\text{vis}}\big(\Phi_{\text{roi}}(y_l^m); W_{\text{vis}}\big), \tag{6}$$

where $\Phi_{\text{roi}}(y_l^m)$ denotes the region-of-interest (ROI) feature map of the candidate state, $g_{\text{vis}}$ is a visibility function implemented as a cascade of convolutional layers with ReLU activation and batch normalization, and the parameter set $W_{\text{vis}}$ contains the weights and biases of these layers. This setup allows $V(y_l^m)$ to emphasize regions with high visibility while suppressing noisy or occluded areas.
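A minimal single-channel sketch of the cascade in Eq. (6) follows. Each block applies a zero-padded 3×3 convolution, a normalization step, and ReLU; the list `layers` of (kernel, bias) pairs plays the role of $W_{\text{vis}}$. As a simplifying assumption, batch normalization is replaced by standardizing the single map (no batch statistics, no learned scale or shift), and the kernel shown is a fixed example rather than a learned weight.

```python
import math
from typing import List, Sequence, Tuple

Grid = List[List[float]]
Kernel = Sequence[Sequence[float]]


def conv3x3(M: Grid, K: Kernel, bias: float = 0.0) -> Grid:
    """'Same' 3x3 convolution with zero padding (cross-correlation, as in CNN libraries)."""
    rows, cols = len(M), len(M[0])
    out = [[bias] * cols for _ in range(rows)]
    for i in range(rows):
        for j in range(cols):
            for di in (-1, 0, 1):
                for dj in (-1, 0, 1):
                    ii, jj = i + di, j + dj
                    if 0 <= ii < rows and 0 <= jj < cols:
                        out[i][j] += K[di + 1][dj + 1] * M[ii][jj]
    return out


def standardize(M: Grid, eps: float = 1e-5) -> Grid:
    """Simplified stand-in for batch normalization: zero mean, unit variance over one map."""
    vals = [v for row in M for v in row]
    mean = sum(vals) / len(vals)
    var = sum((v - mean) ** 2 for v in vals) / len(vals)
    return [[(v - mean) / math.sqrt(var + eps) for v in row] for row in M]


def relu(M: Grid) -> Grid:
    return [[max(v, 0.0) for v in row] for row in M]


def g_vis(phi: Grid, layers: Sequence[Tuple[Kernel, float]]) -> Grid:
    """Cascade of (conv -> normalization -> ReLU) blocks; `layers` acts as W_vis."""
    V = phi
    for K, b in layers:
        V = relu(standardize(conv3x3(V, K, b)))
    return V


identity = [[0.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 0.0]]
phi_roi = [[1.0, 2.0], [3.0, 4.0]]  # hypothetical single-channel ROI feature map
V = g_vis(phi_roi, layers=[(identity, 0.0)])
print(V)  # below-mean responses are zeroed by ReLU
```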

To account for the inherent spatial correlations in architectural layouts, we include a spatial regularization term. This term ensures that abrupt variations in visibility between neighboring pixels are minimized, leading to smoother and more coherent visibility maps:

$$L_{\text{reg}}(V) = \lambda \sum_{i,j} \Big[ (V_{i+1,j} - V_{i,j})^2 + (V_{i,j+1} - V_{i,j})^2 \Big], \tag{7}$$

where $\lambda$ is a regularization coefficient that balances the trade-off between smoothness and feature fidelity. The summation iterates over all spatial locations $(i, j)$ in the visibility map $V$.
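The regularizer in Eq. (7) can be computed directly. In this sketch, terms whose neighbour $(i+1, j)$ or $(i, j+1)$ falls outside the map are skipped; this border handling is an assumption, since the equation does not state it.

```python
from typing import List

Grid = List[List[float]]


def smoothness_loss(V: Grid, lam: float) -> float:
    """Eq. (7): lam * sum of squared differences to the lower and right neighbours.
    Terms whose neighbour falls outside the map are skipped."""
    rows, cols = len(V), len(V[0])
    total = 0.0
    for i in range(rows):
        for j in range(cols):
            if i + 1 < rows:
                total += (V[i + 1][j] - V[i][j]) ** 2
            if j + 1 < cols:
                total += (V[i][j + 1] - V[i][j]) ** 2
    return lam * total


print(smoothness_loss([[0.0, 1.0], [1.0, 0.0]], lam=0.5))  # checkerboard: 0.5 * 4 = 2.0
print(smoothness_loss([[0.0, 0.0], [1.0, 1.0]], lam=0.5))  # one horizontal edge: 0.5 * 2 = 1.0
```

The checkerboard is penalized twice as heavily as a single straight edge, which is exactly the preference for coherent visibility regions that the term is meant to encode.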

Furthermore, the adaptive aspect of the visibility mapping is achieved through a multi-resolution refinement strategy. Initial visibility maps are generated at a coarse resolution and iteratively refined to higher resolutions using a learned refinement network:

$$V^{(k+1)}(y_l^m) = V^{(k)}(y_l^m) + h_{\text{ref}}\big(V^{(k)}(y_l^m); W_{\text{ref}}\big), \tag{8}$$

where $V^{(k)}$ denotes the visibility map at resolution level $k$, and $h_{\text{ref}}$ is a refinement function parameterized by $W_{\text{ref}}$. This iterative process ensures that details missed in the initial estimation are progressively captured.
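Eq. (8) writes the refinement as a residual update, while the surrounding text says that each level has a higher resolution. The sketch below therefore assumes (this is not stated in the equation) that $V^{(k)}$ is first up-sampled 2× by nearest-neighbour repetition and that the residual $h_{\text{ref}}$ is then computed on the up-sampled map. `clamp_residual` is a hypothetical stand-in for the learned refinement network.

```python
from typing import Callable, List

Grid = List[List[float]]


def upsample2x(V: Grid) -> Grid:
    """Nearest-neighbour 2x up-sampling (assumed resampling step between levels)."""
    out: Grid = []
    for row in V:
        wide = [v for v in row for _ in range(2)]
        out.append(wide)
        out.append(list(wide))
    return out


def refine(V0: Grid, h_ref: Callable[[Grid], Grid], levels: int) -> Grid:
    """Coarse-to-fine residual refinement: V(k+1) = up(V(k)) + h_ref(up(V(k)))."""
    V = V0
    for _ in range(levels):
        U = upsample2x(V)
        R = h_ref(U)
        V = [[u + r for u, r in zip(u_row, r_row)] for u_row, r_row in zip(U, R)]
    return V


def clamp_residual(U: Grid) -> Grid:
    """Hypothetical stand-in for the learned h_ref: a correction that pulls values into [0, 1]."""
    return [[min(max(u, 0.0), 1.0) - u for u in row] for row in U]


V0 = [[1.4, 0.3], [-0.2, 0.8]]  # coarse 2x2 visibility estimate
V1 = refine(V0, clamp_residual, levels=1)
print(len(V1), len(V1[0]))      # 4 4
```

Predicting a residual rather than a full map means a refinement network that outputs zeros leaves the coarse estimate unchanged, which keeps each level's correction small and easy to learn.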

To further enhance the interpretability of the visibility maps, a confidence score $c(y_l^m)$ is computed for each candidate state. The score is derived by aggregating the visibility values within the region of interest:

$$c(y_l^m) = \frac{1}{A \times B} \sum_{i=1}^{A} \sum_{j=1}^{B} V_{i,j}(y_l^m). \tag{9}$$

This confidence score is used as an auxiliary signal in downstream tasks such as classification and spatial reasoning, enabling the model to prioritize candidate states with higher visibility.
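Eq. (9) is the mean of the visibility map. The sketch below computes it and uses it to rank two hypothetical candidate states, mirroring how the score is used to prioritize candidates with higher visibility.

```python
from typing import Dict, List

Grid = List[List[float]]


def confidence(V: Grid) -> float:
    """Eq. (9): mean visibility over the A x B map."""
    A, B = len(V), len(V[0])
    return sum(sum(row) for row in V) / (A * B)


# Hypothetical visibility maps for two candidate states.
candidates: Dict[str, Grid] = {
    "cand_a": [[1.0, 1.0], [0.0, 0.0]],  # half of the region occluded
    "cand_b": [[1.0, 1.0], [1.0, 0.0]],  # one quarter occluded
}
ranked = sorted(candidates, key=lambda name: confidence(candidates[name]), reverse=True)
print(ranked)  # ['cand_b', 'cand_a']
```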

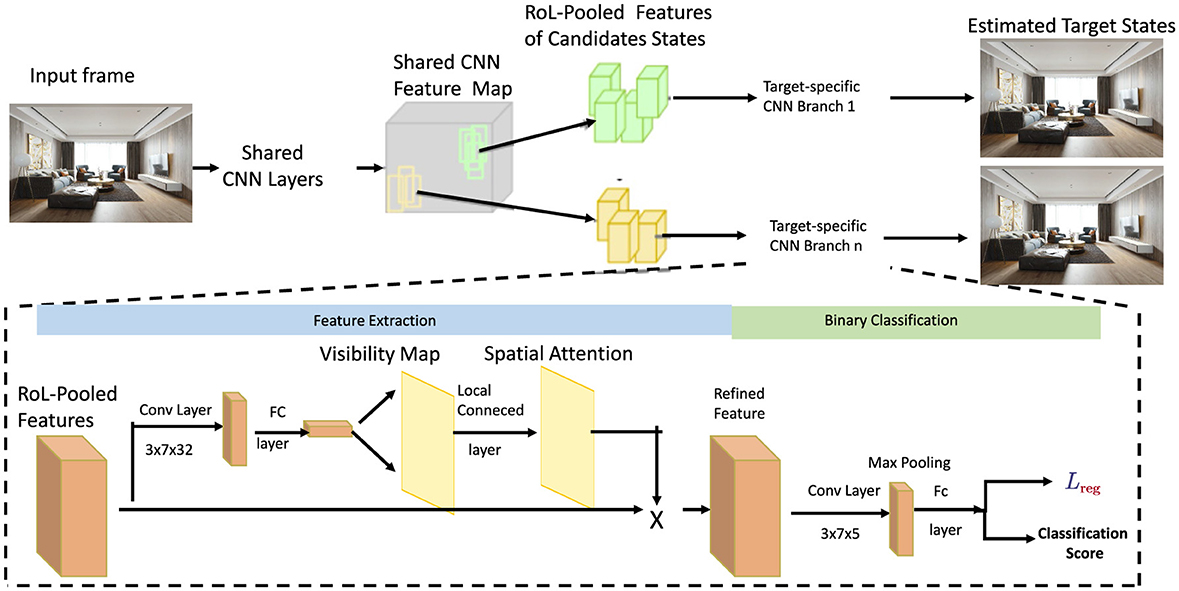

3.4 Multi-Scale Spatial Attention integration

Building upon the visibility maps, we introduce a Multi-Scale Spatial Attention (MSSA) mechanism designed to capture and emphasize significant spatial regions across multiple resolutions. This approach enhances the feature representation by integrating information from various spatial scales, ensuring that both macro and micro architectural elements are effectively captured (as shown in Figure 2).

Figure 2. Framework diagram of feature extraction with spatial attention. The candidate state features are extracted from the shared CNN layer, the target state is estimated by calculating the target-specific CNN branch, and visibility and spatial attention are processed by the feature extraction module, finally achieving binary classification and loss optimization.

The attention map $A(y_l^m)$ for a candidate state $y_l^m$ is generated by aggregating multi-scale feature maps:

$$A(y_l^m) = \sum_{s=1}^{S} w_s \, h_{\text{att},s}\big(V_s(y_l^m); W_{\text{att},s}\big), \tag{10}$$

where $w_s$ are learnable weights assigned to each scale $s$, $S$ denotes the number of scales, and $h_{\text{att},s}$ is a scale-specific function parameterized by $W_{\text{att},s}$. The use of learnable weights $w_s$ allows the model to dynamically prioritize the scales that are most relevant to the current architectural layout.
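A sketch of the aggregation in Eq. (10) follows. It assumes that all scale-specific maps have already been brought to a common resolution (for example, the up-sampled maps of Eq. (12)), treats the weights $w_s$ as plain numbers rather than learned parameters, and uses a hypothetical element-wise sigmoid and the identity as stand-ins for $h_{\text{att},s}$.

```python
import math
from typing import Callable, List, Sequence

Grid = List[List[float]]


def sigmoid_map(M: Grid) -> Grid:
    """Hypothetical h_att,s: element-wise logistic sigmoid."""
    return [[1.0 / (1.0 + math.exp(-v)) for v in row] for row in M]


def identity_map(M: Grid) -> Grid:
    """Hypothetical h_att,s: pass the map through unchanged."""
    return [list(row) for row in M]


def multiscale_attention(maps: Sequence[Grid], weights: Sequence[float],
                         h_att: Sequence[Callable[[Grid], Grid]]) -> Grid:
    """Eq. (10): A = sum_s w_s * h_att,s(V_s); all maps must share one H x W shape."""
    if not (len(maps) == len(weights) == len(h_att)):
        raise ValueError("need one map, one weight and one function per scale")
    rows, cols = len(maps[0]), len(maps[0][0])
    A = [[0.0] * cols for _ in range(rows)]
    for V_s, w_s, h in zip(maps, weights, h_att):
        H = h(V_s)
        for i in range(rows):
            for j in range(cols):
                A[i][j] += w_s * H[i][j]
    return A


scale_maps = [[[0.0, 0.0]], [[1.0, 3.0]]]  # two 1 x 2 maps at a common resolution
A = multiscale_attention(scale_maps, weights=[0.5, 0.5], h_att=[sigmoid_map, identity_map])
print(A)  # [[0.75, 1.75]]
```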

To generate the scale-specific feature map $V_s(y_l^m)$, the input feature map is processed through a sequence of down-sampling and up-sampling operations. For a given scale $s$, the feature map is obtained as:

$$V_s(y_l^m) = f_{\text{down},s}\big(\Phi_{\text{roi}}(y_l^m); W_{\text{down},s}\big), \tag{11}$$

where $f_{\text{down},s}$ is a convolutional down-sampling operation parameterized by $W_{\text{down},s}$. The feature map is then up-sampled back to the original resolution using:

$$V_s^{\text{up}}(y_l^m) = f_{\text{up},s}\big(V_s(y_l^m); W_{\text{up},s}\big), \tag{12}$$

where $f_{\text{up},s}$ is an up-sampling operation parameterized by $W_{\text{up},s}$. These operations ensure that spatial information at different scales is uniformly represented in the attention map computation.
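The down-sampling and up-sampling pair of Eqs. (11) and (12) can be sketched with fixed operators: 2×2 average pooling in place of the learned strided convolution $f_{\text{down},s}$ and nearest-neighbour repetition in place of $f_{\text{up},s}$. Both substitutions, and the assumption that scale $s$ corresponds to $s-1$ halvings, are illustrative; the input side lengths must be divisible by $2^{S-1}$.

```python
from typing import List

Grid = List[List[float]]


def avg_pool2(M: Grid) -> Grid:
    """2x2 average pooling (stand-in for a learned strided convolution f_down,s)."""
    return [[(M[i][j] + M[i][j + 1] + M[i + 1][j] + M[i + 1][j + 1]) / 4.0
             for j in range(0, len(M[0]) - 1, 2)]
            for i in range(0, len(M) - 1, 2)]


def nearest_up2(M: Grid) -> Grid:
    """Nearest-neighbour 2x up-sampling (stand-in for f_up,s)."""
    out: Grid = []
    for row in M:
        wide = [v for v in row for _ in range(2)]
        out.extend([wide, list(wide)])
    return out


def scale_maps(phi: Grid, num_scales: int) -> List[Grid]:
    """Return V_s^up for s = 1..S: down-sample s-1 times, then up-sample back."""
    result = []
    for s in range(num_scales):
        V = phi
        for _ in range(s):
            V = avg_pool2(V)
        for _ in range(s):
            V = nearest_up2(V)
        result.append(V)
    return result


phi = [[4.0, 0.0, 0.0, 0.0]] + [[0.0] * 4 for _ in range(3)]  # hypothetical 4x4 ROI map
maps = scale_maps(phi, num_scales=3)
for m in maps:
    print(m)
```

Coarser scales spread the single strong response over larger blocks, which is how the coarse maps capture macro-level structure while the finest map keeps local detail.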

To combine the scale-specific maps, a normalization step is applied to maintain numerical stability:

$$\hat{A}(y_l^m) = \frac{A(y_l^m)}{\sum_{i,j} A_{i,j}(y_l^m) + \epsilon}, \tag{13}$$

where $\epsilon$ is a small constant to prevent division by zero. The normalized attention map $\hat{A}(y_l^m)$ ensures that the attention weights are bounded and interpretable.
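Eq. (13) divides the map by its total mass. In the minimal sketch below, note that the entries are bounded in $[0, 1]$ and sum to just under one only when $A$ is non-negative, and that $\epsilon$ keeps an all-zero map from causing a division by zero.

```python
from typing import List

Grid = List[List[float]]


def normalize_attention(A: Grid, eps: float = 1e-8) -> Grid:
    """Eq. (13): divide every entry by the total attention mass plus eps."""
    total = sum(sum(row) for row in A)
    return [[a / (total + eps) for a in row] for row in A]


A = [[1.0, 3.0], [0.0, 4.0]]
A_hat = normalize_attention(A)
print(A_hat)                       # approximately [[0.125, 0.375], [0.0, 0.5]]
print(sum(sum(r) for r in A_hat))  # slightly below 1.0 because of eps
print(normalize_attention([[0.0, 0.0]]))  # [[0.0, 0.0]]: eps avoids division by zero
```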

To enhance feature representation further, the computed attention map is applied to the original feature map using an element-wise multiplication:

$$\Phi_{\text{att}}(y_l^m) = \Phi_{\text{roi}}(y_l^m) \odot \hat{A}(y_l^m), \tag{14}$$

where $\odot$ denotes the element-wise (Hadamard) product. This operation amplifies the features in regions of high attention while suppressing less relevant regions, improving the quality of extracted features.
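Eq. (14) is a plain element-wise product. The sketch below assumes that $\Phi_{\text{roi}}$ has several channels of size $H \times W$ and that the single spatial map $\hat{A}$ is shared across all channels; this channel layout is an assumption, as the text does not give tensor shapes.

```python
from typing import List

Grid = List[List[float]]


def apply_attention(phi: List[Grid], A_hat: Grid) -> List[Grid]:
    """Eq. (14): multiply every channel of the ROI feature map element-wise by A_hat."""
    for channel in phi:
        if len(channel) != len(A_hat) or any(len(r) != len(a) for r, a in zip(channel, A_hat)):
            raise ValueError("each channel must have the same H x W shape as the attention map")
    return [[[f * a for f, a in zip(f_row, a_row)] for f_row, a_row in zip(channel, A_hat)]
            for channel in phi]


phi_roi = [[[1.0, 2.0], [3.0, 4.0]],       # channel 0
           [[10.0, 20.0], [30.0, 40.0]]]   # channel 1
A_hat = [[0.5, 0.0], [0.0, 1.0]]
print(apply_attention(phi_roi, A_hat))
# [[[0.5, 0.0], [0.0, 4.0]], [[5.0, 0.0], [0.0, 40.0]]]
```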

To adapt dynamically to varying architectural layouts, a feedback mechanism is integrated. The feedback adjusts the attention weights $w_s$ based on the classification error of the downstream task:

$$w_s^{\text{new}} = w_s^{\text{current}} - \eta \, \frac{\partial L_{\text{cls}}}{\partial w_s}, \tag{15}$$

where $\eta$ is the learning rate, and $L_{\text{cls}}$ is the classification loss. This adjustment ensures that the attention mechanism aligns with the broader objectives of the spatial optimization framework.
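The form of $L_{\text{cls}}$, and the way the weights $w_s$ enter the classifier, are not given here. The sketch below assumes a hypothetical toy model in which the classifier score is $z = \sum_s w_s a_s$, where $a_s$ summarizes the evidence from scale $s$, with a binary cross-entropy loss. For that model $\partial L_{\text{cls}} / \partial w_s = (\sigma(z) - y)\,a_s$, and Eq. (15) becomes the update below.

```python
import math
from typing import List, Sequence


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def bce_loss(w: Sequence[float], a: Sequence[float], y: int) -> float:
    """Toy L_cls: binary cross-entropy of the score z = sum_s w_s * a_s."""
    p = sigmoid(sum(ws * a_s for ws, a_s in zip(w, a)))
    return -(y * math.log(p) + (1 - y) * math.log(1.0 - p))


def update_scale_weights(w: Sequence[float], a: Sequence[float], y: int,
                         eta: float) -> List[float]:
    """Eq. (15): w_s <- w_s - eta * dL/dw_s, with dL/dw_s = (sigmoid(z) - y) * a_s."""
    p = sigmoid(sum(ws * a_s for ws, a_s in zip(w, a)))
    return [ws - eta * (p - y) * a_s for ws, a_s in zip(w, a)]


w = [0.5, 0.5]   # current scale weights
a = [1.0, -2.0]  # hypothetical per-scale evidence for the target class
w_new = update_scale_weights(w, a, y=1, eta=0.1)
print(bce_loss(w, a, 1), bce_loss(w_new, a, 1))  # the loss decreases after the step
```

In this toy example the step raises the weight of the scale whose evidence supports the correct label and lowers the weight of the scale that contradicts it, which is the behaviour the feedback mechanism is meant to produce.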

The spatial attention module plays a core role in the overall framework, and its function is reflected by its close connection with other modules. First, the module receives input features extracted by the "Features of Candidates Module," which combines the dynamic information generated by the motion model to represent the feature expressions of different regions or targets in the scene. Through the spatial attention mechanism, the module weights the input features, generates weighted features that reflect the focus of the model, and passes them to the binary classifier to predict the target state. At the same time, the weighted features are al
