Our everyday life is immersed in a sociocultural world, which we navigate using a set of sophisticated socio-cognitive abilities. Although at first it might seem that this sociocultural world is just another downstream product of our cognition, decades of research in developmental psychology suggest the opposite. Our socio-cultural world, cultural knowledge, and our socio-cognitive abilities are the foundation of our development and both our social and asocial intelligence (Vygotsky and Cole, 1978; Bruner, 1990; Tomasello, 2019).

For Vygotsky, socio-cultural interactions are a main driver of “higher-level” cognition (Vygotsky and Cole, 1978). He argues that many high-level cognitive functions first appear at the social level and then develop at the individual level. This leap from interpersonal processes to intrapersonal processes is referred to as internalization. A typical example of this process is learning to count. Children first learn to count out loud, i.e. with language and social guidance, which is an interpersonal process. As the child improves, it learns to count in its head, no longer requiring any external guidance: counting has become internalized, and it is a first step toward other, more complex forms of abstract thinking. Vygotsky's theories influenced multiple works within cognitive science (Clark, 1996; Hutchins, 1996), primatology (Tomasello, 1999) and the developmental robotics branch of AI (Billard and Dautenhahn, 1998; Brooks et al., 2002; Cangelosi et al., 2010; Mirolli and Parisi, 2011).

Another pillar of modern developmental psychology is Jerome Bruner. He, too, emphasized the importance of culture in human development. Bruner (1990) writes: “it is culture, not biology, that shapes human life and the human mind, that gives meaning to action by situating its underlying intentional states in an interpretative system.” Most importantly for this paper, he presents a pragmatic view that studies how referencing, requesting and finally language develop through routinized social interactions (formats) in which those abilities are necessary to achieve various ends. He describes these interactions as scaffolded: the caretaker gradually helps less and demands more of the child to achieve those goals, and this bootstraps the child's development (Bruner, 1985).

Finally, Michael Tomasello's work (Tomasello, 1999, 2019, 2020) constitutes a representative and contemporary assessment of the nature and central importance of sociality in human cognition. He outlined core social abilities and motivations through theoretical and experimental studies with humans and apes. When combined with the relevant experience, those abilities enable us to enter, benefit from, and contribute to human culture, i.e. they enable cumulative cultural evolution (a powerful form of cultural transmission fostering the development and perpetuation of complex culture and knowledge) (Tomasello, 1999).

Given the key role social cognition plays in human cognition and cultural evolution, it is natural that the field of AI aims to model social intelligence. A socially competent AI could learn our culture and participate in its cultural evolution, i.e. improve our concepts, theories, and inventions, and create new ones. A system capable of out-of-the-box thinking, of finding creative solutions, and of discovering new relevant problems must learn our values and how we see and understand the world (it must learn our culture). We do not claim that the SocialAI school is sufficient to reach that distant and complex goal. We only propose that being informed by the concepts discussed in this paper is useful, and we present SocialAI as a tool which could be used to start investigating such questions in more detail. Enriching AI with those skills also has numerous practical implications. Socially competent robots, capable of social learning, would be much easier to deploy and adapt to novel tasks and tools. For example, a robotic learner able to detect, learn and reuse context-dependent sets of communicative gestures and utterances could be easily integrated into human teams performing collaborative tasks, without requiring humans to adopt new conventions. Furthermore, robots capable of learning human values and moral norms will be capable of performing tasks within the constraints defined by those values.

To further clarify the motivation of this work, we present an analogy with the skill of multiple object tracking (MOT). The MOT ability is an object of study under the umbrella of perception in developmental psychology. This ability enables humans, for instance, to drive a car. In AI, the ability of MOT was adopted as a research goal. An algorithm with this ability could at some point be used in applications involving high-level perception, such as autonomous driving. However, it remains possible that autonomous driving could be solved without MOT as an intermediary step. Furthermore, the implementation of a MOT-capable system does not need to be similar to a human MOT system. Similarly, socially recursive inferences are an object of study under the umbrella of social cognition in developmental psychology. This ability enables humans, for instance, to play Pictionary. We therefore argue that it should be adopted as a research goal, even though it remains possible that a Pictionary-playing system could be created without this intermediary step. As with MOT, the implementation of such a system does not need to be similar to a human system for social inferences.

AI research on interactive agents is often focused on navigation and object manipulation problems, devoid of any social dimension (Mnih et al., 2015; Lillicrap et al., 2015). Sociality is mostly studied in multi-agent settings, where the main focus is often on the emergence of culture (often with only a weak grounding in developmental psychology) (Jaques et al., 2019; Baker et al., 2019). While we believe that those directions are both interesting and important, in this work we focus on entering an already existing complex culture, and we argue that it can be beneficial to be informed by developmental psychology theories.

Cognitive science has inspired many works on social cognition in AI, in both disembodied and embodied settings. In a disembodied setting, machine learning models have been evaluated on their capacity to predict agent actions in theory of mind experiments (Rabinowitz et al., 2018) and in more general social perception assessments (Netanyahu et al., 2021). In a virtual embodied setting, Jaques et al. (2019) implemented a model of social influence to foster coordination of MARL agents. Wu et al. (2021) study theory of mind in the context of collaboration and present the Bayesian Delegation algorithm to infer the intentions of others. Finally, in the real-world embodied setting, Cangelosi and Schlesinger (2014) discuss how to leverage knowledge from the cognitive development of human babies in embodied robots. Vollmer et al. (2016) argue that restricted predefined (not learned) interaction protocols (pragmatic frames) are usually used in the field of Human-Robot Interaction, and suggest studying a broader set of social situations. Our work follows this rich tradition of leveraging insights from cognitive science and developmental psychology, with a focus on virtual embodied agents.

In the rapidly emerging field of large language models, social cognition research consists of proof-of-concept simulations (Park et al., 2023) and systematic benchmarks. The two most notable benchmarks are SiQA (Sap et al., 2019), which evaluates social common sense reasoning (without grounding in psychology), and ToMi (Le et al., 2019), which presents false-belief queries (false belief representing only a small subset of social intelligence in general). We believe this relevant and interesting work can be further enriched by the overview of different aspects of social intelligence and the interactive settings presented in this work.

Following the theories of Michael Tomasello and Jerome Bruner, this work identifies a richer set of socio-cognitive skills than those currently considered in most of the AI research. More precisely, we focus on three key aspects of social cognition as identified by Tomasello: (1) social cognition: the ability to infer what others see and to engage in joint attention, (2) communication: the development of referential communication through pointing and the beginning of conventionalized communication through language, and (3) cultural learning: the use of imitation and role reversal imitation in social learning. We also outline two concepts from Jerome Bruner's work: formats and scaffolding. Formats refer to the way in which social interactions are structured, and scaffolding refers to the temporary support provided by a caretaker to help a learner achieve a task that would be otherwise too difficult.

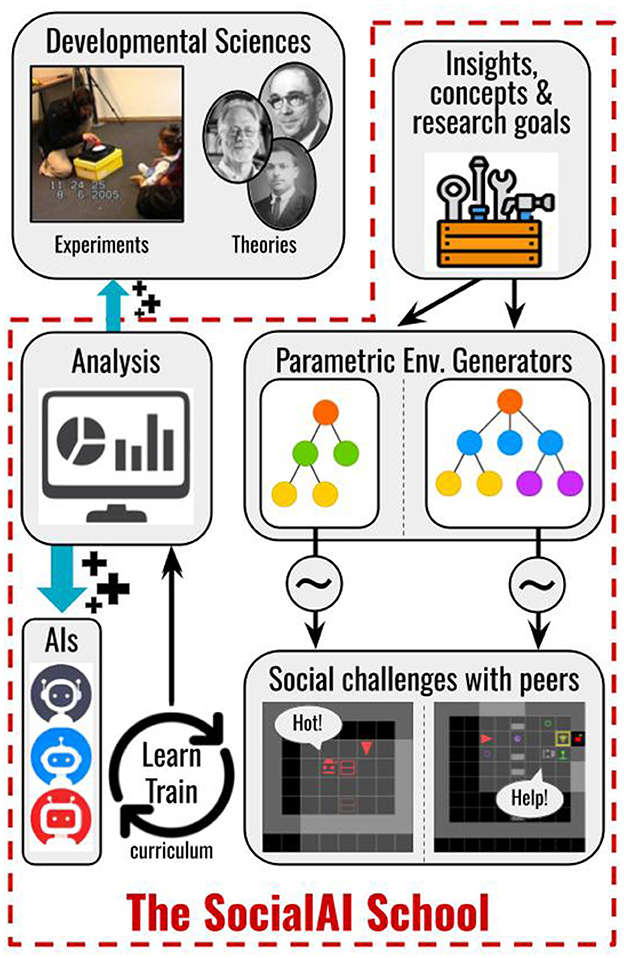

Based on this set of target abilities, we construct the SocialAI school (shown in Figure 1), a tool [based on MiniGrid (Chevalier-Boisvert et al., 2018)] which enables the construction of social environments whose diverse grid-world scenarios afford rich yet tractable research on the acquisition of social competence. The social scenarios considered are organized according to the key cognitive science experiments used to study social cognition in children, highlighting core developmental steps.

Figure 1. The SocialAI School contains technical and conceptual tools simplifying research on socially proficient AI agents. Developmental sciences give inspiration to the SocialAI School in the form of terminology and research goals, based on which we created a parametric environment generator. The user sets the parameters that define how environments are sampled (~). This enables analysis and training experiments, giving insights for building better AI (+) and even for developmental sciences (+).

We do not claim that the SocialAI school is sufficient to construct a socially competent agent as this is a very far-reaching and complex goal. However, we believe that in aiming for this goal, concepts from developmental psychology can serve as signposts for AI—give directions and enable us to define short term goals. Given that the outlined skills are at the very core of human social and cognitive competences, artificial agents aimed at participating in and learning from social interactions with humans are likely to require the same core competences. We present the SocialAI school merely as a first step toward this goal. The SocialAI school can be easily modified and extended. The code is open-sourced and accompanied by additional resources (see Figure 2).

Figure 2. The SocialAI school is accompanied by additional resources available at the project's website.

In our experiments, we aim to show the versatility of the studies which can be conducted with the SocialAI school. We present experiments regarding the following questions: generalization of social inferences (the pointing gesture) to new contexts, recreating an experiment from cognitive science (to study the knowledge transfer during role reversal), and the impact of a scaffolded environment on the agent's learning. To show the diversity of agents which can be used, we conduct those experiments with RL agents, and present an additional case study with LLMs as interactive agents. In the Supplementary material, we explore many more questions such as linguistic inferences, joint attention, and imitation. We hope to encourage future work extending and building on these first experiments to study various questions regarding social competence, for example with new socio-cultural scenarios, architectures, and training regimes.

We outline the following main contributions of this work:

• An introduction to Michael Tomasello's and Jerome Bruner's theories on child development and core socio-cognitive abilities.

• An outline of a set of core socio-cognitive abilities important for current AI research (abilities that enable an agent to enter a culture).

• The SocialAI school: a tool including a customizable procedural generation suite of social environments aiming to simplify studies of socio-cognitive abilities of AI agents.

• Examples of case studies demonstrating how SocialAI can be used to study various questions regarding socio-cognitive abilities in AI.

2 Cognitive science background

This section introduces Michael Tomasello's and Jerome Bruner's theories and concepts.

2.1 The shared intentionality theory (Michael Tomasello)

Humans are born into a culture filled with cultural artifacts, symbols and institutions like language, social norms, tool industries, or even governments (Richerson and Boyd, 2006; Tomasello, 2019). These artifacts are a product of a series of modifications over many generations. Tomasello calls this cumulative cultural evolution, and argues that it is behind our most impressive achievements (Tomasello, 1999).

Cumulative cultural evolution is grounded in our socio-cognitive abilities (e.g. social cognition, cultural learning, communication), which enable us to learn, improve, and teach our culture (Tomasello, 2019), i.e. enter a culture. Cultural artifacts inherited through this process become the core of our cognition. An example of this is language, which influences our cognition in many ways. For example, it defines how we categorize and construe the world, and enables a powerful form of social learning: instructed learning (Tomasello, 1999). This makes socio-cognitive abilities crucial, as their early development bootstraps both our social and asocial cognition (Herrmann et al., 2007).

Tomasello's Shared intentionality theory argues that human socio-cognitive abilities, such as communication and social learning, are transformed by two developmental steps: the emergence of Joint intentionality at around 9 months of age (the 9-month revolution), and the emergence of Collective intentionality at around 3 years of age (the objective/normative turn) (Tomasello, 2019).

Joint intentionality emerges at around 9 months of age (Tomasello, 2019). It enables children to form a joint agent (a dyadic “we”)—they understand that they work with a partner toward the same joint goal. Children begin to view dyadic social interactions through a “dual-level structure”: a joint agent “we” on one level, and a personal “I” on another, i.e. we both understand that we both have separate roles (“I”), and that we work together toward the same joint goal (“we”). This enables human children to take the perspective of others, which can also be done recursively (they are not only both attending to the same goal, they are also both attending to the partner's attention to the goal, and they both know that they both are doing so).

Collective intentionality emerges at around 3 years of age (Tomasello, 2019). It enables children to form a cultural group-minded “we,” which in comparison with a dyadic “we” represents an identity for a group. For example, a child might enforce a social norm because “this is how we, in this culture, do things.” Consequently, children begin to participate in conventions and norms, and to view things from the “objective” perspective.

These two developmental steps transform countless abilities, motivations, and behaviors. For the purpose of this paper, we focus on the following three developmental pathways: social cognition (Section 2.1.1), communication (Section 2.1.2), and social learning (Section 2.1.3), as we consider them the most relevant for AI at the moment.

2.1.1 Social cognition

In this section, we discuss the development of the ability to coordinate perspectives and view things from the objective perspective (a perspective independent from any individual) (Tomasello, 2019). The starting point is the ability to infer what another sees or knows. The earliest example of this is gaze following in six-month-olds (D'Entremont et al., 1997). Here, only one perspective is processed at a time. Joint attention (JA) emerges at around 9 months of age. Tomasello (2019) defines JA as consisting of two elements: triangulation (two participants attending to the same referent) and recursiveness (both participants being recursively aware that they are both sharing attention). JA is characterized by the dual-level structure of shared attention (on one level) and individual perspectives (on another level). Consequently, children start to align and exchange perspectives. Once children reach a sufficient level of linguistic competence, they start sharing attention to mental content in the form of linguistic discourse (at two to three years of age). The presence of conflicting perspectives in linguistic discourse (e.g. a disagreement about where some object is located) pushes children to resolve those conflicts, which they do by forming the “objective” perspective, and coordinating other perspectives with it.

2.1.2 Communication

Communication starts with imperative gestures for self-serving purposes. An example of such a gesture is the child pulling the adult's hand, requesting them to pick them up. This gesture always has the same imperative meaning, and it never refers to an external object. The 9-month revolution brings forth referential communication: children start to communicate triadically about external referents through pointing and pantomiming. The pointing gesture is a powerful way of communicating, as the same gesture can be used to express different meanings in different contexts (provided that the observer can infer that meaning). The ability to infer this meaning is based on the emerging abilities of joint intentionality: those of joint attention and, most notably, of socially recursive inferences. To interpret a pointing gesture, we make a recursive inference of what “you intend for me to think.” For example, if we are looking for a ball together and you point to a cupboard behind me, I should infer that you are drawing my attention to the cupboard to communicate that I should look for the ball in the cupboard. The next step is the appearance of conventionalized linguistic communication. The underlying principle stays the same: reference to an external entity combined with inferring the meaning through recursive inferences. The difference is that, now, the meaning depends on the conventional means (e.g. words and phrases) as well as the context. Tomasello argues that, at first, children don't understand language as conventional, and they use it as any other tool. The understanding of language as conventional follows the emergence of collective intentionality after the third birthday. This enables a myriad of different language uses, such as discourse or pedagogy.

2.1.3 Cultural learning

Human culture is characterized by a powerful form of cultural transmission called cumulative cultural evolution: inventions quickly spread and are improved by following generations (Tomasello, 1999). These inventions spread at such a pace that they are rarely forgotten or lost. This is referred to as the ratchet effect (Tomasello et al., 1993): inventions are iteratively improved without slippage back. This effect is enabled by human social learning abilities (e.g. imitation, instructed learning) and motivations (to learn from others, but also to affiliate and conform). The earliest form of cultural learning is the mimicking of facial expressions [observed even in neonates (Meltzoff and Moore, 1997)]. Over the course of the first year, children begin to imitate others' actions and goals, and then they begin doing so in ways which demonstrate their understanding of others as intentional agents (Meltzoff, 1995). For example, in the failed-attempt paradigm children imitate a goal that the adult attempted, but failed, to reach. Joint intentionality brings forth a new form of cultural learning called role reversal imitation. Children can reverse the roles of a collaborative activity by learning about the partner's role only from playing their own. For example, children respond to an adult tickling their arm by tickling the adult's arm (instead of their own) (Carpenter et al., 2005). This is enabled by the dual-level structure of joint intentionality through which children understand, at the same time, the joint goal of a dyadic interaction on one level, and the individuals' separate roles on another. The next big step in the development of cultural learning is learning from instructions, or instructed learning (following the emergence of collective intentionality). It is based on the adults' motivation to teach children as well as on the children's ability to understand and learn from linguistic instructions. Children understand knowledge acquired through instructions as objective truth, and generalize it much better than knowledge acquired by other means (Butler and Tomasello, 2016). In this way we acquire the most complex knowledge and skills such as reading or algebra.

2.2 Scaffolding and formats in Jerome Bruner's theory

This work is also influenced by Jerome Bruner's theories, especially regarding the concepts of scaffolding (Wood et al., 1976) and formats (Bruner, 1985), which were recently reintroduced to AI as pragmatic frames (Vollmer et al., 2016).

Formats (Pragmatic frames) (Bruner, 1985) simplify learning by providing a stable structure to social interactions. They are regular patterns characterizing the unfolding of possible social interactions (equivalent to an interaction protocol or a grammar of social interactions). Formats consist of a deep structure (the static part) and a surface structure (the varying realizations managed by some rules). An example of a format is the common peek-a-boo game. The deep structure refers to the appearance and the reappearance of an object. The surface structure can be realized in different ways. For example, one might hide an object using a cloth, or hands; one might hide his face or a toy; one might do shorter or longer pauses before making the object reappear. We understand social interactions through such formats, and our social interactions are based on our ability to learn, negotiate, and use them.

Another relevant concept is scaffolding (Wood et al., 1976) [similar to Vygotsky's zone of proximal development (Vygotsky and Cole, 1978)]. Scaffolding is a process through which an adult bootstraps the child's learning. The adult controls aspects of a task which are currently too hard for the child (scaffolds the interaction). The scaffold is gradually reduced as the child is ready to take on more aspects of the task, until they can solve the task alone (without scaffolding). An example is a child constructing a pyramid with the help of an adult (Wood et al., 1976). At first, the child is not even focusing on the task, and the adult tries to get its attention to the task by connecting blocks and building the pyramid in front of them. Once the child is able to focus on the task, the adult starts passing the blocks to the child to connect. In the next phase, the child is grabbing blocks by itself, and the adult is helping through verbal suggestions. Then, only verbal confirmations are needed to guide the child. Finally, the child can construct the pyramid by itself. In summary, the adult observes the child and gradually transfers parts of the task (removes the scaffold) to the child. Through this process, the caretaker enables the child to master a task they would not be able to master alone.

3 The SocialAI school

The SocialAI school is a tool for building interactive environments to study various questions regarding social competence, such as “What do concepts related to social abilities and motivations (outlined by developmental psychology) mean in the scope of AI?”, “How can we evaluate their presence in different AI agents?”, “What are their simplest forms and how can AI agents acquire them?”

To construct SocialAI, we rely on a set of key experiments and studies from developmental psychology, which were used to outline the most important abilities, motivations and developmental steps in humans. From the work of Tomasello, we focus on developments before and around the age of 9 months (we believe it is important to address those before more complex ones relating to the development of 3-year-olds, see Section 2.1). We study the following developmental pathways: Social cognition (inferring others' perception and joint attention), Communication (referential communication through the pointing gesture and the beginning of conventionalized communication through simple language), and Cultural Learning (imitation and role reversal imitation). From the work of Bruner, we study the concepts of Formats and Scaffolding (see Section 2.2). Using the SocialAI school, we construct environments and conduct experiments regarding all of those concepts.

SocialAI, which is built on top of MiniGrid (Chevalier-Boisvert et al., 2018), includes a customizable parameterized suite of procedurally generated environments. We implement this procedural generation with a tree-based structure (the parametric tree). This makes it simple to add new environments, modify existing ones, and control their sampling. All the current environments are single-agent and contain a scripted peer. The agent has to interact with the peer to reach an apple. This setup enables a controlled and minimal representation of social interactions. To facilitate future research, this tool was made to be very easy to modify and extend. The SocialAI school is completely open-sourced, and we hope that it will be useful for studying questions regarding social intelligence in AI.

The remainder of this section is organized as follows. First, Section 3.1 describes technical details such as the observation and the action space. Then, Section 3.2 introduces the parameter tree and explains how it can be used to sample environments. Finally, Section 3.3 describes two environment types, which were used in the case studies in Section 4. In the Supplementary material, we present one additional environment type.

3.1 Parameterized social environments

The SocialAI school is built on top of the MiniGrid codebase (Chevalier-Boisvert et al., 2018), which provides an efficient and easily extensible implementation of grid world environments. SocialAI environments are grid worlds consisting of one room. In all of our environments, the task of the agent is to eat the apple, at which point it is rewarded. The reward is diminished according to the number of steps it took the agent to complete the episode. The episode ends when the agent eats the apple, uses the done action, or after a timeout of 80 steps.
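The reward rule described above can be sketched as follows. This is only an illustration: it assumes the step-based discount that MiniGrid uses by default, 1 − 0.9 · (steps / max_steps), and it assumes that episodes ending by the done action or by timeout yield no reward. The exact formula used in SocialAI is not stated in this section, and the names `episode_reward` and `MAX_STEPS` are hypothetical.

```python
MAX_STEPS = 80  # episode timeout stated in the text


def episode_reward(steps_taken: int, ate_apple: bool, max_steps: int = MAX_STEPS) -> float:
    """Return the reward obtained at the end of an episode.

    Assumption: the discount follows MiniGrid's default rule,
    1 - 0.9 * (steps / max_steps); SocialAI may use a different one.
    """
    if not ate_apple:
        # Assumption: the done action and the timeout give no reward.
        return 0.0
    # The more steps the agent needed, the smaller the reward.
    return 1.0 - 0.9 * (steps_taken / max_steps)
```

Under these assumptions, an agent that reaches the apple quickly always earns more than one that reaches it late, which is the only property the text relies on.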

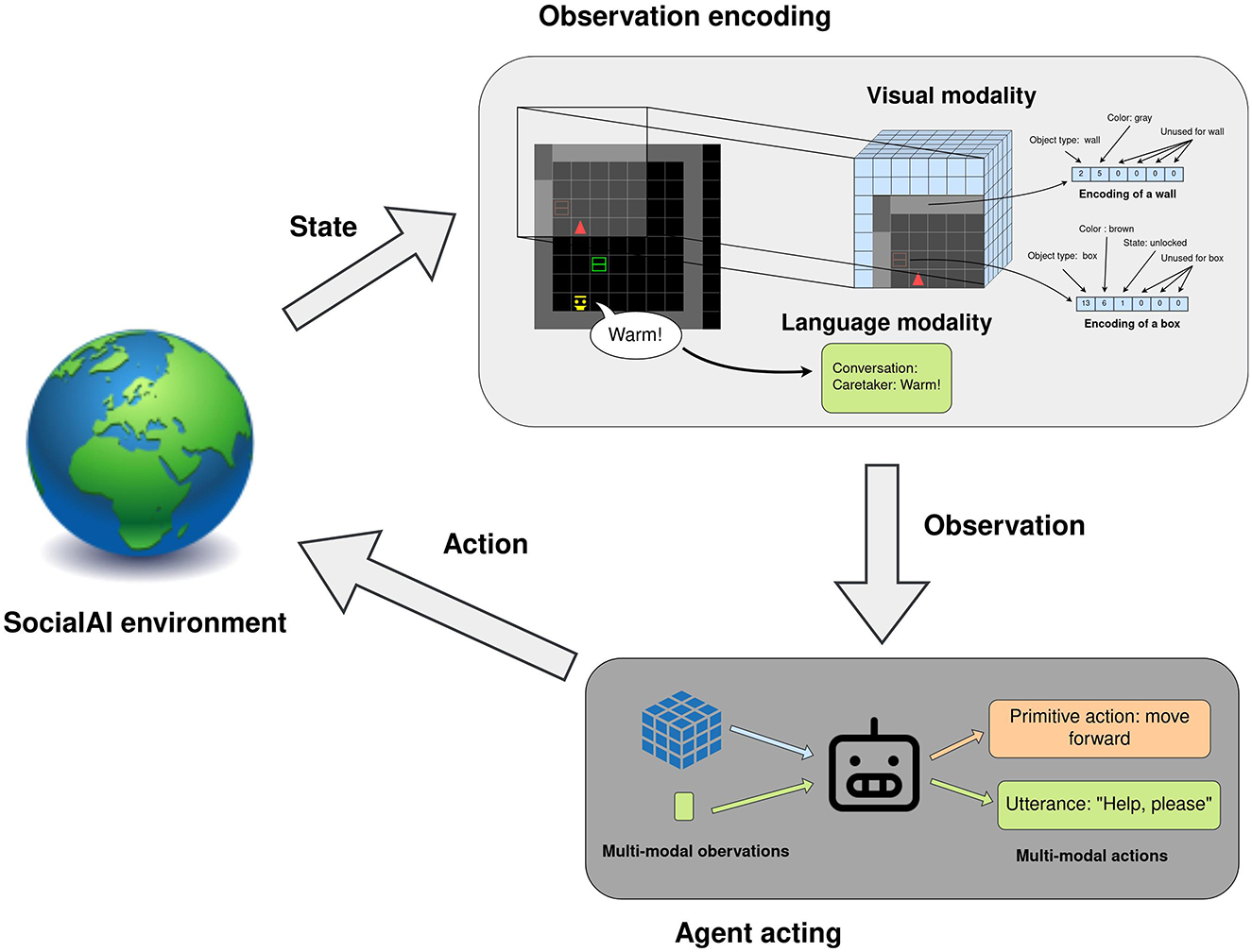

The agent's observation and action spaces are shown in Figure 3. The multimodal observation space consists of the full dialogue history and a 7 × 7 × 6 tensor corresponding to the 7 × 7 grid in front of the agent. Each cell is encoded by six integers representing the object type, color, and some additional object-dependent information (e.g. whether a door is open, the point direction, the gaze direction). A list of all possible objects is provided in the Supplementary material. The agent acts in the environment through a multimodal action space, which consists of 6 primitive actions (no, movement actions, toggle, and done) and a 4 × 16 templated language (the agent also has the option not to speak). All environments can also be instantiated as Textworlds (Côté et al., 2018). This procedure is explained in more detail in Section 4.5.

Figure 3. Workflow of an agent acting in the SocialAI school. The environment generates a state, which is represented as multimodal observations: a 7 × 7 × 6 tensor and the full dialogue history. The agent acts through a multi-modal action space consisting of primitive actions and utterances.
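To make the shapes of the observation and action spaces concrete, here is a minimal sketch using only the Python standard library. The class and function names are hypothetical and do not come from the SocialAI codebase. The reading of the "4 × 16 templated language" as 4 templates combined with 16 words is an assumption.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

VIEW_SIZE = 7      # the agent sees the 7 x 7 grid in front of it
CELL_CHANNELS = 6  # each cell is encoded by six integers
N_PRIMITIVE = 6    # six primitive actions
N_TEMPLATES, N_WORDS = 4, 16  # assumed reading of the 4 x 16 templated language


@dataclass
class Observation:
    grid: List[List[List[int]]]                        # shape (7, 7, 6)
    dialogue: List[str] = field(default_factory=list)  # full dialogue history


@dataclass
class Action:
    primitive: int                                # index in [0, N_PRIMITIVE)
    utterance: Optional[Tuple[int, int]] = None   # (template, word); None = stay silent


def empty_observation() -> Observation:
    """Build an all-zero observation with the stated shape."""
    grid = [[[0] * CELL_CHANNELS for _ in range(VIEW_SIZE)] for _ in range(VIEW_SIZE)]
    return Observation(grid=grid)


def is_valid(action: Action) -> bool:
    """Check that both modalities of the action are within range."""
    if not 0 <= action.primitive < N_PRIMITIVE:
        return False
    if action.utterance is None:
        return True  # the agent may choose not to speak
    template, word = action.utterance
    return 0 <= template < N_TEMPLATES and 0 <= word < N_WORDS
```

Keeping the utterance optional mirrors the statement that the agent can act without speaking.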

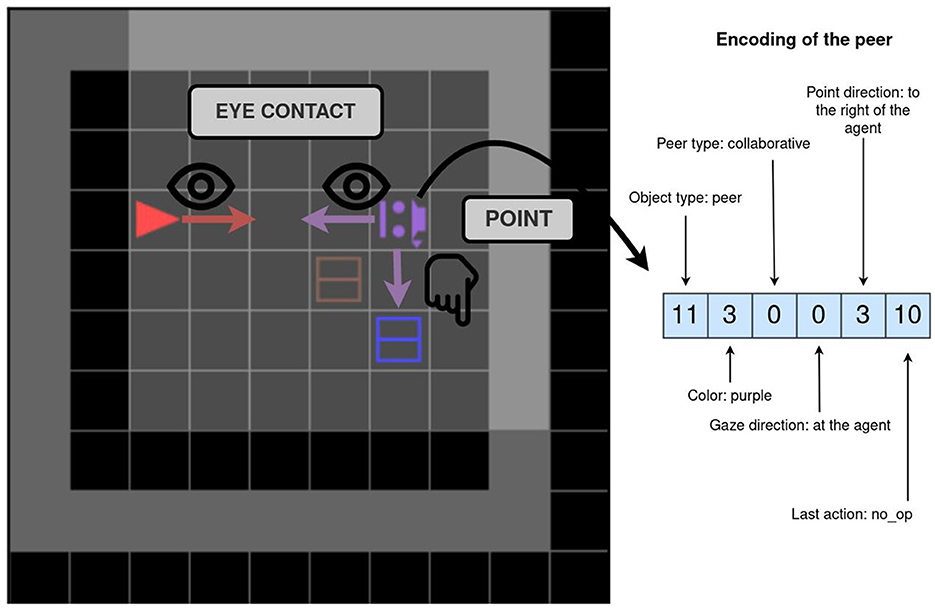

All environments, unless otherwise stated, contain a scripted social peer, and the task can only be solved by interacting with this peer (for which socio-cognitive abilities are needed). A social peer observes the world in the same way as the agent does (as a grid in front of it), and it also observes the agent's utterances. Its action space consists of primitive actions for movement, pointing, and the toggle action. The peer can also communicate with words and sentences. As the peer is scripted, there are no constraints on the language it can utter (it is not constrained to a templated language). The language it uses depends on the environment, which defines which sentence the peer will utter at which point. The peer is represented in the agent's observation by seven integers depicting its object type, position, color, type (cooperative or competitive), gaze direction, point direction, and last executed primitive action. The peer's gaze and point directions are represented relative to the agent (e.g. 1: to the left of the agent). The pointing direction can also be set to 0, which signifies that the peer is not pointing. Figure 4 shows an example of an environment with the corresponding encoding of the peer. The agent (red) and the scripted peer (purple) are making eye contact: the peer and the agent are in the same row or column and their gazes meet frontally. In this example, the scripted peer is also pointing to the blue box.

Figure 4. A depiction of a peer and its encoding. The agent and a peer are in eye contact, and the peer is pointing to the blue box. To the right is an encoding of the peer. The encoding contains information about the peer, e.g. the gaze and point direction.
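The peer encoding and the eye-contact condition can be sketched as below. The field names, the `(dx, dy)` heading convention, and the function `in_eye_contact` are all hypothetical. The sketch works on absolute grid positions and ignores any objects standing between the agent and the peer, which the actual environment may treat differently.

```python
from typing import NamedTuple, Tuple


class PeerEncoding(NamedTuple):
    """The seven integers describing the peer in the agent's observation."""
    object_type: int
    position: int
    color: int
    peer_type: int        # cooperative or competitive
    gaze_direction: int   # relative to the agent
    point_direction: int  # relative to the agent; 0 means "not pointing"
    last_action: int      # last executed primitive action


# Hypothetical absolute headings expressed as (dx, dy) unit vectors.
RIGHT, DOWN, LEFT, UP = (1, 0), (0, 1), (-1, 0), (0, -1)


def in_eye_contact(agent_pos: Tuple[int, int], agent_dir: Tuple[int, int],
                   peer_pos: Tuple[int, int], peer_dir: Tuple[int, int]) -> bool:
    """True if both are in the same row or column and face each other."""
    dx = peer_pos[0] - agent_pos[0]
    dy = peer_pos[1] - agent_pos[1]
    if (dx == 0) == (dy == 0):
        # Either the same cell, or neither the same row nor the same column.
        return False
    # Unit vector pointing from the agent toward the peer.
    toward_peer = ((dx > 0) - (dx < 0), (dy > 0) - (dy < 0))
    toward_agent = (-toward_peer[0], -toward_peer[1])
    # The gazes meet frontally when each one looks straight at the other.
    return agent_dir == toward_peer and peer_dir == toward_agent
```

For instance, an agent at (1, 3) facing `RIGHT` and a peer at (5, 3) facing `LEFT` satisfy the condition, while the same peer facing `UP` does not.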

The SocialAI environments are parameterized, and those parameters define the social dimensions of the task. In other words, parameters define which socio-cognitive abilities are needed to solve the task. For example, depending on the ENVIRONMENT TYPE parameter, the peer can give information, collaborate with the agent, or be adversarial. In the case of the peer giving information, additional parameters define the form of this information (linguistic or pointing).

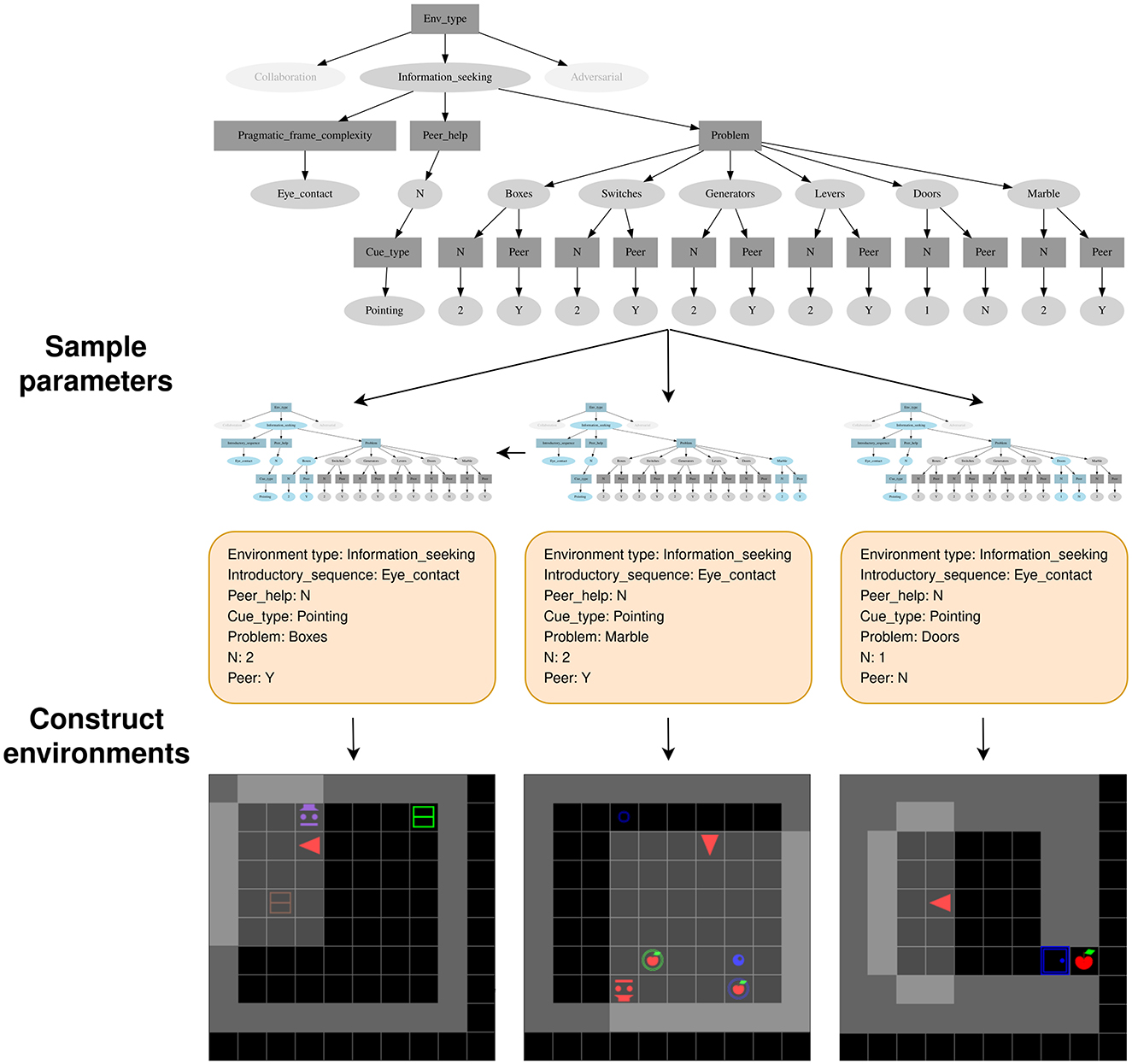

3.2 Parameter tree

SocialAI enables the creation of many parameterized environments, and those parameters are implemented as nodes in a parameter tree. A parameter tree is a structure through which the experimenter can easily define which parameters (and their values) can be sampled. An example of such a tree can be seen in Figure 5. The experimenter defines a parameter tree at the beginning of the experiment. Each episode begins with the sampling of a set of parameters from this tree. Then, an environment is created and the agent is placed inside.

Figure 5. An example of procedural environment generation using tree-based parametric sampling. A parameter node (rectangle) requires that one of its children (a value node) is selected. A value node (oval) requires that sampling progresses through all of its children (parameter nodes). Three examples of parameter sampling (and the three corresponding environments) are shown.

The parameter tree is used to sample parameter sets; an example of such sampling is shown in Figure 5. There are two kinds of nodes: parameter nodes (rectangles) and value nodes (ovals). Parameter nodes correspond to parameters, and value nodes correspond to possible values for those parameters. Sampling proceeds in a top-down fashion, starting from the root node. In all our experiments, the ENV_TYPE parameter node is the root. Sampling from a parameter node selects one of its children (a value node), i.e. sets a value for this parameter. This can be done by uniform sampling over the node's children, or by prioritized sampling with a curriculum. Once a value node has been chosen, the sampling continues through all of its children (parameter nodes). In other words, setting a value for one parameter defines which other parameters (the value node's children) need to be set. In our codebase, it is simple to create such trees and to add additional parameters and environments. In the following sections, we explain the most relevant parameters. The Supplementary material contains additional examples of parametric trees.
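The sampling procedure described above can be sketched in a few lines. This is a minimal illustration, not the SocialAI implementation: the classes `ParamNode` and `ValueNode`, the function `sample_parameters`, the value "1" for N, and the example weights are all hypothetical. Prioritized sampling is approximated here by fixed per-child weights, whereas a curriculum would update them over time.

```python
import random
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class ValueNode:
    """A possible value of a parameter (an oval in Figure 5)."""
    name: str
    children: List["ParamNode"] = field(default_factory=list)


@dataclass
class ParamNode:
    """A parameter (a rectangle in Figure 5); exactly one child is selected."""
    name: str
    children: List[ValueNode] = field(default_factory=list)
    # Optional sampling weights, e.g. set by a curriculum; None means uniform.
    weights: Optional[List[float]] = None


def sample_parameters(root: ParamNode, rng: random.Random) -> Dict[str, str]:
    """Sample one parameter set top-down, starting from the root node."""
    params: Dict[str, str] = {}
    pending = [root]
    while pending:
        node = pending.pop()
        # Parameter node: select exactly one of its value nodes.
        value = rng.choices(node.children, weights=node.weights, k=1)[0]
        params[node.name] = value.name
        # Value node: sampling continues through all of its parameter nodes.
        pending.extend(value.children)
    return params


# Illustrative tree: ENV_TYPE -> INFORMATIONSEEKING -> {PROBLEM, N}.
tree = ParamNode("ENV_TYPE", [
    ValueNode("INFORMATIONSEEKING", [
        ParamNode("PROBLEM", [ValueNode("BOXES"), ValueNode("LEVERS")]),
        ParamNode("N", [ValueNode("1"), ValueNode("2")], weights=[0.25, 0.75]),
    ]),
])

params = sample_parameters(tree, random.Random(0))
print(params)  # one value for each of ENV_TYPE, PROBLEM and N
```

Because only the children of the selected value node are visited, parameters that are irrelevant to the chosen environment type never appear in the sampled set.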

3.3 Environment types

The most important parameter is the environment type, ENV_TYPE (the root node). We implemented three different environment types: INFORMATIONSEEKING, COLLABORATION, and ADVERSARIALPEER. A parameter tree does not have to contain all of them; which ones it contains depends on the type of experiment one wants to conduct (most often only one will be present). For example, Figure 5 shows the tree with only the INFORMATIONSEEKING environment type. This tree was used to study understanding of the pointing gesture in Section 4.2. In the rest of this section, we describe the INFORMATIONSEEKING and the COLLABORATION environment types. The ADVERSARIALPEER type is described in the Supplementary material.

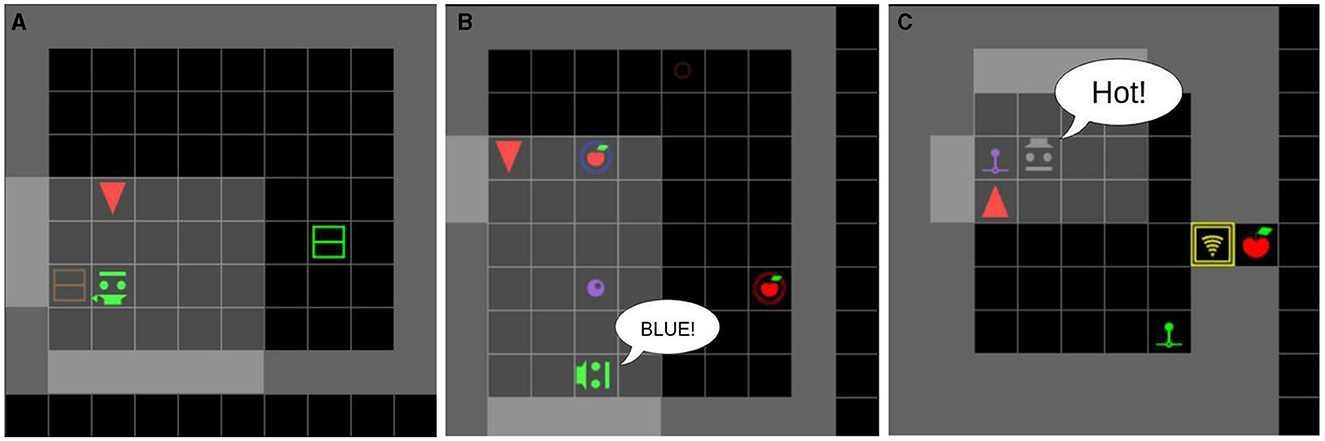

3.3.1 Information seeking type environments

We used this environment type in case studies regarding communication, joint attention, and imitation learning. Figure 6 shows examples of INFORMATIONSEEKING type environments.

Figure 6. Examples of INFORMATIONSEEKING type environments, in which agents learn to find hidden apples using textual or non-verbal communication with social peers. (A) A scripted peer pointing to a box. The agent needs to open the red box. (B) A scripted peer uttering the color of the correct generator. The agent needs to push the marble onto the blue generator. (C) A scripted peer hinting at the distance to the correct lever (“Hot” means very close). The agent needs to pull the purple lever to open the door.

The general principle of this environment type is as follows. The agent is rewarded upon eating the apple, which is hidden. The apple can be accessed by interacting with an object. The PROBLEM parameter defines which objects will be in the environment. There are six different problems: BOXES, SWITCHES, MARBLE, GENERATORS, DOORS, and LEVERS. Different objects make the apple accessible in different ways. For example, opening the box will make the apple appear at the location of the box, while pulling the lever will open the door in front of the apple. A distractor can also be present (if N is set to 2). A distractor is an object of the same type as the correct object, but if it is used, both objects are blocked and the apple cannot be obtained in this episode.

To find out which object is the correct one, the agent must interact with the scripted peer. This interaction starts with the agent introducing itself. The way in which the agent should introduce itself is defined by the INTRODUCTORY SEQUENCE parameter. We define the following four values: NO, EYE_CONTACT, ASK, ASK-EYE_CONTACT. For the value NO, no introduction is needed and the peer will give information at the beginning of the episode. In most of our experiments, we will use the value EYE_CONTACT. For this value, the scripted peer will turn to look at the agent and wait for the agent to look at it. The agent must direct its gaze directly toward the scripted peer. An example of an established eye contact can be seen in Figure 4. For the value ASK, the agent needs to utter “Help, please” (a full grammar of the language is given in the Supplementary material). Finally, the ASK-EYE_CONTACT value is a combination of the previous two (the agent utters “Help, please” during eye contact).

Once the agent introduces itself, the HELP parameter defines the peer's behavior. If it is set to Y, the peer will obtain the apple and leave it for the agent to eat. Otherwise, it will give cues to the agent about which object to use. The nature of this cue is defined by the CUE TYPE parameter. We define four different values: POINTING, LANGUAGE COLOR, LANGUAGE FEEDBACK, and IMITATION. For the POINTING type, the peer will point to the correct object. It will move to a location from which it can unambiguously point (e.g. the same row) and point to the object. For the LANGUAGE COLOR type, the peer will say the color of the correct object. For the LANGUAGE FEEDBACK type, the peer will hint how close the agent is to the correct object. Every step, the peer will say “Cold,” “Medium,” “Warm,” or “Hot,” depending on how close the agent is to the correct object (e.g. “Cold” means that the agent is far from the object, and “Hot” that it is right next to it). For the IMITATION type, the peer will demonstrate the use of the correct object. The peer will use the correct object, obtain the apple, eat it, and reset the environment to its initial state.
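
As a rough illustration of the LANGUAGE FEEDBACK cue, the sketch below maps the agent's distance to the correct object onto one of the four words. The text only specifies the ordering of the words (“Cold” is far, “Hot” is right next to the object); the use of Manhattan distance on grid coordinates, the function name `feedback_word`, and the threshold values are assumptions made for this example and may differ from the actual implementation.

```python
def feedback_word(agent_pos, target_pos, thresholds=(1, 3, 5)):
    """Return the peer's hint for the agent's current position.

    agent_pos, target_pos: (x, y) grid coordinates.
    thresholds: hypothetical maximum distances for "Hot", "Warm", "Medium".
    """
    # Manhattan distance is an assumption; the source does not name a metric.
    dist = abs(agent_pos[0] - target_pos[0]) + abs(agent_pos[1] - target_pos[1])
    hot, warm, medium = thresholds
    if dist <= hot:
        return "Hot"
    if dist <= warm:
        return "Warm"
    if dist <= medium:
        return "Medium"
    return "Cold"


if __name__ == "__main__":
    # The peer would utter one such word at every step.
    for pos in [(0, 0), (3, 3), (4, 5), (5, 4)]:
        print(pos, feedback_word(pos, target_pos=(5, 5)))
```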

To analyze the agent's behavior more thoroughly, information seeking environments can also be created in their asocial versions. This can be achieved by setting parameter PEER to N and parameter N to 1. The asocial version of an information seeking environment contains no distractor and no peer, i.e. the agent just needs to use the only object in the environment.

3.3.2 Collaboration type environments

We used this environment type to study the agent's role-reversal ability. It consists of collaborative activities with two clearly defined roles. Environments are separated into two halves by a fence over which the agent can see, but which it cannot cross (each half corresponds to one role). If both roles are fulfilled correctly, two apples will become accessible (one on each side of the fence).

The most important parameters are ROLE and PROBLEM. The ROLE parameter defines in which role to put the agent. The PROBLEM parameter defines the collaborative activity, of which we implemented seven: DOORLEVER, MARBLEPUSH, MARBLEPASS, BOXES, SWITCHES, GENERATORS, and MARBLE. In DOORLEVER, one participant opens the door by pulling the lever, and the other passes through it and activates the generator (generating two apples). In MARBLEPUSH, one participant opens the door by pulling the lever, and the other pushes a marble through it. This marble activates the marble generator upon touching it. In MARBLEPASS, one participant pushes the marble to the right side of the room, and then the other redirects it toward the marble generator. In the other four problems, one participant is presented with two boxes of different colors, and the other participant is presented with two objects of those same colors and of the type defined by the PROBLEM parameter (e.g. two generators). First, the participant that was presented with boxes opens one box (an apple will be in both). After this, to obtain its apple, the other participant must use the object of the same color as the opened box. Figure 7 shows examples of COLLABORATION type environments.

Figure 7. Examples of COLLABORATION type environments, in which agents must learn cooperative strategies with a (scripted) peer to solve two-player puzzles. (A) The MARBLEPASS problem with the agent in role B. The peer pushes the marble to the right and then the agent pushes it further to the purple marble generator. This makes two apples appear on the blue and red platforms. (B) The LEVERDOOR problem with the agent in role B. The peer opens the red door by pulling on the green lever. This enables the agent to go through the door and activate the purple generator. This makes two apples appear on the gray and yellow platforms. (C) The MARBLEPUSH problem with the agent in role A. The peer opens the yellow door using the green lever. Then the agent pushes the marble through the door to the purple marble generator. This makes two apples appear on the purple and green platforms.

Like the information seeking environments, collaboration environments can also be instantiated in their asocial versions. This can be achieved by setting the VERSION parameter to ASOCIAL. The peer is not present in the environment, and the environment is initialized so that the task can be solved alone. For example, in MARBLEPASS the marble is already on the right side of the room, so the agent just has to push it toward the marble generator.

4 Experiments

In this section, we demonstrate the diversity of experiments that can be conducted with the SocialAI school. To facilitate future research, the SocialAI school is easy to modify and extend, and is completely open sourced. We hope that it will be useful to study various questions regarding social intelligence in AI. Here, we present a series of such case-studies inspired by theories and studies discussed in Section 2.

The remainder of this section is organized as follows. In Section 4.1 we describe the agents used in case studies with reinforcement learning. In Section 4.2 we evaluate the generalization of socially recursive inferences by RL agents to new contexts—pointing in a new context. In Section 4.3 we show how an experiment from cognitive science can be recreated in the context of AI—we study the transfer of knowledge from one role to another, i.e. role reversal. In Section 4.4 we study how an RL agent can be made to learn a complex task by changing the environment (scaffolding) rather than the agent. Finally, in Section 4.5 we show how SocialAI environments can be easily transformed to pure Textworlds to study large language models as interactive agents. Five additional case studies are briefly outlined in Section 4.6 and presented in detail in the Supplementary material. These regard linguistic communication, joint attention, meta imitation learning, inferring the other's field of view, and formats (pragmatic frames). The Supplementary material also presents a pilot study, which was used to outline the most promising agent for all case-studies.

4.1 Baselines

In all of our case studies, except the study with LLMs (Section 4.5), we use a PPO (Schulman et al., 2017) reinforcement learning agent as depicted in Figure 3. The multimodal observation space consists of a 7 × 7 × 6 tensor (vision) and the full dialogue history (language). The multimodal action space consists of 6 primitive actions (no_op, turn left, turn right, go forward, toggle, and done), and a 4 × 16 templated language. The architecture of the agent is taken from Hui et al. (2020) and adapted for the multimodal action space with an additional output head (see Supplementary material for details). This additional head consists of three outputs: a binary output indicating if the agent will speak, and outputs for the template and the word to use.

In a set of pilot experiments (see Supplementary material) we proposed two count-based exploration bonuses, which we compared to other exploration bonuses including RND (Burda et al., 2018) and RIDE (Raileanu and Rocktäschel, 2020). The visual count-based exploration bonus (“PPO-CB”) performed best on the tasks in which language is not used, and its linguistic variant (“PPO-CBL”) performed best in environments with the peer giving linguistic cues. We therefore used those two agents in our case studies. Both exploration bonuses are episodic. They estimate the diversity of observations in an episode and give reward proportional to that diversity. The linguistic exploration bonus uses the number of different words observed, and the vision-based exploration bonus uses the number of different encodings observed. In the case studies in Sections 4.2 and 4.3 we use the “PPO-CB” exploration bonus. The case study in Section 4.4 uses a raw PPO agent, and the one in Section 4.5 uses LLMs as agents.
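
To make the idea of an episodic count-based bonus concrete, here is a minimal Python sketch. It is not the formulation used for PPO-CB or PPO-CBL, which is given in the Supplementary material; the class name `EpisodicCountBonus`, the `scale` parameter, and the choice to pay a fixed bonus for each item seen for the first time in the current episode are assumptions. Under these assumptions, the total bonus collected in an episode is proportional to the number of distinct items observed.

```python
class EpisodicCountBonus:
    """Reward novelty within an episode (illustrative sketch).

    Items can be words of the peer's utterance (linguistic variant) or
    hashable encodings of visual observations (visual variant).
    """

    def __init__(self, scale=1.0):
        self.scale = scale  # hypothetical bonus per newly seen item
        self.seen = set()

    def reset(self):
        """Forget everything at the start of a new episode."""
        self.seen.clear()

    def step(self, items):
        """Return the bonus for the items observed at this step."""
        new_items = set(items) - self.seen
        self.seen |= new_items
        return self.scale * len(new_items)


if __name__ == "__main__":
    bonus = EpisodicCountBonus(scale=0.5)
    print(bonus.step("Hot Hot Cold".split()))  # two distinct new words
    print(bonus.step(["Cold"]))                # nothing new in this episode
    bonus.reset()
    print(bonus.step(["Cold"]))                # new again after the reset
```

For the visual variant, an observation would first need to be turned into a hashable encoding (for example a tuple or a byte string) before being passed to `step`.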

4.2 Understanding the pointing gesture

This experiment is motivated by a study of children's ability to understand pointing gestures (Behne et al., 2005), discussed in Section 2.1.2. We study if an RL agent (with a visual count-based exploration bonus) can infer the meaning of a pointing gesture, and generalize this ability to new situations (infer the new meaning of a pointing gesture in a new context). This kind of generalization is relevant because the power of understanding pointing gestures lies in being able to infer their meaning for new referents in new social contexts. We show how the SocialAI school can be used to evaluate the presence of generalizable social skills in artificial agents.

The environment consists of two objects (e.g. boxes) and the peer that points to the correct object. The agent then has to interact with that object (e.g. open the box) to get access to an apple. The agent is trained on five problems, each with different objects (Boxes, Switches, Levers, Marble, Generators), and on the asocial version of the Doors problem (only one door and no peer). Training on the asocial version enables the agent to learn how to use a door, which is a prerequisite for generalization of the pointing gesture to an environment with two doors. The agent is evaluated on the Doors problem in the social setting (two doors and a peer pointing to the correct one). The agent needs to combine the knowledge of how to use a door (learned on the asocial version of that problem) with inferring the meaning of the pointing gesture (learned on the other five problems), and generalize that to a new scenario where the peer points to a door. To succeed, it needs to pragmatically infer the intended meaning of the point (a socially recursive inference).

Figure 8 shows the success rate of the agent on the training environments [“PPO_CB(train)”] and on the evaluation environment [“PPO_CB(test)”]. We can see that while the agent easily solves the training environments (with a success rate of 95.2%), it fails to generalize to the testing environment (it reaches a success rate of 45.2%, which corresponds to randomly guessing the correct object). These results demonstrate that, while the agent can learn to infer the meaning of a pointing gesture in a familiar context, it cannot generalize to new social contexts. These results motivate future research on how an agent can be endowed with abilities for such combinatorial generalization. Given the recent advances in modeling social interactions with LLMs (Park et al., 2023), LLM-based agents constitute a potential solution.

Figure 8. The Pointing experiments. Is an RL agent able to infer the meaning of a pointing gesture in a new context? The figure compares the success rate (mean ± SD over eight seeds) on the training environments with the evaluation on the testing environment. The cross marks depict statistical significance (p = 0.05). The agent achieves high performance on the training environments, but it is not able to infer the meaning of a pointing gesture in a new context.

The Supplementary material presents two similar experiments in which the peer provides linguistic cues for the color and for the proximity of the correct object (instead of pointing). Similarly, we observe that, while the PPO agents master the training environments, they fail to generalize to a new context.

4.3 Role reversal imitation

In this experiment, we study the role-reversal capabilities of an RL agent: to what extent can it learn about the partner's role from playing its own? In doing so, we also show how SocialAI can be used to adapt a cognitive science methodology for AI to recreate the experiment. In Fletcher et al. (2012) apes and children were trained on one role (role B), and then tested on how long it took them to master the opposite role (role A). Results showed that children, but not apes, master role A faster than the control group (not pretrained). These results imply that children learn about the opposite role just from playing their own, i.e. they see the interaction from a bird's eye perspective. We study the following two questions: (1) How much do RL agents learn about the partner's role during a collaborative activity? (2) Does increasing the training diversity (training on more tasks in both roles) enable the agent to learn more about the partner's role?

We conduct this study on the MarblePass task. This task consists of two roles: one participant pushes the marble to the right side of the environment (role A), from wh
