In the context of rapid advancements in information technology, motion action recognition has emerged as a pivotal research area within computer vision and artificial intelligence (Kong and Fu, 2022). This technology is receiving heightened attention due to its critical role in enhancing the accuracy and intelligence of applications such as intelligent surveillance systems, medical rehabilitation, sports analysis, and human-computer interaction (Sun et al., 2022). The ability to automatically recognize and analyze human actions enables significant labor cost reductions and improves the speed and precision of system responses, thus contributing to smarter and more efficient solutions. Motion action recognition research, therefore, is not only a natural progression in technological innovation but also addresses pressing real-world application challenges (Elharrouss et al., 2021).

Traditional approaches to action recognition, including symbolic AI and knowledge-based methods, rely heavily on predefined rules and logical reasoning. These methods offer certain advantages, such as low computational requirements and straightforward implementation, and perform adequately in structured environments with well-defined rules. For example, momentum-based methods analyze motion changes by computing object speed and direction, making them useful for video surveillance and motion tracking (Rao et al., 2021). Gradient-based methods, which analyze brightness and color changes within video frames, can capture subtle motion nuances effectively (Xiao et al., 2022), while logistic regression provides a simple statistical approach to classify basic actions (Sun et al., 2022). Despite these advantages, such traditional methods are sensitive to noise and struggle to capture complex, nonlinear motion patterns, limiting their applicability in dynamic environments with large-scale, diverse data.

In recent years, machine learning-based algorithms have gained popularity for motion action recognition as they allow for automatic feature extraction from large datasets, improving both generalization and recognition accuracy. Methods such as Principal Component Analysis (PCA) are used for dimensionality reduction, extracting representative features to enhance efficiency and accuracy (Shiripova et al., 2020). Ensemble models like Random Forests employ multiple decision trees with a voting mechanism, enhancing stability and resilience against noise (Langroodi et al., 2021). Multi-Layer Perceptrons (MLPs) utilize nonlinear mapping across multiple layers, effectively recognizing complex actions through higher classification precision. However, these methods require substantial computational resources and heavily rely on large annotated datasets, which pose challenges in practical, resource-constrained applications.

To handle the limitations of statistical and machine learning techniques with high-dimensional time-series data, deep learning-based algorithms have become central to motion action recognition. These methods achieve superior recognition by learning hierarchical features from data, handling multimodal inputs, and managing complex temporal dependencies. Convolutional Neural Networks (CNNs) excel at learning spatial features in video frames and capturing local motion features (Chen et al., 2021). Recurrent Neural Networks (RNNs) and Long Short-Term Memory (LSTM) networks are effective in handling temporal dynamics by incorporating memory units for long-term dependencies (Majd and Safabakhsh, 2020). More recently, attention mechanisms in Transformer models have significantly improved action recognition, weighting key features to handle intricate action sequences (Liu et al., 2022). Although these methods excel in processing large-scale, complex datasets, they demand substantial computational resources, complex training processes, and access to extensive labeled data.

Existing deep learning methods face significant challenges in fine-grained action recognition, particularly in accurately capturing subtle and intricate actions within multimodal data. Traditional models often struggle with the complexities of fusing multimodal information and lack the sensitivity to nuanced motion variations. To address these limitations, we propose a novel architecture, FGM-CLIP (Fine-Grained Motion CLIP), specifically designed to enhance the recognition of detailed actions within a multimodal framework. FGM-CLIP leverages the robust joint representation learning capabilities of Contrastive Language-Image Pretraining (CLIP) and extends this to video action recognition, capturing fine motion details through an end-to-end architecture. This innovative approach combines a CLIP-based feature extraction module, a fine-grained motion encoder, and a multimodal fusion layer to integrate both visual and motion features seamlessly. By jointly optimizing these features, FGM-CLIP achieves high precision in classifying complex actions, thus pushing the boundaries of fine-grained action recognition. Through this architecture, we aim to provide a more effective tool for detailed action analysis and set a foundation for future multimodal learning advancements.

• FGM-CLIP introduces a CLIP-based feature extraction module and combines it with a fine-grained action encoder to innovatively enhance the capability to capture complex action details.

• This method demonstrates efficiency and versatility across multiple scenarios, accurately identifying fine-grained actions in various video datasets with strong adaptability.

• Experimental results indicate that FGM-CLIP significantly improves classification accuracy across several action recognition benchmark tests, showing exceptional performance particularly in fine-grained action classification tasks.

2 Related work 2.1 Action recognitionAction recognition has emerged as a crucial area of research within computer vision, primarily fueled by the exponential growth of video data across various platforms. Early techniques for action recognition were predominantly based on manually designed feature extraction methods, such as optical flow and trajectory-based approaches (Li Q. et al., 2024). While these methods were somewhat effective for simpler action scenarios, they faced significant challenges when dealing with complex and nuanced action sequences, where subtle differences and contextual information are vital for accurate classification. The introduction of deep learning has brought about a transformative shift in action recognition, with Convolutional Neural Networks (CNNs) and Recurrent Neural Networks (RNNs) becoming prominent tools in this domain. CNNs are adept at extracting rich spatial features from individual video frames, capturing essential visual elements that characterize actions. Meanwhile, RNNs, particularly Long Short-Term Memory (LSTM) networks, are designed to model temporal sequences, enabling the capture of dynamic aspects inherent in video content (Wang et al., 2019b). The integration of spatial and temporal data through these architectures has led to significant improvements in action recognition accuracy. Despite these advancements, traditional deep learning methods still encounter limitations when processing long sequences, handling complex backgrounds, and discerning subtle differences in actions. To address these challenges, researchers have turned to multimodal learning techniques that integrate various modalities–such as visual, motion, audio, and textual information–enhancing model performance by leveraging complementary data sources (Wang et al., 2019b). This approach allows for a more holistic understanding of actions, improving recognition capabilities in diverse contexts. Additionally, the rise of Transformer-based models in action recognition has opened new avenues for exploration. Transformers excel in capturing long-range dependencies through self-attention mechanisms, allowing models to focus on relevant parts of the input sequence while considering the overall context (Li M. et al., 2024). This capability is particularly beneficial for recognizing complex actions that unfold over extended periods, as it enables the model to retain critical information from earlier frames that may influence later actions. Overall, the ongoing evolution in action recognition methodologies, fueled by deep learning and multimodal integration, is paving the way for more accurate and robust systems capable of understanding intricate human activities in dynamic environments.

2.2 Action recognition with CLIP modelsContrastive Language-Image Pre-training (CLIP) models have shown remarkable potential in bridging the gap between visual and textual data, creating unified feature representations that facilitate zero-shot learning and robust cross-modal understanding (Lin et al., 2022). In the context of action recognition, recent studies have adapted CLIP's joint embedding capabilities to video tasks, aiming to leverage both visual cues from frames and semantic cues from textual descriptions (Fishel and Loeb, 2012). However, applying CLIP to action recognition introduces unique challenges. First, video data encompasses complex temporal dependencies that are not naturally suited to the static image-text pairs on which CLIP was originally trained (Wang et al., 2019a). Researchers have attempted to address this by fine-tuning CLIP for video action recognition or integrating it with temporal models, such as LSTMs and Transformers, to better capture the sequential nature of actions. Despite these efforts, capturing fine-grained motion details and ensuring temporal alignment between frames remain open challenges. Furthermore, CLIP's sensitivity to nuanced action variations is limited, which can impact its performance on fine-grained action recognition tasks where subtle differences are crucial (Liu et al., 2025).

2.3 Challenges in end-to-end learning for action recognitionEnd-to-end learning has emerged as a powerful approach in action recognition, offering the advantage of optimizing all components of the model simultaneously for a cohesive representation of both visual and motion cues. Yet, this approach is not without limitations (Sverrisson et al., 2021). For instance, directly applying end-to-end learning to multimodal data often results in inefficiencies in capturing the distinct dynamics of each modality. In action recognition, temporal dynamics play a critical role, requiring architectures that can manage not only visual feature extraction but also the temporal evolution of those features across frames (Wang et al., 2024). Traditional end-to-end models, such as CNN-LSTM or CNN-Transformer hybrids, often struggle to achieve this balance effectively, especially in the context of complex, fine-grained actions (Wang et al., 2016). Furthermore, the integration of domain-specific priors, such as known motion patterns or contextual information from the scene, into an end-to-end framework remains a challenging area. Without such priors, end-to-end models can overfit to spurious features in training data, limiting their ability to generalize to new environments or action types. To address these issues, recent approaches have incorporated multimodal fusion layers, domain adaptation techniques, and regularization methods to enhance model robustness and adaptability in diverse action recognition scenarios.

3 Methodology 3.1 Overview of our networkIn this work, we introduce a novel architecture named FGM-CLIP (Fine-Grained Motion CLIP), specifically designed to enhance the recognition of fine-grained actions within a multimodal framework. The architecture effectively leverages the capabilities of Contrastive Language-Image Pre-training (CLIP) and integrates it with a fine-tuned motion recognition mechanism, addressing the prevalent challenges associated with detailed motion analysis in video data. By operating in an end-to-end manner, FGM-CLIP allows for the joint optimization of visual and motion features, enabling the model to capture subtle variations in actions that are critical for accurate recognition. The architecture comprises three main components: a CLIP-based feature extraction module, a fine-grained motion encoder, and a multimodal fusion layer. The CLIP-based feature extraction module utilizes the powerful capabilities of CLIP, which has been trained on a large corpus of image-text pairs. This module extracts rich visual features from the input video frames while simultaneously generating contextual textual representations. The ability of CLIP to understand both visual and linguistic information significantly enhances the model's performance, allowing it to leverage the semantic richness of textual descriptions during action recognition.

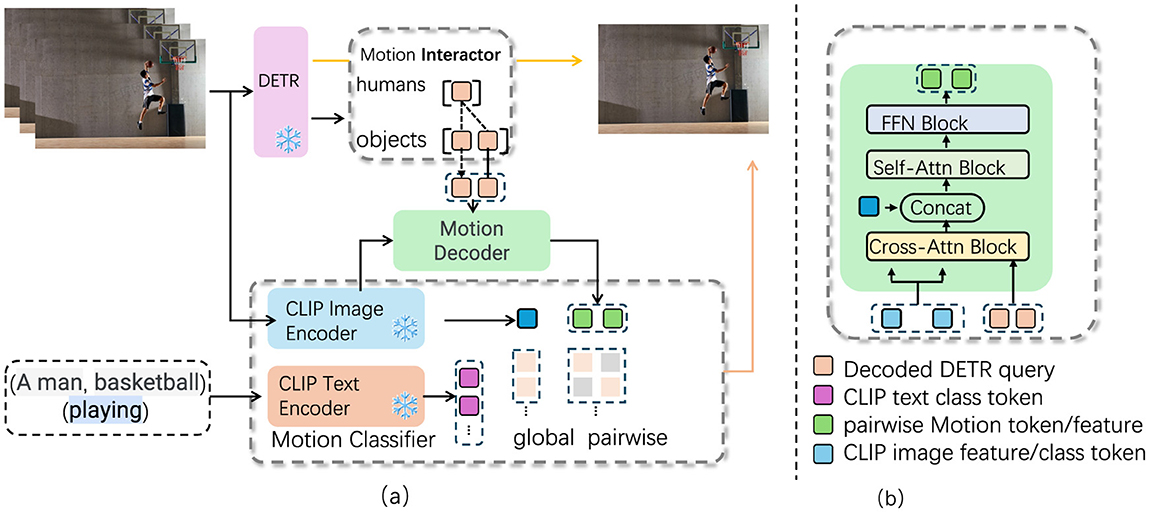

Following feature extraction, the fine-grained motion encoder processes the motion data extracted from the video. This component is specifically designed to capture intricate details of the motion sequences, enabling the model to analyze temporal dynamics effectively. By focusing on the temporal aspect of the actions, the motion encoder ensures that even the slightest variations in movement are accounted for, which is essential when differentiating between actions that may appear visually similar but differ in subtle temporal dynamics. The final component, the multimodal fusion layer, synergizes the extracted visual and motion features. This layer combines information from both modalities, allowing the model to take advantage of the complementary strengths of visual and motion data. The fusion of these features enhances the model's ability to make precise action classifications, as it can consider both the visual appearance and the motion dynamics of the actions simultaneously. The primary innovation of our approach lies in the seamless integration of CLIP with motion-based analysis, which facilitates a comprehensive understanding of fine-grained actions. This integration not only harnesses the extensive visual and textual knowledge encoded in CLIP but also enables fine-tuning on the specific nuances of motion data. Consequently, FGM-CLIP is particularly relevant for tasks requiring the differentiation of actions that share visual similarities yet exhibit distinct temporal characteristics (as shown in Figure 1).

Figure 1. FGM-CLIP architecture illustration, showing the detailed processing flow for fine-grained action recognition. (a) The system begins with the DETR model identifying objects and humans in video frames, followed by motion feature extraction using the Motion Interactor and Motion Decoder. CLIP's Image and Text Encoders generate both visual and textual representations, which are then processed by the Motion Classifier to produce global and pairwise motion tokens. (b) The Motion Decoder integrates these features using cross-attention, self-attention, and feed-forward blocks, resulting in an enriched representation for precise action classification.

In our model, the text encoder is designed to process descriptive textual data related to each action, rather than just simple action labels. Specifically, “text” refers to carefully crafted descriptions that capture the context and finer details of each action. For instance, instead of using a basic label like “running,” the text input may consist of phrases such as “an athlete running on a track” or “a person sprinting across a field,” which provide richer semantic information that aligns closely with the action being performed. These descriptions are derived from existing datasets or manually created to align with the nuances of each action class, ensuring that the text captures detailed aspects of the motion and context. The text encoder, based on CLIP's pretrained language model, encodes these descriptive texts into a feature space that aligns with the visual embeddings generated by the image encoder. During training, we leverage CLIP's multimodal embedding space to map both visual and textual representations onto a shared space, enabling the model to associate nuanced textual descriptions with corresponding visual cues. This integration allows the model to utilize semantic information from the text to enhance its ability to distinguish fine-grained actions that may look visually similar but differ in context or subtle motion characteristics.

In the following sections, we describe the architecture and components of FGM-CLIP in detail. In Section 3.2, we introduce the mathematical formulation of the problem, outlining the key challenges and objectives. Section 3.3 details the novel architecture of FGM-CLIP, emphasizing the design choices that enable fine-grained action recognition. Finally, in Section 3.4, we discuss the strategies employed to integrate domain-specific priors into the model, enhancing its ability to generalize across diverse datasets.

3.2 PreliminariesThe task of fine-grained motion action recognition involves identifying and classifying subtle and often nuanced movements within video sequences. Formally, let V= represent a set of N video clips, where each video clip vi consists of a sequence of frames , and T denotes the number of frames in the clip. Each video clip vi is associated with a ground-truth label yi∈C, where C is the set of possible action categories. Given this setup, the objective is to learn a function F:V→C that maps each video clip vi to its corresponding action label yi. This mapping function F is parameterized by a deep neural network, specifically designed to handle the multimodal nature of video data, which includes both visual features and temporal motion cues. To achieve this, we utilize a contrastive learning-based approach, inspired by the CLIP model, where a large-scale pre-trained network is fine-tuned on the target dataset for action recognition. The network F can be decomposed into three main components: a visual encoder Ev, a motion encoder Em, and a fusion module G. The visual encoder Ev extracts spatial features from individual frames, while the motion encoder Em captures temporal dynamics from the sequence of frames. The fusion module G combines these features to generate a final representation, which is then used for classification. The learning process involves minimizing a loss function L that captures the discrepancy between the predicted labels and the ground-truth labels. Specifically, we define the loss as follows:

L(θ)=-1N∑i=1Nlogp(yi|vi;θ) (1)where θ denotes the parameters of the network F, and p(yi|vi; θ) is the probability assigned to the correct label yi by the model.

In addition to this classification loss, we incorporate a contrastive loss to align the visual and motion representations. Let Vt and Mt denote the visual and motion feature spaces, respectively, at time step t. The contrastive loss is defined as:

Lcontrastive(θ)=1T∑t=1T(||Ev(fit;θv)-Em(fit;θm)||2) (2)where θv and θm represent the parameters of the visual and motion encoders, respectively. This loss ensures that the visual and motion features are closely aligned, enabling the network to effectively capture the fine-grained nuances of actions.

Finally, the overall loss function used to train the network is a weighted sum of the classification loss and the contrastive loss:

Ltotal(θ)=L(θ)+λLcontrastive(θ) (3)where λ is a hyperparameter that balances the two loss components. The training process optimizes this total loss to learn the parameters θ that best distinguish between different fine-grained actions in the video data. This formulation sets the stage for the detailed exploration of the model architecture and strategies employed to achieve robust fine-grained action recognition, which are presented in the subsequent sections.

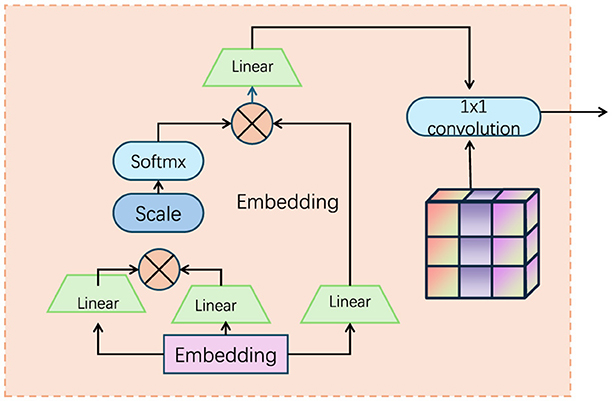

3.3 Motion-visual synergy moduleTo effectively capture and integrate the intricate dynamics of fine-grained actions, we introduce the Motion-Visual Synergy Module (MVSM) as the core component of the FGM-CLIP architecture. The MVSM is designed to synergize the spatial and temporal information extracted by the visual and motion encoders, respectively, ensuring that the final action representations are both comprehensive and discriminative (as shown in Figure 2).

Figure 2. Diagram of the Motion-Visual Synergy Module (MVSM), illustrating the integration of spatial and temporal features extracted by visual and motion encoders. The module leverages embedding layers, scaling, and linear transformations to combine dynamic and static information, with a 1 × 1 convolution layer as the final step to produce comprehensive action representations.

3.3.1 Feature extractionIn the first stage, we aim to extract meaningful features from both the visual and motion information contained in the video data. This process leverages two specialized encoders: the visual encoder Ev for capturing spatial features from individual frames, and the motion encoder Em for extracting temporal features based on motion dynamics. The visual encoder Ev processes each frame fit from the video clip vi, producing a set of spatial features vit. These spatial features encapsulate the visual content present in each frame, such as object appearances and scene layouts. Mathematically, the spatial features are computed as follows:

vit=Ev(fit;θv) (4)Here, fit represents the t-th frame from video clip vi, and θv denotes the parameters of the visual encoder Ev, which is typically a deep convolutional neural network (CNN) pre-trained on large-scale image datasets.

Simultaneously, the motion encoder Em processes temporal information by analyzing the motion between consecutive frames. The motion information can be derived from optical flow or other motion cues that capture the pixel-wise displacement between two consecutive frames, fit and fi(t−1). The temporal features mit, which encode the dynamic aspects of the video, are computed as:

mit=Em(OpticalFlow(fit,fi(t-1));θm) (5)In this equation, OpticalFlow(fit, fi(t−1)) represents the optical flow calculated between the current frame fit and the previous frame fi(t−1), while θm denotes the parameters of the motion encoder Em, which can be designed using CNNs or recurrent networks like LSTMs to capture the temporal dependencies in the video data. By combining the spatial features vit and the temporal features mit, we obtain a comprehensive representation of both the static and dynamic elements in the video clip, which can then be used for subsequent stages of the video analysis task.

3.3.2 Temporal alignmentIn the second stage, we address the potential discrepancy in temporal resolution or synchronization between the visual and motion features. Since visual features vit and motion features mit may not be perfectly aligned in time, it is crucial to implement a temporal alignment mechanism to ensure that these features are synchronized. The goal is to adjust the motion features mit such that they are temporally aligned with the corresponding visual features vit, resulting in an aligned motion feature set m~it. The temporal alignment is represented mathematically as:

m~it=Align(mit,vit) (6)Here, m~it denotes the aligned motion features for frame t in the video clip vi, and the function Align(·, ·) represents the alignment process. Several methods can be employed for this alignment:

Dynamic Time Warping (DTW): This technique computes the optimal alignment between two sequences (visual and motion features) by stretching or compressing one sequence in time to match the other. It minimizes the differences in temporal evolution between the features.

m~it=DTW(mit,vit) (7)Attention-based Alignment: Another approach involves using attention mechanisms, which assign different weights to different temporal segments of the motion features mit based on their relevance to the visual features vit. This allows the model to focus on temporally relevant parts of the motion sequence that correspond to visual cues.

m~it=Attention(mit,vit) (8)The temporal alignment mechanism ensures that the temporal dynamics represented by the motion features are appropriately synchronized with the visual content, facilitating more effective joint processing in later stages of the model.

3.3.3 Multimodal fusionIn the final stage, the aligned visual and motion features are fused to form a unified representation for each frame, capturing both spatial and temporal information. This fusion is critical for integrating the complementary aspects of the visual content (from the visual encoder) and motion dynamics (from the motion encoder). We utilize a multimodal fusion strategy that involves concatenating the aligned visual and motion features, followed by passing them through a fully connected layer. The fused feature representation for each frame fit is given by:

hit=ReLU(Wf[vit;m~it]+bf) (9)In this equation: - Wf represents the weight matrix of the fully connected fusion layer, - bf is the bias vector, - [vit;m~it] denotes the concatenation of the spatial feature vit and the aligned motion feature m~it, - ReLU(·) is the activation function that introduces non-linearity.

The output hit is the fused feature vector for frame fit, encapsulating both the spatial and temporal attributes of the video at that particular time step. Once we have obtained fused features hit for each frame, the next step is to aggregate these frame-level features to create a single video-level representation hi. This is achieved by averaging the fused features across all frames in the video:

hi=1T∑t=1Thit (10)Here, T represents the total number of frames in the video, and hi is the final aggregated video-level feature, which serves as a comprehensive representation of both the spatial content and temporal dynamics across the entire video. This video-level representation hi is then passed to the classification layer for the final task, such as action recognition or video categorization.

3.4 Domain-specific prior integrationTo improve the generalization of the FGM-CLIP model across different datasets and action categories, we incorporate domain-specific priors into the learning process. These priors, derived from existing knowledge about actions, motion patterns, and contextual information, allow the model to focus on relevant features and reduce overfitting to training data.

3.4.1 Motion pattern priorsMany actions exhibit distinct motion patterns that remain consistent across instances. For example, a “golf swing” involves a smooth, continuous motion with a specific trajectory, while a “jump” is characterized by a rapid upward movement followed by descent. To incorporate these motion pattern priors, we introduce a regularization term in the loss function that penalizes deviations from expected motion trajectories:

Lmotion(θ)=1N∑i=1N∑t=1T||mit-m^it||2 (11)Here, mit represents the motion feature at time t extracted by the motion encoder, and m^it denotes the expected motion pattern for the action category of video vi. This term encourages the model to learn motion features that align with known patterns, improving action recognition based on motion cues.

3.4.2 Contextual priorsActions usually occur in specific environments that provide contextual clues for recognition. For instance, a “swimming” action is likely to happen in a water setting, while “running” is commonly seen outdoors. We incorporate contextual priors by embedding scene recognition models into the pipeline, which analyze the background and generate context features cit for each frame:

cit=Ec(fit;θc) (12)These context features are fused with the motion and visual features during multimodal fusion, enabling the model to better differentiate between actions that may appear visually similar but occur in different environments.

3.4.3 Clustering-based priorsFine-grained actions often show intra-class variability but maintain consistent features within categories. To account for this, we integrate clustering algorithms into the training process, allowing the model to identify and reinforce common sub-patterns within action categories. Specifically, the feature vectors hit are grouped into clusters Ck:

Ck=Cluster(i=1N) (13)These clusters guide the model to refine its representations by encouraging features within the same cluster to be similar while pushing apart features from different clusters. This is enforced through a clustering regularization term in the loss function:

Lcluster(θ)=1N∑k∑i∈Ck∑j∈Ck||hiti-hitj||2-∑l∉Ck||hiti-hitl||2 (14)This clustering approach enhances the model's ability to learn more discriminative representations by reinforcing intra-cluster similarities and increasing inter-cluster distinctions.

3.4.4 End-to-end learningTo ensure the effective integration of domain-specific priors–such as motion patterns, contextual information, and clustering-based insights–the FGM-CLIP model is trained using an end-to-end learning framework. This approach enables the model to jointly optimize all components, allowing the priors to influence the learning process throughout. By adopting this strategy, the model captures not only the basic features but also the deeper, domain-relevant patterns, which ultimately enhance its generalization capability across diverse datasets and action categories. The overall loss function combines several key components: the primary task loss L

留言 (0)