Unmanned Aerial Vehicles (UAVs) have become increasingly prominent in tasks such as autonomous navigation and object detection, owing to their wide range of applications in both civilian and military domains. UAVs not only perform aerial surveillance and reconnaissance efficiently but also play a pivotal role in search and rescue missions, environmental monitoring, and agricultural operations (Wang et al., 2024). However, UAV navigation and object detection remain challenging in dynamic environments, where changes in lighting, weather, and terrain can degrade performance (Nicora et al., 2021). Moreover, detecting and tracking objects in real time is crucial for the safe and effective deployment of UAVs in real-world scenarios. These tasks demand not only high accuracy and robustness but also computational efficiency, which makes more sophisticated methods necessary.

Early work on UAV navigation and object detection explored symbolic AI and knowledge-based methods (Evjemo et al., 2020). These methods relied on predefined rules and symbolic representations of the world, allowing UAVs to reason about their environment and plan paths accordingly. A key strength of symbolic AI was its interpretability, since human knowledge could be incorporated directly into the system. This transparency made the decision-making process easier to understand and analyze, which was particularly valuable in scenarios that required clear explanations of the system's behavior. For instance, in search and rescue operations, being able to explain a UAV's decision logic helps human operators understand its actions and make appropriate adjustments. Symbolic AI therefore provided a direct pathway for integrating human knowledge into UAV decision-making. Despite this advantage in interpretability, however, it faced substantial limitations. The first was rigidity: because these systems relied on explicit representations and manually defined rules, they struggled to cope with the variability and uncertainty inherent in real-world environments (Tao et al., 2020). This was especially problematic in unpredictable scenarios where adaptability was crucial; in complex or constantly changing environments, predefined rules often failed to adjust the system's behavior, leading to suboptimal navigation and object detection. Second, as task complexity and data volume grew, the limited scalability of symbolic AI became increasingly apparent. These systems were ill-suited to large-scale, dynamic environments, where the number of variables and the frequency of environmental changes can overwhelm rule-based approaches. Finally, maintaining and updating such systems required extensive human intervention, as new rules had to be manually defined and adjusted whenever the environment changed. As a result, symbolic AI became less practical for real-time, large-scale operations and was gradually phased out in favor of more adaptable, data-driven approaches that could learn and generalize more effectively in complex environments.

In response to the limitations of knowledge-based methods, the research community began shifting toward data-driven and machine learning approaches (Wang X. et al., 2023). Machine learning techniques, especially those rooted in statistical models, allowed UAVs to identify patterns from data without the need for explicitly programmed rules (Arinez et al., 2020). This shift represented a significant improvement in the flexibility and adaptability of UAVs, particularly in dynamic environments. For example, Support Vector Machines (SVMs) (Bazi and Melgani, 2018) and Random Forests (Deng et al., 2024) were widely adopted for object detection tasks, while reinforcement learning models (Xi et al., 2024) allowed UAVs to learn from experience through trial and error. The ability to improve performance based on experience was particularly useful in uncertain environments, where fixed rule-based systems would otherwise struggle to adapt. Despite the potential of these machine learning methods, they were still constrained by certain limitations. A key challenge was the reliance on hand-crafted features, which required human intervention to extract relevant information from raw data. This need for feature engineering increased the complexity of developing such systems and limited their autonomy. Additionally, machine learning models often required large labeled datasets, which posed a challenge in scenarios where data was scarce or difficult to label (Sampieri et al., 2022). For instance, in hazardous or highly complex environments, acquiring enough high-quality labeled data might be infeasible, limiting the practical application of these models. As a result, while machine learning approaches were more flexible than symbolic AI, their dependence on feature engineering and labeled data revealed their limitations in handling more complex tasks autonomously.

As deep learning gained traction, the focus shifted toward neural network-based approaches and pre-trained models. Deep learning fundamentally transformed UAV navigation and object detection by enabling the automatic extraction of complex, high-dimensional features directly from raw data. Convolutional Neural Networks (CNNs) (Barrios et al., 2024) and Recurrent Neural Networks (RNNs) (Jiandong et al., 2024) became standard tools for these tasks, bringing notable improvements in accuracy and adaptability. CNNs excelled at image-based object detection, while RNNs proved effective on sequential data for tasks such as time-series prediction and trajectory planning. More recently, Transformer-based architectures (Ma Z. et al., 2024) have shown even greater potential in complex scenarios. Originally developed for natural language processing, these models perform well on a range of vision tasks because they capture long-range dependencies and contextual information. Another major advance was the introduction of pre-trained models, particularly those trained on large datasets such as ImageNet. Through transfer learning, these models generalize effectively with much less task-specific data. For UAVs, this approach proved valuable where large labeled datasets were difficult to obtain: by fine-tuning pre-trained models on smaller, domain-specific datasets, UAV systems could achieve high performance without extensive data collection. Despite these advances, deep learning methods carry considerable computational costs. Neural networks, particularly deep models with many layers, typically require substantial processing power and memory. This poses challenges for real-time UAV operation in resource-constrained settings, where onboard processing capabilities may be limited. In addition, the black-box nature of neural networks has raised concerns about interpretability. In safety-critical applications, such as autonomous navigation in crowded airspace, it is crucial to understand the decision-making process, and the lack of transparency in neural network models makes their outputs difficult to trust, especially when errors or unexpected behavior occur. Consequently, while deep learning has revolutionized UAV navigation and object detection, challenges remain in computational efficiency and interpretability, particularly where transparency is critical.

To overcome the limitations of previous methods, we introduce NavBLIP, a novel multimodal approach designed to enhance UAV navigation and object detection by integrating visual and contextual information. NavBLIP is engineered for real-time performance, providing computational efficiency without sacrificing accuracy or adaptability in dynamic environments. A key feature of NavBLIP is its use of transfer learning, which lets the model leverage knowledge from pre-trained networks and significantly reduces the need for large, task-specific datasets. In addition, NavBLIP incorporates a Nuisance-Invariant Multimodal Feature Extraction (NIMFE) module, which disentangles task-relevant features from noisy or complex inputs. This allows the system to generalize across a variety of environments and scenarios, making it more robust in real-world applications. By combining visual data with contextual information and optimizing feature selection in real time, NavBLIP maintains high performance even in unpredictable conditions. Through this approach, we address the challenges of both scalability and efficiency, offering a more versatile solution for UAV navigation and object detection. The main contributions of this work are as follows:

• NavBLIP introduces a new module, NIMFE, which disentangles relevant features from nuisance variables, ensuring more robust performance in diverse operational conditions.

• The method excels in adaptability, enabling UAVs to perform well across multiple scenarios and conditions while maintaining computational efficiency, making it suitable for real-time applications.

• Experimental results demonstrate that NavBLIP outperforms state-of-the-art models in terms of accuracy, recall, and computational efficiency, especially on benchmark datasets such as RefCOCO and OpenImages.

2 Related work

2.1 Multimodal learning for UAV systems

Multimodal learning has recently gained significant traction as a means to enhance the capabilities of Unmanned Aerial Vehicles (UAVs) in tasks such as navigation and object detection. Traditional UAV systems typically rely on unimodal inputs, such as RGB images or sensor data, which can limit their decision-making, particularly in complex and dynamic environments (Chen et al., 2024). This limitation has driven a shift toward integrating multiple data modalities. By combining different types of data, such as visual information with text, metadata, or sensor readings, UAVs can make more informed, context-aware decisions across a wider range of conditions. For instance, VisualBERT (Tong et al., 2024) demonstrates the advantages of multimodal learning by combining visual and textual inputs to improve object recognition. In UAV operations, multimodal learning can fuse visual data with GPS coordinates, environmental metadata, and other contextual information to improve the accuracy of navigation and object detection. Despite these potential benefits, existing multimodal methods often suffer from modality collapse, in which one modality is overemphasized while others are underutilized. This imbalance can degrade performance, especially in scenarios that require comprehensive integration of diverse data sources. To overcome these challenges, we introduce the Nuisance-Invariant Multimodal Feature Extraction (NIMFE) module, which disentangles task-relevant features from nuisance factors to improve the robustness and adaptability of UAVs in varied operational environments (Zacksenhouse, 2011). By isolating the relevant information in each modality, NIMFE helps UAVs make more reliable decisions even in unpredictable conditions.
NavBLIP expands on this idea by dynamically adjusting the weighting of each modality based on the specific environmental context. This allows for a more balanced and effective integration of all available data streams, ensuring that no single modality dominates the decision-making process. The dynamic adjustment of modalities enables UAVs to optimize their performance in real-time, leveraging the strengths of each input source according to the needs of the task at hand. This leads to improved decision-making capabilities, making NavBLIP particularly suited for real-time UAV operations, where flexibility, robustness, and computational efficiency are essential.
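One simple way to picture context-dependent modality weighting is a softmax gate: each modality receives a score computed from a context vector, the scores are normalized, and the modality features are fused as a convex combination. The sketch below is a minimal illustration under our own assumptions (a linear scoring function, equally sized feature vectors, and the hypothetical names `gate_weights` and `fuse_modalities`); this section does not specify NavBLIP's actual weighting mechanism.

```python
import math
from typing import Dict, List

Vector = List[float]


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def gate_weights(context: Vector, gate_params: Dict[str, Vector]) -> Dict[str, float]:
    """Hypothetical gate: score each modality linearly from the context, then normalize."""
    names = list(gate_params)
    raw = [sum(w * c for w, c in zip(gate_params[n], context)) for n in names]
    return dict(zip(names, softmax(raw)))


def fuse_modalities(features: Dict[str, Vector], weights: Dict[str, float]) -> Vector:
    """Convex combination of equally sized modality feature vectors."""
    dim = len(next(iter(features.values())))
    fused = [0.0] * dim
    for name, vec in features.items():
        for k in range(dim):
            fused[k] += weights[name] * vec[k]
    return fused


# Illustrative values only: the context might encode normalized altitude and visibility.
context = [0.9, 0.2]
gate_params = {"image": [0.5, 2.0], "metadata": [1.5, 0.0], "text": [0.0, 0.5]}
features = {"image": [1.0, 0.0], "metadata": [0.0, 1.0], "text": [0.5, 0.5]}
weights = gate_weights(context, gate_params)
fused = fuse_modalities(features, weights)
```

Because the weights come from a softmax, every modality keeps a nonzero share unless its score is dominated by the others, which is one straightforward way to discourage a single input stream from taking over.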

2.2 Transfer learning for UAV adaptability

Transfer learning has become a crucial technique in machine learning, particularly for applications such as UAV navigation, where data collection is expensive or time-consuming (Zhang et al., 2023). Traditional UAV models typically require large, task-specific datasets and considerable computational resources for training, which makes it difficult for them to adapt quickly to new environments. Transfer learning addresses these challenges by allowing models to reuse knowledge from previously learned tasks and apply it to new, unseen tasks with minimal retraining (Zacksenhouse et al., 2010). In the UAV domain, models can be pre-trained on large-scale datasets such as ImageNet or OpenImages and then fine-tuned for specific tasks like object detection, terrain navigation, or obstacle avoidance. Techniques such as fine-tuning convolutional layers or freezing earlier layers during training have been shown to improve generalization, helping UAVs perform better in novel situations. However, most current transfer learning approaches for UAV systems either ignore multimodal data or are not optimized for real-time adaptation. NavBLIP advances the field by embedding transfer learning within a multimodal framework, allowing UAVs to adapt more effectively to new environments using both visual and contextual data. Our experimental results show that this approach reduces the need for extensive retraining while significantly improving accuracy and computational efficiency across a variety of environments (Ma F. et al., 2024). By integrating transfer learning with multimodal data, NavBLIP offers a more scalable and efficient solution for real-time UAV operations.
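The layer-freezing strategy mentioned above can be illustrated with a toy example: a fixed "pre-trained" feature extractor is left untouched, and only a small classification head is fitted to a handful of domain-specific samples. This is a sketch under our own assumptions (a linear-ReLU backbone, a logistic-regression head trained with plain SGD, and hypothetical function names and data); it is not NavBLIP's transfer-learning procedure.

```python
import math
from typing import List, Tuple

Vector = List[float]
Sample = Tuple[Vector, int]


def backbone(x: Vector, weights: List[Vector]) -> Vector:
    """Frozen 'pre-trained' extractor: a fixed linear map followed by ReLU."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in weights]


def sigmoid(t: float) -> float:
    return 1.0 / (1.0 + math.exp(-t))


def predict(x: Vector, backbone_w: List[Vector], head_w: Vector, head_b: float) -> float:
    """Probability of the positive class from frozen features plus the trained head."""
    feats = backbone(x, backbone_w)
    return sigmoid(sum(h * f for h, f in zip(head_w, feats)) + head_b)


def fine_tune_head(data: List[Sample], backbone_w: List[Vector],
                   lr: float = 0.5, epochs: int = 200) -> Tuple[Vector, float]:
    """Fit only a logistic-regression head; backbone_w is read but never updated."""
    head_w, head_b = [0.0] * len(backbone_w), 0.0
    for _ in range(epochs):
        for x, y in data:
            feats = backbone(x, backbone_w)
            p = sigmoid(sum(h * f for h, f in zip(head_w, feats)) + head_b)
            err = p - y  # gradient of binary cross-entropy w.r.t. the logit
            head_w = [h - lr * err * f for h, f in zip(head_w, feats)]
            head_b -= lr * err
    return head_w, head_b


# Hypothetical pre-trained weights and a tiny domain-specific dataset.
pretrained = [[1.0, 0.0], [0.0, 1.0]]
small_dataset = [([2.0, 0.1], 1), ([1.5, 0.2], 1), ([0.1, 2.0], 0), ([0.2, 1.5], 0)]
head_w, head_b = fine_tune_head(small_dataset, pretrained)
```

Only the head parameters receive updates, so the cost of adaptation scales with the head size rather than the backbone size, which is the practical appeal of freezing earlier layers.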

2.3 Computational efficiency in real-time UAV operations

Real-time processing is essential for UAVs, particularly in high-stakes applications such as search-and-rescue missions, environmental monitoring, and autonomous navigation. These scenarios demand fast, accurate decision-making, but as deep learning models grow more complex, balancing computational efficiency with high performance has become increasingly difficult (Qiu et al., 2023). Advanced models, such as Transformers and ResNet-based architectures, typically deliver superior accuracy because they can handle large-scale data and extract complex features. However, they carry significant computational overhead, which makes them less feasible for real-time UAV operations where processing power and energy are often limited (Zacksenhouse et al., 2014). Several optimization techniques have been explored to address this. Model pruning removes parameters, trimming the network with little loss in performance. Quantization lowers the numerical precision of weights and activations, reducing the computational load. Knowledge distillation trains smaller, simpler models to mimic larger ones, preserving much of their performance while being more resource-efficient (Liu et al., 2023). In addition, efficient architectures such as MobileNet and EfficientNet have been designed specifically for resource-constrained environments. Although these architectures substantially reduce resource consumption, they often trail larger models in accuracy, making them less suitable for applications requiring high precision (Zhang et al., 2024). NavBLIP takes a more balanced approach by integrating such efficiency-enhancing techniques into a multimodal framework, which lets the model maintain high performance without excessive resource consumption. Furthermore, NavBLIP leverages transfer learning to adapt quickly to new environments with minimal retraining, which both reduces computational cost and improves adaptability and speed, two properties crucial for real-time UAV operations. By combining multimodal learning with transfer learning, NavBLIP delivers robust performance across a variety of environments without compromising accuracy or efficiency (Zereen et al., 2024). This makes it well suited to dynamic, real-time UAV applications, where both adaptability and computational efficiency are paramount.
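To make two of the efficiency techniques above concrete, the sketch applies magnitude pruning and symmetric 8-bit quantization to a flat weight vector. It is a pure-Python illustration under our own assumptions (a single per-tensor scale, a fixed sparsity ratio, and the hypothetical helpers `magnitude_prune`, `quantize_int8`, and `dequantize`); the text does not state which pruning or quantization scheme NavBLIP uses.

```python
from typing import List, Tuple


def magnitude_prune(weights: List[float], sparsity: float) -> List[float]:
    """Zero the fraction `sparsity` of weights with the smallest absolute value."""
    n_prune = int(len(weights) * sparsity)
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:n_prune]:
        pruned[i] = 0.0
    return pruned


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Map 8-bit integers back to approximate float weights."""
    return [v * scale for v in q]


weights = [0.8, -0.05, 0.3, -1.27, 0.01, 0.6]
pruned = magnitude_prune(weights, sparsity=0.5)
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
```

With round-to-nearest, each restored weight differs from the original by at most half the quantization step `scale`, which is the precision traded for storing 8-bit integers instead of 32-bit floats.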

3 Methodology

3.1 Overview of our network

We introduce NavBLIP, a model designed to tackle the challenges that Unmanned Aerial Vehicles (UAVs) face in dynamic environments. At its core, NavBLIP integrates multiple data modalities, such as images, metadata, and text, for robust object detection and navigation. The model builds on pre-trained vision-language frameworks similar to the BLIP-Diffusion architecture and augments them with an object detection mechanism analogous to the NDFT framework. Combining these elements tailors NavBLIP to UAV operations, where environmental variables such as weather conditions, altitude changes, and viewing angles pose significant challenges. The architecture processes UAV-captured imagery together with the corresponding metadata (altitude, weather, and view angle) to generate disentangled feature representations. These representations are passed to two parallel modules, an object detection pipeline and a metadata-driven control system, whose outputs are coordinated. Through this multimodal interaction, NavBLIP improves the UAV's ability to detect objects while simultaneously adjusting its navigation based on real-time metadata.

The data flow in NavBLIP begins when a UAV captures images that are first passed through a feature extraction unit, which generates domain-specific representations. These are further split into distinct streams for object detection and control. The disentanglement of features ensures that challenging factors, such as environmental variability, are processed without compromising detection accuracy. This dual-branch architecture equips the UAV with the ability to handle complex environmental variations while maintaining consistent performance in object detection. In the following sections, we provide a detailed exploration of the model. Subsection 3.2 covers the preliminaries, offering formal definitions and problem formulation. Subsection 3.3 delves into the architectural innovations, presenting the mathematical foundations and specific modules of NavBLIP. Lastly, subsection 3.4 explores the integration of prior knowledge and environmental cues into the model, demonstrating how domain knowledge strengthens the UAV's adaptive capabilities. Through these sections, we aim to offer a comprehensive understanding of the novel aspects and the overall design of the NavBLIP system.
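The data flow just described, a shared feature extractor feeding a detection branch and a control branch in parallel, can be summarized as a small skeleton. All names and signatures here (`run_pipeline`, `PipelineOutput`, and the `extract`, `detect`, and `control` callables) are hypothetical placeholders; each callable stands in for a learned network whose internals are covered in the following subsections.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

Vector = List[float]
Metadata = Dict[str, float]
Box = Tuple[float, float, float, float]
Detection = Tuple[str, Box]


@dataclass
class PipelineOutput:
    detections: List[Detection]  # (class label, bounding box) pairs
    control: Vector              # navigation command, e.g. velocity setpoints


def run_pipeline(image: Vector, metadata: Metadata,
                 extract: Callable[[Vector, Metadata], Vector],
                 detect: Callable[[Vector], List[Detection]],
                 control: Callable[[Vector, Metadata], Vector]) -> PipelineOutput:
    """Shared feature extraction feeding two parallel branches."""
    z = extract(image, metadata)  # disentangled features shared by both branches
    return PipelineOutput(detections=detect(z), control=control(z, metadata))


# Toy stand-ins for the learned modules, for illustration only.
out = run_pipeline(
    image=[1.0, 2.0],
    metadata={"altitude": 50.0},
    extract=lambda x, m: [2.0 * v for v in x],
    detect=lambda z: [("car", (0.0, 0.0, z[0], z[1]))],
    control=lambda z, m: [sum(z), m["altitude"]],
)
```

Computing the features once and sharing them between branches is what keeps the dual-branch design cheaper than running two independent models.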

3.2 Preliminaries

To formalize the problem of UAV-based navigation and object detection, let us define the basic components involved. UAVs operate in complex, dynamic environments where the task is to detect objects from aerial imagery while simultaneously navigating through these environments. Let $X$ represent the set of UAV-captured images, and $Y$ represent the corresponding object labels. Our goal is to map each image $x \in X$ to its corresponding object class and bounding box coordinates $y \in Y$, while maintaining robustness to various nuisances such as altitude, weather, and view angles. We formalize the object detection task as a function $f_{\text{obj}}$, which, given an input image $x$, produces a detection result $f_{\text{obj}}(x) = \hat{y}$, where $\hat{y}$ includes both the predicted object class and bounding box. The detection performance can be evaluated by a loss function:

$$\mathcal{L}_{\text{obj}}\big(f_{\text{obj}}(x),\, y\big), \tag{1}$$

which typically involves a combination of classification loss and localization loss.
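As a concrete instance of Eq. (1), the sketch combines a softmax cross-entropy classification term with a smooth-L1 localization term for a single matched box. The choice of these two losses, the weight `loc_weight`, and the function names are our assumptions; the text only states that the detection loss typically combines classification and localization losses.

```python
import math
from typing import Sequence


def cross_entropy(logits: Sequence[float], target: int) -> float:
    """Softmax cross-entropy for one box, computed via log-sum-exp for stability."""
    peak = max(logits)
    log_norm = peak + math.log(sum(math.exp(v - peak) for v in logits))
    return log_norm - logits[target]


def smooth_l1(pred: Sequence[float], true: Sequence[float], beta: float = 1.0) -> float:
    """Smooth-L1 loss summed over box coordinates: quadratic below beta, linear above."""
    total = 0.0
    for p, t in zip(pred, true):
        d = abs(p - t)
        total += 0.5 * d * d / beta if d < beta else d - 0.5 * beta
    return total


def detection_loss(logits: Sequence[float], target: int,
                   pred_box: Sequence[float], true_box: Sequence[float],
                   loc_weight: float = 1.0) -> float:
    """Detection loss for one matched box: classification + weighted localization."""
    return cross_entropy(logits, target) + loc_weight * smooth_l1(pred_box, true_box)
```

The smooth-L1 term behaves quadratically for small box errors and linearly for large ones, so a single badly localized box does not dominate the gradient.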

In addition to object detection, UAV navigation requires processing metadata that describes the flight conditions. Let $M = \{A, V, W\}$ represent the metadata attributes, where $A$ denotes altitude, $V$ denotes view angle, and $W$ represents weather conditions. These metadata are used to adjust the detection process to improve robustness. Specifically, we model the nuisance disentanglement process by introducing a transformation function $f_{\text{nd}}$ that maps the raw image and metadata $(x, m)$ to a nuisance-invariant feature space. The goal of $f_{\text{nd}}$ is to extract task-relevant features $z = f_{\text{nd}}(x, m)$ that are invariant to changes in altitude, view angle, and weather. To capture the disentanglement of nuisances mathematically, we minimize a joint loss function $\mathcal{L}_{\text{joint}}$, which combines the object detection loss $\mathcal{L}_{\text{obj}}$ with a nuisance penalty term $\mathcal{L}_{\text{nuis}}$ that limits the effect of nuisance attributes on the detection task:

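$$\mathcal{L}_{\text{joint}} = \mathcal{L}_{\text{obj}}\big(f_{\text{obj}}(x),\, y\big) + \lambda \cdot \mathcal{L}_{\text{nuis}}\big(f_{\text{nd}}(x, m),\, m\big), \tag{2}$$

where $\lambda$ is a weighting factor that balances the importance of the two objectives.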

To handle varying nuisances, we also define a nuisance-specific transformation $f_{\text{nd}}^{(i)}$ for each type of nuisance $i \in \{A, V, W\}$, such that:

$$z = f_{\text{nd}}^{(A)}(x, A) \quad \text{or} \quad z = f_{\text{nd}}^{(V)}(x, V) \quad \text{or} \quad z = f_{\text{nd}}^{(W)}(x, W), \tag{3}$$

depending on the specific metadata available. These transformations allow the model to learn robust, domain-invariant features that are less sensitive to the nuisance factors while retaining high object detection accuracy.
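Eq. (3) selects a nuisance-specific transformation according to which metadata are available. The sketch shows that dispatch logic with toy transforms. The fixed priority order used when several metadata values are present, the toy transform formulas, and the names `EXAMPLE_TRANSFORMS` and `apply_nuisance_transform` are all hypothetical; in the model, each $f_{\text{nd}}^{(i)}$ would be a learned network.

```python
import math
from typing import Callable, Dict, List, Sequence

Vector = List[float]
Transform = Callable[[Vector, float], Vector]

# Hypothetical stand-ins for the learned transforms f_nd^(A), f_nd^(V), f_nd^(W).
EXAMPLE_TRANSFORMS: Dict[str, Transform] = {
    "A": lambda x, alt: [v * 100.0 / alt for v in x],                  # rescale to a 100 m reference
    "V": lambda x, ang: [v * math.cos(math.radians(ang)) for v in x],  # toy view-angle scaling
    "W": lambda x, vis: [v - vis for v in x],                          # subtract a toy weather offset
}


def apply_nuisance_transform(x: Vector, metadata: Dict[str, float],
                             transforms: Dict[str, Transform],
                             order: Sequence[str] = ("A", "V", "W")) -> Vector:
    """Apply f_nd^(i) for the first nuisance type i whose metadata value is available."""
    for key in order:
        if key in metadata and key in transforms:
            return transforms[key](x, metadata[key])
    raise ValueError("no metadata available for any known nuisance type")
```

For example, `apply_nuisance_transform([2.0, 4.0], {"W": 1.0}, EXAMPLE_TRANSFORMS)` falls through to the weather transform because neither altitude nor view angle is present.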

In UAV operations, the navigation system requires a model $f_{\text{nav}}$ that processes both the image features $z$ and metadata $m$, generating control signals for navigation $\hat{c} = f_{\text{nav}}(z, m)$. The navigation system can be trained using a similar loss function that ensures the control outputs are robust to environmental factors:

$$\mathcal{L}_{\text{nav}} = \mathbb{E}_{(x,m)}\Big[\ell\big(f_{\text{nav}}(f_{\text{nd}}(x, m),\, m),\, c\big)\Big], \tag{4}$$

where $c$ denotes the ground-truth control signals for UAV navigation and $\ell$ is an appropriate error function (e.g., squared error).
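In practice, the expectation in Eq. (4) is estimated by averaging the error over a batch of samples. The sketch does this with squared error as $\ell$. Treating the metadata $m$ as a single float and using the hypothetical names `navigation_loss` and `squared_error` are simplifications for illustration.

```python
from typing import Callable, List, Sequence, Tuple

Vector = List[float]


def squared_error(pred: Sequence[float], target: Sequence[float]) -> float:
    """The error function l: sum of squared differences over control components."""
    return sum((p - t) ** 2 for p, t in zip(pred, target))


def navigation_loss(samples: Sequence[Tuple[Vector, float, Vector]],
                    f_nd: Callable[[Vector, float], Vector],
                    f_nav: Callable[[Vector, float], Vector],
                    err: Callable[[Sequence[float], Sequence[float]], float] = squared_error) -> float:
    """Monte Carlo estimate of Eq. (4): mean of l(f_nav(f_nd(x, m), m), c) over samples."""
    total = 0.0
    for x, m, c in samples:
        c_hat = f_nav(f_nd(x, m), m)
        total += err(c_hat, c)
    return total / len(samples)
```

With identity features and a toy controller `f_nav = lambda z, m: [z[0] + m]`, the samples `([1.0], 0.5, [1.5])` and `([2.0], 0.0, [3.0])` give per-sample errors of 0 and 1, so the estimated loss is their mean.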

By defining these components and relationships, we have a unified formalization of the UAV object detection and navigation problem. This structure lays the foundation for the development of our NavBLIP model, which will be described in the following subsections.

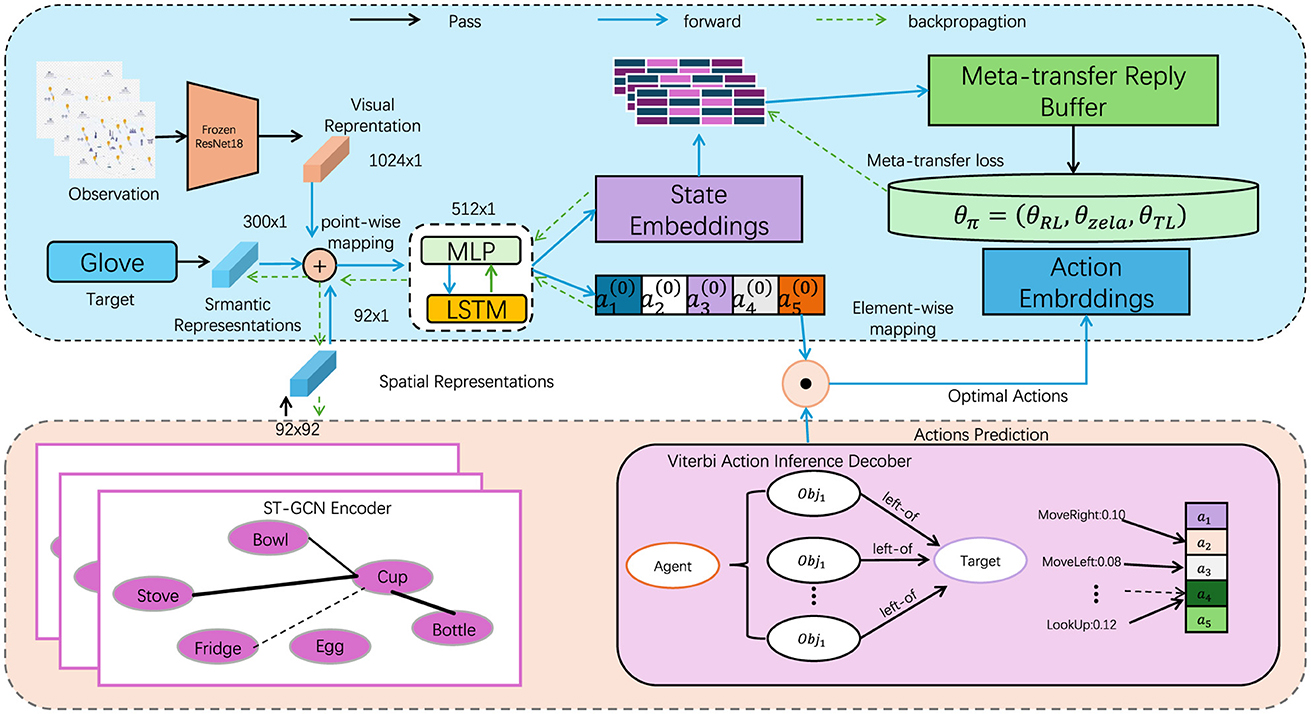

3.3 Nuisance-invariant multimodal feature extraction

The Nuisance-Invariant Multimodal Feature Extraction (NIMFE) module serves as the central mechanism of our model, engineered to isolate task-relevant information from UAV-captured images while mitigating the influence of environmental nuisances such as altitude, view angle, and weather conditions. This multimodal architecture extends beyond conventional visual processing by incorporating metadata from the UAV's flight context, facilitating a more robust and adaptive system. In this section, we detail the core design of NIMFE, elaborating on its role in extracting disentangled, nuisance-invariant representations that feed into downstream object detection tasks and navigational systems (Figure 1).

Figure 1. The overall framework diagram of the proposed method. The data flows from observation through various representations (visual, semantic, spatial) to the agent. Actions are predicted using an encoder, and optimal actions are derived through backpropagation.

The input to the NIMFE module consists of two primary components: the UAV image $x$ and metadata $m$. The metadata includes environmental and contextual information, specifically altitude $A$, view angle $V$, and weather conditions $W$; these factors strongly influence image perception but are treated as nuisances for consistent object detection. To handle these nuisances, our approach uses a separate transformation network for each type of metadata, so that the visual features extracted from the image are disentangled from the nuisance factors without losing information crucial to the task at hand.

Initially, the UAV image $x$ is processed through a visual feature extractor $f_T$, generating a raw feature map $f_T(x) \in \mathbb{R}^{h \times w \times d}$, where $h$, $w$, and $d$ represent the height, width, and depth of the feature map, respectively. Simultaneously, each metadata component is processed through its respective transformation network: altitude $A$, view angle $V$, and weather $W$ are passed through the transformation networks $f_A(m_A)$, $f_V(m_V)$, and $f_W(m_W)$, yielding nuisance-specific embeddings $z_A$, $z_V$, and $z_W$. These embeddings capture the influence of each nuisance but are designed to be processed separately from the visual features.
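The per-nuisance networks $f_A$, $f_V$, and $f_W$ can be pictured as small, independent embedding layers, one per metadata type. The sketch uses a single tanh layer per scalar input. The layer form, the embedding size, the pre-normalized metadata values, and the encoding of weather as a single visibility score are our assumptions, and `ScalarEmbedding` and `embed_metadata` are hypothetical names.

```python
import math
import random
from typing import Dict, List

Vector = List[float]


class ScalarEmbedding:
    """Hypothetical f_i: a one-layer network mapping a scalar metadata value to R^dim."""

    def __init__(self, dim: int, seed: int) -> None:
        rng = random.Random(seed)  # fixed seed so the sketch is reproducible
        self.w = [rng.uniform(-1.0, 1.0) for _ in range(dim)]
        self.b = [rng.uniform(-0.1, 0.1) for _ in range(dim)]

    def __call__(self, value: float) -> Vector:
        return [math.tanh(wk * value + bk) for wk, bk in zip(self.w, self.b)]


def embed_metadata(metadata: Dict[str, float],
                   nets: Dict[str, ScalarEmbedding]) -> Dict[str, Vector]:
    """Produce z_A, z_V, z_W, each from its own network."""
    return {key: nets[key](value) for key, value in metadata.items()}


# Metadata assumed pre-normalized to roughly [0, 1]; weather encoded as a visibility score.
nets = {key: ScalarEmbedding(dim=4, seed=i) for i, key in enumerate("AVW")}
z = embed_metadata({"A": 0.5, "V": 0.3, "W": 1.0}, nets)
```

Keeping one network per nuisance, rather than a single shared encoder, means each embedding depends only on its own metadata value, which matches the separate processing described above.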

Once the feature extraction process is complete, the metadata embeddings are integrated with the visual feature map through a cross-modal attention mechanism. The cross-modal attention mechanism ensures that the nuisance-related embeddings are appropriately incorporated into the feature space, while minimizing their direct impact on the object detection task. This interaction is formalized as:

$$z_{\text{combined}} = \mathrm{CrossAttention}\big(f_T(x),\, z_A,\, z_V,\, z_W\big), \tag{5}$$

where CrossAttention fuses the image-derived feature map with the nuisance-specific embeddings $z_A$, $z_V$, $z_W$, generating a combined feature map $z_{\text{combined}}$ that is robust to changes in altitude, view angle, and weather. This disentangled representation is critical for ensuring that object detection remains unaffected by the changing flight conditions.
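A minimal reading of Eq. (5) is scaled dot-product cross-attention in which the $h \times w$ positions of $f_T(x)$ act as queries and the embeddings $z_A$, $z_V$, $z_W$ act as keys and values. The sketch omits the learned query/key/value projections (identity instead) and adds a residual connection; both choices, and the name `cross_attention`, are our assumptions rather than details given in the text.

```python
import math
from typing import List

Vector = List[float]


def softmax(xs: List[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(xs)
    e = [math.exp(x - m) for x in xs]
    s = sum(e)
    return [v / s for v in e]


def cross_attention(image_tokens: List[Vector], nuisance_tokens: List[Vector]) -> List[Vector]:
    """Scaled dot-product cross-attention with a residual connection.

    image_tokens: the h*w positions of f_T(x), each a d-dimensional vector (queries).
    nuisance_tokens: z_A, z_V, z_W, each d-dimensional (keys and values).
    Learned Q/K/V projections are omitted (identity) to keep the sketch short.
    """
    d = len(image_tokens[0])
    out = []
    for q in image_tokens:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in nuisance_tokens]
        attn = softmax(scores)
        context = [sum(a * v[j] for a, v in zip(attn, nuisance_tokens)) for j in range(d)]
        out.append([qj + cj for qj, cj in zip(q, context)])
    return out
```

The output contains one $d$-dimensional vector per image position, so it can be reshaped back to $h \times w \times d$ and has the same shape as $f_T(x)$.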

The goal of the NIMFE module is to extract a feature representation that is both relevant for the primary task of object detection and invariant to the nuisance factors. This is achieved through an adversarial learning approach, where the system is trained to maximize object detection accuracy while simultaneously minimizing the system's ability to predict the nuisance attributes from the extracted features. The adversarial loss ensures that the learned features are robust and invariant to environmental changes. The adversarial training objective is defined as:

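$$\mathcal{L}_{\text{adv}} = -\sum_{i \in \{A, V, W\}} \gamma_i \cdot \mathcal{L}_{\text{nuis}}\big(f_{\text{nuis}}^{(i)}(z_{\text{combined}}),\, m_i\big), \tag{6}$$

where $\mathcal{L}_{\text{nuis}}$ is the nuisance prediction loss, $\gamma_i$ is a balancing coefficient for each nuisance type $i$, and $f_{\text{nuis}}^{(i)}$ represents the prediction network for nuisance $i$. Because the nuisance losses enter with a negative sign, minimizing $\mathcal{L}_{\text{adv}}$ with respect to the feature extractor increases the error of the nuisance predictors. This loss term therefore encourages the NIMFE module to generate feature maps that minimize the influence of nuisances on the final object detection output.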
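The sign convention in Eq. (6) can be checked on a toy scalar problem: a linear nuisance predictor $p = a \cdot z$ takes a gradient step that lowers its own squared error, and the encoder output $z$ then takes a gradient step on $\mathcal{L}_{\text{adv}}$, which raises that error. The alternating schedule, the hand-derived gradients, and the names `adversarial_loss`, `nuis_loss`, and `adversarial_step` are illustrative assumptions. A gradient reversal layer, as used in domain-adversarial training, is a common alternative that achieves the same effect in a single backward pass.

```python
from typing import Dict, Tuple


def adversarial_loss(nuisance_losses: Dict[str, float], gammas: Dict[str, float]) -> float:
    """Eq. (6): L_adv = -sum_i gamma_i * L_nuis^(i)."""
    return -sum(gammas[i] * nuisance_losses[i] for i in nuisance_losses)


def nuis_loss(a: float, z: float, m: float) -> float:
    """Squared error of a toy linear nuisance predictor p = a * z against metadata m."""
    return (a * z - m) ** 2


def adversarial_step(a: float, z: float, m: float,
                     gamma: float = 1.0, lr: float = 0.1) -> Tuple[float, float]:
    """One alternating update: the predictor descends L_nuis, the encoder descends L_adv."""
    grad_a = 2.0 * (a * z - m) * z            # dL_nuis/da
    a = a - lr * grad_a                        # predictor improves at predicting m
    grad_z = -gamma * 2.0 * (a * z - m) * a    # dL_adv/dz = -gamma * dL_nuis/dz
    z = z - lr * grad_z                        # encoder makes m harder to predict
    return a, z
```

For instance, starting from `a=1.0, z=0.5, m=1.0`, one step moves `z` so that the updated predictor's error on the new `z` is larger than on the old one, which is exactly the invariance pressure the adversarial term is meant to apply.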

The total loss function for the NIMFE module integrates the object detection loss $\mathcal{L}_{\text{obj}}$, the adversarial loss $\mathcal{L}_{\text{adv}}$, and a regularization term $\mathcal{L}_{\text{reg}}$, which encourages smoothness and consistency in the learned feature space. The overall objective is expressed as:

$$\mathcal{L}_{\text{NIMFE}} = \mathcal{L}_{\text{obj}} + \lambda_{\text{adv}} \cdot \mathcal{L}_{\text{adv}} + \lambda_{\text{reg}} \cdot \mathcal{L}_{\text{reg}}, \tag{7}$$

where $\lambda_{\text{adv}}$ and $\lambda_{\text{reg}}$ are hyperparameters controlling the trade-offs between object detection accuracy, nuisance suppression, and regularization. During training, the model alternates between optimizing the object detection task and minimizing the influence of nuisances, ensuring that the final feature map is disentangled from the environmental variables.

Once the disentangled feature map $z_{\text{combined}}$ is produced, it is fed into the object detection network $f_{\text{obj}}$, which generates bounding boxes and class labels for the detected objects. The adversarial mechanism ensures that this feature map is robust to altitude, view angle, and weather variations, resulting in more accurate object detection across different flight conditions. This robustness allows the NIMFE module to not only enhance object detection but also improve UAV navigation and situational awareness. By integrating both visual and metadata inputs, the NIMFE module ensures high adaptability to diverse environmental conditions, enabling UAVs to perform effectively in real-world scenarios. The module's ability to isolate task-relevant information while suppressing nuisances makes it a powerful tool for improving the performance and robustness of UAV-based systems in complex, dynamic environments.

3.4 Prior-guided multimodal adaptation strategy

In UAV-based object detection and navigation tasks, integrating prior domain knowledge is crucial for improving the model's generalization to unseen environments and enhancing robustness against variable conditions. Our model employs a Prior-Guided Multimodal Adaptation Strategy (PGMAS) that leverages UAV-specific metadata (such as altitude, weather, and view angle) and domain-specific knowledge to refine both detection and navigation outputs. This strategy is incorporated into the overall architecture of NavBLIP to guide decision-making processes, ensuring that UAV operations remain effective in diverse and unpredictable environments. The prior information consists of domain knowledge in the form of pre-defined rules or distributions about how different nuisances (e.g., altitude, weather, or view angle) affect object visibility and detection performance. Let $P(A)$, $P(V)$, and $P(W)$ represent the prior distributions of altitude, view angle, and weather, respectively. These priors are used to weight the importance of specific features when predicting objects or adjusting navigation behavior. For instance, when flying at high altitudes $A$, smaller objects may be harder to detect, and thus the model should prioritize extracting finer features. The adaptation mechanism begins by calculating a prior-guided feature adjustment $g_{\text{prior}}$, which modifies the combined feature representation $z_{\text{combined}}$ from the NIMFE module. This adjustment is computed by combining the feature map with the learned priors:

$$g_{\text{prior}}\big(z_{\text{combined}}, P(A), P(V), P(W)\big) = z_{\text{combined}} + \alpha \cdot \big(P(A) + P(V) + P(W)\big), \tag{8}$$

where $\alpha$ is a learned parameter that controls the strength of the prior adjustment. The sum $P(A) + P(V) + P(W)$ combines the three priors, evaluated at the current metadata values, so the feature adjustment changes dynamically with the flight conditions.
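Eq. (8) can be illustrated by evaluating each prior at the current metadata and adding the scaled sum to the feature map. The concrete priors here (Gaussian densities for altitude and view angle, a categorical table for weather) and their parameters are hypothetical, as are the names `gaussian_pdf`, `prior_values`, and `prior_adjust`. Read literally, Eq. (8) applies the same offset to every element of $z_{\text{combined}}$, and the sketch keeps that literal form.

```python
import math
from typing import Dict, List

Vector = List[float]


def gaussian_pdf(x: float, mean: float, std: float) -> float:
    """Normal density, used here as a hypothetical continuous prior."""
    return math.exp(-0.5 * ((x - mean) / std) ** 2) / (std * math.sqrt(2.0 * math.pi))


def prior_values(altitude: float, view_angle: float, weather: str) -> Dict[str, float]:
    """Evaluate hypothetical priors P(A), P(V), P(W) at the current metadata."""
    weather_prior = {"clear": 0.6, "cloudy": 0.3, "fog": 0.1}  # assumed categorical prior
    return {
        "A": gaussian_pdf(altitude, mean=100.0, std=30.0),
        "V": gaussian_pdf(view_angle, mean=45.0, std=15.0),
        "W": weather_prior[weather],
    }


def prior_adjust(z_combined: List[Vector], priors: Dict[str, float], alpha: float) -> List[Vector]:
    """Eq. (8): add alpha * (P(A) + P(V) + P(W)) to every element of z_combined."""
    shift = alpha * (priors["A"] + priors["V"] + priors["W"])
    return [[v + shift for v in token] for token in z_combined]
```

A usage pattern would be `prior_adjust(z, prior_values(120.0, 30.0, "cloudy"), alpha=0.5)`, where `z` is the token list produced by the fusion step and `alpha` would be learned in the model rather than fixed.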

To optimize this adaptation process, the model minimizes a prior-guided loss function, which encourages the system to use prior knowledge effectively while performing object detection and navigation. The total loss for this adaptation strategy, $\mathcal{L}_{\text{prior}}$, is defined as:

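$$\mathcal{L}_{\text{prior}} = \mathcal{L}_{\text{obj}} + \beta \cdot \mathbb{E}_{x,m}\Big[g_{\text{prior}}\big(z_{\text{combined}}, P(A), P(V), P(W)\big)\Big], \tag{9}$$

where $\beta$ is a regularization parameter that balances the importance of the prior information with the object detection loss.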

Additionally, PGMAS allows for strategic control over UAV navigation. The prior-guided feature adjustment also affects the control signals generated by the navigation module $f_{\text{nav}}$, ensuring that the UAV responds appropriately to environmental variations. For instance, when adverse weather conditions $W$ are detected, the navigation system can adjust the UAV's trajectory to avoid areas with reduced visibility or higher detection difficulty. This adaptation is handled by modifying the navigation loss $\mathcal{L}_{\text{nav}}$ to account for the priors:

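$$\mathcal{L}_{\text{nav}}^{\text{prior}} = \mathbb{E}_{(x,m)}\Big[\ell\Big(f_{\text{nav}}\big(g_{\text{prior}}(z_{\text{combined}}, P(A), P(V), P(W))\big),\, c\Big)\Big], \tag{10}$$

where the navigation output is influenced by the adjusted features $g_{\text{prior}}$, ensuring that the UAV adapts its control based on environmental conditions.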

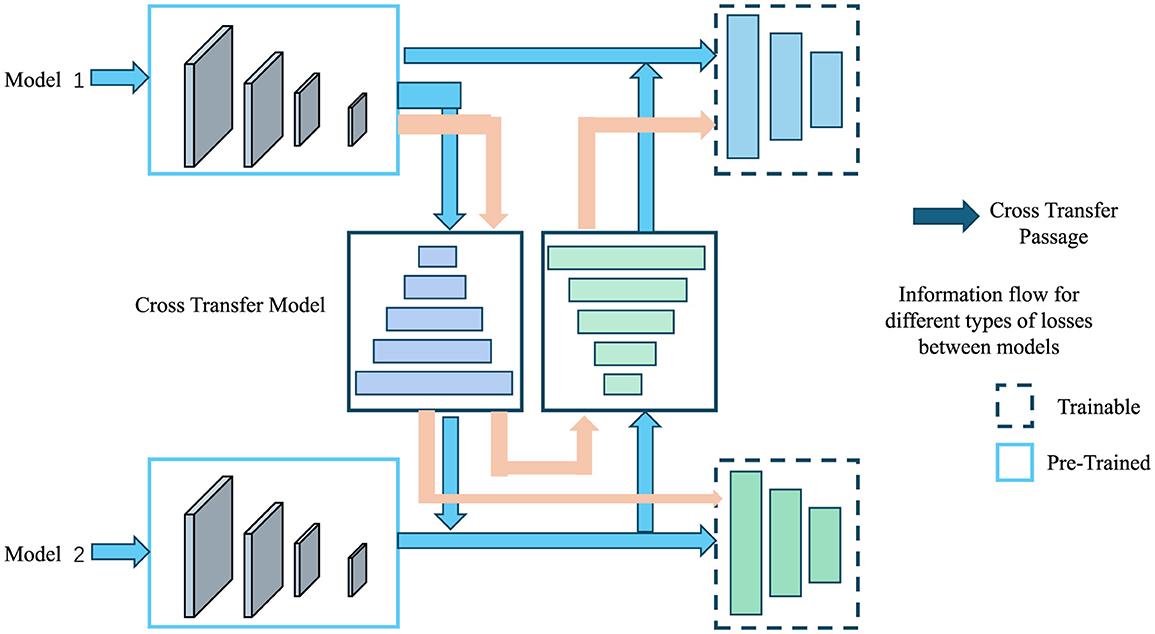

The overall impact of the Prior-Guided Multimodal Adaptation Strategy is twofold: first, it enhances the object detection capabilities of the system by leveraging prior knowledge about nuisance effects, and second, it improves UAV navigation by adjusting control outputs based on real-time metadata and prior information. This approach enables NavBLIP to operate effectively in diverse environments, enhancing both accuracy and robustness across a range of UAV applications. Figure 2 illustrates the principle of transfer learning.

Figure 2. A schematic diagram of the principle of transfer learning.

3.4.1 Theoretical justification for adversarial learning convergence

The adversarial training approach aims to achieve two critical objectives: disentangling task-relevant features from nuisance factors while enhancing the model's robustness under diverse environmental conditions. This objective is formulated as a min-max optimization problem, defined as:
