In recent years, camera-based perception systems have been widely used in various kinds of robots, which brings the need for image-based scene understanding algorithms. For robots, it is not only necessary to recognize the semantic information in the scene, but also to distinguish different instances, which is of great significance for robots to navigate, interact and execute tasks in complex environments. Specifically, robots need the help of computer vision techniques to identify obstacles in the scene, identify users, find targets, etc.

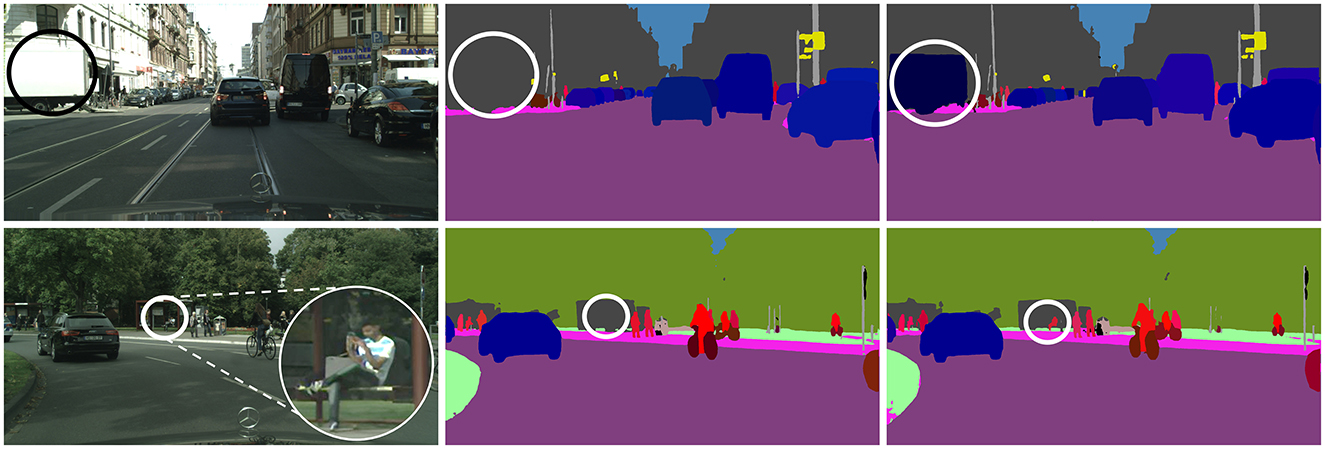

To meet this demand, the computer vision community has proposed semantic segmentation (Zhang et al., 2022; Ye et al., 2023; Zhang et al., 2023) and object detection (Liu and Stathaki, 2018; He et al., 2017), which identify the semantic class of each pixel in the scene and distinguish different instances in the image, respectively. Kirillov et al. (2019a) then proposed the panoptic segmentation task, which unifies the two by assigning every pixel of the input image both a semantic label and an instance ID. Owing to its precise definition, this task has gained significant attention in scene understanding, and it is a valuable tool for autonomous driving and industrial robotics applications. Current deep learning-based panoptic segmentation methods fall into three categories according to how they generate instance masks: top-down (Mask R-CNN based), bottom-up (DeepLab based), and transformer-based (DETR based). These methods have shown promising progress on benchmark datasets. However, misclassification of textureless regions and missed detection of small targets remain to be solved. For example, as shown in Figure 1, the white truck is wrongly identified as a building because it lacks texture and has the same color as the building behind it, and the person in the distance is not detected because of their small size.

Figure 1. Visualization examples of misclassification and missed detection. Left: input image; middle: UPSNet results, with misclassification of the truck and missed detection of the human; right: our results.

According to the perceptual theory of biological vision, the problems above may stem from a lack of contour perception. Zhou et al. (2000) indicated that contour detection plays a crucial role in processing scene data in the monkey visual cortex: about 18% of the cells in area V1 and over 50% of those in V2 and V4 are dedicated to processing contour-related information. This is consistent with earlier semantic segmentation work that introduced edge detection as an auxiliary task. Gated-SCNN (Takikawa et al., 2019), DecoupleSegNets (Li et al., 2020), and RPCNet (Zhen et al., 2020) demonstrated the importance of contours in scene recognition by introducing semantic edges to improve semantic segmentation performance. In the panoptic segmentation task, some previous studies (Xu et al., 2021; Chang et al., 2023) have explored contour detection as a separate component. CAPSNet (Xu et al., 2021) was the first work to introduce a contour branch to guide feature extraction, together with explicit contour supervision on the panoptic segmentation result, to improve the network's understanding of structure. However, this method did not incorporate contour information into the panoptic segmentation process itself. SE-PSNet (Chang et al., 2023) adopted a similar structure and used contours as auxiliary information to enhance instance segmentation, but this did not benefit semantic segmentation.

In this study, we introduce the Cascade Contour-enhanced Panoptic Segmentation Network (CCPSNet), a novel approach designed to fully exploit structural knowledge to improve the detection of small targets and the semantic recognition of weakly textured areas. Our method employs a cascading strategy to adaptively refine multi-scale structural contour details, thereby enabling more precise detection of contours in areas that lack texture or contain small objects. Furthermore, we present a contour-guided multi-scale feature enhancement stream that integrates panoptic contours with multi-scale features to refine segmentation features, and that calibrates the perceptual field using a structural-aware feature modulation module (SFMM), thereby enhancing segmentation accuracy. The key contributions of our work are as follows:

• We propose a cascade contour-enhanced panoptic segmentation network, which effectively delves into the comprehensive structural knowledge using panoptic contour detection and corresponding features, thereby improving the robot vision's perception ability in challenging complex areas.

• We develop a cascaded contour detection stream for the panoptic segmentation network, which aims to extract scene structural information by a feature channel regulation module and cascade strategy.

• We design a contour-guided multi-scale feature enhancement stream that incorporates contour information and contextual features to enhance the feature learning of areas with small objects and weak textures.

• Extensive experiments on the Cityscapes and COCO datasets substantiate the robustness and superiority of our proposed network compared to existing methods.

2 Related work

In this section, we review the progress of research on deep learning-based panoptic segmentation algorithms and contour detection in deep learning.

2.1 Deep learning-based panoptic segmentation

2.1.1 Top-down

Owing to the exceptional performance of Mask R-CNN (He et al., 2017) on the instance segmentation task, approaches of this type combine a semantic segmentation branch with instance segmentation outcomes to generate panoptic segmentation results. Panoptic-FPN, proposed by Kirillov et al., performs semantic segmentation on a shared Feature Pyramid Network backbone. Since then, many studies (Chen Y. et al., 2020; Li et al., 2019; Liu et al., 2019; Xiong et al., 2019) have expanded upon this approach. UPSNet (Xiong et al., 2019) introduced a parameter-free panoptic head that predicts the final panoptic segmentation via pixel-wise classification, in which the number of classes per image can vary. BANet (Chen Y. et al., 2020) exploits the complementary relationship between semantics and instances, designing a Semantic-to-Instance module and an Instance-to-Semantic module to improve performance. EfficientPS (Mohan and Valada, 2021) is currently the most effective of the top-down methods. It introduces a new backbone network and a 2-way FPN while maintaining the core structure of the Mask R-CNN component.

Based on the above studies, CAPSNet (Xu et al., 2021) pioneered the idea of enhancing the network's ability to perceive structures by introducing a panoptic contour-aware branch. SE-PSNet (Chang et al., 2023) then introduced contour-based enhancement features into the different prediction heads. While these methods use contour perception to aid the understanding of structural information within an image, their designs are relatively simple and may struggle with small targets. To address this issue, we introduce a new cascaded panoptic contour detection head to improve detail awareness and a contour attention feature enhancement module to enhance feature expression. These improvements are intended to enhance the overall performance of the system.

2.1.2 Bottom-up

In contrast to the above approaches, which use instance segmentation as the core of the network, many studies (Chen et al., 2017; Yang et al., 2019; Cheng et al., 2020; Gao et al., 2019; Wang H. et al., 2020; Sun et al., 2023) focus more on semantic segmentation and cluster its results to generate instance segmentation results. They adopt models such as DeepLab (Chen et al., 2017), a semantic segmentation model that uses an encoder-decoder architecture with atrous convolution as the backbone, to generate semantic segmentation results, while instance segmentation results are generated through a bottom-up approach. Panoptic-DeepLab (Cheng et al., 2020) adopts dual-ASPP and dual-decoder structures specific to semantic and instance segmentation, respectively. DeeperLab (Yang et al., 2019) uses bounding boxes and center points to generate instance masks, and SSAP (Gao et al., 2019) proposes a pixel-pair affinity pyramid that predicts instances by computing the probability that two pixels belong to the same instance.

2.1.3 Transformer based

The DEtection TRansformer (DETR) was proposed by Carion et al. (2020) as a successful application of the Transformer, commonly used in NLP, to image detection tasks. Many networks (Wang et al., 2021; Yu et al., 2022a,b) employing the self-attention module have subsequently emerged. Max-DeepLab (Wang et al., 2021) introduces a mask transformer to predict class-labeled masks directly, trained with a panoptic quality inspired loss via bipartite matching, to improve the performance of Axial-DeepLab (Wang H. et al., 2020) on highly deformable objects and on nearby objects with close centers. Building on this work, CMT-DeepLab (Yu et al., 2022a) formulates the process of assigning pixels to clusters by feature affinity, and of updating the cluster centers and pixel features, as a Clustering Mask Transformer. kMaX-DeepLab (Yu et al., 2022b) draws on the k-means clustering algorithm and redesigns the cross-attention mechanism by introducing the relationship between pixels and object queries. The above models greatly simplify the panoptic segmentation pipeline and have more powerful feature learning capability, which effectively improves performance. However, they require huge computational resources, which is unsatisfactory.

2.2 Contour detection in deep learning

Edge detection is a fundamental task in computer vision, and it has seen new advances through deep learning in recent years. Among them, HED (Xie and Tu, 2015) improves performance by fusing the outputs of multiple layers. Edges also play an important role in segmentation. Gated-SCNN (Takikawa et al., 2019) proposed to assist segmentation through contours. CAPSNet (Xu et al., 2021) is the first model to introduce panoptic segmentation contours into the panoptic task. SE-PSNet (Chang et al., 2023) assists panoptic segmentation with semantic contours and instance contours, respectively.

All of the above methods use contour detection only as an auxiliary task. Our model is the first to incorporate the panoptic contour results into the prediction process itself.

2.3 Attention model in deep learning

Many previous works have demonstrated the outstanding performance of attention mechanisms on various tasks such as object detection (Alazeb et al., 2024), autonomous driving (Yang et al., 2023), saliency prediction (Min et al., 2020), and fixation prediction (Min et al., 2016). Min et al. (2016) utilize canonical correlation analysis to identify the most relevant audio features. They construct visual attention models through spatial attention and temporal attention to predict fixation points, and locate the moving sound target using cross-modal kernel canonical correlation analysis. Min et al. (2020) introduce a two-stage adaptive audiovisual saliency fusion method for saliency prediction. Axial-DeepLab (Wang H. et al., 2020) is a fully attentional network with novel position-sensitive axial-attention layers that combine self-attention for non-local interactions with positional sensitivity. Removing the object detection branch makes such methods more efficient rather than more effective.

In this paper, we use the attention mechanism to enhance structure perception. The expression of the contour on the feature channel is guided by the attention in the cascaded contour detection stream, and the panoptic contour is used as the input to generate spatial attention to assist the final panoptic segmentation in the contour-guided multi-scale feature enhancement stream.

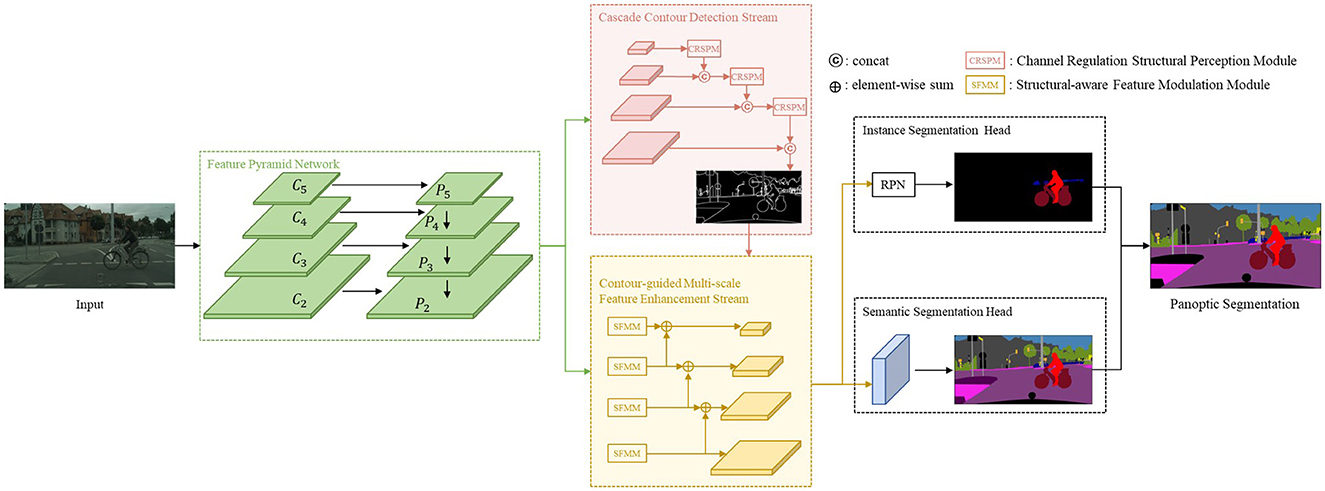

3 Methodology

In this section, we provide a detailed overview of our proposed network, illustrated in Figure 2. The network follows a top-down design and employs a shared ResNet backbone with a Feature Pyramid Network (FPN). It has four major components: (1) a cascade contour detection stream; (2) a contour-guided multi-scale feature enhancement stream that enhances feature expression on the backbone; (3) an instance segmentation head that provides the instance segmentation predictions; and (4) a semantic segmentation head that predicts semantic results. The following subsections describe each component in detail.

Figure 2. Illustration of the proposed CCPSNet.

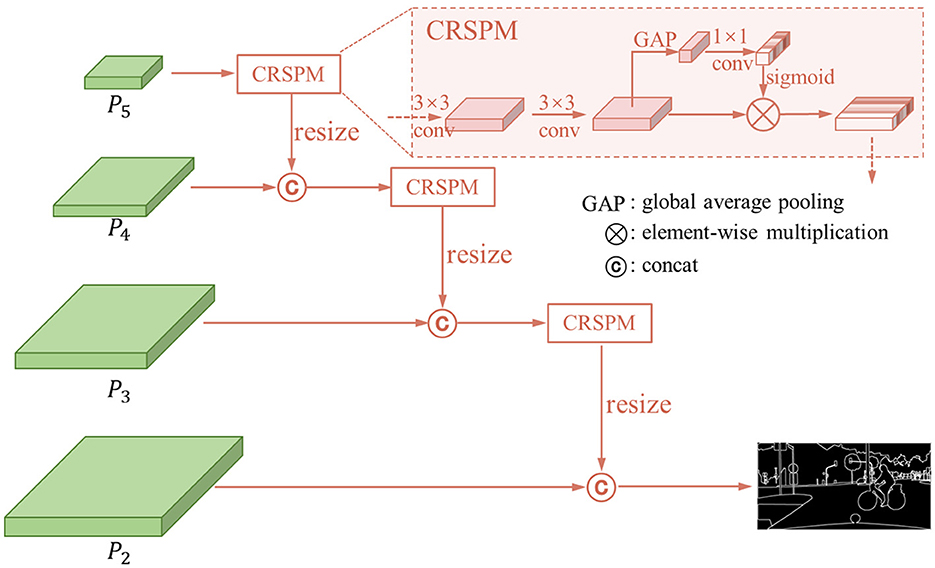

3.1 Cascade contour detection stream

Inspired by Gated-SCNN (Takikawa et al., 2019) and RPCNet (Zhen et al., 2020), which introduce edge detection to aid semantic segmentation, we treat contours as critical clues for the segmentation task. To outline every object and background region in the scene, the panoptic segmentation contour is defined as the union of the semantic segmentation contour and the instance segmentation contour. Specifically, for an image $I$, its panoptic segmentation contour label is $C_I = C_s \cup C_t$, where $C_s$ is the semantic contour for stuff categories and $C_t$ is the instance contour for thing categories. Given the panoptic segmentation ground truth $P$, a pixel $P_p$ is considered a panoptic contour pixel whenever any of its 8 surrounding pixels has a different semantic or instance ID. Since the ground truth is colored by category and instance, different categories, and different object IDs within the same category, are colored differently. Thus a Laplacian convolution can be used to obtain the panoptic segmentation contour.
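The 8-neighbour rule described above can be sketched in a few lines. This is only an illustration under stated assumptions: the function name `panoptic_contour` is hypothetical, each pixel is assumed to carry a single integer that already combines its semantic and instance ID, and the sketch compares neighbours directly instead of running the Laplacian convolution mentioned in the text.

```python
from typing import List


def panoptic_contour(ids: List[List[int]]) -> List[List[int]]:
    """Mark a pixel as contour (1) if any of its 8 neighbours has a different ID."""
    h, w = len(ids), len(ids[0])
    contour = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    ny, nx = y + dy, x + dx
                    # Skip the pixel itself and neighbours outside the image.
                    if (dy or dx) and 0 <= ny < h and 0 <= nx < w:
                        if ids[ny][nx] != ids[y][x]:
                            contour[y][x] = 1
    return contour


# Toy 4x4 ground truth: ID 1 is a "stuff" region, ID 2 is one 2x2 "thing" instance.
ids = [
    [1, 1, 1, 1],
    [1, 1, 1, 1],
    [1, 1, 2, 2],
    [1, 1, 2, 2],
]
contour = panoptic_contour(ids)
for row in contour:
    print(row)
```

Pixels on both sides of the boundary are marked, so the resulting contour is two pixels wide in this toy case.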

Formally, the contour label is given by:

$$C_{P_p} = 1, \quad \forall P_p \in P \ \ \text{s.t.} \ \ \operatorname{Laplacian}(P_p) \neq 0. \tag{1}$$

To predict the contour, we design a cascade contour detection stream, whose structure is shown in Figure 3. We feed the features $P_2$ to $P_5$ obtained from the FPN into the channel regulation structural perception module (CRSPM) to obtain the contour features of the corresponding layers. First, we apply a 3 × 3 convolution to convert the features into contour features. Then, considering the variability of the activation patterns of different convolution kernels, we generate channel-wise weights to regulate the feature volume, which enhances structural information and suppresses information with a negative impact. This module is primarily built from global average pooling and fully connected layers, and can be represented by the following formula:

$$P = f(P_i) \otimes g(\mathrm{GAP}(f(P_i))) \tag{2}$$

where $f(\cdot)$ represents the two 3 × 3 convolution layers, $\mathrm{GAP}(\cdot)$ represents global average pooling, and $g(\cdot)$ is the 1 × 1 convolution. In this way, we retain the ability to perceive texture while picking out the channels that respond to contours. To maintain the overall information at the large scale and avoid misclassification caused by texture differences inside a structure, the large-scale and small-scale features are fused by a concatenation operation. From $P_5$ to $P_2$, we apply coarse-to-fine cascade aggregation to obtain the contour feature and predict the panoptic segmentation contour.
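A minimal sketch of the channel regulation step may make the data flow clearer. It assumes the convolved features $f(P_i)$ are already available as a nested list indexed `[channel][row][column]`, and it models $g$ as a plain matrix product, which is what a 1 × 1 convolution reduces to on a pooled vector. The names `channel_regulate` and `g_weights` are hypothetical, and no activation is applied to the channel weights because the text does not specify one.

```python
from typing import List

FeatureMap = List[List[List[float]]]  # indexed as [channel][row][column]


def global_average_pool(feat: FeatureMap) -> List[float]:
    """GAP: one scalar per channel, the mean over all spatial positions."""
    return [sum(map(sum, ch)) / (len(ch) * len(ch[0])) for ch in feat]


def channel_regulate(feat: FeatureMap, g_weights: List[List[float]]) -> FeatureMap:
    """Compute feat * g(GAP(feat)), scaling every channel by one weight.

    `feat` stands in for f(P_i); `g_weights` is a C x C matrix playing the
    role of the 1x1 convolution g (an assumption of this sketch).
    """
    pooled = global_average_pool(feat)
    scales = [sum(w * p for w, p in zip(row, pooled)) for row in g_weights]
    return [[[v * s for v in line] for line in ch] for ch, s in zip(feat, scales)]


# Two channels of size 2x2; identity weights for g so the effect is easy to follow.
feat = [
    [[1.0, 3.0], [1.0, 3.0]],
    [[0.0, 0.0], [0.0, 4.0]],
]
identity = [[1.0, 0.0], [0.0, 1.0]]
regulated = channel_regulate(feat, identity)
print(global_average_pool(feat))
print(regulated)
```

With learned weights in place of the identity matrix, channels that respond to contours would receive larger scales than the others.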

Figure 3. The structure of cascade contour detection stream.

Because contour and non-contour pixels are heavily imbalanced in number, we follow HED (Xie and Tu, 2015) and adopt the class-balancing cross-entropy loss $L_c$ as the contour loss, formalized in Equation 3. Here, $\alpha$ is the class-balancing weight applied on a per-pixel basis, $C$ denotes the ground truth of the panoptic contour, and $\hat{C}$ denotes the predicted panoptic contour. As in HED, $C_-$ denotes the non-contour ground-truth label set.
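As a rough illustration of the class-balancing idea, the sketch below weights contour pixels by the fraction of non-contour pixels, and non-contour pixels by the remaining fraction, as in HED. The function name `class_balanced_bce`, the flattened-list input format, and the choice to sum rather than average over pixels are assumptions of this sketch.

```python
import math
from typing import Sequence


def class_balanced_bce(logits: Sequence[float], labels: Sequence[int]) -> float:
    """Class-balanced cross-entropy summed over the pixels of a flattened image.

    Assumes the raw predictions are logits passed through a sigmoid, and that
    predictions never saturate to exactly 0 or 1 (no numerical safeguards here).
    """
    n = len(labels)
    n_neg = sum(1 for c in labels if c == 0)
    alpha = n_neg / n  # weight on the (rare) contour pixels
    loss = 0.0
    for z, c in zip(logits, labels):
        p = 1.0 / (1.0 + math.exp(-z))  # sigmoid
        loss += -alpha * c * math.log(p) - (1 - alpha) * (1 - c) * math.log(1 - p)
    return loss


# One contour pixel among four, all predicted with probability 0.5.
loss_example = class_balanced_bce([0.0, 0.0, 0.0, 0.0], [1, 0, 0, 0])
print(loss_example)
```

In this example the single contour pixel contributes 0.75 · ln 2 and the three non-contour pixels together contribute 3 · 0.25 · ln 2, so both classes carry equal total weight despite the 1:3 imbalance.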

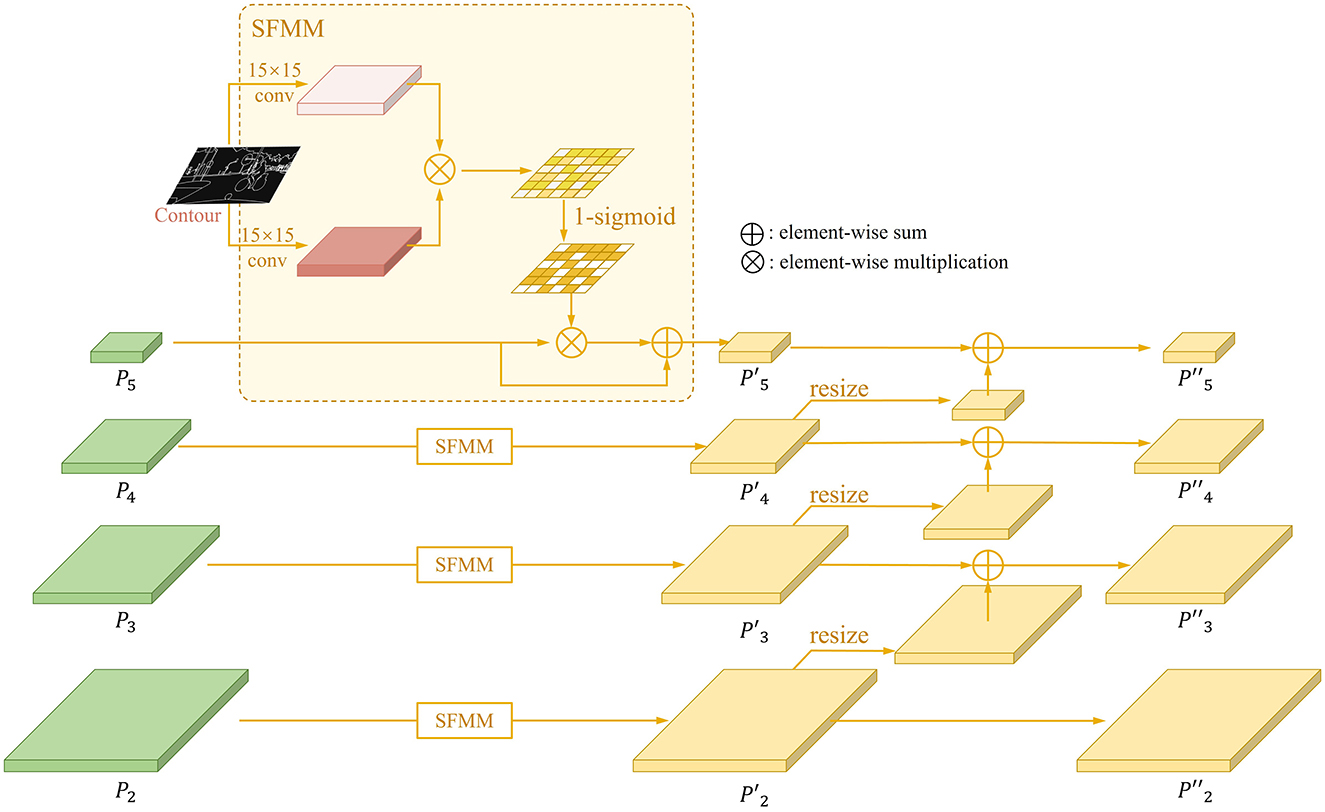

$$L_c = -\alpha\, C \log\big(\operatorname{sigmoid}(\hat{C})\big) - (1-\alpha)(1-C)\log\big(1-\operatorname{sigmoid}(\hat{C})\big), \qquad \alpha = \frac{|C_-|}{|C|} \tag{3}$$

3.2 Contour-guided multi-scale feature enhancement stream

When features are extracted by the backbone, a lot of detailed information is lost through repeated down-sampling and pooling operations, which leads to missed detection of small objects. To address this issue, we propose a structural-aware feature modulation module (SFMM) and an inverse aggregation operation. Together they augment the features by using the panoptic contours to generate spatial attention and by fusing features of different scales. This helps incorporate structural information into features at all scales and aids the learning of small-object features. The structure is shown in Figure 4. Here, we give a detailed formulation of this process.

Figure 4. The structure of contour-guided multi-scale feature enhancement stream.

Consider an input feature map $P_i \in \mathbb{R}^{N \times W_i \times H_i}$ from the $i$-th scale FPN branch. The contour is resized to the same scale as this feature map, giving $C_i \in \mathbb{R}^{1 \times W_i \times H_i}$. The attention can be formulated as follows:

$$Att_i = \operatorname{sigmoid}\big(\sigma\big(f_{i1}(C_i, w_{i1}) \otimes f_{i2}(C_i, w_{i2})\big)\big) \tag{4}$$

where $f_{ij}(\cdot, \cdot)$ denotes an atrous convolution function, $\otimes$ represents element-wise multiplication, $\sigma$ means a 1 × 1 convolution, and sigmoid indicates the Sigmoid activation function. $Att_i \in \mathbb{R}^{1 \times W_i \times H_i}$ is the attention generated from the contour. In this process, the atrous convolutions $f$ are employed to mine spatial information from the contour. In our experiments, the kernel sizes of both $f_{i1}$ and $f_{i2}$ are set to 15, with a dilation rate of 3, and they do not share weights.
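To give a sense of how much spatial context these atrous convolutions see, the short calculation below applies the standard relation between kernel size, dilation rate, and covered extent. The helper names are hypothetical, and the padding value is an assumption: the text only implies that the attention map keeps the spatial size of the contour map.

```python
def effective_kernel_size(kernel_size: int, dilation: int) -> int:
    """Spatial extent covered by one dilated (atrous) convolution kernel."""
    return dilation * (kernel_size - 1) + 1


def same_padding(kernel_size: int, dilation: int) -> int:
    """Per-side padding that keeps the spatial size at stride 1 (odd kernels)."""
    return (effective_kernel_size(kernel_size, dilation) - 1) // 2


# Settings quoted in the text: kernel size 15, dilation rate 3.
span = effective_kernel_size(15, 3)  # 3 * 14 + 1
pad = same_padding(15, 3)
print(span, pad)  # 43 21
```

Each output position therefore aggregates contour evidence from a 43 × 43 window while using only 15 × 15 weights.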

To emphasize the features of objects, we formulate the attention-weighted map $Att_i'$ as $1 - Att_i$. The enhanced feature map $P_i' \in \mathbb{R}^{N \times W_i \times H_i}$ can then be expressed as:

$$P_i' = P_i \otimes Att_i' \oplus P_i \tag{5}$$

where $\oplus$ means element-wise sum.

Inspired by Tan et al. (2020), we design an inverse aggregation method that uses low-level features to assist the identification of large instances. The structure lets low-level features provide detailed information to the adjacent higher-level features: the low-level feature map $P_{i-1}' \in \mathbb{R}^{N \times W_{i-1} \times H_{i-1}}$ is resized to the same scale as the adjacent high-level feature map $P_i' \in \mathbb{R}^{N \times W_i \times H_i}$, and the two are combined by element-wise sum. The $i$-th feature $P_i'' \in \mathbb{R}^{N \times W_i \times H_i}$ for segmentation and detection is generated as:

$$P_i'' = P_i' \oplus \delta(P_{i-1}') \tag{6}$$

where $\delta$ means down-sampling. Note that since $P_2''$ has no lower-level features, in practice $P_2''$ and $P_2'$ are the same. At this point, the contour-based feature enhancement is complete.
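The two steps above, modulation by the inverted attention and inverse aggregation across scales, can be sketched on toy data. The helper names are hypothetical, and the down-sampling operator is assumed here to be simple stride-2 subsampling; the text does not say which resizing method is used.

```python
from typing import List

Map2D = List[List[float]]
FeatureMap = List[Map2D]  # indexed as [channel][row][column]


def modulate(feat: FeatureMap, att: Map2D) -> FeatureMap:
    """Enhanced feature P' = P * (1 - Att) + P, with Att broadcast over channels."""
    return [[[v * (1.0 - a) + v for v, a in zip(frow, arow)]
             for frow, arow in zip(ch, att)] for ch in feat]


def downsample2x(feat: FeatureMap) -> FeatureMap:
    """Assumed down-sampling: keep every second row and column."""
    return [[row[::2] for row in ch[::2]] for ch in feat]


def inverse_aggregate(high: FeatureMap, low: FeatureMap) -> FeatureMap:
    """Aggregated feature P''_i = P'_i + downsample(P'_{i-1})."""
    low_ds = downsample2x(low)
    return [[[h + l for h, l in zip(hrow, lrow)]
             for hrow, lrow in zip(hch, lch)] for hch, lch in zip(high, low_ds)]


# Low level: one 4x4 channel of ones with attention 0.5 everywhere.
low = modulate([[[1.0] * 4 for _ in range(4)]], [[0.5] * 4 for _ in range(4)])
# High level: one 2x2 channel of twos with attention 0 everywhere.
high = modulate([[[2.0] * 2 for _ in range(2)]], [[0.0] * 2 for _ in range(2)])
fused = inverse_aggregate(high, low)
print(fused)
```

Under Equation 5, a position whose attention is close to 1 keeps its feature unchanged, while a position whose attention is close to 0 has its feature doubled.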

3.3 Instance segmentation head

Following Mask R-CNN (He et al., 2017), our instance segmentation head produces bounding box regression, classification, and segmentation masks from $P_2''$ to $P_5''$. The purpose of this head is to provide pixel-level annotations for each instance. The loss function $L_{ins}$ is defined as follows:

$$L_{ins} = L_{cls} + L_{bbox} + L_{mask} \tag{7}$$

where $L_{cls}$ is the classification loss, $L_{bbox}$ is the object bounding-box regression loss, and $L_{mask}$ is the average binary cross-entropy loss for mask prediction.

3.4 Semantic segmentation head

For the semantic segmentation head, we stack two 3 × 3 deformable convolution layers on top of the SFMM features. Similar to PSPNet (Zhao et al., 2017), we first rescale the features of different sizes to the same scale to fuse information of different granularity. The semantic representations are then obtained by concatenating these rescaled features. For this head, we choose the standard cross-entropy loss used in semantic segmentation, denoted as $L_{seg}$.

During training, the total loss $L_{total}$ is formulated as:

$$L_{total} = L_c + L_{ins} + L_{seg} \tag{8}$$

4 Experiments

In this section, our CCPSNet is evaluated on the Cityscapes (Cordts et al., 2016) and Microsoft COCO (Lin et al., 2014) datasets. We present the experimental results on these datasets and compare them with state-of-the-art models based on Mask R-CNN. The ablation studies and robustness analysis are presented last.

4.1 Datasets and metrics

4.1.1 Cityscapes

This dataset focuses on understanding urban street scenes. It is composed of 2,975 training images, 500 validation images, and 1,525 test images, all 5,000 of which have fine annotations. Another 20,000 images with coarse annotations are not used in our experiments. The dataset has 19 categories in total, of which 8 are things and the remaining 11 are stuff. On this dataset, we train our model on 4 RTX 2080Ti GPUs with a batch size of 1 per GPU, a learning rate of 0.02, and a weight decay of 1e−4 for 48,000 steps in total, decaying the learning rate by a factor of 0.1 at step 36,000.
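The step-decay schedule quoted for Cityscapes can be written out explicitly. This is a sketch only: the helper `step_lr` is hypothetical, it assumes the decay takes effect from the milestone step onward, and it ignores any warm-up phase, which the text does not mention.

```python
from typing import Sequence


def step_lr(step: int, base_lr: float, milestones: Sequence[int],
            gamma: float = 0.1) -> float:
    """Learning rate at `step`: base_lr times gamma for every milestone reached."""
    passed = sum(1 for m in milestones if step >= m)
    return base_lr * gamma ** passed


# Cityscapes settings from the text: lr 0.02, decay by 0.1 at step 36,000.
lr_early = step_lr(10_000, base_lr=0.02, milestones=[36_000])
lr_late = step_lr(40_000, base_lr=0.02, milestones=[36_000])
effective_batch = 4 * 1  # 4 GPUs x 1 image per GPU
print(lr_early, lr_late, effective_batch)
```

Passing several milestones applies the decay factor once per milestone reached.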

4.1.2 COCO

This dataset contains a large number of natural images, comprising both indoor and outdoor scenes. The image distribution is also highly varied, making it challenging for algorithms to learn well. It contains 140,000 images with 115,000 training images, 5,000 validation images, 20,000 test-dev images, and 20,000 test images. There are 80 thing categories and 53 stuff categories. We rely only on the train set with no extra data, and present results on the validation set for comparison. On this dataset, we train our model on 8 RTX 2080Ti GPUs with a batch size of 1 per GPU, a learning rate of 0.01, and a weight decay of 1e−4 for 48,000 steps in total, decaying the learning rate by a factor of 0.1 at steps 240,000 and 32,000.

4.1.3 Evaluation metrics

Following Kirillov et al. (2019b), the Panoptic Quality (PQ) is adopted as the evaluation metric:

$$PQ = \underbrace{\frac{\sum_{(p,g) \in TP} IoU(p,g)}{|TP|}}_{\text{segmentation quality (SQ)}} \times \underbrace{\frac{|TP|}{|TP| + \frac{1}{2}|FP| + \frac{1}{2}|FN|}}_{\text{recognition quality (RQ)}}$$

According to the formula, PQ is the product of SQ and RQ, combining the evaluation of semantic segmentation and instance segmentation. Here, $IoU(p, g)$ is the intersection-over-union between a predicted segment $p$ and a ground-truth segment $g$. TP (True Positives) denotes matched pairs of segments, FP (False Positives) denotes unmatched predicted segments, and FN (False Negatives) denotes unmatched ground-truth segments. A pair is considered a positive match when its IoU is greater than 0.5. Note that $PQ$, $PQ^{Th}$, and $PQ^{St}$ refer to the PQ values averaged across all classes, thing classes, and stuff classes, respectively.
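A small worked example may help in reading the formula. The function below is a sketch for a single class, assuming the segment matching (IoU > 0.5) has already been done; the name `panoptic_quality` and the convention of returning zeros when there are no matches are choices made for this illustration.

```python
from typing import Sequence, Tuple


def panoptic_quality(tp_ious: Sequence[float], num_fp: int,
                     num_fn: int) -> Tuple[float, float, float]:
    """Return (PQ, SQ, RQ) for one class from matched IoUs and unmatched counts."""
    tp = len(tp_ious)
    if tp == 0:
        return 0.0, 0.0, 0.0  # convention of this sketch when nothing matches
    sq = sum(tp_ious) / tp                        # mean IoU of matched pairs
    rq = tp / (tp + 0.5 * num_fp + 0.5 * num_fn)  # F1-style recognition term
    return sq * rq, sq, rq


# Three matched segments, one unmatched prediction, one unmatched ground truth.
pq, sq, rq = panoptic_quality([0.9, 0.8, 0.7], num_fp=1, num_fn=1)
print(round(pq, 3), round(sq, 3), round(rq, 3))  # 0.6 0.8 0.75
```

The reported $PQ$, $PQ^{Th}$, and $PQ^{St}$ would then be obtained by averaging such per-class values over the corresponding sets of classes.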

4.2 Comparison to state-of-the-art

We compare our proposed network with other state-of-the-art methods on the Cityscapes (Cordts et al., 2016) val set and MS-COCO (Lin et al., 2014).

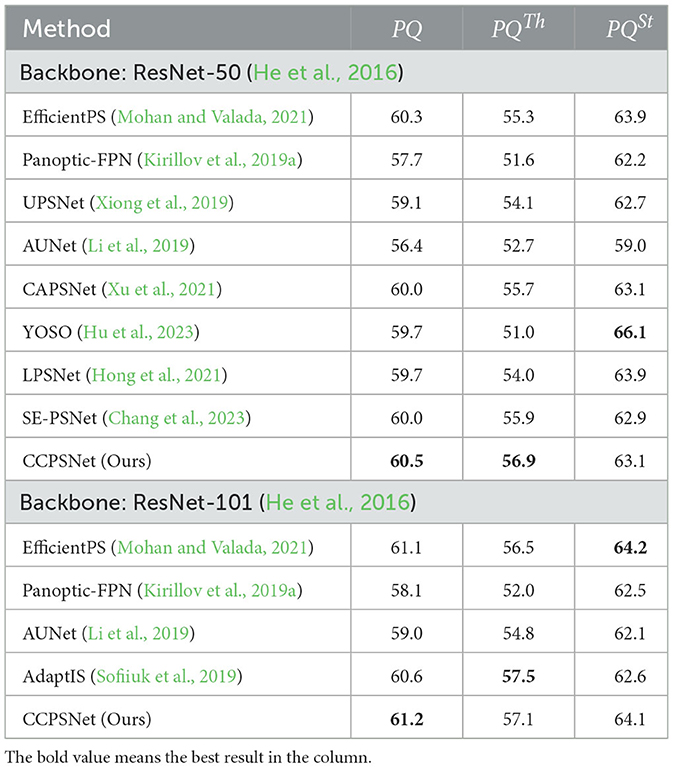

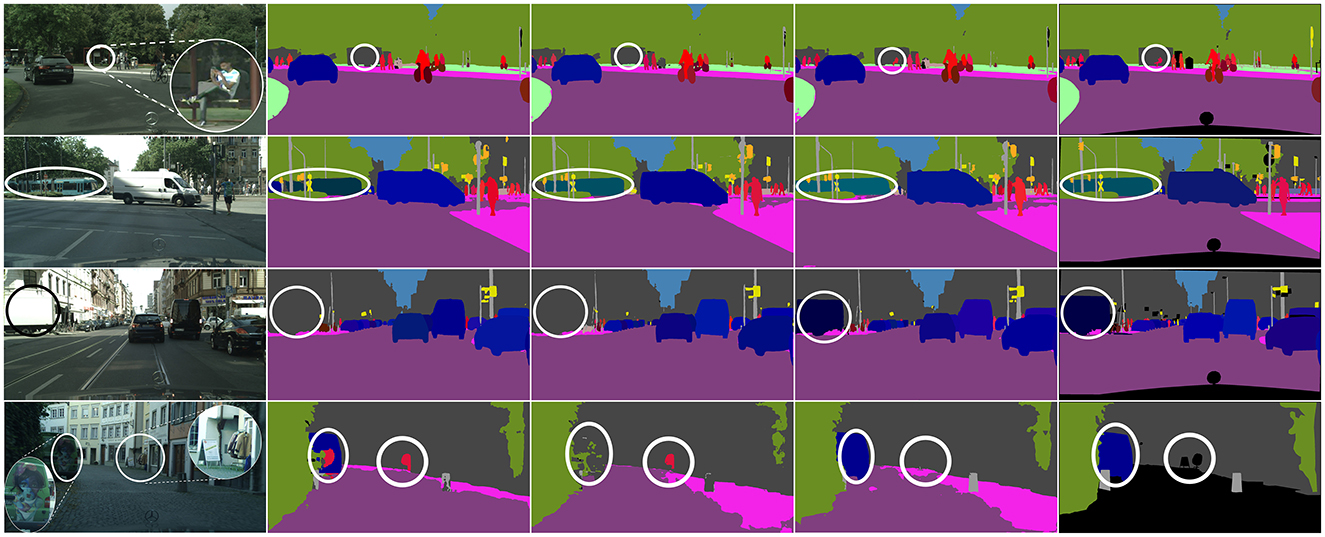

4.2.1 Cityscapes

As shown in Table 1, the proposed CCPSNet achieves 60.5% PQ with a ResNet-50 backbone and 61.2% PQ with ResNet-101, improving on similar methods with the same backbone. Figure 5 presents some visual examples of our algorithm on Cityscapes. The first row shows that small targets such as cyclists, which are difficult to distinguish because of lighting, are better handled thanks to the feature cascade fusion module of CCPSNet. The second and third rows show that contour perception in CCPSNet copes well with occlusion between different objects. The fourth row shows that, through contour perception and contour feature enhancement, CCPSNet can effectively handle ambiguous scenes, such as the vehicle painted with a face pattern on the left and the row of clothes hanging over the street on the right.

Table 1. Comparison with other methods on the Cityscapes val set.

Figure 5. Visual examples of panoptic segmentation on Cityscapes. From left to right are input images, predicted results from UPSNet, CAPSNet, CCPSNet (ours), and ground truth.

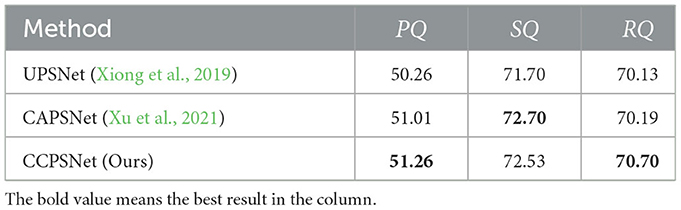

We also evaluate the segmentation results on specific categories in Cityscapes for objects smaller than 32 × 32 pixels. The results based on ResNet-50 are reported in Table 2. Our proposed algorithm improves the performance on small objects, thanks to the contour-guided multi-scale feature enhancement stream.

Table 2. Accuracy on small objects on the Cityscapes val set.

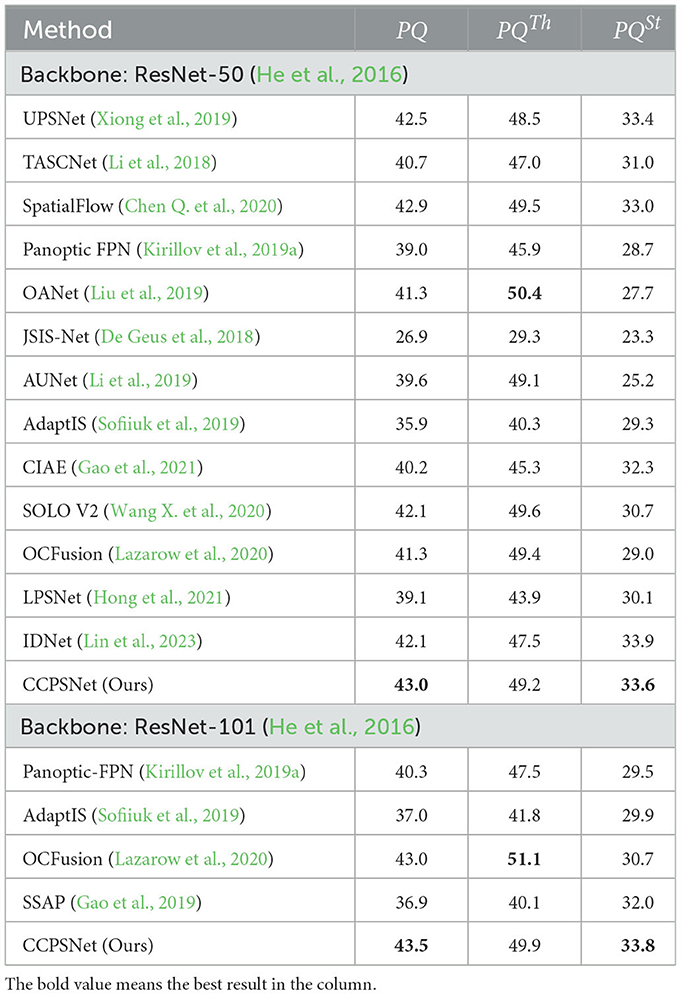

4.2.2 COCO

In addition to verification in outdoor driving scenarios, we further demonstrate the generality of our method on the COCO dataset, which includes various indoor and outdoor scenes. As shown in Table 3, we compare with similar methods using the same backbone. The proposed CCPSNet achieves a PQ of 43.2%, and a PQ of 43.5% with the ResNet-101 backbone.

Table 3. Comparison with other methods on the COCO val set.

Figure 6 presents some visual examples of our algorithm on MS-COCO. The first row, similar to the Cityscapes examples, shows the ability to detect extra-long targets such as trains: the distant train body is well preserved. The second row concerns the detection of the bus driver, where the information about the person is better retained. The third row focuses on the detection of small targets in a flock of birds. Unlike UPSNet, CCPSNet retains the individual characteristics of the many small flying birds and does not produce large block-like structures, keeping the birds relatively separate as in the original image. The fourth row shows a flush toilet, whose structure is well preserved by CCPSNet; even the structure of the drain is clearly visible.
