In recent years, the field of neuromorphic computing has witnessed significant advancements, driven by the quest to emulate the remarkable efficiency and parallel processing capabilities of the human brain. In contrast to mainstream Artificial Neural Networks (ANNs), Spiking Neural Networks (SNNs) provide a biologically closer framework for information processing (Maass, 1997), attempting to integrate more biological mechanisms (Schmidgall et al., 2023). SNNs communicate through spikes, a strategy that mimics the neural activity observed in biological neurons. This spike-based communication enables SNNs to exhibit temporal dynamics and local adaptation, characteristics that closely resemble those found in biological neural networks (Malcolm and Casco-Rodriguez, 2023). This approach aims to reduce the power- and resource-consuming training demanded by ANNs, for two reasons. First, because they are event-based, SNNs can theoretically execute with lower power consumption than continuously executing networks. Second, local plasticity algorithms, which refer to the ability of a neural network to adapt and change its structure or parameters in response to new information or experiences (Jordan et al., 2021), reduce the computational load compared to global gradient-descent methods. Although living beings demonstrate that it is possible to achieve excellent results in recognition and classification tasks, ANNs are today still superior to SNNs in most of those tasks. Therefore, more research is needed to unravel the biological keys and to propose SNN topologies and algorithms (Diehl and Cook, 2015; Yang et al., 2024b).

In order to improve the knowledge and understanding of SNNs, the availability of specific hardware allows their fast prototyping and real-time execution. In order to make progress in demonstrating their suitability for solving real-world problems, it is necessary to map applications and benchmark them. Two possible reasons may explain why the results generally do not achieve the same performance as classic ANNs. First, the attempt to reproduce the same topology and configuration of ANNs by encoding values on spike streams may lead to sub-optimal solutions. Second, the development of efficient local adaptation algorithms is still an open research field, where advancements continue to be made in enhancing robustness and energy efficiency through innovative learning techniques (Yang and Chen, 2023; Yang et al., 2023).

So far, many neural and synaptic plasticity models have been proposed (Sanaullah et al., 2023), but it often remains unclear how best to use them and which spike encoding is most suitable. However, the fact that biological networks perform excellent recognition is both a motivation to keep searching and a hint that good performance can be achieved with current SNN models or their evolutions.

In order to accelerate the production of results and reduce the resources demanded by large-scale networks, a promising avenue in this domain is the development of custom hardware architectures that combine low power consumption and high computational efficiency with real-time execution. For proof-of-concept demonstrations, architectures implemented on Field Programmable Gate Arrays (FPGAs) excel in fast prototyping (Akbarzadeh-Sherbaf et al., 2018; He et al., 2022; Yang et al., 2024a), at the cost of higher resource count and power dissipation compared to ASICs.

Application-Specific Integrated Circuits (ASICs) make it possible to obtain the maximum performance from a given chip technology, at a much higher development cost. Among the most significant proposed ASIC architectures supporting SNNs, the following stand out: IBM TrueNorth (DeBole et al., 2019), a real-time neurosynaptic processor that features a non-von Neumann, low-power, highly parallel architecture, with a new version called NorthPole (Cassidy et al., 2024); SpiNNaker (Mayr et al., 2019), composed of multiple ARM processors that support a general-purpose architecture with multiple instructions and multiple data; and finally, the Intel Loihi 2 chip (Orchard et al., 2021), one of the most advanced chips with asynchronous operation. As an important added asset, SNN-specific hardware also offers the possibility of interfacing with sensors, so that algorithms can interact in real time with the data produced.

Contributing to the neuromorphic field, the Hardware Emulator of Evolving Neural Systems (HEENS) is being developed as a digital synchronous architecture designed to emulate SNNs with high levels of parallel processing and reduced resource and power requirements, while keeping the flexibility of synchronous digital design and focusing on real-time neural emulation with biological realism and a user-friendly prototyping environment.

The development of HEENS is based on significant previous research. For instance, originally Madrenas and Moreno (2009) introduced a scalable multiprocessor architecture employing SIMD configurations that is flexible for emulating various neural models. Additionally, the implementation of the AER communication protocol, detailed by Moreno et al. (2009) and Zapata (2016), has been crucial in enabling compact emulation of interconnections in large-scale neural network models. Also notable is the Spiking Neural Networks for Versatile Applications (SNAVA) simulation platform (Sripad et al., 2018), a scalable and programmable parallel architecture implemented on modern FPGA devices. The integration of these technologies and other advances implemented over time have allowed the current development of HEENS, which incorporates features of these previous works while introducing new capabilities that are described in the following sections.

This article provides a brief overview of HEENS, delving into its architectural characteristics and focusing on two aspects: its flexibility in prototyping SNNs, designed to adapt to and emulate a wide variety of neural configurations, and its programmability, which allows researchers and developers to customize and adjust the emulator's behavior according to the specifications of their neural applications. In this context, the central objective of this work is to present a detailed methodology for modeling and implementing SNN applications. The work starts with an exploration of the fundamental components of HEENS, beginning in Section 2.1 with a summary of the hardware architecture, followed by an in-depth analysis of neural and synaptic models, including the Leaky Integrate and Fire (LIF) neuron model and Spike Timing Dependent Plasticity (STDP) mechanisms (Bliss and Gardner-Medwin, 1973). In addition, we assess the concept of homeostasis (Ding et al., 2023) and its impact on network behavior. These concepts are demonstrated by implementing a handwritten digit recognition application in HEENS, using the MNIST dataset (LeCun et al., 1998) to train and test neural networks. Through a series of experiments, we evaluate the effectiveness of HEENS in achieving precision and robustness in pattern recognition tasks, comparing its performance with alternative solutions and identifying potential limitations.

This article aims to provide valuable insights into the capabilities and potential of HEENS by means of the demonstration of a character recognition application with local learning to advance the frontier of neuromorphic computing for researchers and professionals. With its blend of biological realism, scalability, and computational efficiency, HEENS emerges as a promising platform for exploring the intricacies of neural information processing and accelerating the development of intelligent systems.

Following this introduction, in Section 2, the methodology used is detailed, including architectural description, network architecture, neural and synaptic modeling, culminating in the implementation of the neural application, as well as the proposed input encoding and the stages of training and evaluation. Section 3 presents the execution experiments and results, while Section 4 offers a discussion of the results and the comparison with other alternatives or solutions, ending with future work and directions. Finally, the conclusion is reported in Section 5.

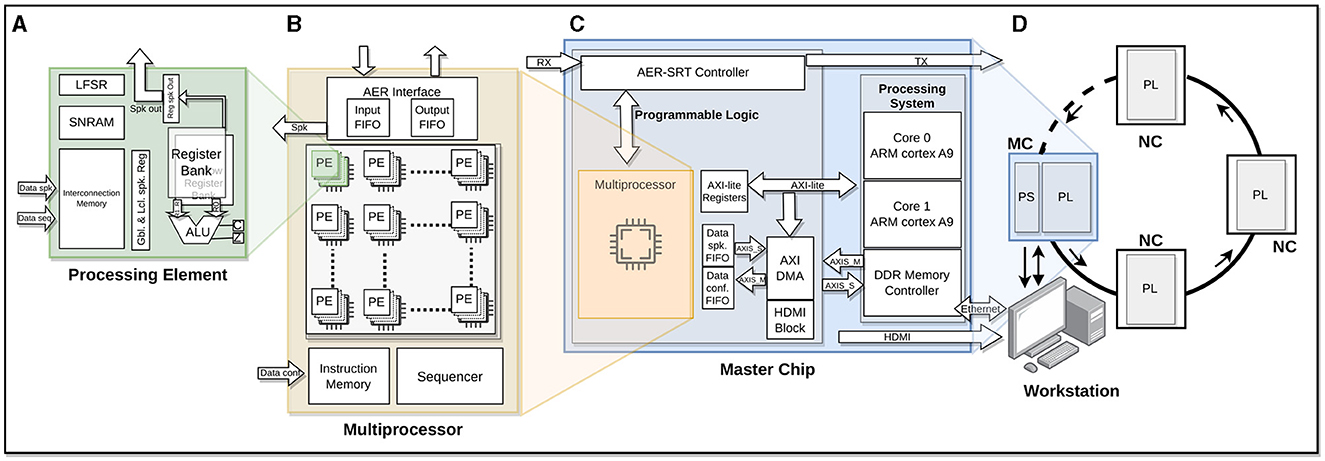

2 Methods

2.1 Summary of the HEENS hardware architecture

HEENS is a Single Instruction Multiple Data (SIMD), scalable, multichip hardware architecture implemented on several devices of Xilinx's Zynq family of Field Programmable Gate Arrays (FPGAs), designed to emulate multi-model SNNs with high parallelism (Figure 1), using basic Processing Elements (PEs) adapted to SNN requirements. PE arrays allow for the implementation of clustered networks inspired by the structural organization of the brain. The main goal of HEENS is to emulate large-scale SNNs in real time with a high degree of biological realism while also providing users with a friendly and flexible prototyping environment.

Figure 1. HEENS architecture overview. (A) Processing Element (PE) scheme. (B) Multiprocessor. (C) Master chip: Processing System (PS) and Programmable Logic (PL). (D) Ring topology in a master-slave connection scheme: master chip/node (MC) and neuromorphic chips/nodes (NC).

The basic system unit (PE, Figure 1A) contains the necessary logic to emulate one or more biological neurons and their synapses. It is composed of the fundamental units of a processor datapath: an Arithmetic and Logic Unit (ALU), a register bank, and routing resources, as well as two local Static Random Access Memories (SRAMs). Each PE's ALU performs hardware addition, subtraction, multiplication, and logical operations, interacting with a 16-bit general-purpose register bank and an additional shadow register bank for extended register space. The Interconnection Memory stores the synaptic connection map. It is used to decode the address identifiers of incoming spikes, detecting those that match the PE, while the Synaptic and Neural RAM (SNRAM) contains the neuron parameters required for the execution of the synaptic and neural models. A 64-bit Linear Feedback Shift Register (LFSR) is also included in each PE to generate uncorrelated noise, essential for various neural applications.
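As an illustration of how a per-PE noise source of this kind can behave, the following is a minimal software sketch of a 64-bit Fibonacci LFSR. The tap positions (64, 63, 61, 60) are an assumption taken from commonly tabulated maximal-length configurations; the polynomial, seed handling, and output tap actually used in HEENS are not stated here, and the function names are hypothetical.

```python
MASK64 = (1 << 64) - 1
# Assumed tap positions (1-indexed); not taken from the HEENS design.
TAPS = (64, 63, 61, 60)


def lfsr64_step(state):
    """Advance the register by one shift, feeding back the XOR of the taps."""
    feedback = 0
    for tap in TAPS:
        feedback ^= (state >> (tap - 1)) & 1
    return ((state << 1) | feedback) & MASK64


def lfsr64_bits(seed, n):
    """Return n pseudo-random bits; the all-zero seed is a lock-up state."""
    state = seed & MASK64
    if state == 0:
        raise ValueError("seed must be non-zero")
    bits = []
    for _ in range(n):
        state = lfsr64_step(state)
        bits.append(state & 1)
    return bits


if __name__ == "__main__":
    print(lfsr64_bits(seed=1, n=16))
```

Giving each PE a different non-zero seed is one simple way to obtain different bit streams per PE; whether HEENS does this is not specified in the text.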

Each PE can be time-multiplexed without the need for additional hardware, at the cost of increasing execution time. This process is referred to as virtualization, which allows emulating (i.e., executing in real time) a maximum of eight virtual neurons per PE.

A 2D PE array, together with the control unit consisting of a Sequencer and an Instruction Memory, forms the multiprocessor (Figure 1B). HEENS has its own custom Instruction Set Architecture (ISA), specially designed for the implementation of arbitrary spiking neural and synaptic models. The neural and synaptic programs are stored in the Instruction Memory. The Sequencer performs instruction fetch and decoding, executes the control instructions, and broadcasts the data-processing instructions to the PE array. Finally, a synchronous Address Event Representation (AER) interface is in charge of conveying, both internally and externally, the spikes produced by the PE array neurons.

The architecture supports a maximum array of 16 × 16 PEs, which allows emulating up to 16 × 16 × 8 (2k) neurons with 256 synapses per PE distributed among its virtual layers. However, the total number of PEs that can be implemented depends on the hardware resources available in the FPGA. In the case of the Zynq706 (the model used in this work), an array of 16 × 10 PEs has been successfully mapped operating at a frequency of 125 MHz.
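The capacity figures quoted above follow from simple arithmetic, which the short check below makes explicit. The variable names are illustrative, and the neuron count for the 16 × 10 array is an upper bound derived here from the eight-virtual-neuron limit, not a figure reported in the text.

```python
# Capacity arithmetic for the PE array (input figures taken from the text).
MAX_ROWS, MAX_COLS = 16, 16        # architectural maximum PE array
VIRTUAL_NEURONS_PER_PE = 8         # maximum virtualization depth per PE

max_pes = MAX_ROWS * MAX_COLS
max_neurons = max_pes * VIRTUAL_NEURONS_PER_PE
print(max_pes, max_neurons)        # 256 PEs, 2048 neurons (the "2k" above)

# Array mapped on the device used in this work.
mapped_pes = 16 * 10
mapped_neuron_bound = mapped_pes * VIRTUAL_NEURONS_PER_PE
print(mapped_pes, mapped_neuron_bound)  # 160 PEs, at most 1280 virtual neurons
```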

In Figure 1C, the main blocks that make up a master chip (node) can be observed. The master node includes communication with the user. This node consists of two parts, associated with the Programmable Logic (PL) and the Processing System (PS) of the programmable device. The PL contains the multiprocessor along with dedicated communication blocks for user interaction and the transmission of local and global spikes. On the other hand, the PS, which in the case of Zynq family chips embeds two ARM family processors, acts as a bridge between the user and the multiprocessor implemented in the PL, allowing the transmission of configuration packets and network control, monitoring and debugging information.

To support working with more complex networks and a larger number of neurons, the architecture can be extended by connecting slave nodes, creating a master-slave ring topology (Figure 1D), where a Master Chip is used along with Neuromorphic Chips (NC) as slaves. The latter contain multiprocessor instances, but lack the PS. The addressing space of the architecture supports up to 127 NCs. The communication between the chips is done using the Address Event Representation over Synchronous Serial Ring Topology (AER-SRT) protocol due to its high performance in applications focused on event transmission through GTX high-speed serial transceivers (Dorta et al., 2016). This communication is used not only to transmit spikes, but also to send configuration packets to each of the chips. Besides the monitoring through the master node, it is also possible for any node to support real-time monitoring via HDMI (Vallejo-Mancero et al., 2022).

Among the main features of HEENS, the following can be highlighted.

• Real-time operation. Time slots of 1 ms are considered real-time for each execution cycle of neural applications. This time slot can be tuned.

• High scalability: Achieved through an extendable and compact communication system, and low-resource, low-power design. Notice that each node requires only an input and an output serial port for all the operations.

• Unified data flow: The same communication ring supports all tasks: System configuration, spike transmission, and system execution monitoring.

• Multimodel Algorithm Support: Virtually any spiking neural algorithm can be programmed, including LIF (Abbott, 1999), Izhikevich (Izhikevich, 2003), Quadratic LIF (Alvarez-Lacalle and Moses, 2009), and others, using dedicated software tools.

• Flexible Synaptic Algorithms: As with the neural algorithms, local synaptic algorithms are customizable via software; Spike-Timing Dependent Plasticity (STDP) (Bliss and Gardner-Medwin, 1973) is a relevant example.

• Efficient Memory Usage: The neural, synaptic, and connection parameters are stored in local memory for optimized performance. This solves the processor-memory bottleneck issue by applying the in-memory computing paradigm.

• Comprehensive Computer-Aided Engineering (CAE) Toolset: Tools that fully automate the configuration and real-time monitoring of SNNs, reducing the mapping of a neural network to specifying in text files the network topology and the initial neural and synaptic parameters, and selecting from a library the neural and synaptic algorithms to be executed (Oltra et al., 2021; Zapata et al., 2021).

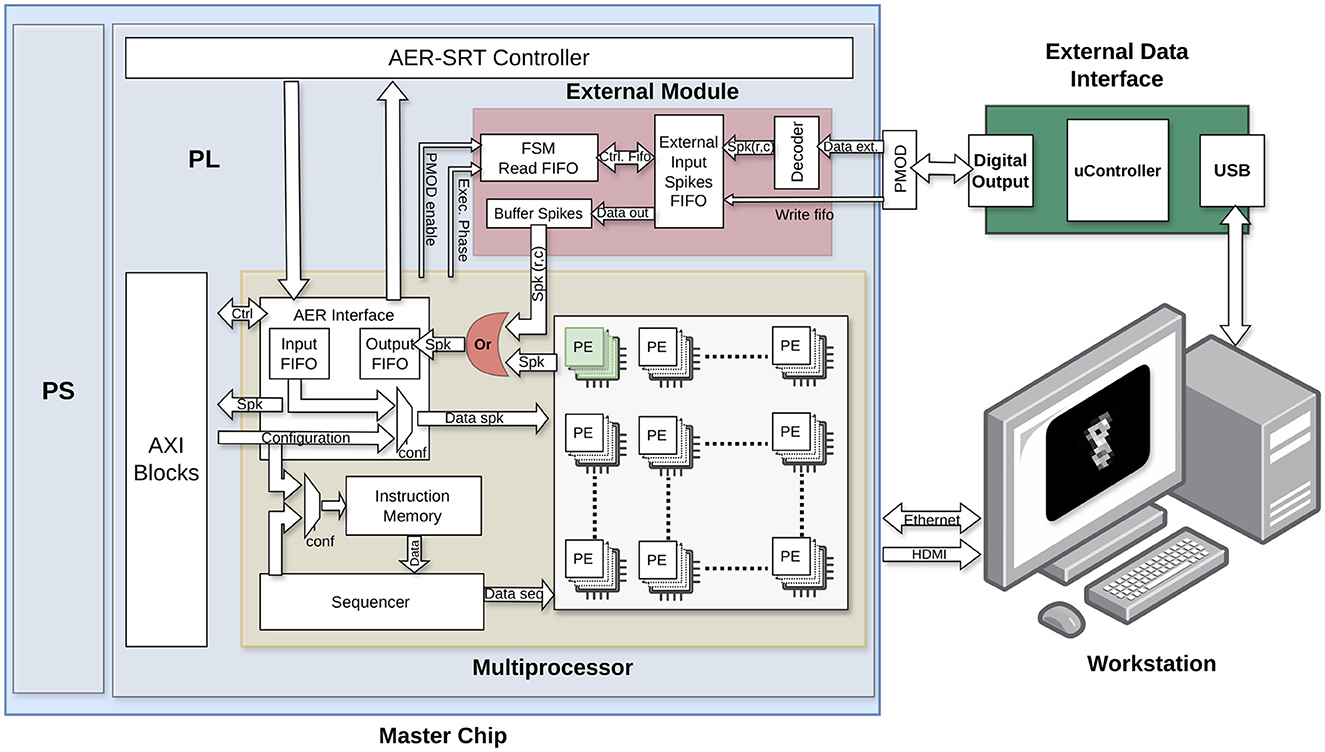

2.1.1 External input module

As observed in Figure 2, the original HEENS architecture was adapted to directly support access to the PE by multiplexing the output signal of the neuron. The PE array organization makes direct access of external data to individual neurons straightforward. By default, the first virtual layer is accessed, although other layers could be accessed equally. This adaptation allows for the definition of a group of neurons that can be activated externally, managed by a hardware block responsible for converting the received signals into spikes that are injected directly into those specific neurons.

Figure 2. Schematic of the external spike input module hardware, detailing its individual blocks and connection with other modules.

The conversion and injection of spikes into the system must take into account the real-time processing performed by the architecture. To achieve this, a First-In First-Out (FIFO) memory stores the data until the corresponding execution cycle requires it, once every time step (typically 1 ms). Data is transmitted from an external hardware block and, to increase speed, each 8-bit word is encoded into two 4-bit packs that are transmitted in parallel and processed in the architecture by a decoder block. Each bit is directly connected to a destination register bit, reducing the number of clock cycles required per word and allowing data transmission fast enough to ensure real-time operation.
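The packing scheme described above can be mimicked in software as follows. This is only a behavioral sketch: the nibble order (high pack first), the function names, and the use of a `deque` as the FIFO are assumptions made for illustration, not details of the HEENS hardware.

```python
from collections import deque


def split_byte(value):
    """Split one 8-bit word into two 4-bit packs (assumed order: high, low)."""
    if not 0 <= value <= 0xFF:
        raise ValueError("value must fit in 8 bits")
    return (value >> 4) & 0xF, value & 0xF


def join_nibbles(high, low):
    """Decoder side: rebuild the 8-bit word from its two 4-bit packs."""
    return ((high & 0xF) << 4) | (low & 0xF)


# The FIFO holds encoded words until the execution cycle that consumes them.
fifo = deque()
for event in (0xA7, 0x03, 0xFF):
    fifo.append(split_byte(event))

decoded = [join_nibbles(*fifo.popleft()) for _ in range(len(fifo))]
print(decoded)  # [167, 3, 255]
```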

Data can come from various sources, typically attached sensors, but for this work the MNIST dataset (LeCun et al., 1998) is utilized and externally generated, where each event has been encoded in an 8-bit word. An explanation of the dataset management is provided in Section 2.3.

2.2 Spiking neural and synaptic model

2.2.1 SNN model

As mentioned before, arbitrary spiking neural models can be implemented in HEENS. The choice depends mainly on two factors: the degree of biological realism and the computational efficiency for the application. Considering the second factor, the LIF model (Abbott, 1999) has been selected. This well-known model is recognized for its simplicity and low computational cost, and is described by Equations 1, 2.

$$\tau_m \frac{dV}{dt} = -\left(V(t) - V_{\mathrm{rest}}\right) + R \cdot I(t) \tag{1}$$

$$\text{If } V(t) \geq V_{\mathrm{th}} \text{ then } V(t) \leftarrow V_{\mathrm{rest}} \tag{2}$$

where $V(t)$ is the membrane potential, $V_{\mathrm{rest}}$ is the resting membrane potential, $\tau_m$ is the membrane time constant, $R$ is the membrane resistance, $I(t)$ is the synaptic input current and $V_{\mathrm{th}}$ is the threshold voltage. When the membrane potential crosses this threshold value, the neuron fires, and the membrane potential is reset to $V_{\mathrm{rest}}$. The calculation of the synaptic input current is represented by Equations 3–5.

$$I(t) = k \cdot g_{\mathrm{ex}}(t) + k \cdot g_{\mathrm{ih}}(t) \tag{3}$$

$$\tau_{\mathrm{ex}} \frac{dg_{\mathrm{ex}}}{dt} = -g_{\mathrm{ex}}, \qquad \tau_{\mathrm{ih}} \frac{dg_{\mathrm{ih}}}{dt} = -g_{\mathrm{ih}} \tag{4}$$

$$g_{\mathrm{ex}} = \sum_{i=1}^{N_{\mathrm{ex}}} w_i \cdot \delta(t - t_i), \qquad g_{\mathrm{ih}} = \sum_{i=1}^{N_{\mathrm{ih}}} w_i \cdot \delta(t - t_i) \tag{5}$$

where $I(t)$ represents the total input current received by the neuron at time $t$, computed as the sum of the excitatory and inhibitory synaptic currents. The constant $k$ is the synaptic potential difference, which scales the impact of the excitatory and inhibitory synaptic conductances on the membrane potential of the neuron. The excitatory synaptic conductance, $g_{\mathrm{ex}}(t)$, decays exponentially with time constant $\tau_{\mathrm{ex}}$, while the inhibitory synaptic conductance, $g_{\mathrm{ih}}(t)$, follows a similar exponential decay with time constant $\tau_{\mathrm{ih}}$. The weights $w_i$ denote the strength of individual synapses, and $N_{\mathrm{ex}}$ and $N_{\mathrm{ih}}$ represent the total number of excitatory and inhibitory synapses, respectively. Finally, $t_i$ represents the activation time of each synapse, indicating when a synaptic event occurs. Together, these variables describe the dynamics of the synaptic inputs and their collective influence on the neuron's total input current.
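To make the interplay of Equations 1 to 5 concrete, the following is a minimal floating-point sketch of one time step of the model, using forward-Euler integration. It is not the HEENS assembler implementation: the integration scheme, the order of the updates within a step, the convention that inhibitory weights are negative, and all parameter values are assumptions chosen for illustration.

```python
def lif_step(v, g_ex, g_ih, w_ex_in, w_ih_in, p, dt=1.0):
    """Advance one LIF neuron by dt (ms). Returns (v, g_ex, g_ih, spiked)."""
    # Eq. 5: weights of the spikes arriving in this step add to the conductances.
    g_ex += w_ex_in
    g_ih += w_ih_in          # assumed negative for inhibitory synapses
    # Eq. 3: total synaptic input current.
    i_syn = p["k"] * g_ex + p["k"] * g_ih
    # Eq. 1: leaky integration of the membrane potential (forward Euler).
    v += dt / p["tau_m"] * (-(v - p["v_rest"]) + p["r"] * i_syn)
    # Eq. 4: exponential decay of both conductances (forward Euler).
    g_ex -= dt / p["tau_ex"] * g_ex
    g_ih -= dt / p["tau_ih"] * g_ih
    # Eq. 2: threshold crossing and reset.
    spiked = v >= p["v_th"]
    if spiked:
        v = p["v_rest"]
    return v, g_ex, g_ih, spiked


# Placeholder parameters (mV, ms); not the values used in this work.
PARAMS = {"tau_m": 20.0, "v_rest": -65.0, "v_th": -52.0, "r": 1.0,
          "k": 1.0, "tau_ex": 5.0, "tau_ih": 10.0}


def run(n_steps, w_ex_in):
    """Drive one neuron with the same excitatory input at every step."""
    v, g_ex, g_ih = PARAMS["v_rest"], 0.0, 0.0
    spikes = []
    for _ in range(n_steps):
        v, g_ex, g_ih, s = lif_step(v, g_ex, g_ih, w_ex_in, 0.0, PARAMS)
        spikes.append(s)
    return v, spikes


if __name__ == "__main__":
    _, spikes = run(100, w_ex_in=10.0)
    print(sum(spikes), "spikes in 100 ms")
```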

2.2.2 Plasticity mechanism

In artificial neural networks (ANNs), learning techniques typically employ static algorithms to adjust connection weights based on specific training data, without dynamically incorporating the timing observed in biological neural network synapses (Abdolrasol et al., 2021). In contrast, synaptic plasticity mechanisms are dynamic processes through which connections between neurons can modify their strength and efficacy in response to neuronal activity, crucial for neural system functionality, particularly in learning and memory processes (Citri and Malenka, 2008). Several mechanisms contribute to synaptic plasticity dynamics, including Long-Term Potentiation (LTP), which strengthens synapses through repeated activation of presynaptic neurons, and Long-Term Depression (LTD), which weakens synapses during periods of reduced synaptic activity (Barco et al., 2008). Another form is Spike-Timing-Dependent Plasticity (STDP), which adjusts synaptic strength based on the precise timing of spikes between presynaptic and postsynaptic neurons.

The plasticity mechanism implemented in this work is STDP, described by Equation 6. It is a hardware-friendly version of the original rule of Bliss and Gardner-Medwin (1973) that uses two new variables, $a_{\mathrm{pre}}$ and $a_{\mathrm{post}}$, known as pre- and postsynaptic activity “traces” (Stimberg et al., 2019). These traces track the temporal activity patterns of the presynaptic and postsynaptic neurons, respectively, and $\tau_{\mathrm{pre}}$ and $\tau_{\mathrm{post}}$ are the time constants that determine the rate at which the traces decay over time. Because the HEENS architecture processes spikes individually, it is important to highlight that the traces apply to individual spikes, not to spike-rate averages.

$$\tau_{\mathrm{pre}} \frac{da_{\mathrm{pre}}}{dt} = -a_{\mathrm{pre}}, \qquad \tau_{\mathrm{post}} \frac{da_{\mathrm{post}}}{dt} = -a_{\mathrm{post}} \tag{6}$$

The weight or strength of the connection, denoted by $w$, adjusts dynamically with each presynaptic or postsynaptic spike, as shown in Equations 7, 8, in response to the temporal activity of the neurons. Equation 9 ensures that the synaptic weight $w$ remains within a biologically plausible range, without exceeding a maximum value $w_{\max}$ and without becoming negative.

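$$a_{\mathrm{pre}} \leftarrow a_{\mathrm{pre}} + \Delta a_{\mathrm{pre}}, \qquad w \leftarrow w + a_{\mathrm{post}} \tag{7}$$

$$a_{\mathrm{post}} \leftarrow a_{\mathrm{post}} + \Delta a_{\mathrm{post}}, \qquad w \leftarrow w + a_{\mathrm{pre}} \tag{8}$$

$$w \leftarrow \begin{cases} w_{\max} & \text{if } w \geq w_{\max}, \\ 0 & \text{if } w \leq 0. \end{cases} \tag{9}$$

where $\Delta a_{\mathrm{pre}}$ and $\Delta a_{\mathrm{post}}$ represent the increment and decrement amounts for the synaptic plasticity variables, respectively.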
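The trace-based rule of Equations 6 to 9 can be sketched for a single synapse as below. This is a floating-point illustration only: the forward-Euler decay, the order in which simultaneous pre- and postsynaptic spikes are handled, and the parameter values are assumptions, with $\Delta a_{\mathrm{post}}$ taken as negative so that it acts as the decrement mentioned above.

```python
def stdp_step(w, a_pre, a_post, pre_spike, post_spike, p, dt=1.0):
    """Advance one synapse by dt (ms). Returns (w, a_pre, a_post)."""
    # Eq. 6: both traces decay exponentially (forward Euler).
    a_pre -= dt / p["tau_pre"] * a_pre
    a_post -= dt / p["tau_post"] * a_post
    # Eq. 7: a presynaptic spike bumps a_pre and applies the post trace to w.
    if pre_spike:
        a_pre += p["d_a_pre"]
        w += a_post
    # Eq. 8: a postsynaptic spike bumps a_post and applies the pre trace to w.
    if post_spike:
        a_post += p["d_a_post"]
        w += a_pre
    # Eq. 9: keep the weight inside [0, w_max].
    w = min(max(w, 0.0), p["w_max"])
    return w, a_pre, a_post


# Placeholder parameters; d_a_post is negative (the "decrement").
STDP = {"tau_pre": 20.0, "tau_post": 20.0,
        "d_a_pre": 0.01, "d_a_post": -0.0105, "w_max": 1.0}


def pair(first, gap_ms, w0=0.5):
    """Weight after one spike pair: 'pre' or 'post' fires first, then the other."""
    w, a_pre, a_post = w0, 0.0, 0.0
    for t in range(gap_ms + 1):
        pre = (t == 0) if first == "pre" else (t == gap_ms)
        post = (t == gap_ms) if first == "pre" else (t == 0)
        w, a_pre, a_post = stdp_step(w, a_pre, a_post, pre, post, STDP)
    return w


if __name__ == "__main__":
    print(pair("pre", 3), pair("post", 3))
```

With these placeholder values, a presynaptic spike followed by a postsynaptic one raises the weight, and the reverse order lowers it, which is the qualitative behavior expected from STDP.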

Considering that a competitive learning method is employed, with the aim of each neuron or group of neurons to specialize in recognizing specific features, it is important to introduce a method to control and restrict the unlimited growth of weights between neurons, as mentioned in the work of Goodhill and Barrow (1994). To achieve this, a rule of synaptic scaling plasticity based on subtraction was implemented to generate competition among synapses. This mechanism normalizes synaptic strength after each training digit and follows Equations 10, 11.

$$fac_w = \frac{W_{\mathrm{norm}} - \sum_{i=1}^{N_{\mathrm{stdp}}} w_i}{N_{\mathrm{stdp}}} \tag{10}$$

where $fac_w$ is the normalization factor of the synaptic weight, $W_{\mathrm{norm}}$ represents the target value of normalization, $w_i$ indicates the synaptic weight of the $i$-th synapse and $N_{\mathrm{stdp}}$ represents the total number of synapses subject to the STDP plasticity rule. Such normalization techniques are already present in various image processing works, such as Krizhevsky et al. (2012).
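A possible reading of this subtractive normalization is sketched below. It assumes that Equation 10 is the difference between the target and the current weight sum, divided by the number of STDP synapses, and that the resulting factor is then added to every weight; that second step is an assumption made for this sketch, since the weight update itself is not reproduced in this section. The function name is hypothetical.

```python
def normalize_subtractive(weights, w_norm):
    """Shift all weights by the same amount so that they sum to w_norm."""
    # Assumed reading of Eq. 10: (target - current sum) / number of synapses.
    fac_w = (w_norm - sum(weights)) / len(weights)
    # Assumed update: the same factor is added to each weight.
    return [w + fac_w for w in weights]


if __name__ == "__main__":
    print(normalize_subtractive([0.2, 0.4, 0.9], w_norm=1.2))
```

Because every synapse is shifted by the same amount, weak synapses lose proportionally more than strong ones, which is what creates competition among synapses. Any clipping to the allowed weight range would have to be applied afterwards and is not shown here.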

2.2.3 Homeostasis

Homeostasis is the process by which biological systems maintain stability by autonomously adapting to fluctuations in external conditions (Billman, 2020). In the context of neural networks, homeostasis ensures that neurons maintain balanced firing rates and receptive fields, preventing individual neurons from dominating neural responses. The homeostasis mechanism used here follows the same approach as Diehl and Cook (2015). The goal is to ensure that all neurons have similar firing rates but different receptive fields. To prevent a single neuron in the excitatory layer from dominating the response, the firing threshold ($V_{\mathrm{th}}$) is adapted according to Equations 12, 13:

$$V_{\mathrm{th-ad}} = V_{\mathrm{th}} + \theta e^{-t/\tau_{\mathrm{hom}}} \tag{12}$$

$$\text{If } V(t) \geq V_{\mathrm{th-ad}} \text{ then } \theta \leftarrow \theta + \Delta\theta \tag{13}$$

In the homeostasis equations, $V_{\mathrm{th-ad}}$ represents the adaptive threshold, $V_{\mathrm{th}}$ denotes the original threshold, and $\theta$ indicates the magnitude of the adaptation, which increases by $\Delta\theta$ with each spike and decays exponentially according to the time constant $\tau_{\mathrm{hom}}$. Consequently, as a neuron fires more frequently, its threshold progressively rises, so that greater input is needed for subsequent firing. This iterative process persists until $\theta$ decreases to a satisfactory level.
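The adaptive threshold can be sketched as a per-step update, shown below in floating point. The step-wise multiplicative decay is one way of discretizing the exponential term of Equation 12 and is an assumption of this sketch, as are the function name and the parameter values.

```python
import math


def adaptive_threshold_step(theta, spiked, v_th, tau_hom, d_theta, dt=1.0):
    """Advance the adaptation variable by dt (ms). Returns (theta, v_th_ad)."""
    # Eq. 12: theta decays exponentially with time constant tau_hom.
    theta *= math.exp(-dt / tau_hom)
    # Eq. 13: every spike of the neuron raises theta by d_theta.
    if spiked:
        theta += d_theta
    return theta, v_th + theta


if __name__ == "__main__":
    # Placeholder values (mV, ms); not the ones used in this work.
    theta, v_th = 0.0, -52.0
    for t in range(10):
        theta, v_th_ad = adaptive_threshold_step(
            theta, spiked=(t % 2 == 0), v_th=v_th, tau_hom=1000.0, d_theta=0.05)
    print(round(v_th_ad, 4))
```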

2.3 Handwritten digit recognition

2.3.1 MNIST dataset

The set of images used for the application corresponds to the MNIST dataset, which consists of grayscale images of handwritten digits from 0 to 9. It contains 60,000 training examples and 10,000 test examples. MNIST is widely used in the field of Optical Character Recognition (OCR), where, besides numeric digits, applications for recognizing various alphabets and languages are also notable, such as the Latin alphabet (Singh and Amin, 1999; Darapaneni et al., 2020), Farsi-Arabic (Mozaffari and Bahar, 2012; Tavoli et al., 2018), and Japanese (Matsumoto et al., 2001). The MNIST dataset was chosen not only for its simplicity and clarity in digit recognition tasks but also because it serves as a standard benchmark dataset in the field of machine learning and neuromorphic computing (Guo et al., 2022; Liu et al., 2022; Tao et al., 2023). This selection allows for a direct comparison of the performance of the HEENS architecture with existing methodologies and provides a baseline for evaluating its capabilities.

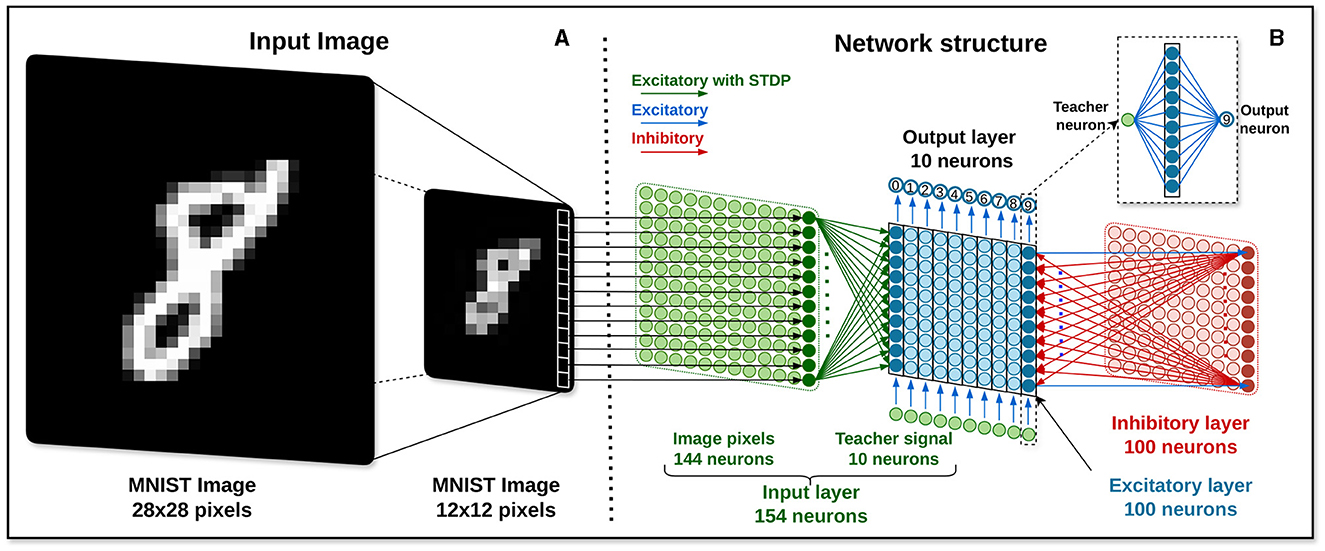

Although each image has a size of 28 × 28 pixels, due to limitations regarding the maximum number of synapses and implementable neurons in the HEENS architecture, as explained in Section 2.1, the size of the images is reduced using the .resize((width, height)) function from PIL (Python Imaging Library). This function resizes the image to the values assigned to the width and height variables, here using the nearest-neighbor interpolation technique (GeeksforGeeks, 2022). For this application, considering that the maximum number of neurons that can be implemented per node in HEENS is 16 × 10, the dimensions of each image are configured to be 12 × 12 pixels, resulting in a total of 144 pixels per input image. Each pixel corresponds to a neuron in the input layer, as illustrated in Figure 3A.

Figure 3. Image processing. (A) Input image encoding. (B) Network architecture.
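To show what nearest-neighbor downsampling from 28 × 28 to 12 × 12 amounts to, the following dependency-free sketch picks, for every output pixel, the input pixel closest to its center. It illustrates the technique only: the sampling positions are an assumption and are not guaranteed to match those of the PIL routine used in this work, and the function name is hypothetical.

```python
def resize_nearest(img, new_height, new_width):
    """Nearest-neighbor resize of a 2D list of pixel values."""
    old_height, old_width = len(img), len(img[0])
    out = []
    for r in range(new_height):
        # Source row closest to the center of output row r.
        src_r = min(old_height - 1, int((r + 0.5) * old_height / new_height))
        row = []
        for c in range(new_width):
            src_c = min(old_width - 1, int((c + 0.5) * old_width / new_width))
            row.append(img[src_r][src_c])
        out.append(row)
    return out


if __name__ == "__main__":
    # Synthetic 28 x 28 "image" whose pixel value encodes its own position.
    image = [[r * 28 + c for c in range(28)] for r in range(28)]
    small = resize_nearest(image, 12, 12)
    flat = [p for row in small for p in row]
    print(len(flat))  # 144 values, one per input-layer neuron
```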

2.3.2 Input encoding

The network receives input for 350 ms in the form of Poisson-distributed spike trains, with firing rates corresponding to the pixel intensity of MNIST images. Here, the intensity ranges from 0 for black to a maximum of 255 for white, enabling an accurate representation of luminosity levels within the images. Moreover, a 150 ms pause separates consecutive digit presentations, aiding in the clear differentiation of inputs.
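The rate coding described above can be sketched as follows, using one Bernoulli draw per 1 ms step as the usual discrete-time approximation of a Poisson process. The maximum firing rate is not given in this section, so `max_rate_hz` is a hypothetical parameter, and the linear mapping from intensity to rate is likewise an assumption.

```python
import random


def poisson_spike_train(intensity, max_rate_hz, duration_ms=350, dt_ms=1.0,
                        rng=None):
    """Return a list of 0/1 spikes for one pixel over one presentation."""
    rng = rng or random.Random()
    # Assumed linear mapping: intensity 255 fires at max_rate_hz, 0 never fires.
    rate_hz = max_rate_hz * intensity / 255
    # Probability of a spike in one time step.
    p_spike = rate_hz * dt_ms / 1000.0
    n_steps = int(duration_ms / dt_ms)
    return [1 if rng.random() < p_spike else 0 for _ in range(n_steps)]


if __name__ == "__main__":
    rng = random.Random(0)
    bright = poisson_spike_train(255, max_rate_hz=60, rng=rng)
    dark = poisson_spike_train(0, max_rate_hz=60, rng=rng)
    print(sum(bright), sum(dark))
```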

In the training process, a supervised learning approach is adopted. A 200 Hz constant spike rate transmits the teacher signal, synchronized with the spikes generated by input images. This signal is conveyed through a designated input neuron, chosen based on the current class label being trained. This strategy is activated exclusively during the learning phase and is deactivated during testing. It facilitates the gradual enhancement of the network's performance in classifying various image classes, even in the absence of explicit error correction. Furthermore, it is worth noting that the teaching signal follows the same cycle duration of 350 ms with a 150 ms pause as the input presentation cycle.
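The timing of the teacher signal can be illustrated with a short sketch. With a 1 ms time step, a constant 200 Hz rate corresponds to one spike every 5 ms, that is, 70 spikes over the 350 ms presentation window. The phase of the first spike, the function names, and the one-neuron-per-label indexing are assumptions of this sketch.

```python
def teacher_spike_train(rate_hz=200, duration_ms=350, pause_ms=150):
    """Regular spike train (1 ms steps) followed by a silent pause."""
    period_ms = round(1000 / rate_hz)          # 5 ms at 200 Hz
    active = [1 if t % period_ms == 0 else 0 for t in range(duration_ms)]
    return active + [0] * pause_ms


def teacher_inputs(label, n_classes=10, training=True):
    """One train per teacher neuron; only the labeled one is driven."""
    silent = [0] * 500
    if not training:                            # teacher is off during testing
        return [silent[:] for _ in range(n_classes)]
    return [teacher_spike_train() if k == label else silent[:]
            for k in range(n_classes)]


if __name__ == "__main__":
    trains = teacher_inputs(label=3)
    print([sum(t) for t in trains])  # only index 3 is non-zero
```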

2.4 Network architecture

The proposed network architecture consists of 4 layers, as illustrated in Figure 3B: the input layer, the excitatory neuron layer, the inhibitory neuron layer, and the output layer. It takes as reference the network architectures from Diehl and Cook (2015), Hao et al. (2020), and Lee and Sim (2023), with some variations.

Each pixel in the input image is connected one-to-one to one of the 144 neurons of the input layer (in green). These neurons are fully connected to the excitatory layer; all of these connections are excitatory and have a synaptic plasticity mechanism enabled during the learning stage. The main function of these 144 neurons is to transmit the encoded pulse trains from each image to the next layer.

In addition, the input layer contains 10 neurons (in green) driven by external input, used as teacher signals for supervised learning. Each of these neurons is excitatorily connected to a unique 10-neuron group of the excitatory layer. These reference signals have no configured plasticity mechanism and serve only to provide external information to guide the learning of the network without adjusting their own synaptic weights. The value of these connections is the maximum STDP weight defined in the constant wmax.

The excitatory layer (in blue) consists of 100 LIF-type neurons with homeostasis enabled during the learning phase. In addition to the mentioned connections from the input layer, it also interacts with the inhibitory layer and the output layer. Each excitatory neuron establishes a direct excitatory connection with a corresponding inhibitory neuron characterized by a strong connection strength. In contrast, each inhibitory neuron forms inhibitory connections with all excitatory neurons in the layer, except the one to which it is directly connected. This setup aims to utilize the “winner take all” technique, where the first firing neuron inhibits the rest, aiding in classifying and selectively focusing the network toward the desired input. In the output layer, each column of 10 neurons is connected to an output neuron associated with a specific class (see Figure 3B inset). Thus, the first neurons are associated with 0, the following ones with 1, and so on up to 9. The weight value used is 8 times wmax, a value utilized in Hao et al. (2020) to increase the activity of the layer upon receiving spikes.

The inhibitory layer (in red), which consists of 100 LIF-type neurons with disabled homeostasis, plays a crucial role in regulating the activity of neurons within the excitatory layer. Each neuron within this layer receives input from an excitatory neuron. The synaptic weights of these connections must be carefully adjusted to prompt the activation of inhibitory neurons quickly upon receiving an excitatory pulse, ensuring timely inhibition and contributing to the network's ability to regulate and control its activity dynamics. At the same time, the weights of the inhibitory connections must be adjusted to achieve a balance that prevents the complete suppression of the participation of neighboring neurons in the computation of the network.

Finally, the output layer consists of 10 LIF neurons with both homeostasis and STDP disabled. This layer serves as the final processing stage in the neural network, with each neuron dedicated to representing one of the ten possible classified digits.
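The connectivity described in this section can be summarized by enumerating the connections of each projection, as in the sketch below. The index conventions are assumptions: neurons are numbered from 0 within each layer, and excitatory neurons 10k to 10k+9 are taken to form the group associated with digit k. Weights are omitted, and the dictionary keys are hypothetical names.

```python
N_PIXELS, N_TEACHER, N_EXC, N_INH, N_OUT = 144, 10, 100, 100, 10
GROUP = N_EXC // N_OUT  # 10 excitatory neurons per digit class


def build_connections():
    """Return (source, target) index pairs for every projection."""
    return {
        # Pixel neurons are fully connected to the excitatory layer (plastic).
        "input_to_exc": [(i, e) for i in range(N_PIXELS) for e in range(N_EXC)],
        # Teacher neuron k drives its own group of excitatory neurons (fixed).
        "teacher_to_exc": [(k, k * GROUP + j)
                           for k in range(N_TEACHER) for j in range(GROUP)],
        # One-to-one excitation of the matching inhibitory neuron.
        "exc_to_inh": [(e, e) for e in range(N_EXC)],
        # Each inhibitory neuron inhibits every excitatory neuron but its own.
        "inh_to_exc": [(i, e) for i in range(N_INH)
                       for e in range(N_EXC) if e != i],
        # Each group of excitatory neurons feeds the output neuron of its class.
        "exc_to_out": [(e, e // GROUP) for e in range(N_EXC)],
    }


if __name__ == "__main__":
    conns = build_connections()
    for name, pairs in conns.items():
        print(name, len(pairs))
    # Incoming connections of one excitatory neuron under this reading.
    fan_in = sum(1 for name in ("input_to_exc", "teacher_to_exc", "inh_to_exc")
                 for _, tgt in conns[name] if tgt == 0)
    print("fan-in of excitatory neuron 0:", fan_in)  # 144 + 1 + 99 = 244
```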

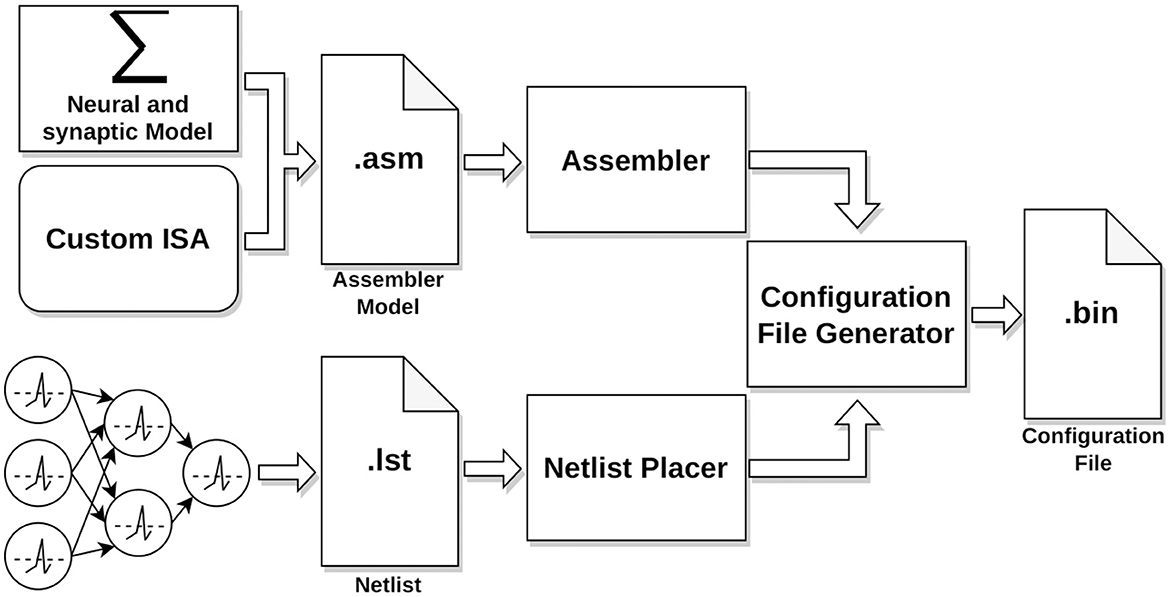

2.5 Neural and synaptic model implementation on HEENS

The implementation of a neural application in HEENS involves several stages, as shown in Figure 4. Every application requires two files: the assembler model, which contains the neural and synaptic algorithms, and the netlist. Both files are compiled and, using custom-made Python tools, a configuration file is generated. This file is then transmitted from the PC to the HEENS hardware through the Ethernet interface during an initial configuration phase.

Figure 4. Workflow: neural modeling to configuration file generation.

2.5.1 Assembler model

The neural and synaptic model algorithms are encoded in a text file in assembly language based on the HEENS custom Instruction Set Architecture (ISA). The ISA has 64 defined instructions, optimized for working with spiking neural networks. HEENS operates with 16-bit precision integer arithmetic, which proves to be a good trade-off between accuracy and resources due to its programmability and multi-model capability. Lower-precision solutions are often limited to specific applications or need to be combined with higher resolution formats to achieve desired outcomes (Yun et al., 2023). On the other hand, using higher resolutions significantly increases resource usage, which may compromise the scalability and power efficiency of the solution (Das et al., 2018; Narang et al., 2018). It was experimentally found that the best suited resolution to operate is 10 μV per Least Significant Bit (LSB).
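The consequence of a 10 μV per LSB resolution in 16-bit integer arithmetic can be worked out with a short sketch. It assumes signed two's-complement values with saturation, which is not stated explicitly here; under that assumption the representable range is about −327.68 mV to +327.67 mV. The function names are hypothetical.

```python
LSB_UV = 10                      # resolution: 10 microvolts per LSB
INT16_MIN, INT16_MAX = -32768, 32767   # assumed signed 16-bit range


def mv_to_fixed(millivolts):
    """Quantize a voltage in mV to a 16-bit integer, saturating at the limits."""
    raw = round(millivolts * 1000 / LSB_UV)
    return max(INT16_MIN, min(INT16_MAX, raw))


def fixed_to_mv(raw):
    """Convert a 16-bit integer back to millivolts."""
    return raw * LSB_UV / 1000


if __name__ == "__main__":
    print(mv_to_fixed(-65.0))                             # -6500
    print(fixed_to_mv(INT16_MIN), fixed_to_mv(INT16_MAX)) # -327.68 327.67
    print(mv_to_fixed(0.004))                             # 0: below one LSB
```

The last line illustrates why very slow dynamics are hard to represent at this resolution: a change smaller than half an LSB in one step rounds to zero.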

For the implementation of the model, Equations 1–13 were used, which describe soma dynamics, synaptic plasticity, and homeostasis. However, in order to support the long time constants required by homeostasis, a modification was made to Equation 12. Undersampling was implemented for its decay calculation, which means that the exponential term is calculated only once every certain number of steps instead of at every time step. Because the decay is very slow, the variation over short time intervals is so small that it cannot be detected at the resolution of the architecture. The constant used t
