In this section, we present a comprehensive evaluation of the proposed MWFNet through a series of experiments. First, we introduce the validation datasets and the experimental setup. We then report the performance of our method and compare it with existing methods. We also verify the robustness and generalization capability of MWFNet. Finally, we conduct ablation experiments to analyze how the individual components of MWFNet affect the final classification performance.

3.1 Datasets

NeuroMorpho.org (Ascoli et al., 2007) is one of the largest web-accessible repositories of digitally reconstructed neurons. It contains 183,740 neurons (version 8.2.36) from multiple brain regions, species, and cell types, contributed by over 900 laboratories worldwide. Note that the quality of the digital reconstructions varies: some neurons contain complete dendrites and axons, whereas others have only partial dendrites or lack axons entirely. Moreover, neurons of different types can exhibit similar morphologies, while neurons of the same type can differ in morphology (Li et al., 2021), which makes neuron identification more challenging. To ensure an objective and fair comparison between our method and existing methods, all neuron data were randomly selected from NeuroMorpho.org (Ascoli et al., 2007) without considering the physical integrity of the neurons.

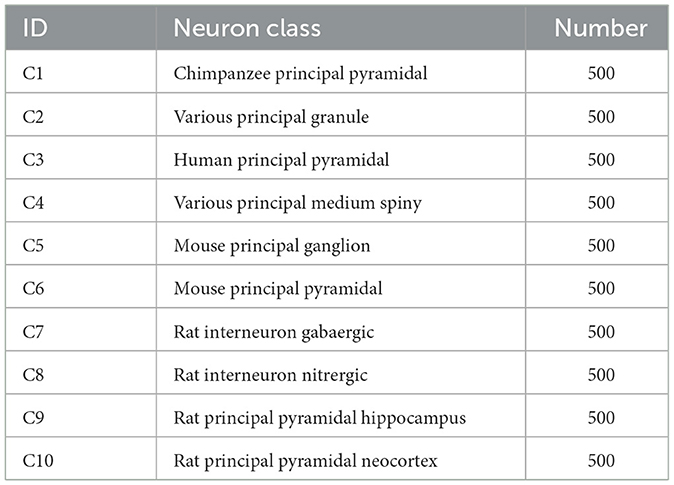

3.1.1 Dataset 1

As presented in Table 1, this dataset consists of 10 classes of neurons from multiple brain regions and species. It contains pyramidal cells (from chimpanzee, mouse, rat, and human), pyramidal cells from rat neocortex and hippocampus, and rat GABAergic and nitrergic interneurons. Granule cells and medium spiny neurons from various species are also included. Each class contains 500 neurons.

Table 1. Neuron classes in Dataset 1.

3.1.2 Dataset 2

To verify the generalization of our method, we also use a second validation dataset in which neurons are grouped by cell type only. It consists of 1,802 digitally reconstructed neurons belonging to five classes, namely ganglion, granule, motor, Purkinje, and pyramidal cells, with 500, 500, 95, 208, and 499 samples, respectively. For clarity, these five neuron types are denoted as GA, GR, MO, PU, and PC, respectively.

3.2 Experiment settings

3.2.1 Implementation details

In data preprocessing, the projection interval ϕ is set to 45°. Consequently, the number of view images N is 8 (360°/45°), and each view image is resized to 224 × 224 pixels. In the view descriptor generation module, ResNet-50 is employed to extract raw view features. The learnable GeM pooling parameter pc is initialized to 3. T1 and T2 in GVMM and GVEM are set to 0.9 and 0.8, respectively. The MWFNet framework is implemented in PyTorch, and the model is trained on two NVIDIA GeForce RTX 2080 Ti GPUs with a batch size of 16. The Adam optimizer with a learning rate of 0.001 is used to minimize the cross-entropy loss; all other parameters are left at their default values.
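The view count and the GeM pooling step described above can be sketched in plain Python. This is a minimal illustration assuming the standard GeM definition, f_c = ((1/|X_c|) Σ x^p)^(1/p) over the activations X_c of channel c. The function names are hypothetical, p is a fixed argument here rather than the learnable pc used in MWFNet, and the PyTorch/ResNet-50 pipeline is not reproduced.

```python
import math
from typing import List


def num_views(phi_deg: float) -> int:
    """Number of projection views for one full rotation in steps of phi_deg degrees."""
    return round(360.0 / phi_deg)


def gem_pool(channel: List[float], p: float = 3.0, eps: float = 1e-6) -> float:
    """Generalized-mean (GeM) pooling of the activations of one channel.

    p = 1 reduces to average pooling; a large p approaches max pooling.
    Activations are clamped to eps so the p-th power and p-th root are defined.
    """
    clamped = [max(x, eps) for x in channel]
    mean_of_powers = sum(x ** p for x in clamped) / len(clamped)
    return mean_of_powers ** (1.0 / p)


def gem_descriptor(feature_map: List[List[float]], p: float = 3.0) -> List[float]:
    """Pool a C-channel feature map (one flattened H*W list per channel) into a C-dim vector."""
    return [gem_pool(channel, p) for channel in feature_map]


# Usage: eight views for phi = 45 degrees, and a toy 2-channel, 2x2 map pooled with p = 3.
print(num_views(45))  # 8
print(gem_descriptor([[0.1, 0.4, 0.2, 0.9], [0.0, 0.0, 0.5, 0.5]]))
```

Initializing p at 3 places GeM between average pooling (p = 1) and max pooling (p → ∞), so strong activations are emphasized without discarding the rest of the map.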

In addition, we use 10-fold cross-validation to assess the performance of MWFNet. The dataset is randomly divided into 10 equal-sized folds. In each iteration, one fold serves as the test set while the remaining nine serve as the training set. This process is repeated 10 times so that each fold is used as the test set exactly once. Consequently, MWFNet is trained on 10 distinct training sets and evaluated on 10 separate test sets, and the average of the 10 validation results is taken as the overall performance of MWFNet.
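The fold construction described above can be sketched as follows. This is a minimal sketch assuming a plain (non-stratified) random split, as the text describes; the function names and the seed are hypothetical.

```python
import random
from typing import List, Sequence, Tuple


def k_fold_splits(n_samples: int, k: int = 10, seed: int = 0) -> List[Tuple[List[int], List[int]]]:
    """Shuffle sample indices once, cut them into k near-equal folds, and return
    one (train_indices, test_indices) pair per fold."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    # Spread the remainder over the first folds so sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    folds, start = [], 0
    for size in fold_sizes:
        folds.append(indices[start:start + size])
        start += size
    splits = []
    for i, test in enumerate(folds):
        # Training set = every fold except the current test fold.
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        splits.append((train, test))
    return splits


def cross_validated_mean(scores: Sequence[float]) -> float:
    """Average the per-fold results into one overall estimate."""
    return sum(scores) / len(scores)


# Usage with the 1,802 neurons of Dataset 2.
splits = k_fold_splits(1802, k=10, seed=0)
print(sorted({len(test) for _, test in splits}))  # [180, 181]
```

Because 1,802 is not divisible by 10, the folds can only be approximately equal: the sketch gives two folds 181 samples and the remaining eight 180.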

3.2.2 Evaluation metrics

Overall accuracy is used to evaluate the classification performance of our method. The F1 score serves as an additional evaluation metric, and classification confusion matrices are provided to show the per-class results in detail.
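The metrics above can be computed from a confusion matrix as in the sketch below. It assumes that the reported F1 score is the macro average (the unweighted mean of per-class F1 scores), which the phrase "average F1 score" suggests but the text does not state; the helper names are hypothetical.

```python
from typing import List, Sequence


def confusion_matrix(y_true: Sequence[int], y_pred: Sequence[int], n_classes: int) -> List[List[int]]:
    """Rows are true classes, columns are predicted classes."""
    cm = [[0] * n_classes for _ in range(n_classes)]
    for t, p in zip(y_true, y_pred):
        cm[t][p] += 1
    return cm


def overall_accuracy(cm: List[List[int]]) -> float:
    """Fraction of samples on the diagonal (correctly classified)."""
    correct = sum(cm[i][i] for i in range(len(cm)))
    total = sum(sum(row) for row in cm)
    return correct / total


def per_class_f1(cm: List[List[int]]) -> List[float]:
    """One-vs-rest F1 = 2TP / (2TP + FP + FN) for each class."""
    n = len(cm)
    scores = []
    for c in range(n):
        tp = cm[c][c]
        fp = sum(cm[r][c] for r in range(n)) - tp  # predicted c, but another true class
        fn = sum(cm[c]) - tp                        # true class c, predicted as another
        denom = 2 * tp + fp + fn
        scores.append(2 * tp / denom if denom else 0.0)
    return scores


def macro_f1(cm: List[List[int]]) -> float:
    """Unweighted mean of the per-class F1 scores."""
    scores = per_class_f1(cm)
    return sum(scores) / len(scores)


# Usage on a toy 3-class example.
cm = confusion_matrix([0, 0, 1, 1, 2, 2], [0, 1, 1, 1, 2, 0], n_classes=3)
print(cm, overall_accuracy(cm), macro_f1(cm))
```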

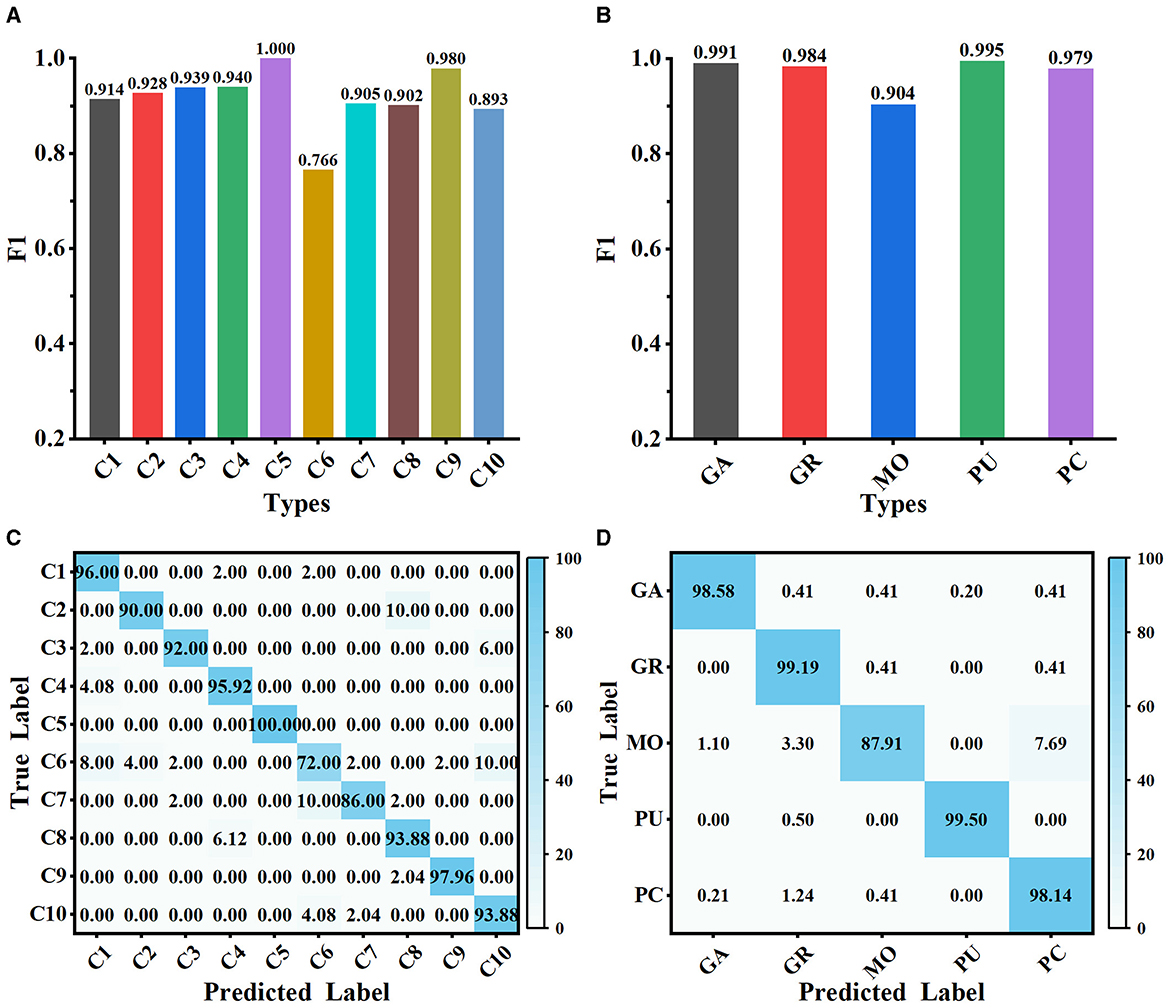

3.3 Classification performance of MWFNet

The proposed method is evaluated on Dataset 1 and Dataset 2, and the classification performance is shown in Figure 5. Our method achieves satisfactory results on the 10-class classification task, with an average accuracy of 91.73% and an F1 score of 0.917. Notably, the F1 scores of all classes on Dataset 1 except C6 exceed 0.890, implying that the extracted deep features effectively represent the differences among neuron classes (Figure 5A). Our method is less effective at distinguishing rat pyramidal cells (i.e., C6 type), which reach an F1 score of only 0.766. In addition, 10% of mouse pyramidal cells are misclassified as rat pyramidal cells (i.e., C10 type; Figure 5C). These pyramidal cells share morphological features such as apical dendrites, basal dendrites, and soma. While our method captures the overall structure of neuronal morphology well, it currently has limitations in identifying subtle morphological nuances, such as dendrite size and branching patterns. Our future work will focus on improving the representation of such subtle differences so that the method can accurately describe neuronal morphology both globally and locally. On Dataset 2, with 5 neuron types, our method performs even better, with an average F1 score of 0.971 (Figure 5B). The confusion matrices show high true prediction rates for the neuron types (Figures 5C, D), indicating that our method can precisely distinguish neurons using the instance-level descriptors.

Figure 5. Classification performance of MWFNet on both datasets. (A, B) are the F1 scores of each type evaluated on Dataset 1 and Dataset 2, respectively. (C, D) are the confusion matrices of classification on Dataset 1 and Dataset 2, respectively.

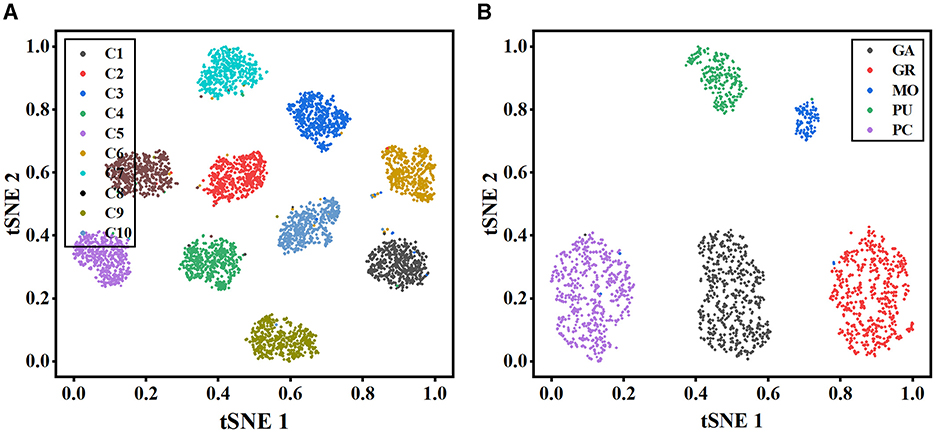

To further illustrate the effectiveness of our method, t-distributed stochastic neighbor embedding (t-SNE) (Van der Maaten and Hinton, 2008) is used to analyze the distribution of the high-dimensional features. Figure 6 depicts the distribution of the instance-level features extracted by MWFNet on both datasets. Despite the large intra-class variation and inter-class similarity in neuronal morphology, our method clearly separates each category into a distinct cluster. By enhancing the raw view features and reducing redundant information among views, the instance-level features learned by MWFNet support accurate identification of different neurons. Both the quantitative and the visual results demonstrate the superiority of our method in neuronal morphology analysis.

Figure 6. Visualization of the instance-level feature vectors using the t-SNE. (A, B) are the feature distributions of Dataset 1 and Dataset 2, respectively.

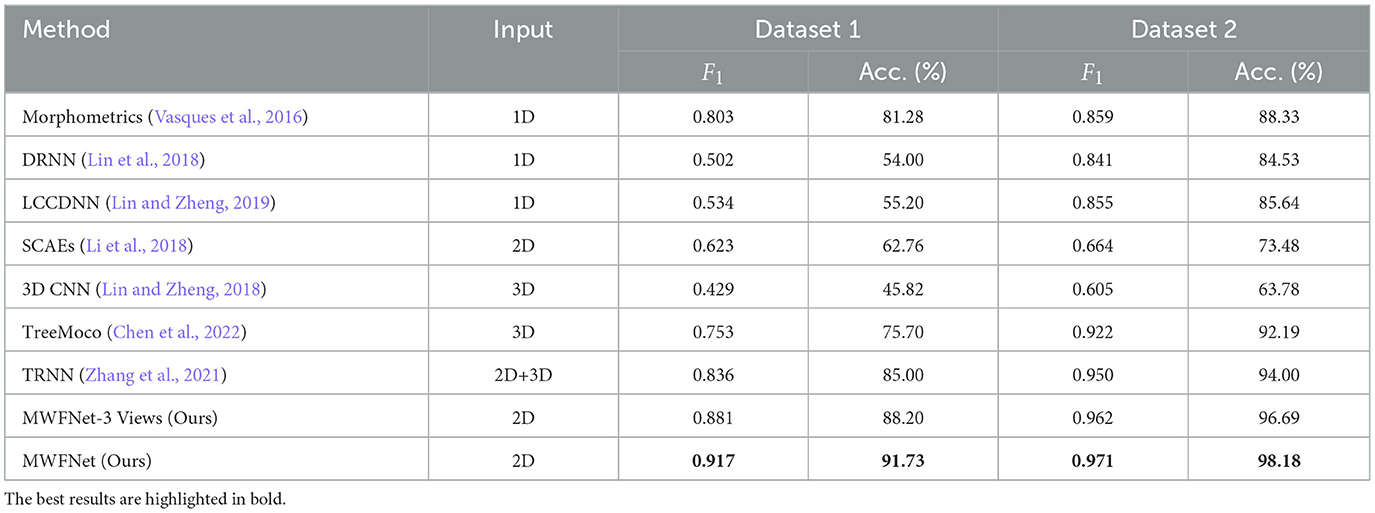

3.4 Comparison with other methods

To demonstrate the efficacy of our method, we compare it on both datasets with various methods, including Morphometrics (Vasques et al., 2016), 3D CNN (Lin and Zheng, 2018), DRNN (Lin et al., 2018), LCCDNN (Lin and Zheng, 2019), TRNN (Zhang et al., 2021), SCAEs (Li et al., 2018), and TreeMoCo (Chen et al., 2022). Specifically, Morphometrics uses 43 predefined morphometrics computed by L-Measure (Scorcioni et al., 2008) as feature descriptors; various supervised algorithms and training settings are then applied following the previous work (Vasques et al., 2016), and the best performance is reported. We also evaluate our method using only three orthogonal views (the x–y, y–z, and x–z planes) projected from the 3D neuron data as input; this configuration is referred to as MWFNet-3 Views. For a fair comparison, the other methods are trained using the same dataset and train-test split as our method, with their default parameters, and the best results are reported.
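The difference between the MWFNet-3 Views input and the default multi-view input can be illustrated on raw 3D node coordinates. This sketch is an illustration under stated assumptions, not the preprocessing of Section 2.1: it assumes rotation about the y axis, uses hypothetical function names, and omits rendering the projected points into 224 × 224 images.

```python
import math
from typing import Dict, List, Tuple

Point3 = Tuple[float, float, float]
Point2 = Tuple[float, float]


def orthogonal_views(points: List[Point3]) -> Dict[str, List[Point2]]:
    """Project 3D node coordinates onto the x-y, y-z and x-z planes by dropping one axis."""
    return {
        "x-y": [(x, y) for x, y, _ in points],
        "y-z": [(y, z) for _, y, z in points],
        "x-z": [(x, z) for x, _, z in points],
    }


def rotated_views(points: List[Point3], phi_deg: float = 45.0) -> List[List[Point2]]:
    """Rotate the points about the y axis in steps of phi_deg and project each
    rotated copy onto the x-y plane (the rotation axis is an assumption)."""
    views = []
    for k in range(round(360.0 / phi_deg)):
        theta = math.radians(k * phi_deg)
        c, s = math.cos(theta), math.sin(theta)
        # y-axis rotation: x' = x cos(theta) + z sin(theta), y' = y; z' is dropped.
        views.append([(c * x + s * z, y) for x, y, z in points])
    return views


# Usage: two hypothetical nodes of a reconstructed neuron.
nodes = [(1.0, 2.0, 3.0), (0.0, -1.0, 4.0)]
print(orthogonal_views(nodes)["y-z"])  # [(2.0, 3.0), (-1.0, 4.0)]
print(len(rotated_views(nodes)))       # 8
```

The three orthogonal views each discard one full axis, whereas the eight rotated views sample the depth axis from several directions, which is one way to read the advantage of multiple views reported below.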

As presented in Table 2, the proposed method yields the best classification performance on Dataset 1, with an accuracy of 91.73% and an F1 score of 0.917. It improves accuracy by 10.45% over Morphometrics (Vasques et al., 2016), indicating that the feature representations produced by MWFNet are more effective at characterizing neurons with complex morphological structures. Compared with the 3D CNN (Lin and Zheng, 2018), which operates on voxelized 3D data, our method makes full use of the complementary information across views while enhancing each view-level feature to compensate for the information loss caused by projection. Therefore, our method can comprehensively depict neuronal arbors and precisely identify neuron types. By accounting for the different impact of each view on the classification results, our method gains 6.73% in accuracy over TRNN (Zhang et al., 2021), which directly combines different view features to generate the instance-level descriptor. Although TreeMoCo (Chen et al., 2022) represents each neuron as a tree graph and designs many features, it samples neuron nodes during data preprocessing, which may limit its performance. In addition, MWFNet performs better with eight projected views as input than with only the three orthogonal projection views (MWFNet-3 Views).

Table 2. Comparison of different methods.

Table 2 also reports the classification performance of the different methods on Dataset 2. Our method achieves an accuracy of 98.18% and an F1 score of 0.971, outperforming the other methods on all evaluation metrics. Compared with the 3D CNN (Lin and Zheng, 2018), our method improves classification accuracy by 34.4%. The loss of parts of neuronal arbors caused by voxelization, together with the sparsity of neuron data, degrades the performance of the 3D CNN (Lin and Zheng, 2018). Although TRNN (Zhang et al., 2021) reaches an average F1 score of 0.950, it performs 2.1% worse than our method because it ignores the distinctions between view features and their influence on the analysis outcomes. In contrast, our approach measures the discriminability of the individual view images and treats view features differently, which effectively improves neuron classification performance.

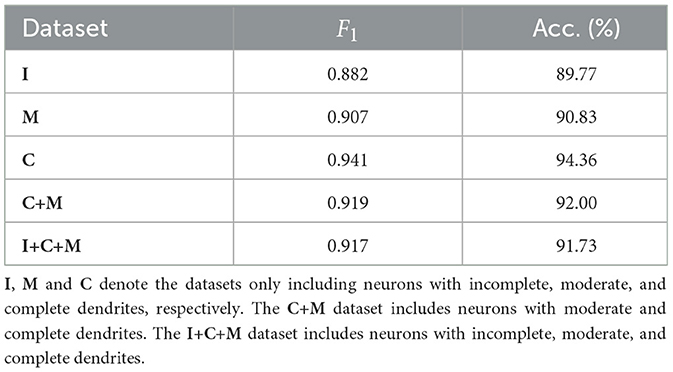

3.5 Validation of robustness

The proposed MWFNet is evaluated on data randomly downloaded from NeuroMorpho.org (Ascoli et al., 2007), a comprehensive database contributed to by over 900 laboratories worldwide that covers a wide range of experimental conditions and data quality. To thoroughly assess the capabilities of MWFNet, we construct evaluation datasets using different selection criteria based on neuronal dendrites. Specifically, the neurons of the 10 types in Dataset 1 are first labeled according to the physical integrity of their dendrites as complete, moderately complete, or incomplete. They are then organized into multiple sub-datasets: neurons with complete dendrites only (C), neurons with moderately complete dendrites only (M), neurons with incomplete dendrites only (I), neurons whose dendrites are at least moderately complete (C+M), and all neurons (I+C+M).
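The five overlapping sub-datasets can be expressed as label filters, as in the following sketch. The neuron IDs, labels, and function name are hypothetical; in the study the integrity labels come from the categorization described above.

```python
from typing import Dict, List, Set

# Dendrite-integrity labels admitted by each sub-dataset:
# "C" complete, "M" moderately complete, "I" incomplete.
SUBSET_RULES: Dict[str, Set[str]] = {
    "C": {"C"},
    "M": {"M"},
    "I": {"I"},
    "C+M": {"C", "M"},
    "I+C+M": {"I", "C", "M"},
}


def build_subsets(integrity: Dict[str, str]) -> Dict[str, List[str]]:
    """Map each sub-dataset name to the sorted neuron IDs whose integrity label it admits."""
    return {
        name: sorted(nid for nid, label in integrity.items() if label in allowed)
        for name, allowed in SUBSET_RULES.items()
    }


# Usage with hypothetical neuron IDs and labels.
labels = {"n1": "C", "n2": "M", "n3": "I", "n4": "C"}
print(build_subsets(labels)["C+M"])  # ['n1', 'n2', 'n4']
```

Because I+C+M admits every label, it coincides with the full Dataset 1, which is why its accuracy matches the 91.73% reported in Section 3.3.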

Table 3 shows the classification performance of MWFNet on these sub-datasets, demonstrating its robustness. MWFNet attains an accuracy of 94.36% on neurons with complete dendrites (C dataset) and 90.83% on neurons with moderately complete dendrites (M dataset). It also achieves 89.77% on the I dataset, despite the considerable difficulty of classifying neurons whose dendrites are all incomplete. On the C+M dataset, MWFNet achieves an accuracy of 92.00%, and on the full I+C+M dataset it correctly identifies 91.73% of neurons. These findings confirm that MWFNet delivers high-quality analysis across neuron datasets with variable reconstruction quality, making it a reliable tool for large-scale and diverse neuron type analysis.

Table 3. Classification accuracy of our method evaluated on datasets with different physical integrity of neuronal dendrites.

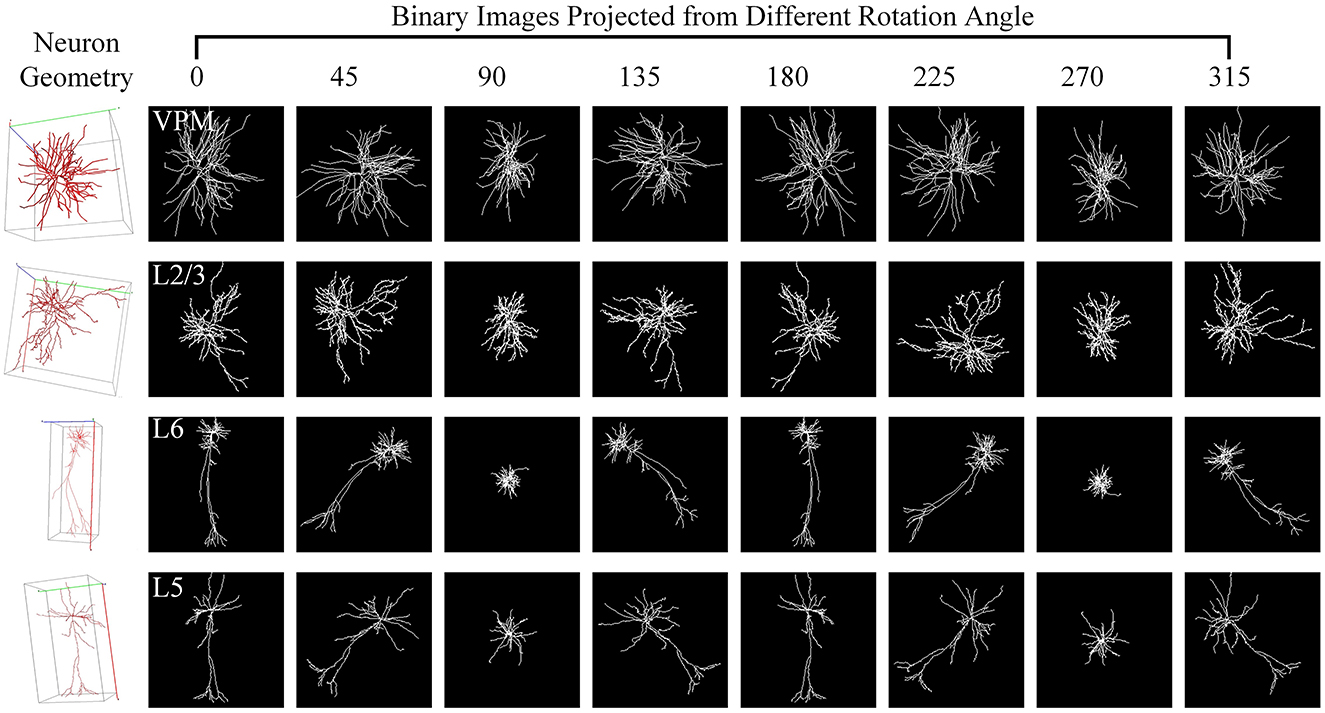

3.6 Validation of generalization

Here, we use the digitally reconstructed neurons provided by Janelia MouseLight (JML, http://mouselight.janelia.org/) to verify the generalization of our approach. For a fair comparison, we use the same dataset as Chen et al. (2022) and report the best results given in Chen et al. (2022). Neurons with clear reconstruction errors are excluded. Furthermore, although some samples have both dendrites and axons, we simplify the analysis by retaining only the dendritic arbors, as done in Chen et al. (2022). Consequently, the resulting JML-4 dataset includes 369 neurons belonging to L2/3, L5, L6, and VPM, with 64, 179, 114, and 12 neurons per class, respectively. We use the same training-test split as Chen et al. (2022) and apply the data preprocessing method described in Section 2.1 to obtain the 2D view images (Figure 7) used as the input of our method.

Figure 7. Neuron samples of the JML-4 dataset and their corresponding projected 2D view images. Each 3D neuron sample (first column) is projected into eight view images (columns 2–9) with a projection interval of 45 degrees. Neuron types are labeled in white text.

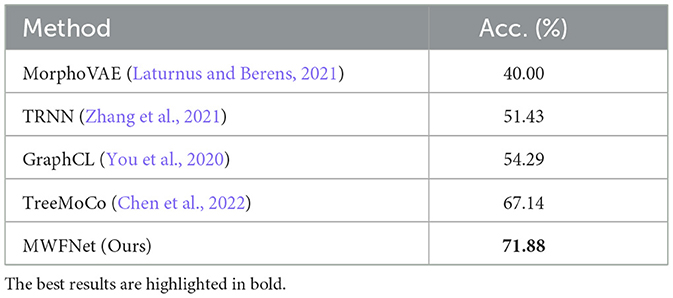

As shown in Table 4, our method yields the best result, with an accuracy of 71.88%, outperforming the other methods. Note that this dataset is imbalanced, with class sizes ranging from 12 (VPM) to 179 (L5) samples, and that it provides little training data overall (only 369 samples). Furthermore, neurons of different types often share similar morphologies, as seen in the neurons from layers L5 and L6 (Figure 7). These factors make neuron classification particularly challenging and lead to relatively low performance for all compared methods on this dataset. Despite these challenges, our method delivers robust results: MWFNet captures the complex morphologies of neurons through multiple projection images and sophisticated feature representation, which helps even when data are limited. Furthermore, MWFNet can be used to effectively identify neurons from various sources.

Table 4. Classification accuracy of different approaches evaluated on the JML-4 dataset.

3.7 Ablation studies

To verify the importance of each module of MWFNet, we perform ablation experiments. We first verify the effectiveness of GVMM and GVEM individually. Next, we analyze how differently the views represent neuronal morphology and how these differences affect the final results. Finally, we examine the effect of different thresholds in GVMM and GVEM.

3.7.1 Validation of different modules

We first verify the influence of GVMM and GVEM. The baseline method uses ResNet-50 and GeM pooling as the feature extractor and directly concatenates the view features to generate the instance-level descriptors of neurons (first row of Table 5). In our method, GVMM calculates the importance of each view from its salient feature regions, eliminating the influence of background regions. As shown in Table 5, incorporating GVMM improves accuracy over the baseline by 9.2% and 2.7% on Dataset 1 and Dataset 2, respectively, while adding an almost negligible number of parameters. GVEM mines the relationships between views and enhances the view-level descriptors, further reducing redundant information among views; introducing GVEM improves accuracy by 9.48% and 3.04% on the two datasets, respectively. With both GVMM and GVEM, the accuracy gains over the baseline are 11.53% and 3.15%, respectively.

Table 5. Performance of MWFNet with different modules.

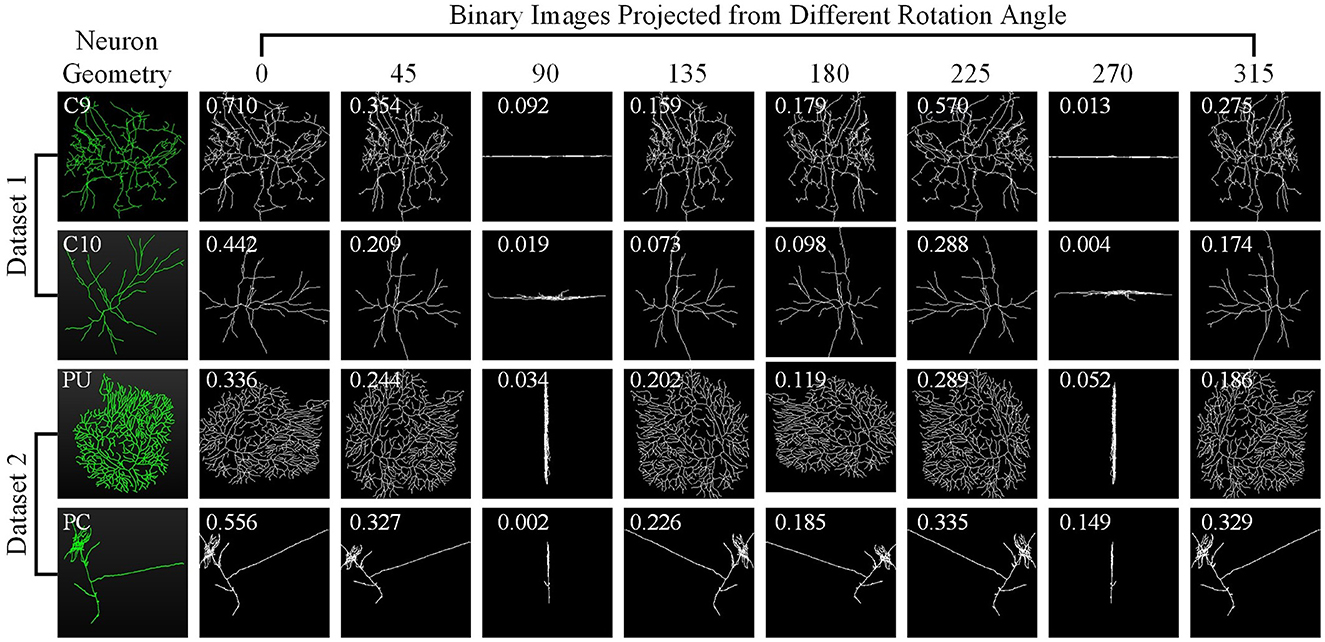

3.7.2 Validation of different views

Here, we investigate how each projection image affects the classification results. Figure 8 shows the projected view images of neurons and the corresponding weights produced by GVMM. As presented in Figure 3, neurons exhibit comparable morphologies in view images projected from the same angle, whereas view images projected at different rotation angles show morphological distinctions. Consequently, view images containing different morphological details contribute differently to the classification results. The estimated weight of each view is shown in white in Figure 8. View images with more morphological detail usually receive larger weights, indicating that they carry more distinctive morphological information and strongly influence the classification decision; views with less detail receive smaller weights. It is therefore sensible for our method to treat features from different views differently by assigning adaptive weights during fusion. If view-level features were treated equally, the instance-level descriptors of neurons would be less discriminative across classes and could contain more redundant information, hindering neuronal morphology classification.

Figure 8. Neuron samples randomly selected from both datasets and the corresponding projected images. The discriminability score for each projected image is marked in white font.

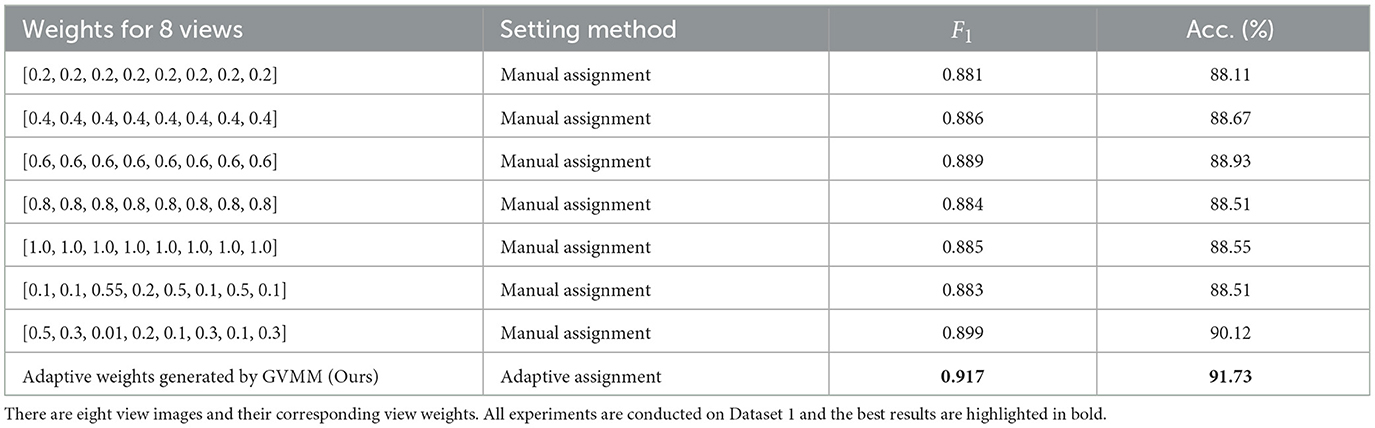

To further validate the benefit of treating view images differently, we conduct experiments on Dataset 1 in which equal weights are manually assigned to all view features. Table 6 presents the classification results of MWFNet with manual and adaptive weight assignment. When every view is manually assigned the same weight (0.2, 0.4, 0.6, 0.8, or 1.0), the classification performance is relatively poor and falls noticeably short of that achieved by adaptively assigning the GVMM-computed weights to different views. This highlights the effectiveness of MWFNet in treating views differently according to how well they represent neuronal morphology. We also explore manually assigning different weights to each view according to its representation of neuronal morphology. When the weights follow each view's representational quality, the results are comparable to those of adaptive weight assignment. In contrast, when the weights are assigned in the opposite order, classification performance decreases slightly. These results further demonstrate the validity of using the GVMM-generated weights for different view images.
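Manual and adaptive weight assignment differ only in where the per-view weights come from. The sketch below assumes that the fused instance-level descriptor is a weighted concatenation of view-level features (consistent with the concatenation baseline in Section 3.7.1, but not confirmed here for MWFNet); the function name and toy features are hypothetical, and adaptive weights would in practice come from GVMM.

```python
from typing import List, Optional, Sequence


def fuse_views(view_features: Sequence[Sequence[float]],
               weights: Optional[Sequence[float]] = None) -> List[float]:
    """Scale each view-level feature vector by its weight and concatenate the results.

    weights=None gives every view weight 1.0, i.e. plain concatenation.
    """
    if weights is None:
        weights = [1.0] * len(view_features)
    if len(weights) != len(view_features):
        raise ValueError("need exactly one weight per view")
    fused: List[float] = []
    for w, feature in zip(weights, view_features):
        fused.extend(w * x for x in feature)
    return fused


# Usage: three toy 2-D view features.
views = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
print(fuse_views(views))                   # plain concatenation
print(fuse_views(views, [0.4, 0.4, 0.4]))  # equal manual weights
print(fuse_views(views, [0.9, 0.2, 0.5]))  # view-specific (e.g. adaptive) weights
```

In this sketch, giving every view the same weight w simply yields w times the plain concatenation, so the relative contribution of the views is unchanged; only view-specific weights alter it.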

Table 6. Classification accuracy of our method with different view weight assignment methods.

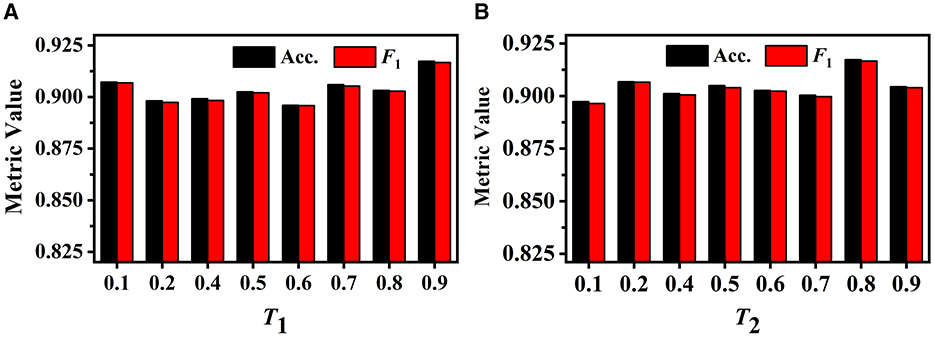

3.7.3 Threshold selection

Here, we explore the effects of different values of T1 in GVMM and T2 in GVEM. All experiments are carried out on Dataset 1, which contains more neuron types.

We first investigate the influence of T1 on GVMM by running experiments with different T1 values. As illustrated in Figure 9A, when T1 is relatively small, the feature area that GVMM uses to measure view confidence is wider, which makes the discriminability measurement of each view less precise. As T1 increases, GVMM restricts the measurement to high-confidence feature areas when gauging each view's significance for the analysis results. This lessens the influence of background or noisy areas and improves classification. Our method yields the best performance on all three metrics when T1 is 0.9. Therefore, we set T1 to 0.9 in all other experiments.
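How a threshold like T1 restricts a view score to high-confidence regions can be illustrated as follows. This is a hypothetical sketch, not the GVMM implementation: it assumes each view's score is the mean activation over positions whose min-max normalized value is at least T1, and that the scores are turned into view weights with a softmax.

```python
import math
from typing import List, Sequence


def salient_score(activation: Sequence[float], t1: float = 0.9) -> float:
    """Mean raw activation over positions whose min-max normalised value is >= t1."""
    if not 0.0 <= t1 <= 1.0:
        raise ValueError("t1 must lie in [0, 1]")
    lo, hi = min(activation), max(activation)
    if hi == lo:  # flat map: no region stands out
        return hi
    # The maximum always normalises to 1.0, so at least one position is kept.
    kept = [a for a in activation if (a - lo) / (hi - lo) >= t1]
    return sum(kept) / len(kept)


def view_weights(activations: Sequence[Sequence[float]], t1: float = 0.9) -> List[float]:
    """Softmax over per-view salient scores, giving weights that sum to one."""
    scores = [salient_score(a, t1) for a in activations]
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Usage: one flattened activation map per view (hypothetical values).
maps = [[0.0, 0.5, 1.0, 0.95], [0.0, 0.1, 0.3, 0.2]]
print(salient_score(maps[0], t1=0.0), salient_score(maps[0], t1=0.9))
print(view_weights(maps, t1=0.9))
```

For the first toy map, T1 = 0 averages in the low background values (a score of about 0.61), whereas T1 = 0.9 keeps only the two strongest activations (about 0.975), mirroring the trend described above.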

Figure 9. Performance of our method under different T1 on the GVMM (A) and under different T2 on the GVEM (B).

GVEM uses the threshold T2 to assess the similarity among view images, reducing the weight of highly similar view features while strengthening less similar ones. Figure 9B shows the results of our method for various T2 values. Our method achieves the best classification performance when T2 is 0.8; this best result (an F1 score of 0.917) is a 2.02% gain over the result with T2 = 0.1. This demonstrates that GVEM can enhance view features and reduce redundancy, facilitating the learning of instance-level feature descriptors.
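The similarity gating controlled by T2 can be illustrated with cosine similarities between view features. This hypothetical sketch is not the GVEM implementation: it assumes that a view whose cosine similarity to any other view exceeds T2 is treated as redundant and scaled by 1 - alpha, while the remaining views are scaled by 1 + alpha; alpha and the function names are illustrative assumptions.

```python
import math
from typing import List, Sequence


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    return dot / (nu * nv) if nu and nv else 0.0


def similarity_gates(view_features: Sequence[Sequence[float]],
                     t2: float = 0.8, alpha: float = 0.5) -> List[float]:
    """One gate per view: 1 - alpha if the view is more similar than t2 to any
    other view (treated as redundant), otherwise 1 + alpha (strengthened)."""
    n = len(view_features)
    gates = []
    for i in range(n):
        redundant = any(cosine(view_features[i], view_features[j]) > t2
                        for j in range(n) if j != i)
        gates.append(1.0 - alpha if redundant else 1.0 + alpha)
    return gates


def enhance(view_features: Sequence[Sequence[float]],
            t2: float = 0.8, alpha: float = 0.5) -> List[List[float]]:
    """Scale every view feature vector by its gate."""
    gates = similarity_gates(view_features, t2, alpha)
    return [[g * x for x in f] for g, f in zip(gates, view_features)]


# Usage: the first two toy views are nearly parallel, the third is orthogonal to the first.
views = [[1.0, 0.0], [0.99, 0.1], [0.0, 1.0]]
print(similarity_gates(views, t2=0.8))    # [0.5, 0.5, 1.5]
print(similarity_gates(views, t2=0.999))  # [1.5, 1.5, 1.5]
```

Raising T2 makes fewer pairs count as redundant, so more views keep their features, which matches the choice of a high T2 discussed in Section 4.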

4 Discussions

Identifying and analyzing neuron types based on morphology is important for understanding neuronal function and activity (Li et al., 2021; Parekh and Ascoli, 2013). However, this is challenging because of the substantial morphological variation both within and between classes (Li et al., 2021). Recent automatic analysis methods (Zhu et al., 2022; Lin and Zheng, 2018, 2019) based on morphological characteristics mainly employ 3D CNNs or 2D CNNs to extract feature representations from 3D neuron data or 2D images, respectively. However, the sparsity of neuronal morphology makes it difficult to build a unified 3D network for various datasets (Li et al., 2018). While the 2D image-based method of Zhang et al. (2021) provides a unified framework and saves computing resources, it does not account for the limitations and specificity of 2D views in characterizing neuronal morphology.

In this work, we propose MWFNet, which hierarchically describes neuronal morphology based on multiple 2D view images. MWFNet accounts for the differences between 2D views in representing neuronal morphology, as well as the similarity and redundancy among views. The resulting instance-level descriptors contain salient features learned from multiple view images and reduce the redundant information arising from similar views. Therefore, our method can comprehensively represent neuronal morphology and accurately reflect the characteristics of different categories.

As Figure 8 illustrates, different view images depict neuronal morphology differently, so their influence on the analysis results varies. GVMM uses the threshold T1 to select high-confidence, salient feature regions to assess the impact of each view. Experimental results show that GVMM effectively improves the performance of our method in identifying 10 types and 5 types of neurons. The ablation study on T1 shows that our method produces the best classification results (accuracy of 91.73% and 98.18% on Dataset 1 and Dataset 2, respectively) when T1 is 0.9. However, T1 is selected manually in this work. In future work, we will explore adjusting T1 adaptively during the learning process to make neuronal morphology analysis more convenient.

We observe similarities between views (see Figure 8) when using the multi-view method to describe neuronal morphology. If all view descriptors are used as-is to form the instance-level descriptor, the result contains redundant information. GVEM is designed to improve the effectiveness of view-level features while retaining dissimilar features: it enhances the feature representation of views that differ from the others and weakens that of highly similar views. According to the experimental results, GVEM increases classification accuracy by 9.48% and 3.04% on Dataset 1 and Dataset 2, respectively. However, our method sets a high threshold T2 to retain as many view features as possible, so redundant information is removed only to some extent. In future work, we will consider removing redundant features more thoroughly while maintaining classification performance, and we will investigate setting T2 more flexibly.

5 Conclusions

This paper proposes a novel feature representation for neuronal morphology based on the Multi-gate Weighted Fusion Network (MWFNet). MWFNet first uses a Gated View Measurement Module (GVMM) to assess the impact of each view on the classification results according to salient feature regions, and a Gated View Enhancement Module (GVEM) to enhance view-level descriptors based on pairwise similarity. Discriminative instance-level descriptors are then obtained by adaptively assigning the discrimination scores generated by GVMM to the enhanced view features from GVEM. Experimental results show that our method achieves accuracies of 91.73% and 98.18% on the 10-type and 5-type neuron classification tasks, respectively, outperforming other methods. Moreover, MWFNet shows good generalization and robustness when evaluated on other datasets.

In the future, we will further optimize MWFNet. Although MWFNet performs well, the thresholds in its GVMM and GVEM are set manually; we will explore approaches to adjust these thresholds automatically during feature extraction, enabling more automatic and scalable neuronal morphology analysis. In addition, the amount of neuron data is increasing dramatically thanks to continuous advances in high-precision microscopic imaging and reconstruction techniques, so we plan to apply MWFNet to larger-scale datasets. Our goal is to develop a robust and efficient tool for large-scale neuron analysis that will significantly advance the field of neuroscience.

Data availability statement

The original contributions presented in the study are included in the article/supplementary material; further inquiries can be directed to the corresponding author.

Author contributions

CS: Conceptualization, Methodology, Validation, Visualization, Writing – original draft. FZ: Funding acquisition, Writing – review & editing, Supervision.

Funding

The author(s) declare that financial support was received for the research, authorship, and/or publication of this article. This work was supported by the Anhui Provincial Natural Science Foundation under Grant 2108085UD12.

Acknowledgments

We acknowledge the support of the GPU cluster built by the MCC Lab of the Information Science and Technology Institution, USTC.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Ascoli, G. A., Donohue, D. E., and Halavi, M. (2007). NeuroMorpho.Org: a central resource for neuronal morphologies. J. Neurosci. 27, 9247–9251. doi: 10.1523/JNEUROSCI.2055-07.2007

Batabyal, T., Condron, B., and Acton, S. T. (2020). Neuropath2path: classification and elastic morphing between neuronal arbors using path-wise similarity. Neuroinformatics 18, 479–508. doi: 10.1007/s12021-019-09450-x

Batabyal, T., Vaccari, A., and Acton, S. T. (2018). “Neurobfd: size-independent automated classification of neurons using conditional distributions of morphological features,” in Proc. Int. Symp. Biomed. Imaging (Washington, DC: IEEE), 912–915. doi: 10.1109/ISBI.2018.8363719

Caznok Silveira, A. C., Antunes, A. S. L. M., Athié, M. C. P., da Silva, B. F., Ribeiro dos Santos, J. V., Canateli, C., et al. (2024). Between neurons and networks: investigating mesoscale brain connectivity in neurological and psychiatric disorders. Front. Neurosci. 18:1340345. doi: 10.3389/fnins.2024.1340345

Chen, H., Yang, J., Iascone, D., Liu, L., He, L., Peng, H., et al. (2022). TreeMoCo: contrastive neuron morphology representation learning. Proc. Adv. Neural Inf. Process. Syst. 35, 25060–25073. doi: 10.5555/3600270.3602087

Colombo, G., Cubero, R. J. A., Kanari, L., Venturino, A., Schulz, R., Scolamiero, M., et al. (2022). A tool for mapping microglial morphology, morphomics, reveals brain-region and sex-dependent phenotypes. Nat. Neurosci. 25, 1379–1393. doi: 10.1038/s41593-022-01167-6

Deng, Y., Lin, X., Li, R., and Ji, R. (2019). "Multi-scale GeM pooling with n-pair center loss for fine-grained image search," in Proc. IEEE Int. Conf. Multimedia Expo (Shanghai: IEEE), 1000–1005. doi: 10.1109/ICME.2019.00176

Feng, Y., Zhang, Z., Zhao, X., Ji, R., and Gao, Y. (2018). "GVCNN: group-view convolutional neural networks for 3D shape recognition," in IEEE Conf. Comput. Vision Pattern Recognit. (Salt Lake City: IEEE), 264–272. doi: 10.1109/CVPR.2018.00035

Fogo, G. M., Anzell, A. R., Maheras, K. J., Raghunayakula, S., Wider, J. M., Emaus, K. J., et al. (2021). Machine learning-based classification of mitochondrial morphology in primary neurons and brain. Sci. Rep. 11:5133. doi: 10.1038/s41598-021-84528-8

Gillette, T. A., and Ascoli, G. A. (2015). Topological characterization of neuronal arbor morphology via sequence representation: I-motif analysis. BMC Bioinform. 16, 1–15. doi: 10.1186/s12859-015-0604-2

Gillette, T. A., Hosseini, P., and Ascoli, G. A. (2015). Topological characterization of neuronal arbor morphology via sequence representation: II-global alignment. BMC Bioinform. 16, 1–17. doi: 10.1186/s12859-015-0605-1

Hamdi, A., Giancola, S., and Ghanem, B. (2021). "MVTN: multi-view transformation network for 3D shape recognition," in Proc. IEEE Int. Conf. Comput. Vis. (Montreal, QC: IEEE), 1–11. doi: 10.1109/ICCV48922.2021.00007

Hernández-Pérez, L. A., Delgado-Castillo, D., Martín-Pérez, R., Orozco-Morales, R., and Lorenzo-Ginori, J. V. (2019). New features for neuron classification. Neuroinformatics 17, 5–25. doi: 10.1007/s12021-018-9374-0

Kanari, L., Dłotko, P., Scolamiero, M., Levi, R., Shillcock, J., Hess, K., et al. (2018). A topological representation of branching neuronal morphologies. Neuroinformatics 16, 3–13. doi: 10.1007/s12021-017-9341-1

Laturnus, S. C., and Berens, P. (2021). "MorphVAE: generating neural morphologies from 3D-walks using a variational autoencoder with spherical latent space," in Proc. Int. Conf. Mach. Learn. 6021–6031. doi: 10.1101/2021.06.14.448271

Li, Z., Butler, E., Li, K., Lu, A., Ji, S., Zhang, S., et al. (2018). Large-scale exploration of neuronal morphologies using deep learning and augmented reality. Neuroinformatics 16, 339–349. doi: 10.1007/s12021-018-9361-5

Li, Z., Fan, X., Shang, Z., Zhang, L., Zhen, H., Fang, C., et al. (2021). Towards computational analytics of 3D neuron images using deep adversarial learning. Neurocomputing 438, 323–333. doi: 10.1016/j.neucom.2020.03.129

Lin, X., and Zheng, J. (2018). A 3D neuronal morphology classification approach based on convolutional neural networks. Int. Symp. Comput. Intell. Design 2, 244–248. doi: 10.1109/ISCID.2018.10157

Lin, X., and Zheng, J. (2019). A neuronal morphology classification approach based on locally cumulative connected deep neural networks. Appl. Sci. 9:3876. doi: 10.3390/app9183876
