Science thrives on incremental progress, where the creation and organization of knowledge is driven by a cycle of developing theories and testing predictions made by those theories. When tests of a prediction have the same result repeatedly, confidence in the test result grows, especially when the tests are run by different scientists operating independently. Several terms have been introduced to describe different variants of this test repetition concept: a test result is considered “reproducible” when the same raw measurements and analytic methods are used by a different scientist; “replicable” when new measurements are recorded but the same methods are used as in a previous test; “robust” when the same raw measurements but different analytic methods are used, and “generalizable” when new measurements are recorded and new methods are utilized (Nosek et al., 2022; Poldrack et al., 2019b). In recent years, low levels of reproducibility have been reported in a growing number of scientific disciplines, culminating in a so-called “reproducibility crisis” (Ioannidis, 2005; Munafò et al., 2017). Neuroscience, and specifically in-vivo human neuroimaging, is no exception to this trend. Despite the increasing volume of neuroimaging publications, significant doubts about their accuracy and generalizability have emerged (Poldrack et al., 2017; Klapwijk et al., 2021; Botvinik-Nezer et al., 2020). Three studies (Carp, 2012; Guo et al., 2014; Poldrack et al., 2017) of methodology reporting in functional Magnetic Resonance Imaging (fMRI) papers found that researchers often omit important details like interpolation methods, smoothness estimates, and multiple comparison correction techniques. 
Neuroimaging researchers have also suggested that low statistical power (Müller et al., 2017; Button et al., 2013; Hong et al., 2019), hypothesizing after the results are known (Klapwijk et al., 2021), and publication bias (David et al., 2013) have contributed to reproducibility limitations (Bishop, 2019; Munafò et al., 2017). These shortcomings have highlighted the need to implement a set of specific factors that will encourage robust and reproducible findings in neuroimaging data analysis. The next section will explore these reproducibility factors and compare their current status between fMRI and brain PET fields.

2 Reproducibility factors relevant to brain PET

In neuroimaging research communities, a set of reproducibility factors has been proposed as a means to promote explicit documentation of methods, availability of research materials, pre-registration of hypotheses and analyses, and standardized analysis pipelines, with the end goal of enabling easier replication of findings (Jadavji et al., 2023). In subsequent sections, we define these factors, explore their current status within neuroimaging in general, and then describe how prevalent they are in both fMRI and brain PET communities.

2.1 Standards for writing and publishing scientific studies

Scientific papers serve as the primary means for sharing research findings. Accurate and complete descriptions of methods and data versioning allow fellow researchers to understand exact procedures, exact data/software versions and underlying rationale, facilitating error detection and incremental improvement in study design or data analysis (Munafò et al., 2017; Gorgolewski and Poldrack, 2016; Aguinis et al., 2018). The Organization for Human Brain Mapping Committee on Best Practices in Data Analysis and Sharing (COBIDAS) actively advocates for journals to abide by best practices in the reporting of neuroimaging methods and data (Poldrack et al., 2017). Specialized committees have developed reporting standards that are specific to structural MRI and fMRI (Nichols et al., 2017), Magnetoencephalography (MEG) (Pernet et al., 2020), Electroencephalography (EEG) (Styles et al., 2021), and Positron Emission Tomography (PET) (Knudsen et al., 2020). Tools such as pyBIDS, bids-matlab, and fMRIPrep can help authors adhere to these guidelines by automatically generating reports and providing method templates (Niso et al., 2022a). Additionally, automatic tools like statcheck can help identify inconsistencies in statistical results reported in research papers by extracting the test statistic and degrees of freedom and recalculating p-values. This helps ensure that statistical results are reported accurately (Nuijten and Polanin, 2020; Epskamp and Nuijten, 2016).
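The kind of consistency check described above can be illustrated with a minimal sketch. It is not the statcheck implementation (statcheck is an R package that handles t, F, and chi-square tests, among others); to stay within the Python standard library, the sketch assumes a z statistic, which needs no degrees of freedom. The function names, the rounding tolerance, and the example values are all hypothetical.

```python
import math


def two_sided_p_from_z(z: float) -> float:
    """Return the two-sided p-value of a standard-normal (z) statistic."""
    # P(|Z| >= |z|) = erfc(|z| / sqrt(2)) for Z ~ N(0, 1).
    return math.erfc(abs(z) / math.sqrt(2.0))


def is_consistent(z: float, reported_p: float, decimals: int = 3) -> bool:
    """Check a reported p-value against the one recomputed from z.

    The tolerance is half a unit in the last reported decimal place, so a
    value that was merely rounded for publication is not flagged.
    """
    tolerance = 0.5 * 10 ** (-decimals)
    return abs(two_sided_p_from_z(z) - reported_p) <= tolerance


# Hypothetical reported results: (z statistic, reported p-value).
reported = [(1.96, 0.050), (2.58, 0.010), (1.20, 0.010)]
flags = [is_consistent(z, p) for z, p in reported]
```

The third hypothetical entry is flagged as inconsistent because the p-value recomputed from z = 1.20 is far from the reported 0.010. Extending the idea to t or F statistics would require their distribution functions, for example from a statistics library.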

2.1.1 Current state of fMRI

A recent review of 160 fMRI publications found that most of them had adopted reporting practices recommended in 2016 by COBIDAS, including clear descriptions of study design (85%), motion correction (77%), and registration to a standard space (80%) (Acar et al., 2023).

2.1.2 Current state of PET

Despite initial steps toward establishing reporting standards, including a panel discussion at the 2016 NeuroReceptor Mapping conference and a subsequent consensus paper (Knudsen et al., 2020), progress has been limited. Although a PET standardized reporting checklist project (eCOBIDS PET) was initiated, it was still under development as of 2024 (COBIDAS Contributors, 2020).

2.2 Pre-registration

Pre-registration entails writing a study plan that includes information about planned data acquisition, inclusion and exclusion criteria, and analytic methods, and depositing that study plan in a third-party repository prior to data acquisition (Poldrack et al., 2017). The goal is to ensure that, after the data are collected, publications containing the data can use the pre-registered study plan to show which of their analyses were a priori hypothesis driven as opposed to exploratory and thus susceptible to analytic biases (Gorgolewski and Poldrack, 2016). There are three main platforms currently used by neuroimaging studies for pre-registration (OSF, 2011; NIH, 1997; Wharton-Upenn, 2017), and the Center for Open Science provides detailed forms and guidelines to support pre-registration (COS, 2013). An alternative to the pre-registered study plan is the registered report (RR), which allows authors to submit methodology text for peer review and conditional acceptance prior to data analysis. Initial studies suggest that RRs in neuroscience outperform traditional papers in methodological rigor, analysis quality, and overall paper quality (Soderberg et al., 2021). Leading neuroimaging journals including NeuroImage have recently incorporated RRs as a new article type (NEUROIMAGE, 2024).

2.2.1 Current state of fMRI

A 2022 survey of 283 fMRI researchers worldwide revealed a mixed picture regarding pre-registration. Some 57.6% had pre-registered an fMRI study, and 14.1% had written an RR. In addition, among those who had pre-registered their studies, only 55% expressed willingness to pre-register their next study, and 26% were hesitant to do so (Paret et al., 2022).

2.2.2 Current state of PET

The brain PET community does not appear to have embraced pre-registration yet. Of the 32 neuroimaging studies that were pre-registered on the OSF website between Aug 2019 and Jan 2022, all involved structural MRI and/or fMRI, and none involved PET (OSF, 2011). In addition, the main platforms for pre-registration include study plan templates tailored for fMRI, but not for PET.

2.3 Data sharing

Sharing raw and/or processed data empowers researchers to validate published findings, explore new methodologies, and conduct meta-analyses, boosting both transparency and research potential. Pooled data from multiple studies enable investigation of biases and heterogeneity, leading to more reliable findings. Increasingly, journals and funding agencies mandate data sharing, promoting scientific advancement. Numerous publicly available neuroimaging datasets (ADNI, 2004; HCP, 2011; Openneuro, 2022; Marcus et al., 2007) underscore the value of these resources for researchers. In this context, the Brain Imaging Data Structure (BIDS) has established a consensus on organizing and sharing MRI and fMRI data (Gorgolewski et al., 2016). Extensions of BIDS were later introduced to include MEG (Niso et al., 2018), EEG (Pernet et al., 2019), and PET (Norgaard et al., 2022).
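To give a flavor of what a shared organizational convention looks like in practice, the following sketch builds a BIDS-style relative path for a PET image. It reflects our understanding of the naming pattern in the BIDS PET extension (a `pet` folder, the `trc` entity for the tracer, and the `_pet` suffix); the helper name and its arguments are hypothetical, and the BIDS specification remains the authoritative source for the full set of entities and their order.

```python
from pathlib import PurePosixPath
from typing import Optional


def bids_pet_path(subject: str,
                  tracer: Optional[str] = None,
                  session: Optional[str] = None) -> PurePosixPath:
    """Build a BIDS-style relative path for one PET image (illustrative only)."""
    # BIDS labels are restricted to letters and digits.
    for label in (subject, tracer, session):
        if label is not None and not (label.isascii() and label.isalnum()):
            raise ValueError(f"label must be alphanumeric: {label!r}")

    # Entities appear in a fixed order in the file name: sub, ses, trc.
    entities = [f"sub-{subject}"]
    if session is not None:
        entities.append(f"ses-{session}")
    if tracer is not None:
        entities.append(f"trc-{tracer}")
    filename = "_".join(entities) + "_pet.nii.gz"

    # The folder hierarchy mirrors the subject and session entities.
    folder = PurePosixPath(f"sub-{subject}")
    if session is not None:
        folder = folder / f"ses-{session}"
    return folder / "pet" / filename


# Hypothetical subject, session, and tracer labels.
example = bids_pet_path("01", tracer="FDG", session="baseline")
```

Because every dataset that follows the convention places the same kind of file at the same kind of path, tools can locate inputs without study-specific configuration.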

2.3.1 Current state of fMRI

Data sharing practices among fMRI researchers vary: 66% of survey participants reported sharing raw data, but only 54% intended to share data from their next paper, citing barriers such as consent forms and institutional review boards (Paret et al., 2022).

2.3.2 Current state of PET

Data sharing in PET is limited. OpenNeuro (Markiewicz et al., 2021) hosts 92 fMRI datasets compared to just 9 for PET. Even access to existing PET data faces hurdles: PET scans with newer radiotracers often face stricter limitations on data sharing, and some institutions limit sharing to only those researchers that collaborate with their investigators. While repositories like ADNI (Jagust et al., 2015) and A4 (Insel et al., 2020) host a large number of brain PET scans, there is still a relative lack of large datasets featuring recently-developed radiotracers.

2.4 Codes, containers, and the cloud

Traditional publications necessarily provide abbreviated descriptions of computational methods applied to neuroimaging data, due to space constraints. Therefore, the most accurate source of information about every detail of computational procedures is the source code of the computer programs themselves (Niso et al., 2022a; White et al., 2022). Version control system repositories such as GitHub and Brainlife offer platforms for open code sharing and preserving snapshots of specific versions (Kubilius, 2014), thus encouraging reproducibility by enabling different labs to implement precisely the same computational steps. Beyond code that governs individual computational steps, the complexity of neuroimaging analysis workflows that assemble sequences of such steps presents challenges for reproducibility (Paninski and Cunningham, 2018). These workflows rely on intricate software dependencies (Merkel, 2014), system-level resources (Abe et al., 2022), and specialized packages (Poldrack et al., 2019a), which can lead to inconsistencies across computing environments and divergent results (Renton et al., 2022; Halchenko and Hanke, 2012; Niso et al., 2022a). Software containers such as Docker and Singularity have emerged as effective tools for capturing entire software stacks, ensuring reproducibility across different platforms (Kurtzer et al., 2017). Additionally, BIDS Apps enhance reproducibility by packaging existing neuroimaging pipelines in containers, resolving installation issues, and improving analysis reproducibility (Gorgolewski et al., 2017).

2.4.1 Current state of fMRI

An fMRI survey (Paret et al., 2022) showed that, out of 183 participants, 66% had used containerized BIDS Apps, dominated by tools like fMRIPrep (44%) and MRIQC (23%), highlighting a thriving ecosystem for code and software sharing. fMRIPrep generates visual reports to assess result quality and aids researchers in understanding each workflow step. The reports include comprehensive text descriptions of major pipeline steps, including exact software versions and citations.

2.4.2 Current state of PET

In contrast to the extensive support available for fMRI, there is a lack of dedicated PET software in containerized BIDS Apps and limited support for PET code sharing in platforms like Brainlife.

2.5 Optimizing and standardizing workflows

Quantifying brain activity requires complex analysis pipelines, and researchers have significant flexibility to customize each pipeline step. This flexibility, however, can lead to vastly different results from the same data, especially in fMRI studies (Loring et al., 2002; Eklund et al., 2016; Botvinik-Nezer et al., 2020). The choice of analytical methods also plays a crucial role in the variability of findings across neuroimaging techniques. For example, differences in approaches such as intensity-based versus feature-based coregistration (Li et al., 2024), gradient descent-based versus trust region-based optimization methods (Zhao and Xie, 2016), or PET template-based versus MRI-based image processing (Kuhn et al., 2014) can each act as a distinct reproducibility factor, independent of the software package used to implement these methods. Studies in PET (Greve et al., 2016; Mukherjee et al., 2016; Samper-González et al., 2018) also demonstrate how variations in processing workflows can significantly impact results. This variability makes it difficult to distinguish between true effects and biases introduced by analytic choices.

To address this, the neuroimaging community has proposed solutions such as “multiverse analysis” where data is processed through multiple pipelines and all results are combined to identify convergent findings (Dafflon et al., 2022), “pipeline optimization tools” that automatically find the best suited pipeline for a given problem domain to maximize reproducibility (Churchill et al., 2012), and “gold standard pipelines” that become the expected workflow for a specific research task (Niso et al., 2022a). These strategies aim to limit a researcher’s ability to bias the outcome of a study through careful pipeline adjustment.
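The multiverse idea can be made concrete with a small sketch: enumerate every combination of analytic choices, run each resulting pipeline, and summarize how much the estimates agree. Everything specific here is an assumption for illustration, including the option names and values, the `toy_pipeline` stand-in (a real study would run actual image processing there), and the choice of summary statistics.

```python
from itertools import product
from statistics import mean, pstdev
from typing import Any, Callable, Dict, List, Sequence

Config = Dict[str, Any]

# Hypothetical analytic choices; a real multiverse would list the options
# that are defensible for the study at hand.
OPTIONS: Dict[str, List[Any]] = {
    "smoothing_fwhm_mm": [4, 8],
    "reference_region": ["whole_cerebellum", "pons"],
    "partial_volume_correction": [False, True],
}


def enumerate_pipelines(options: Dict[str, List[Any]]) -> List[Config]:
    """Return one configuration per combination of analytic choices."""
    names = list(options)
    return [dict(zip(names, combo))
            for combo in product(*(options[name] for name in names))]


def run_multiverse(run_pipeline: Callable[[Config], float],
                   options: Dict[str, List[Any]]) -> List[float]:
    """Run every pipeline variant and collect its effect estimate."""
    return [run_pipeline(config) for config in enumerate_pipelines(options)]


def summarize(estimates: Sequence[float]) -> Dict[str, Any]:
    """Describe how strongly the pipeline variants converge."""
    return {
        "n_pipelines": len(estimates),
        "mean": mean(estimates),
        "spread": pstdev(estimates),
        "same_sign": all(e > 0 for e in estimates) or all(e < 0 for e in estimates),
    }


def toy_pipeline(config: Config) -> float:
    """Stand-in for a real analysis; returns a made-up effect estimate."""
    effect = 0.30 - 0.01 * config["smoothing_fwhm_mm"]
    if config["partial_volume_correction"]:
        effect += 0.05
    return effect


summary = summarize(run_multiverse(toy_pipeline, OPTIONS))
```

Reporting the full distribution of estimates, rather than the output of a single hand-picked pipeline, is what limits the room for selective pipeline adjustment.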

2.5.1 Current state of fMRI

fMRI reproducibility has been boosted by established pipelines like fMRIPrep (Esteban et al., 2019) and initiatives like HALFpipe (Waller et al., 2022), which promote consistent analysis across studies. However, researchers still navigate complex analysis choices. Multiverse analysis studies (Demidenko et al., 2024; Kristanto et al., 2024) explore the impact that these choices have on results.

2.5.2 Current state of PET

Achieving standardization of analysis pipelines in PET lags behind fMRI. In one study, 14 international groups were asked to analyze the same simulated brain PET dataset using their own methods. Despite controlling for several preprocessing steps using the simulated data, the results were consistent but not identical across the groups. This highlights the significant impact of analytical and statistical choices on PET neuroimaging findings (Veronese et al., 2021). A recent review (Niso et al., 2022a) highlights the limited number of dedicated PET pipelines compared to those for MRI/fMRI. To our knowledge, the development of gold standard pipelines and multiverse analysis is extremely limited in brain PET.

2.6 Summary

In summary, while pressure is growing from funding bodies, research institutions, and publishers to implement reproducibility factors within neuroscience (Frank et al., 2017; Niso et al., 2022a), fMRI is currently leading the way and brain PET is struggling to catch up. The following section aims to demonstrate the real-world impact of brain PET’s position as a straggler in reproducibility. We describe how difficult it was to implement a prominent PET analysis pipeline based on available descriptions, to demonstrate that the relative lack of brain PET reproducibility infrastructure has real effects on the conduct of science.

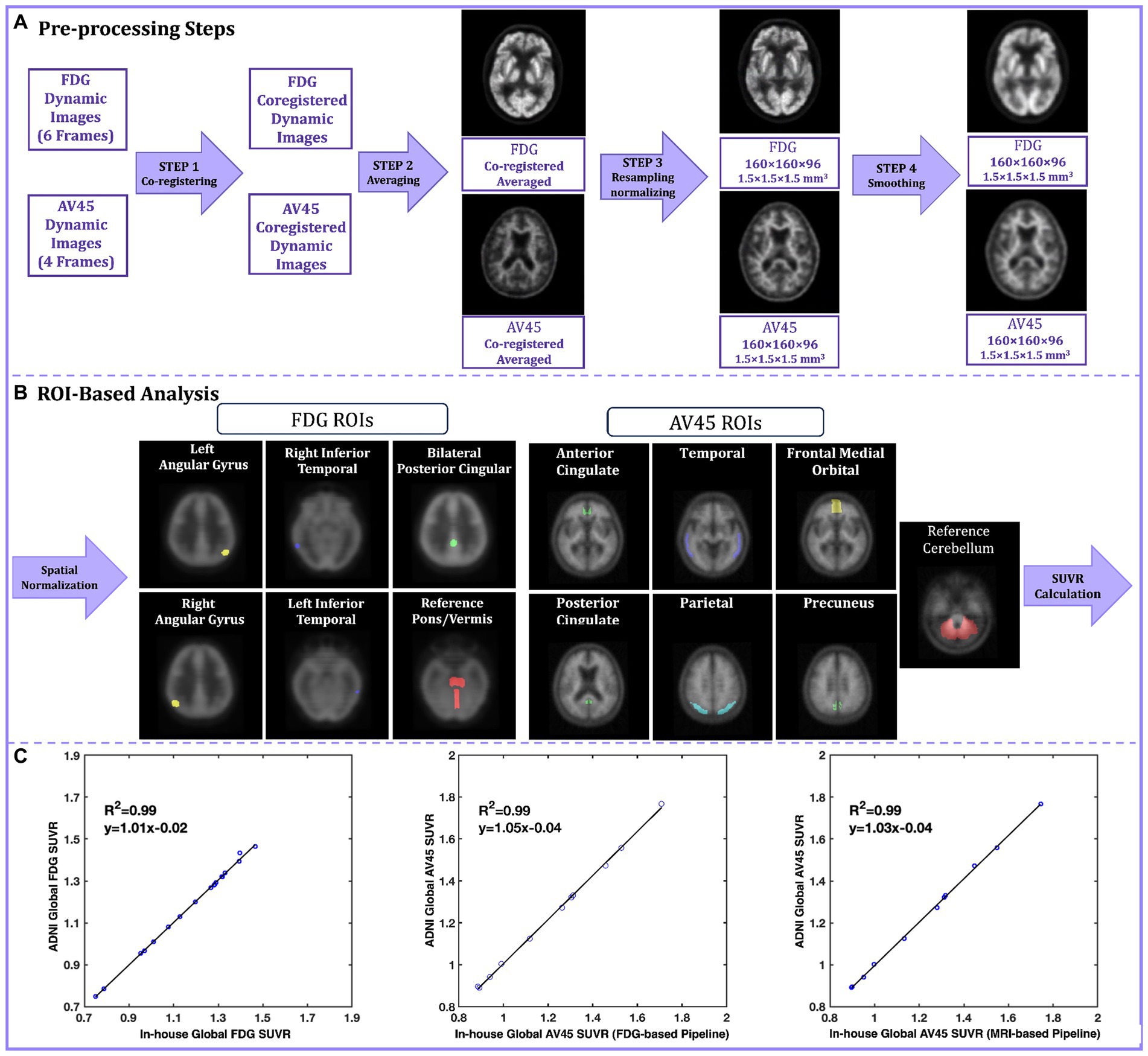

3 Case study

To give an example of the real-world experience of PET image processing reproducibility, we tried to reproduce the processing pipeline of a large public dataset from the ADNI study. ADNI offers pre-processed PET scans for 18F-FDG and 18F-Florbetapir (AV45) with incremental levels of processing. Figure 1A illustrates the pre-processing steps for both FDG and AV45 PET scans. A detailed listing of acquisition parameters and a description of the pre-processing pipeline can be found on the ADNI website (ADNI, 2004). The original scans include six 5-min FDG and four 5-min AV45 dynamic frames. In step 1 of pre-processing, coregistered dynamic frames are generated, using the first frame as the reference, to correct for head motion. Step 2 averages the coregistered dynamic frames to reduce noise. Step 3 spatially reorients the averaged image to a standard grid (160 × 160 × 96 voxels, 1.5 mm³). The re-gridding corrects for inter-individual differences in brain size and shape while also providing a consistent baseline across individuals for measuring longitudinal change. The FDG scans are re-gridded using an FDG PET Talairach atlas template, while the AV45 scans are coregistered to either the baseline FDG scan (FDG-based) or the MRI scan of the participant when FDG is not available (MRI-based). Re-gridded, coregistered, dynamic images are then generated by coregistering the original frames to this baseline scan, followed by averaging. Then intensity normalization is applied. The final preprocessing step is to smooth images to ease cross-scanner comparability (Jagust et al., 2015). In the ADNI pipeline, NeuroStat is the software that handles coregistration, re-gridding, and frame coregistration (NeuroStat, 2000), while ADNI’s in-house software performs smoothing, averaging, and intensity normalization. In our replication of the ADNI pipeline, we employed SPM12 (MATLAB, 2020b) for smoothing and averaging. We skipped the initial intensity normalization in step 3 because it simply puts voxel values on a uniform scale, and the subsequent analyses involve their own normalization. ADNI uses a separate in-house intensity normalization for amyloid scans, so we focused on replicating the core pre-processing steps; this omission did not affect the final results.

Figure 1. (A) The ADNI preprocessing pipeline steps for FDG and AV45. In step 1, original FDG and AV45 frames are coregistered to the reference frame; in step 2, coregistered FDG and AV45 frames are averaged; in step 3, the averaged image is re-gridded onto a 160 × 160 × 96 grid with a voxel size of 1.5 mm³ to create the baseline FDG and AV45 scans and is further intensity normalized; in step 4, the resampled images are smoothed using a non-isotropic Gaussian filter. (B) The ADNI ROI-based analysis pipelines for FDG and AV45. The fully preprocessed images (output of step 4) are spatially normalized into the MNI standard space and pre-defined ROIs are applied according to the tracer; finally, a global SUVR is calculated from the average of the individual ROI SUVRs relative to a reference region of choice. (C) The SUVR linear regression plots for the validation dataset. The Y-axis represents ADNI’s calculated global SUVR as the gold standard, while the X-axis represents our in-house calculated global SUVRs for FDG (left), AV45 FDG-based (center), and AV45 MRI-based (right) pipelines. ADNI, Alzheimer’s Disease Neuroimaging Initiative; AV45, florbetapir; ROI, region of interest; MNI, Montreal Neurological Institute; SUVR, standardized uptake value ratio.

Quantification of fully preprocessed scans involved region of interest (ROI) based analyses using ADNI’s method for FDG and AV45 (Figure 1B). Both scans were spatially normalized to MNI space in SPM12 using its default FDG template (Della Rosa et al., 2014) and a specialized florbetapir template (Joshi et al., 2015). Standardized uptake value ratios (SUVRs) were calculated for specific ROIs. The FDG ROIs comprise the right/left angular gyrus, right/left inferior temporal gyrus, and bilateral posterior cingulate, with the pons/cerebellar vermis as the reference region (Landau et al., 2011). The AV45 ROIs comprise the medial orbital frontal, temporal, parietal, anterior cingulate, and posterior cingulate cortices as well as the precuneus, with the entire cerebellum as the reference region (Joshi et al., 2015). Finally, a global SUVR is computed from the average of the individual ROI SUVRs. The dataset we validated included 11 subjects with diagnoses ranging from cognitively normal (n = 6) to mild cognitive impairment (n = 5). All participants had FDG, AV45, and T1-weighted MRI scans, and for all participants, both their FDG and AV45 PET scans were included in this replication study.
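The global SUVR calculation described above reduces to simple arithmetic once mean uptake values have been extracted for each ROI and for the reference region. The following minimal sketch shows that arithmetic only; the function names and uptake values are hypothetical, and ROI extraction and spatial normalization are assumed to have been done beforehand.

```python
from statistics import mean
from typing import Mapping


def roi_suvr(roi_mean: float, reference_mean: float) -> float:
    """Ratio of mean uptake in one ROI to mean uptake in the reference region."""
    if reference_mean <= 0:
        raise ValueError("reference-region uptake must be positive")
    return roi_mean / reference_mean


def global_suvr(roi_means: Mapping[str, float], reference_mean: float) -> float:
    """Average of the individual ROI SUVRs, as described in the text."""
    if not roi_means:
        raise ValueError("at least one ROI is required")
    return mean(roi_suvr(value, reference_mean) for value in roi_means.values())


# Hypothetical mean uptake values (arbitrary units) for one FDG scan.
fdg_roi_means = {
    "angular_gyrus": 3.0,
    "inferior_temporal_gyrus": 2.0,
    "posterior_cingulate": 4.0,
}
pons_vermis_mean = 2.0  # hypothetical reference-region uptake

fdg_global_suvr = global_suvr(fdg_roi_means, pons_vermis_mean)  # (1.5 + 1.0 + 2.0) / 3 = 1.5
```

Note that averaging ROI ratios, as done here, is not the same as dividing the uptake of a single pooled ROI by the reference uptake when ROIs differ in size, which is one of the small design choices a pipeline description needs to state explicitly.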

For FDG PET pipeline validation, the SUVRs provided publicly by ADNI were used as the gold standard and compared against our in-house calculated SUVRs. For AV45 PET validation, we could not use the ADNI SUVRs because part of the ROI-based analysis had been performed with SPM2, an outdated software version that is no longer widely available or easy to deploy. Instead, we downloaded the fully pre-processed AV45 PET scans from ADNI and performed ROI-based analysis. The SUVRs calculated on the fully pre-processed ADNI AV45 images were used as the gold standard against our in-house calculations using the unprocessed images of the same AV45 scans. Gold-standard SUVRs were compared to in-house calculated SUVRs using R-squared values from linear regression and intra-class correlation coefficients (ICCs). Our results demonstrated strong linearity for FDG, AV45 FDG-based, and AV45 MRI-based global SUVRs, with R-squared of 0.99, 0.99 and 0.98, and ICCs of 0.998, 0.996, and 0.998, respectively (Figure 1C).
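The two agreement metrics used above can be sketched as follows. The text does not state which ICC form was used, so the sketch assumes ICC(2,1) (two-way random effects, absolute agreement, single measurement), treating the gold-standard and in-house pipelines as two raters; this choice, the function names, and the toy values are assumptions for illustration.

```python
from typing import Sequence


def _mean(values: Sequence[float]) -> float:
    return sum(values) / len(values)


def r_squared(x: Sequence[float], y: Sequence[float]) -> float:
    """R-squared of a simple linear regression of y on x (squared Pearson r)."""
    mx, my = _mean(x), _mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return (sxy * sxy) / (sxx * syy)


def icc_2_1(x: Sequence[float], y: Sequence[float]) -> float:
    """ICC(2,1) for two measurement methods applied to the same subjects."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need paired measurements from at least two subjects")
    n, k = len(x), 2
    values = list(x) + list(y)
    grand = _mean(values)
    row_means = [(a + b) / 2 for a, b in zip(x, y)]   # per-subject means
    col_means = [_mean(x), _mean(y)]                  # per-method means

    # Two-way ANOVA decomposition of the total sum of squares.
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((v - grand) ** 2 for v in values)
    ss_error = ss_total - ss_rows - ss_cols

    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))
    return (ms_rows - ms_error) / (
        ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n
    )


# Toy values: the second method reads exactly 1.0 higher than the first.
gold = [1.0, 2.0, 3.0]
in_house = [2.0, 3.0, 4.0]
toy_r2 = r_squared(gold, in_house)
toy_icc = icc_2_1(gold, in_house)
```

In this toy example R-squared equals 1 while ICC(2,1) is about 0.67, because R-squared ignores a constant offset between methods whereas an absolute-agreement ICC penalizes it. This is why reporting both metrics, as done above, is more informative than reporting linearity alone.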

4 Challenges in replicating ADNI PET processing pipeline

While our experimental study ultimately showed strong correlation between in-house and gold standard SUVRs for both tracers, replicating ADNI’s preprocessing and processing pipeline presented significant hurdles. Despite achieving high linearity and ICCs, incomplete documentation and ambiguous implementation details delayed our replication process. These challenges can be categorized into the following areas:

Standards for Writing and Publishing Scientific Studies:

• Lack of specification for intermediate steps, design choices, and software parameters and settings in ADNI documentation.

• Undocumented sub-steps in the ADNI pipeline, such as pre-smoothing frames before coregistration in step 1 and re-gridding AV45 scans using MRI when FDG scans are unavailable in step 3.

Data Sharing:

• Compatibility issues arose with NeuroStat requiring ECAT format files not available from the ADNI website.

• Obtaining undocumented, crucial information necessitated direct contact with the ADNI PET group.

Codes, Containers, and the Cloud:

• Transitioning between different software tools (e.g., NeuroStat and SPM) presented challenges due to varying data format requirements.

• Irreproducibility of ADNI’s normalization approach for AV45 scans due to the utilization of specific ADNI in-house software.

• Inability to use ADNI Avid SUVRs as the gold standard for AV45 pipeline validation because they rely on the outdated SPM2 software.

Optimizing and Standardizing Workflows:

• The use of ADNI’s in-house software for normalization made it difficult to replicate their exact approach, highlighting the need for standardized techniques.

5 Discussion

Our perspective and case study showed that PET neuroimaging research faces a concerning reproducibility gap compared to fMRI and MRI. While the sheer volume of research using fMRI and MRI contributes to this disparity, other factors are also at play. PET data often presents unique challenges due to lower signal-to-noise ratios, partial volume effects, and specialized reconstruction techniques. Additionally, the diverse clinical and research applications of PET contribute to a more heterogeneous research landscape compared to fMRI. The rapid evolution of PET technology further complicates standardization efforts.

Efforts within the PET community to address these challenges are ongoing. For instance, Greve et al. highlight how variations in partial volume correction methods can significantly impact results, underscoring the need for standardized approaches (Greve et al., 2016). Initiatives like the NeuroReceptor Mapping conference and the consensus paper also aim to develop guidelines and frameworks for PET research, although the field still lacks comprehensive and universally adopted standards (Knudsen et al., 2020). Pfaehler et al. also point out that the lack of publicly available data, heterogeneity in metrics, and insufficient reporting details hinder reproducibility in radiomic studies. They call for standardized preprocessing steps to improve comparability and reproducibility across studies (Pfaehler et al., 2021). A recent review emphasizes that while various organizations have developed harmonization strategies for quantitative PET, international methodology harmonization is still needed to ensure comparability across global clinical studies (Akamatsu et al., 2023).

Compared to PET, EEG and MEG (MEEG) have made strides in standardization, particularly regarding acquisition protocols, reporting, and analysis pipelines (Niso et al., 2022b; Gross et al., 2013). However, all these neuroimaging fields encounter obstacles related to data sharing, consistent analysis methods, and collaborative culture. As highlighted by the LiveMEEG 2020 conference (Niso et al., 2022b), a collaborative mindset is essential for advancing reproducibility in neuroimaging.

The MEEG community has demonstrated the value of shared efforts, emphasizing the importance of resource sharing, knowledge exchange, and joint problem-solving. While PET research has begun to embrace open science principles, there is a need for a more concerted and collaborative approach. The PET community must embrace open science practices like comprehensive documentation, open-source software, detailed pipeline descriptions, and dedicated communication platforms. Implementing stricter standards and data sharing policies across PET journals is crucial for fostering a cultural shift toward open science. The limited availability of standardized reporting checklists, pre-registration, containerized tools, and standardized processing pipelines exacerbates the issue. Initiatives like OASIS and ADNI have made strides in accumulating PET scans, but sharing data from newer radiotracers, such as flortaucipir, is crucial for advancing PET research. Promoting the development of standardized pipelines and tools should be a top priority. Solutions that incentivize open practices, such as designating funding for long-term maintenance of code and data repositories and taking data reuse into account in promotion, tenure, and funding decisions, can drive progress.

Data availability statement

The original contributions presented in the study are included in the article/Supplementary material; further inquiries can be directed to the corresponding author.

Ethics statement

This study involves de-identified data from humans who participated in the Alzheimer’s Disease Neuroimaging Initiative. Alzheimer’s Disease Neuroimaging Initiative participants provided written informed consent to participate in that study and share their de-identified data. The Alzheimer’s Disease Neuroimaging Initiative was conducted in accordance with local legislation and institutional requirements.

Author contributions

MN: Formal analysis, Methodology, Validation, Writing – original draft, Writing – review & editing. SR: Writing – original draft, Writing – review & editing, Methodology, Investigation. OC: Writing – original draft, Writing – review & editing, Conceptualization, Funding acquisition.

Funding

The author(s) declare that financial support was received for the research, authorship, and/or publication of this article. This work was supported by NIH grants RF1 AG075769, R01 AG077497, R01 AG077000, R01 AG041200, R01 AG062309.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fninf.2024.1420315/full#supplementary-material

References

Abe, T., Kinsella, I., Saxena, S., Buchanan, E. K., Couto, J., Briggs, J., et al. (2022). Neuroscience cloud analysis as a service: an open-source platform for scalable, reproducible data analysis. Neuron 110, 2771–2789.e7. doi: 10.1016/j.neuron.2022.06.018

Acar, F., Maumet, C., Heuten, T., Vervoort, M., Bossier, H., Seurinck, R., et al. (2023). Reporting practices for task fMRI studies. Neuroinformatics 21, 221–242. doi: 10.1007/s12021-022-09606-2

Aguinis, H., Ramani, R. S., and Alabduljader, N. (2018). What you see is what you get? Enhancing methodological transparency in management research. Acad. Manag. Ann. 12, 83–110. doi: 10.5465/annals.2016.0011

Akamatsu, G., Tsutsui, Y., Daisaki, H., Mitsumoto, K., Baba, S., and Sasaki, M. (2023). A review of harmonization strategies for quantitative PET. Ann. Nucl. Med. 37, 71–88. doi: 10.1007/s12149-022-01820-x

Botvinik-Nezer, R., Holzmeister, F., Camerer, C. F., Dreber, A., Huber, J., Johannesson, M., et al. (2020). Variability in the analysis of a single neuroimaging dataset by many teams. Nature 582, 84–88. doi: 10.1038/s41586-020-2314-9

Button, K. S., Ioannidis, J. P., Mokrysz, C., Nosek, B. A., Flint, J., Robinson, E. S., et al. (2013). Power failure: why small sample size undermines the reliability of neuroscience. Nat. Rev. Neurosci. 14, 365–376. doi: 10.1038/nrn3475

Churchill, N. W., Oder, A., Abdi, H., Tam, F., Lee, W., Thomas, C., et al. (2012). Optimizing preprocessing and analysis pipelines for single-subject fMRI. I. Standard temporal motion and physiological noise correction methods. Hum. Brain Mapp. 33, 609–627. doi: 10.1002/hbm.21238

Dafflon, J., Costa, F. D. A., Monti, R. P., Bzdok, D., Hellyer, P. J., Turkheimer, F., et al. (2022). A guided multiverse study of neuroimaging analyses. Nat. Commun. 13:3758. doi: 10.1038/s41467-022-31347-8

David, S. P., Ware, J. J., Chu, I. M., Loftus, P. D., Fusar-Poli, P., Radua, J., et al. (2013). Potential reporting bias in fMRI studies of the brain. PLoS One 8:e70104. doi: 10.1371/journal.pone.0070104

Della Rosa, P. A., Cerami, C., Gallivanone, F., Prestia, A., Caroli, A., Castiglioni, I., et al. (2014). A standardized [18F]-FDG-PET template for spatial normalization in statistical parametric mapping of dementia. Neuroinformatics 12, 575–593. doi: 10.1007/s12021-014-9235-4

Demidenko, M. I., Mumford, J. A., and Poldrack, R. A. (2024). Impact of analytic decisions on test-retest reliability of individual and group estimates in functional magnetic resonance imaging: a multiverse analysis using the monetary incentive delay task. bioRxiv. doi: 10.1101/2024.03.19.585755

Eklund, A., Nichols, T. E., and Knutsson, H. (2016). Cluster failure: why fMRI inferences for spatial extent have inflated false-positive rates. Proc. Natl. Acad. Sci. 113, 7900–7905. doi: 10.1073/pnas.1602413113

Epskamp, S., and Nuijten, M. B. (2016). statcheck: “Using “statcheck” to detect and prevent statistical reporting inconsistencies.” International Conference on Teaching Statistics. 2018. package version 0.1.

Esteban, O., Markiewicz, C. J., Blair, R. W., Moodie, C. A., Isik, A. I., Erramuzpe, A., et al. (2019). fMRIPrep: a robust preprocessing pipeline for functional MRI. Nat. Methods 16, 111–116. doi: 10.1038/s41592-018-0235-4

Frank, M. C., Bergelson, E., Bergmann, C., Cristia, A., Floccia, C., Gervain, J., et al. (2017). A collaborative approach to infant research: promoting reproducibility, best practices, and theory-building. Infancy 22, 421–435. doi: 10.1111/infa.12182

Gorgolewski, K. J., Alfaro-Almagro, F., Auer, T., Bellec, P., Capotă, M., Chakravarty, M. M., et al. (2017). BIDS apps: improving ease of use, accessibility, and reproducibility of neuroimaging data analysis methods. PLoS Comput. Biol. 13:e1005209. doi: 10.1371/journal.pcbi.1005209

Gorgolewski, K. J., Auer, T., Calhoun, V. D., Craddock, R. C., Das, S., Duff, E. P., et al. (2016). The brain imaging data structure, a format for organizing and describing outputs of neuroimaging experiments. Sci. Data 3, 1–9. doi: 10.1038/sdata.2016.44

Gorgolewski, K. J., and Poldrack, R. A. (2016). A practical guide for improving transparency and reproducibility in neuroimaging research. PLoS Biol. 14:e1002506. doi: 10.1371/journal.pbio.1002506

Greve, D. N., Salat, D. H., Bowen, S. L., Izquierdo-Garcia, D., Schultz, A. P., Catana, C., et al. (2016). Different partial volume correction methods lead to different conclusions: an 18F-FDG-PET study of aging. NeuroImage 132, 334–343. doi: 10.1016/j.neuroimage.2016.02.042

Gross, J., Baillet, S., Barnes, G. R., Henson, R. N., Hillebrand, A., Jensen, O., et al. (2013). Good practice for conducting and reporting MEG research. NeuroImage 65, 349–363. doi: 10.1016/j.neuroimage.2012.10.001

Guo, Q., Parlar, M., Truong, W., Hall, G., Thabane, L., Mckinnon, M., et al. (2014). The reporting of observational clinical functional magnetic resonance imaging studies: a systematic review. PLoS One 9:e94412. doi: 10.1371/journal.pone.0094412

Halchenko, Y. O., and Hanke, M. (2012). Open is not enough. Let's take the next step: an integrated, community-driven computing platform for neuroscience. Front. Neuroinform. 6:22. doi: 10.3389/fninf.2012.00022

Hong, Y.-W., Yoo, Y., Han, J., Wager, T. D., and Woo, C.-W. (2019). False-positive neuroimaging: undisclosed flexibility in testing spatial hypotheses allows presenting anything as a replicated finding. NeuroImage 195, 384–395. doi: 10.1016/j.neuroimage.2019.03.070

Insel, P. S., Donohue, M. C., Sperling, R., Hansson, O., and Mattsson-Carlgren, N. (2020). The A4 study: β-amyloid and cognition in 4432 cognitively unimpaired adults. Ann. Clin. Transl. Neurol. 7, 776–785. doi: 10.1002/acn3.51048

Jagust, W. J., Landau, S. M., Koeppe, R. A., Reiman, E. M., Chen, K., Mathis, C. A., et al. (2015). The Alzheimer's disease neuroimaging initiative 2 PET core: 2015. Alzheimers Dement. 11, 757–771. doi: 10.1016/j.jalz.2015.05.001

Joshi, A. D., Pontecorvo, M. J., Lu, M., Skovronsky, D. M., Mintun, M. A., and Devous, M. D. (2015). A semiautomated method for quantification of F 18 florbetapir PET images. J. Nucl. Med. 56, 1736–1741. doi: 10.2967/jnumed.114.153494

Klapwijk, E. T., Van Den Bos, W., Tamnes, C. K., Raschle, N. M., and Mills, K. L. (2021). Opportunities for increased reproducibility and replicability of developmental neuroimaging. Dev. Cogn. Neurosci. 47:100902. doi: 10.1016/j.dcn.2020.100902

Knudsen, G. M., Ganz, M., Appelhoff, S., Boellaard, R., Bormans, G., Carson, R. E., et al. (2020). Guidelines for the content and format of PET brain data in publications and archives: a consensus paper. J. Cereb. Blood Flow Metab. 40, 1576–1585. doi: 10.1177/0271678X20905433

Kristanto, D., Burkhardt, M., Thiel, C., Debener, S., Giessing, C., and Hildebrandt, A. (2024). The multiverse of data preprocessing and analysis in graph-based fMRI: a systematic literature review of analytical choices fed into a decision support tool for informed analysis. Neurosci. Biobehav. Rev. 165:105846. doi: 10.1016/j.neubiorev.2024.105846

Kuhn, F. P., Warnock, G. I., Burger, C., Ledermann, K., Martin-Soelch, C., and Buck, A. (2014). Comparison of PET template-based and MRI-based image processing in the quantitative analysis of C11-raclopride PET. EJNMMI Res. 4, 1–7. doi: 10.1186/2191-219X-4-7

Landau, S. M., Harvey, D., Madison, C. M., Koeppe, R. A., Reiman, E. M., Foster, N. L., et al. (2011). Associations between cognitive, functional, and FDG-PET measures of decline in AD and MCI. Neurobiol. Aging 32, 1207–1218. doi: 10.1016/j.neurobiolaging.2009.07.002

Li, L., Shiradkar, R., Gottlieb, N., Buzzy, C., Hiremath, A., Viswanathan, V. S., et al. (2024). Multi-scale statistical deformation based co-registration of prostate MRI and post-surgical whole mount histopathology. Med. Phys. 51, 2549–2562. doi: 10.1002/mp.16753

Loring, D., Meador, K., Allison, J. D., Pillai, J., Lavin, T., Lee, G. P., et al. (2002). Now you see it, now you don’t: statistical and methodological considerations in fMRI. Epilepsy Behav. 3, 539–547. doi: 10.1016/S1525-5050(02)00558-9

Marcus, D. S., Wang, T. H., Parker, J., Csernansky, J. G., Morris, J. C., Buckner, R., et al. (2007). Open access series of imaging studies (OASIS): cross-sectional MRI data in young, middle aged, nondemented, and demented older adults. J. Cogn. Neurosci. 19, 1498–1507. doi: 10.1162/jocn.2007.19.9.1498
