The human brain, a sophisticated and complex organ, possesses remarkable capabilities such as decision-making, memory formation, visual and auditory processing, language comprehension, spatial awareness, and motor control. These functions are thought to arise from neural networks in which a large number of neurons are diversely connected via synapses. To simulate brain function, neurons have been mathematically described using either non-spiking or spiking models. Non-spiking models simulate the neuronal firing rate, that is, the frequency of the electrical impulses (spikes) emitted by neurons. This approach is based on the prevalent view that neurons primarily encode information through variations in their firing rate. A notable example of this type of modeling is the convolutional neural network, which is widely used in contemporary AI technology. However, non-spiking models are limited in their ability to accurately replicate spike-timing-dependent plasticity (STDP), an essential mechanism for learning and memory across various regions of the brain, including the visual cortex (Fu et al., 2002; Yao et al., 2004), somatosensory cortex (Allen et al., 2003; Celikel et al., 2004), hippocampus (Bi and Poo, 1998; Wittenberg and Wang, 2006), and cerebellum (Piochon et al., 2013). Therefore, to construct a neural network modeled on the brain, it is crucial to accurately simulate STDP. Spiking neuron models produce spikes to represent their activities, thereby enabling the representation of STDP. Spiking neural networks have gained attention in various fields, including those envisioning artificial general intelligence (AGI) and other specialized AI applications (Calimera et al., 2013; Pfeiffer and Pfeil, 2018; Yang and Chen, 2023; Yang et al., 2023).
They have also been utilized in neuromorphic computing to emulate the structure and function of biological neural circuits efficiently (Boi et al., 2016; Osborn et al., 2018; Donati et al., 2019; Mosbacher et al., 2020). Contemporary AI development has primarily focused on mimicking the cognitive and decision-making functions of the cerebrum in humans and animals. However, the cerebellum, known for its role in motor control and learning, has also been recently identified as playing a part in higher cognitive functions and decision-making (Ito, 2008; Strick et al., 2009; Liu et al., 2022). Thus, neural networks that emulate both cerebral and cerebellar circuits could significantly advance the development of more intelligent AI and AGI. Moreover, the cerebellum has been demonstrated to be critical in the adaptive motor control of various movements, including eye movements (McLaughlin, 1967; Ito et al., 1970; Miles et al., 1986; Nagao, 1988), eye blinks (Lincoln et al., 1982; Yeo et al., 1985), arm reaching (Martin et al., 1996; Kitazawa et al., 1998), gait (Mori et al., 1999; Ichise et al., 2000), and posture (Nashner, 1976), among others (Monzée et al., 2004; Leiner, 2010). In the realm of advanced robotics, an artificial cerebellum should be highly effective for achieving human-like flexible motor control and learning. Specifically, an artificial cerebellum that operates in real time, remains compact, and consumes little power holds potential for applications in neuroprosthetics and implantable brain-machine interfaces. Such advancements may provide viable solutions to compensate for impaired motor functions.

The cerebellar neural network is well-understood regarding its anatomical connectivity and physiological neuronal characteristics, as detailed in studies by Eccles et al. (1967) and Gao et al. (2012), among many others. Since the pioneering theoretical work by Marr (1969) and Albus (1971), several artificial cerebellums have been developed. Similar to other neural network models, these artificial cerebellum models can be classified into two major types: spiking and non-spiking. A representative non-spiking cerebellar model is the cerebellar model articulation controller (CMAC), proposed by Albus (1975). CMAC has demonstrated exceptional performance and robustness as a non-model-based, nonlinear adaptive control scheme in controlling a submarine (Huang and Hira, 1992; Lin et al., 1998) and an omnidirectional mobile robot (Jiang et al., 2018). Examples of spiking models of the cerebellum include a model to explain the timing mechanism of eyeblink conditioning (Medina et al., 2000), and a model for acute vestibulo-ocular reflex motor learning (Inagaki and Hirata, 2017). Moreover, a realistic 3D cerebellar scaffold model running on pyNEST and pyNEURON simulators (Casali et al., 2019), a cerebellar model capable of real-time simulation with 100k neurons using 4 NVIDIA Tesla V100 GPUs (Kuriyama et al., 2021), and a Human-Scale Cerebellar Model composed of 68 billion spiking neurons utilizing the supercomputer K (Yamaura et al., 2020) have been constructed. A caveat with these spiking cerebellar models is their resource-intensive nature compared to non-spiking models due to the computational demands of simulating spike dynamics in neurons. Consequently, real-time simulations become challenging without substantial processing power.

In recent years, dedicated neuromorphic chips, designed for real-time computations of spiking neural networks and the simulation of various network types with synaptic plasticity, have been developed. These chips offer new possibilities for advanced neural network modeling. One such chip is the Loihi2, capable of handling up to 1 million spiking neurons across 128 cores. Each core has 192 kB of local memory, with 128 kB allocated specifically for synapses (Davies et al., 2018; Davies, 2023). This setup allows the simulation of up to 64k synapses per neuron when using 16-bit precision for synaptic weights. However, each core is designed to handle a maximum of 8,192 neurons. If a neuron requires more than 8k synapses, the number of neurons per core must be reduced. This can lead to inefficiencies, as neuron memory may remain underutilized. Another notable neuromorphic chip is the TrueNorth, which can simulate 1 million spiking neurons. However, it faces limitations with local memory for synaptic states, providing only 13 kB per core. This restricts each neuron to have just 256 synapses (Merolla et al., 2014), significantly fewer than found in cerebellar cortical neurons such as Purkinje cells. Overall, these neuromorphic chips are constrained by their memory capacity, which poses challenges for efficiently simulating complex structures like the cerebellum.

Field-programmable gate arrays (FPGAs) allow designers to program configurations of logic circuits with low power consumption, giving them an edge over central processing units (CPUs) and graphics processing units (GPUs) in developing specialized and efficient architectures. Several studies have implemented spiking neural networks, including those of the cerebellum, on FPGAs. For instance, Cassidy et al. (2011) implemented 1 million neurons on a Xilinx Virtex-6 SX475 FPGA-based neuromorphic system. Neil and Liu (2014) developed a deep belief network of 65k neurons with a power consumption of 1.5 W on an FPGA-based spiking network accelerator using a Xilinx Spartan-6 LX150 FPGA. Luo et al. (2016) implemented the cerebellar granular layer of 101k neurons on a Xilinx Virtex-7 VC707 FPGA, with a power consumption of 2.88 W. In a similar vein, Xu et al. (2018) implemented an artificial cerebellum with 10k spiking neurons on a Xilinx Kintex-7 KC705 FPGA, applying it to neuroprosthesis in rats with an eye blink conditioning scheme. Lastly, Yang et al. (2022) implemented a cerebellar network of 3.5 million neurons on six Altera EP3SL340 FPGAs, with a power consumption of 10.6 W, and evaluated it by simulating the optokinetic response. These efforts highlight the significant role of FPGAs in neuromorphic computing, offering powerful and efficient solutions for simulating complex neural networks such as those found in the cerebellum.

One of the potential applications of the artificial cerebellum is implantable brain-machine interfaces used for neuroprosthesis. However, the chronic use of such active implanted devices raises safety concerns, particularly due to thermal effects. Studies have shown that temperature elevations greater than 3 °C above normal body temperature can induce physiological abnormalities such as angiogenesis and necrosis (Seese et al., 1998), and the temperature increase due to the power consumption of an implanted microelectrode array in the brain is estimated to be 0.029 °C/mW (Kim et al., 2007). Dividing the 3 °C limit by this coefficient gives roughly 103 mW, suggesting that the power consumption of hardware implanted in the brain should not exceed about 100 mW. From this perspective, even the FPGAs that have been used to implement cerebellar spiking neural networks (Cassidy et al., 2011; Neil and Liu, 2014; Xu et al., 2018; Yang et al., 2022) are not currently suitable for creating devices for this purpose.

In this study, we aim to construct an artificial cerebellum that is portable, lightweight, and low in power consumption. To achieve this, we used a Xilinx Spartan-6 FPGA and employed three novel techniques to effectively incorporate the distinctive cerebellar characteristics. First, we reduced the required number of arithmetic and storage devices while maintaining numerical accuracy. This was achieved by using only 16-bit fixed-point numbers and introducing randomized rounding in the computation of numerical solutions for the differential equations that describe each spiking neuron model. Second, to facilitate the transmission of spike information between the computational units of each neuron, we installed a spike storage unit equipped with a data transmission circuit. This circuit is fully coupled between the pre- and postsynaptic neurons, effectively eliminating the latency that depends on the number of neurons and spikes. Third, to further decrease the number of necessary storage devices, we introduced a pseudo-random number generator. This generator takes the place of a storage device holding information about connections between neuron models. As a result, a cerebellar cortical neural circuit model consisting of 9,504 neurons and 240,484 synapses was successfully implemented on the FPGA with low power consumption (< 0.6 W) and real-time operation (1 ms time step). To validate the model, we compared the firing properties of a minimum-scale cerebellar neuron network model on the FPGA with those of the same model implemented on a personal computer in Python using 64-bit floating-point numbers. This comparison demonstrated that the model on the FPGA possesses sufficient computational accuracy to simulate spike timings. Furthermore, we applied the FPGA spiking artificial cerebellum to the adaptive control of a direct current (DC) motor in a real-world setting.
This demonstration showed that the artificial cerebellum is capable of adaptively controlling the DC motor, maintaining its performance even when its load undergoes sudden changes in a noisy natural environment.

2 Materials and methods

2.1 Cerebellar spiking neuronal network model

The artificial cerebellum implemented on the FPGA in the present study is similar to those previously constructed with reference to anatomical and physiological evidence on the cerebellar cortex (Medina et al., 2000; Inagaki and Hirata, 2017; Casali et al., 2019; Kuriyama et al., 2021). At present, the scale of the artificial cerebellum (the number of neuron models) is set to ∼10⁴ neurons, which is limited by the specifications of the FPGA (XC6SLX100, Xilinx) used in the current study (see below for more detailed specifications).

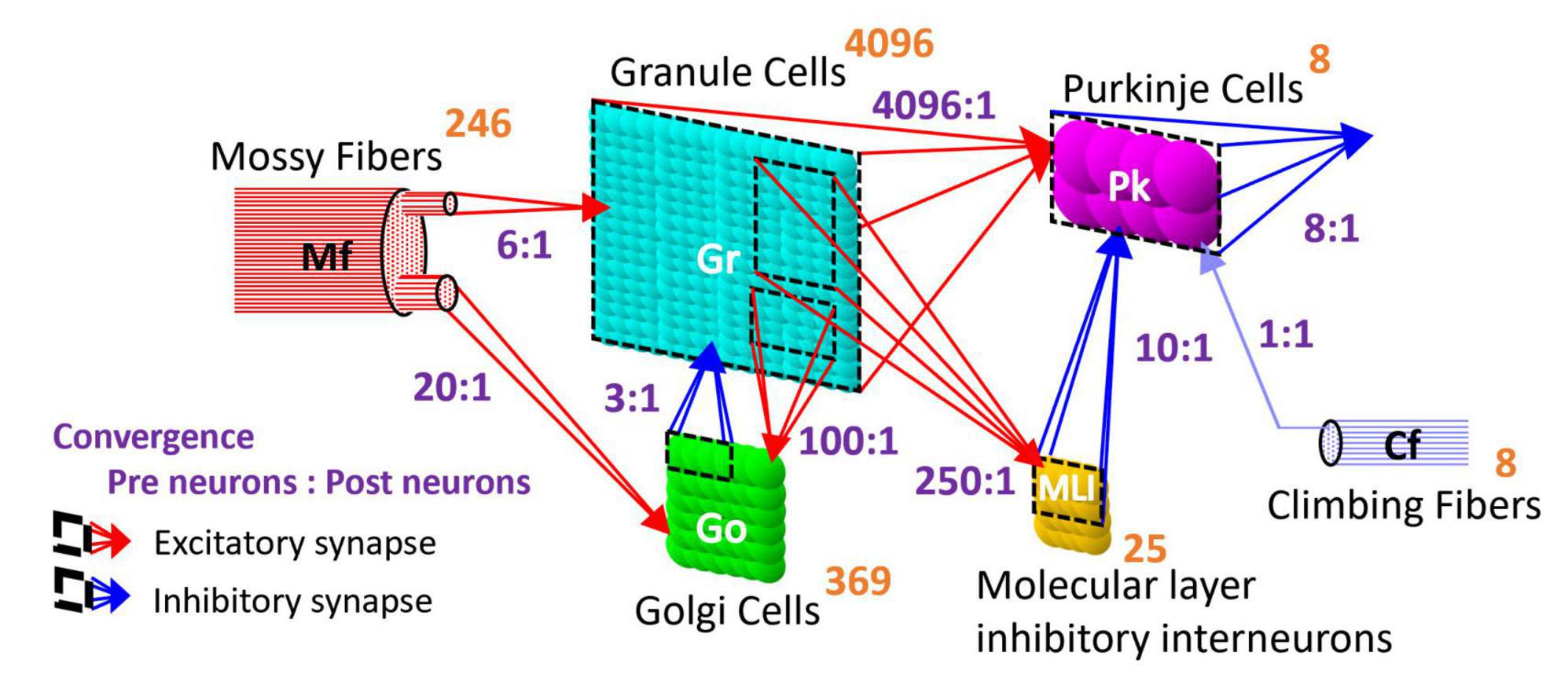

2.1.1 Network structure

The artificial cerebellum has a bi-hemispheric structure like the real cerebellum (Figure 1). Each hemisphere consists of 246 mossy fibers (MF), 8 climbing fibers (CF), 4096 granule cells (GrC), 369 Golgi cells (GoC), 25 molecular layer inhibitory interneurons (MLI), and 8 Purkinje cells (PkC). These numbers were determined so that the model can be implemented and run in real time on the FPGA currently employed (see below) while keeping the convergence-divergence ratios of these fiber/neuron types (Figure 1, purple numbers) as close as possible to those found in the vertebrate cerebellum (Medina et al., 2000). Note that this number of GrCs (4096) has been demonstrated to be enough to control real-world objects such as a two-wheeled balancing robot robustly (i.e., independently of the initial synaptic weight values) (Pinzon-Morales and Hirata, 2015). In the cerebellar cortical neuronal network, MFs connect to GrCs and GoCs via excitatory synapses, and GrCs connect to PkCs, GoCs, and MLIs via excitatory synapses. GoCs connect back to GrCs via inhibitory synapses, while MLIs connect to PkCs via inhibitory synapses. PkCs connect to extracerebellar areas via inhibitory synapses.

Figure 1. Structure of the artificial cerebellum. The numeral in orange in the top-right corner of each neuron represents the total number of neurons while the ratios indicated in blue between presynaptic and postsynaptic neurons represent the convergence ratios.

2.1.2 Spiking neuron model

Neurons and input fibers in the artificial cerebellum are described by the following leaky integrate-and-fire model (Gerstner and Kistler, 2002; Izhikevich, 2010), given by Eqs 1–3:

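$$C\frac{dv(t)}{dt} = -g_L\,\bigl(v(t) - E_l\bigr) + i(t) + i_{spont}(t) \tag{1}$$

$$v(t) = \begin{cases} v(t) + (V_r - V_{th}) & \text{if } v(t) > V_{th} \\ v(t) & \text{otherwise} \end{cases} \tag{2}$$

$$\delta(t) = \begin{cases} 1 & \text{if } v(t) > V_{th} \\ 0 & \text{otherwise} \end{cases} \tag{3}$$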

where v(t), i(t), and ispont(t) are the membrane potential, the input synaptic current, and the current producing spontaneous firing at time t, respectively. C is the membrane capacitance, and gL is the leak conductance. When the membrane potential v(t) exceeds the threshold Vth, it is shifted by Vr − Vth (Eq. 2), which resets it to approximately the resting potential Vr, and the unit impulse function δ(t) outputs 1. Otherwise, the unit impulse function δ(t) outputs 0. The outputs are transmitted to the postsynaptic neuron and induce postsynaptic current (see Section “2.1.3 Synapse model”). The current ispont(t) simulates the spontaneous discharge. It is drawn from a uniform random distribution over [0, 2Ispont] to prevent the spontaneous spikes of different neurons from occurring at the same time. The mean spontaneous discharge current, Ispont, is taken from the cerebellum model of Casali et al. (2019). The constants for each neuron/fiber type are listed in Table 1. They are the same as in previous realistic artificial cerebellums (Casali et al., 2019; Kuriyama et al., 2021), except that the parameters of the input fibers were arbitrarily defined so that their firing frequencies become physiologically appropriate.
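As an illustration of how Eqs 1–3 can be advanced in time, the following is a minimal floating-point sketch of one forward Euler step with a 1 ms time step. The parameter values in `PARAMS` are placeholders chosen only so that the example runs (the actual constants are those of Table 1), and the function names and the unit convention (pF, nS, mV, pA, ms) are assumptions of this sketch rather than part of the FPGA design.

```python
import random

# Hypothetical parameters (NOT the Table 1 values): pF, nS, mV, mV, mV.
PARAMS = dict(c=3.0, g_l=1.5, e_l=-74.0, v_th=-42.0, v_r=-84.0)


def spontaneous_current(i_spont_mean, rng):
    """Draw i_spont(t) uniformly from [0, 2 * I_spont] (in pA)."""
    return rng.uniform(0.0, 2.0 * i_spont_mean)


def lif_step(v, i_syn, i_spont, *, c, g_l, e_l, v_th, v_r, dt=1.0):
    """Advance one neuron by one Euler step of Eq. 1, then apply Eqs 2-3.

    Returns the new membrane potential (mV) and the unit impulse (0 or 1).
    """
    # Eq. 1 discretized: v <- v + (dt / C) * (-g_L (v - E_l) + i + i_spont)
    v = v + (dt / c) * (-g_l * (v - e_l) + i_syn + i_spont)
    if v > v_th:
        # Eq. 2 shifts the potential by (V_r - V_th); Eq. 3 emits an impulse.
        return v + (v_r - v_th), 1
    return v, 0


# Usage: a constant 60 pA input drives the neuron from rest to threshold.
rng = random.Random(0)
v, n_spikes = PARAMS["e_l"], 0
for _ in range(10):
    v, spike = lif_step(v, 60.0, 0.0, **PARAMS)
    n_spikes += spike
```

With dt = 1 ms the update reduces to a multiply-accumulate per neuron, which is the form that the coefficients α = 1/C and β = 1 − gL/C in Figure 3 suggest.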

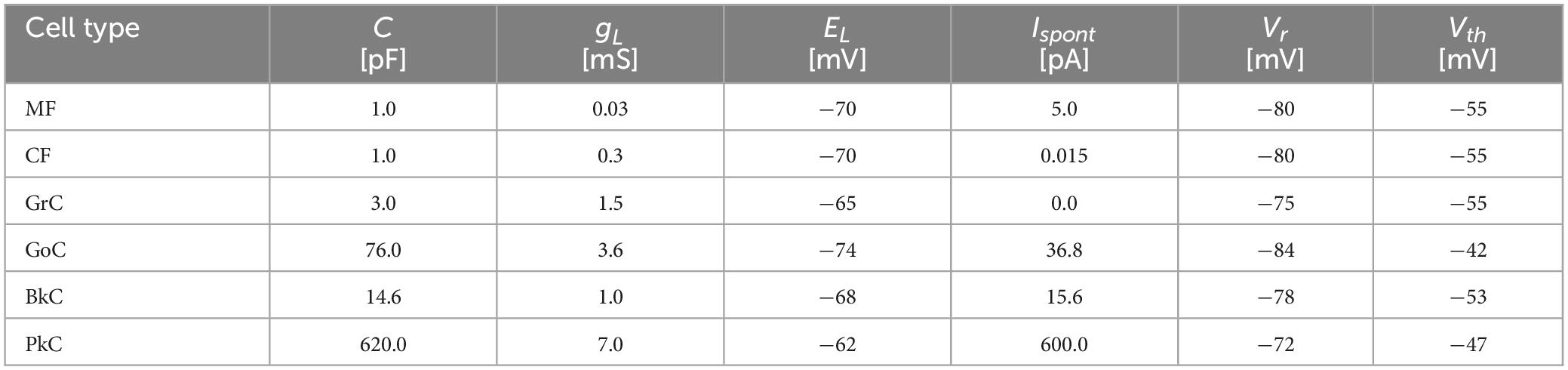

Table 1. Neuron parameters.

2.1.3 Synapse model

The synaptic transmission properties are described by the following conductance-based synapse model (Gerstner and Kistler, 2002; Izhikevich, 2010), given by Eqs 4, 5:

$$\frac{dg_m(t)}{dt} = \sum_{n=0}^{N} \delta_n(t)\,w_{n+mN} - \frac{g_m(t)}{\tau_{syn}} \tag{4}$$

$$i(t) = -\sum_{m=0}^{M} g_m(t)\,\bigl(v(t) - E_m\bigr) \tag{5}$$

where, gm(t) is the synaptic conductance of the m-th postsynaptic neuron, δn (t) is the unit impulse of the n-th presynaptic neuron or fiber, and wn+mN is the synaptic transmission efficiency between the n-th presynaptic neuron or fiber and the postsynaptic neuron. N is the number of presynaptic neurons. τsyn is the time constant. Em is the reversal membrane potential which is positive or negative for excitatory or inhibitory synapse, respectively. As a result, the sign of the synaptic current i(t) differs between the excitatory synapse and the inhibitory synapse. M is the number of presynaptic neuron types. The ratios of the numbers of synaptic connections between different neuron types were as shown in Figure 1 (Nc1:Nc2) where Nc1 and Nc2 are the numbers of presynaptic and postsynaptic neurons, respectively. The connections between presynaptic and postsynaptic neurons are determined by a pseudo-random number generator described later. The initial values of synaptic transmission efficiency w of all synapses were assigned by Gaussian random numbers whose means and variances are different for different neuron types as listed in Table 2. In the current model, only parallel fiber (PF, the axonal extensions of GrC)–PkC synapses undergo synaptic plasticity as described below. Other synaptic efficacies were fixed at the initial value throughout the execution. The synaptic constants τsyn and Em were set as shown in Table 2 based on anatomical and physiological findings (Medina et al., 2000; Kuriyama et al., 2021).
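Equations 4 and 5 can be sketched in discrete time as follows. This is a minimal floating-point illustration with a 1 ms step, not the FPGA circuit: the function names are hypothetical, the time constant used in the usage lines is a placeholder rather than a Table 2 value, and the units (nS, mV, pA, ms) are an assumption of the sketch.

```python
def conductance_step(g, spikes, weights, tau_syn, dt=1.0):
    """One Euler step of Eq. 4 for a single synaptic conductance (nS).

    Each presynaptic impulse adds its weight once; between impulses the
    conductance decays with time constant tau_syn (ms).
    """
    drive = sum(w for s, w in zip(spikes, weights) if s)
    return g - dt * g / tau_syn + drive


def synaptic_current(v, conductances, reversals):
    """Eq. 5: total synaptic current (pA) at membrane potential v (mV)."""
    return -sum(g * (v - e) for g, e in zip(conductances, reversals))


# Usage with a placeholder time constant of 2 ms.
g = conductance_step(0.0, [1, 0], [0.32, 0.0938], tau_syn=2.0)  # one input spikes
g_next = conductance_step(g, [0, 0], [0.32, 0.0938], tau_syn=2.0)  # pure decay
i_exc = synaptic_current(-70.0, [g], [0.0])    # reversal above v: depolarizing
i_inh = synaptic_current(-70.0, [g], [-80.0])  # reversal below v: hyperpolarizing
```

The sign of the resulting current depends only on whether the reversal potential lies above or below the present membrane potential, which is how a single expression covers both excitatory and inhibitory synapses.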

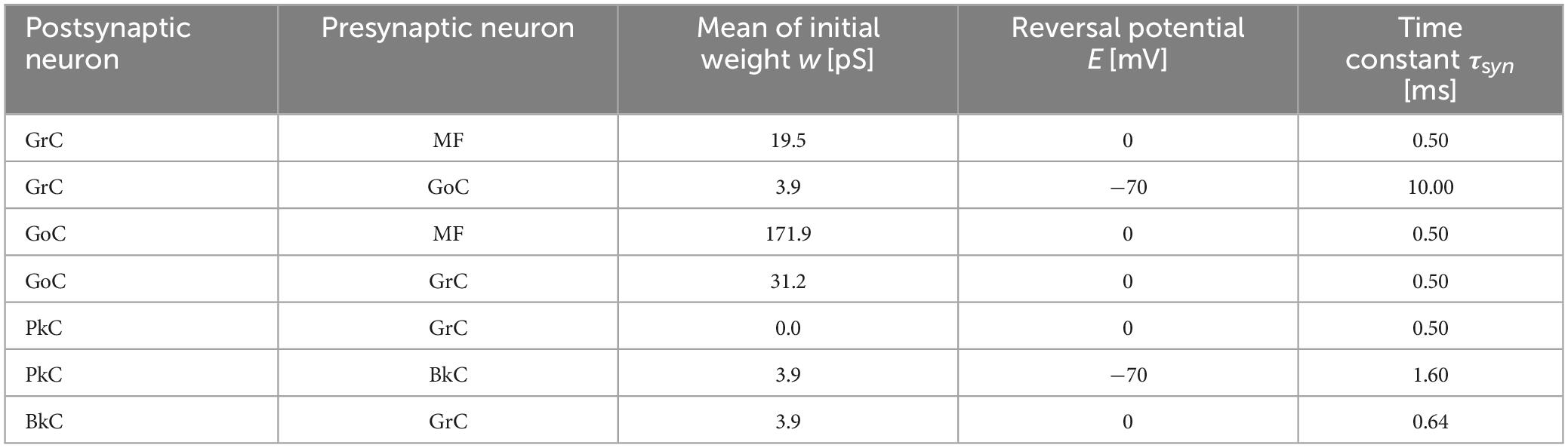

Table 2. Synapse parameters.

2.1.4 Synaptic plasticity model

The synapses between the PF and the PkC are the loci where the memory of motor learning has been proposed to be stored (Ito, 2001; Takeuchi et al., 2008). The plasticity at these synapses includes long-term depression (LTD) and long-term potentiation (LTP). The present model implements the plasticity described by Eqs 6, 7:

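$$\frac{dw_{PF\text{-}PkC}(t)}{dt} = -\gamma_{LTD}\,\delta_{CF}(t)\,q_{GrC}(t) + \gamma_{LTP}\,\bar{\delta}_{CF}(t)\,\delta_{GrC}(t) \tag{6}$$

$$\tau_{LTD}\frac{dq_{GrC}(t)}{dt} = \delta_{GrC}(t) - q_{GrC}(t) \tag{7}$$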

Here, qGrC represents the average firing rate of the GrC, δGrC(t) represents the unit impulse of the GrC, and δ¯CF(t) represents the negation of the unit impulse of the CF. δGrC(t) takes the value 1 when the GrC fires a spike and 0 otherwise. The synaptic weight wPF−PkC(t) increases or decreases from the initial value 0 in the range of [0, 1]. When the firing of GrC and CF is synchronized, LTD occurs (Ekerot and Jörntell, 2003). This plasticity is modeled by reducing the synaptic transmission efficiency wPF−PkC(t) by the product of qGrC(t) and the coefficient γLTD = 5.94 × 10⁻⁸ when the CF spikes at time t. The average firing rate qGrC is obtained by a low-pass filter with the time constant τLTD = 100 ms. On the other hand, LTP is induced when GrC fires and CF does not fire (Hirano, 1990; Jörntell and Hansel, 2006). This plasticity is modeled by increasing the synaptic transmission efficiency wPF−PkC(t) by the product of δ¯CF(t), δGrC(t), and the coefficient γLTP = 4.17 × 10⁻⁷.
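The update rule of Eqs 6 and 7 can be sketched per 1 ms time step as below. The coefficients are the ones stated in the text; the function name, the floating-point arithmetic, the order of the two updates, and the starting weight of 0.5 in the usage lines are assumptions made only for illustration.

```python
GAMMA_LTD = 5.94e-8  # LTD coefficient from the text
GAMMA_LTP = 4.17e-7  # LTP coefficient from the text
TAU_LTD = 100.0      # ms, time constant of the firing-rate filter


def plasticity_step(w, q, grc_spike, cf_spike):
    """One 1 ms step of Eqs 6-7 for a single PF-PkC synapse.

    w: synaptic weight, kept in [0, 1]
    q: low-pass filtered GrC activity
    grc_spike, cf_spike: unit impulses (0 or 1) of the GrC and the CF
    """
    # Eq. 6: LTD when the CF fires (scaled by recent GrC activity),
    # LTP when the GrC fires and the CF does not.
    w = w - GAMMA_LTD * cf_spike * q + GAMMA_LTP * (1 - cf_spike) * grc_spike
    w = min(1.0, max(0.0, w))
    # Eq. 7: first-order low-pass filter of the GrC impulse train.
    q = q + (grc_spike - q) / TAU_LTD
    return w, q


# Usage: a GrC spike without CF activity potentiates the synapse,
# and a CF spike 1 ms later depresses it in proportion to q.
w1, q1 = plasticity_step(0.5, 0.0, grc_spike=1, cf_spike=0)
w2, q2 = plasticity_step(w1, q1, grc_spike=0, cf_spike=1)
```

Because q decays with a 100 ms time constant, a CF spike depresses only those synapses whose GrC was active within roughly the preceding few hundred milliseconds.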

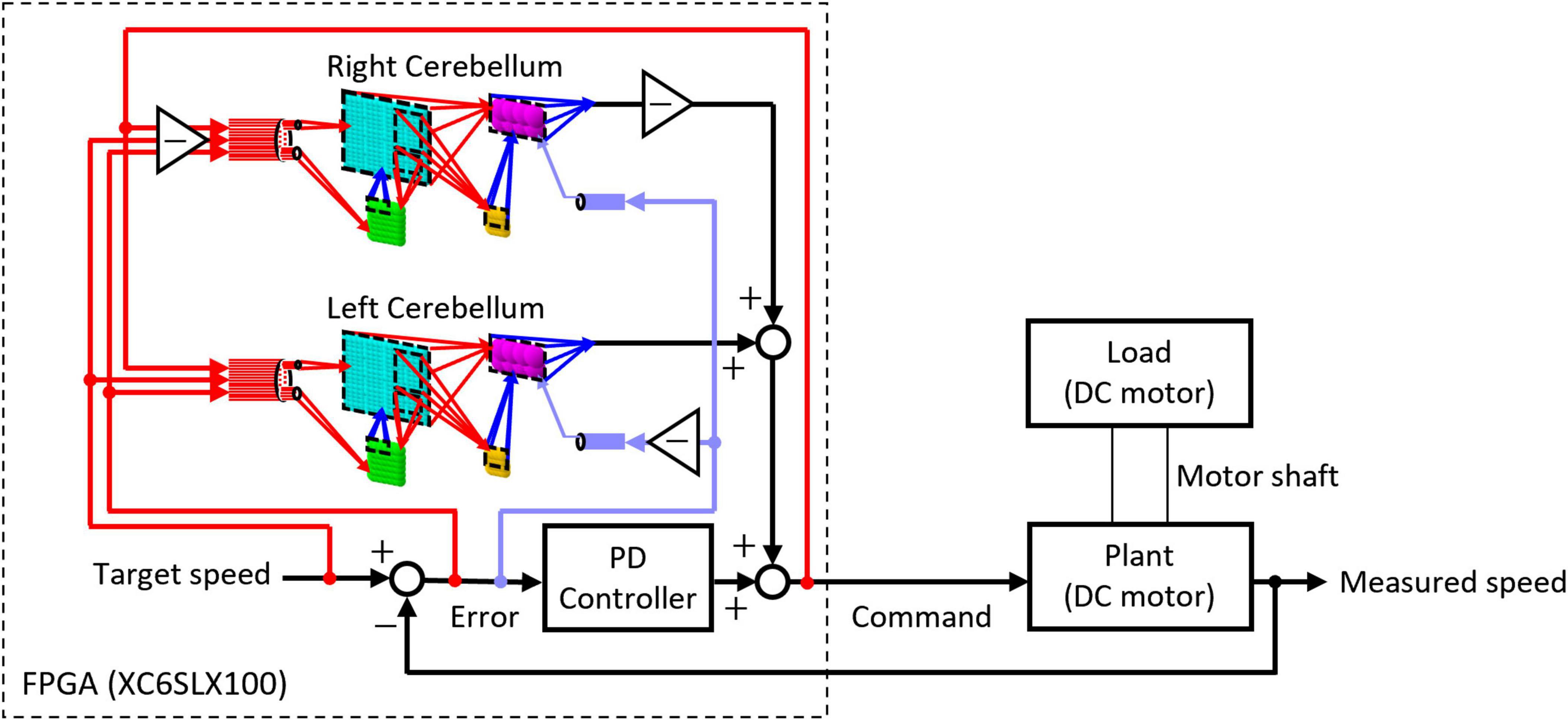

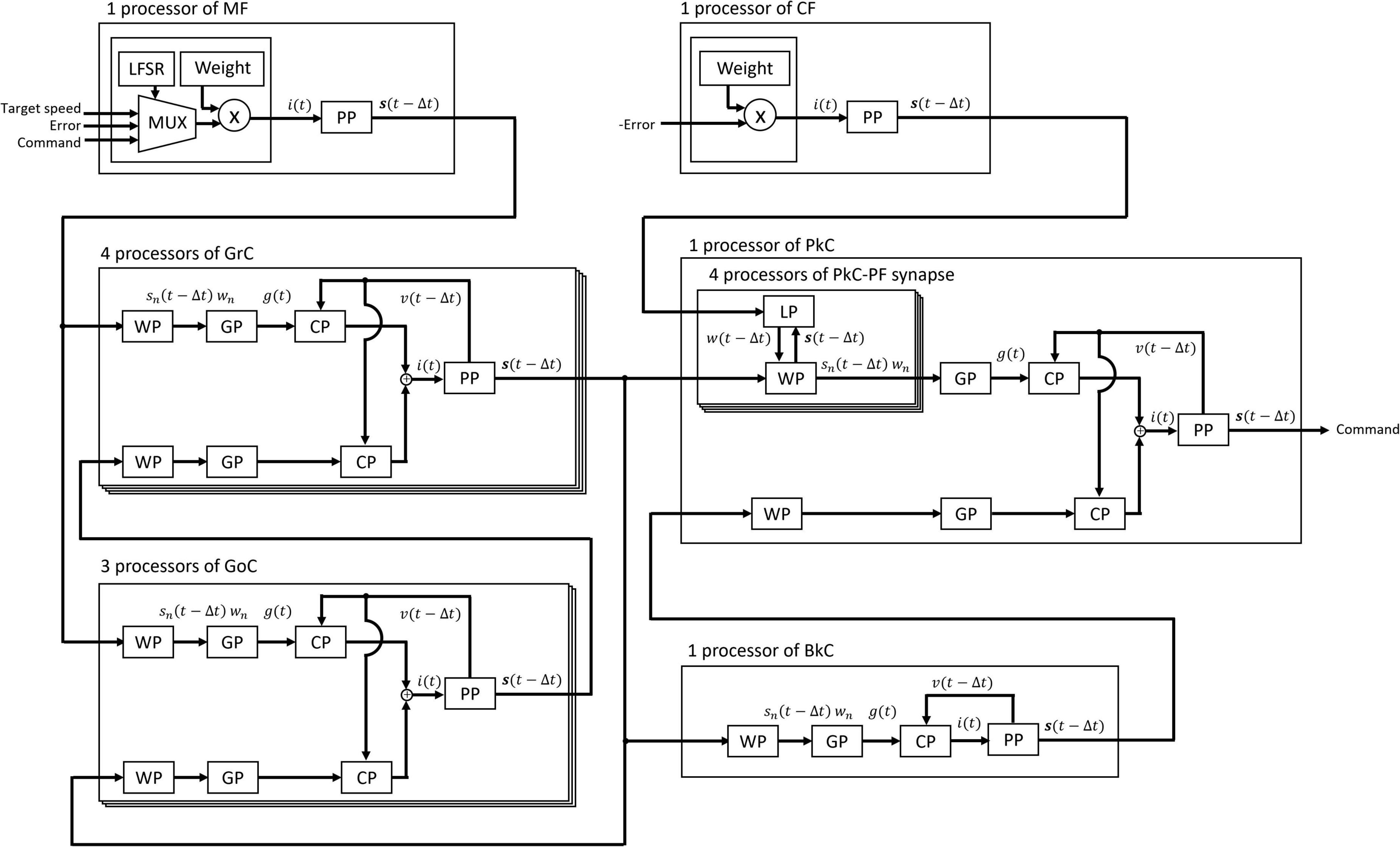

2.2 FPGA implementation

2.2.1 Design of motor control system

The artificial cerebellum was implemented on an FPGA (XC6SLX100-2FGG484C, Xilinx) attached to an evaluation board (XCM-018-LX100, Humandata). We used a hardware description language, VHDL, to describe the artificial cerebellum; the code is available at https://github.com/YusukeShinji/Shinji_Okuno_Hirata_FrontNeurosci_2024. The left and right hemisphere models were combined as shown in Figure 2 to control real-world machines. A proportional-derivative (PD) controller that simulates the pathway outside the cerebellum was implemented on the same FPGA, as in the previous models (Pinzon-Morales and Hirata, 2014, 2015). The output of the cerebellum model R(t) is described by Eq. 8:

$$T_P\frac{dR(t)}{dt} = -R(t) + g_P\sum_{m=1}^{8}\delta_m(t) \tag{8}$$

Figure 2. Structure of the control circuit used in the real-world experiment.

where δm(t) is the unit impulse representing the spiking output of the m-th PkC, gP = 0.35 is a gain coefficient, and TP = 310 ms is the time constant of a low-pass filter that describes the relationship between R(t) and δm(t). The cerebellum model outputs RL(t) and RR(t) of the left and right hemispheres are linearly summed with the output of the PD controller to obtain the command value y(t) of the DC motor as described in Eq. 9, where the proportional and derivative gains of the PD controller applied to the error signal E(t) are GP = 0.00635 and GD = 0.00001, respectively.

$$y(t) = G_P\,E(t) + G_D\,\bigl(E(t) - E(t-\Delta t)\bigr) + R_L(t) - R_R(t) \tag{9}$$

The command value y(t) is converted into a pulse-width modulated (PWM) voltage signal and then fed to the motor to be controlled. The control object currently tested is a DC motor (JGA25-370, Open Impulse). To impose a load on the control object, a DC motor of the same type was connected coaxially. The load was imposed by short-circuiting the load motor via a relay circuit controlled by the same FPGA. The motion of the control object produced in response to a given target speed was measured by an encoder and fed back through a Hall sensor to calculate the error. The error is sent to the artificial cerebellum as CF activity, which induces PF–PkC synaptic plasticity (see Section “2.1.4 Synaptic plasticity model”). Other input modalities to the artificial cerebellum via MFs are the target speed, the error, and the copy of the motor command (efference copy), as in the real oculomotor control system (Noda, 1986; Hirata and Highstein, 2001; Blazquez et al., 2003; Huang et al., 2013).
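The way the cerebellar outputs and the PD controller combine in Eqs 8 and 9 can be sketched as follows. The gains and the time constant are those given in the text; the function names, the floating-point arithmetic, and the Euler discretization with a 1 ms step are assumptions of this sketch, and the conversion of y(t) to a PWM signal is not modeled.

```python
G_CEREB = 0.35  # g_P in Eq. 8
T_P = 310.0     # ms, low-pass time constant in Eq. 8
G_P = 0.00635   # proportional gain in Eq. 9
G_D = 0.00001   # derivative gain in Eq. 9


def cerebellar_output_step(r, pkc_spikes, dt=1.0):
    """One Euler step of Eq. 8: low-pass filter the summed PkC impulses."""
    return r + (dt / T_P) * (-r + G_CEREB * sum(pkc_spikes))


def motor_command(error, prev_error, r_left, r_right):
    """Eq. 9: PD term plus the difference of the two hemisphere outputs."""
    return G_P * error + G_D * (error - prev_error) + r_left - r_right


# Usage: all 8 PkCs of one hemisphere fire in the same 1 ms step.
r_left = cerebellar_output_step(0.0, [1] * 8)
y = motor_command(error=10.0, prev_error=8.0, r_left=0.2, r_right=0.05)
```

The two hemispheres enter Eq. 9 with opposite signs, so their difference can push the command in either direction.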

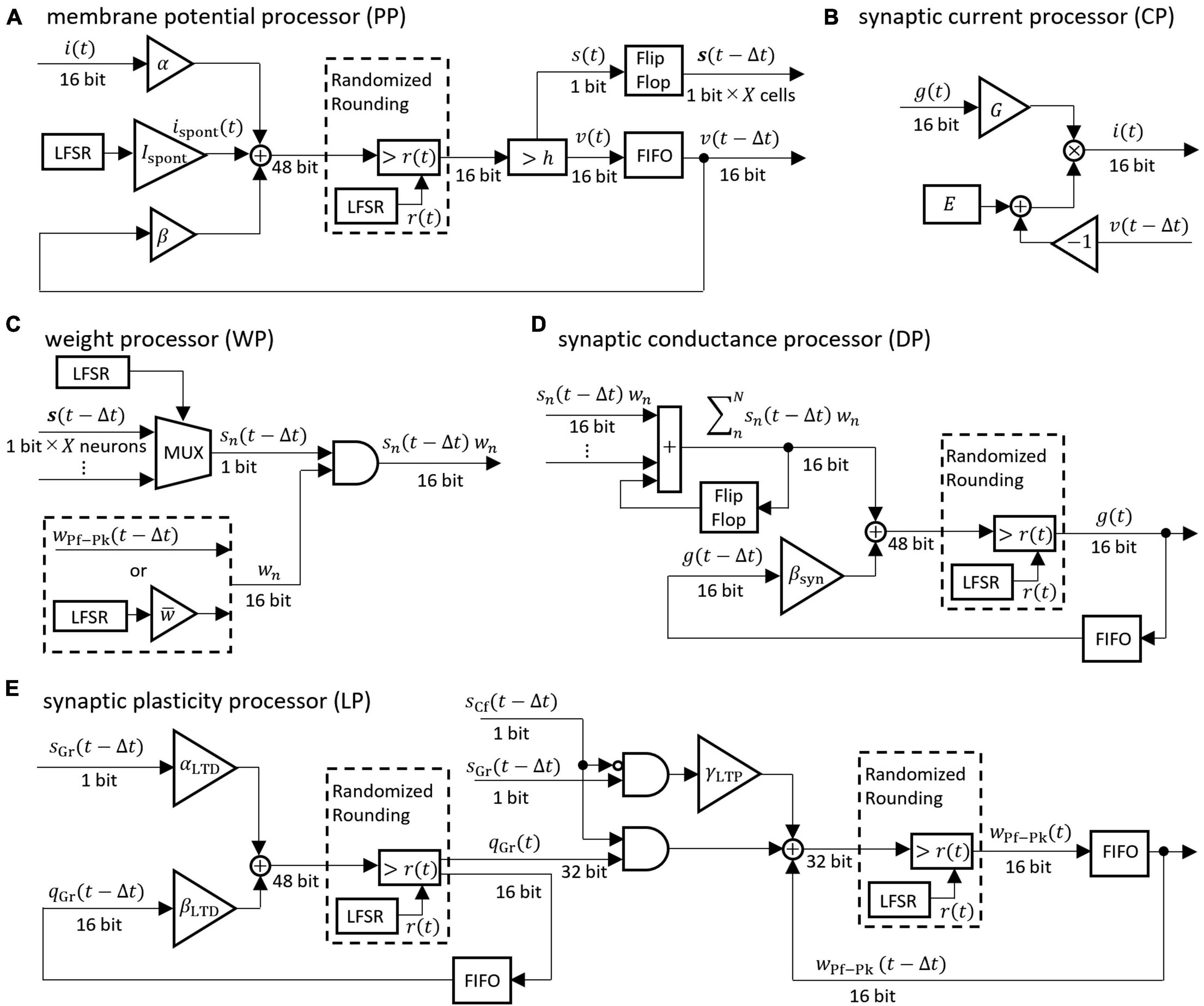

2.2.2 Design of computation, communication, and memory systems

The differential equations describing the neuron model were discretized by the Euler method and implemented as the digital circuits shown in Figure 3. Digital circuits that simulate neuron groups in the model are interconnected to form the cerebellar neural network shown in Figure 1. Figure 3A is the membrane potential processor (PP), B is the synaptic current processor (CP), C is the weight processor (WP), D is the synaptic conductance processor (DP), and E is the synaptic plasticity processor (LP).

Figure 3. Block diagrams of the neural processor. (A–E) Processing devices for membrane potential, synaptic current, synaptic weight, synaptic conductance, and synaptic plasticity, respectively. The coefficients are defined as follows: α = 1/C, β = 1 − gL/C, βsyn = 1 − 1/τsyn, αLTD = γLTD/τLTD, βLTD = 1 − 1/τLTD. For the synaptic transmission efficiency w in (C), only the PF-PkC synapse uses the numerical value obtained by calculating LTD and LTP according to (E). Adders and AND gates are composed of logic circuits in the look-up table (LUT). The DSP slice in the FPGA was used for the triangular block representing the gain and for the multiplication. Each LFSR is a linear feedback shift register that outputs a random number r(t), which differs depending on the initial seed. The flip-flop is a storage element that is constructed from registers contained in the LUT in the FPGA. The FIFO is a storage element that uses the FPGA’s block RAM in a first-in-first-out format. The multiplexer (MUX) is a data selector. In (C), the MUX selects the impulse of one neuron from the flip-flop storing impulses of X neurons. In (A, D, E), randomized rounding is a rounding element that rounds up if the random number generated by the LFSR is smaller than the fraction bits and rounds down otherwise.

These processors adopted two parallel processing methods to complete the processing within a time step (1 ms). The first parallel processing method is pipeline processing, which is used in all the processors in Figure 3. For example, pipeline processing in the WP reduces the execution time by processing the second synapse at the MUX while the first synapse is being processed at the AND stage. The second parallel processing method is the parallel operation of the dedicated processors described above. As shown in Figure 4, multiple PPs, CPs, DPs, WPs, and LPs are provided for parallel processing. Four processors are provided to process GrCs in parallel because of their large number. To process PkCs, four WPs and four LPs are provided because the PkCs have a large number of synapses despite their small number (8 neurons). Three processors are provided to complete GoC processing during the PkC processing. One processor is provided to complete MLI processing during the PkC processing.

Figure 4. Processing circuit of the artificial cerebellum. Overlapping frames indicate parallel processing.

In addition to these parallel processing methods, we adopted the following three new methods for implementing the artificial cerebellum efficiently on the FPGA within the limits of the number of logic blocks and the time step required for actual motor control.

2.2.2.1 Fixed-point arithmetic and randomized rounding

In order to reduce computational cost and memory usage, 16-bit fixed-point numbers were adopted instead of floating-point numbers in the FPGA. However, round-off errors that occur in fixed-point arithmetic can degrade the precision of computation. In order to minimize the accumulation of rounding errors, randomized rounding was adopted. This method compares the fraction that is to be rounded with a uniform random number. Namely, if the random number is less than the fraction, the value is rounded up, and otherwise it is truncated. The probability of rounding up therefore equals the fraction, so the expected value of the rounded result equals the unrounded value and the rounding is unbiased. We employed uniform random numbers generated by a linear feedback shift register (LFSR). Although an LFSR is a pseudo-random number generator with periodicity, the bit width of the LFSR used in this study (32-bit) provides a sufficiently long period and can keep the bias in randomized rounding small.
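The difference between half-up and randomized rounding can be illustrated with a decaying quantity such as a synaptic conductance, x ← βx, held as an integer number of least significant bits. The sketch below is an assumption-laden illustration, not the VHDL design: the decay factor 0.9, the seed, the Galois LFSR tap mask 0x80200003 (a commonly used maximal-length 32-bit choice), and the decision to clock the register 16 times per draw are all choices made here for the example.

```python
FRAC_BITS = 16
ONE = 1 << FRAC_BITS


def lfsr32_step(state):
    """One step of a 32-bit Galois LFSR (tap mask is an assumed choice)."""
    lsb = state & 1
    state >>= 1
    if lsb:
        state ^= 0x80200003
    return state


class Lfsr16Source:
    """Yields 16-bit pseudo-random numbers from a 32-bit LFSR."""

    def __init__(self, seed=0xACE1ACE1):
        self.state = seed  # any nonzero seed

    def next16(self):
        # Clock 16 times so that successive draws do not share bits.
        for _ in range(16):
            self.state = lfsr32_step(self.state)
        return self.state & 0xFFFF


def round_half_up(product):
    """Drop FRAC_BITS fraction bits, rounding halves upward."""
    return (product + (ONE >> 1)) >> FRAC_BITS


def round_randomized(product, rng):
    """Round up when the random number is smaller than the fraction."""
    frac = product & (ONE - 1)
    return (product >> FRAC_BITS) + (1 if rng.next16() < frac else 0)


def decay(x, beta_q, steps, rounder):
    """Apply x <- round(beta * x) repeatedly; beta_q has FRAC_BITS fraction bits."""
    for _ in range(steps):
        x = rounder(x * beta_q)
    return x


beta_q = int(0.9 * ONE)  # illustrative decay factor
rng = Lfsr16Source()
stuck = decay(1000, beta_q, 2000, round_half_up)
decayed = decay(1000, beta_q, 2000, lambda p: round_randomized(p, rng))
```

In this sketch the half-up result stalls a few least significant bits above zero, because small values are rounded back up at every step, whereas the randomized result reaches zero.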

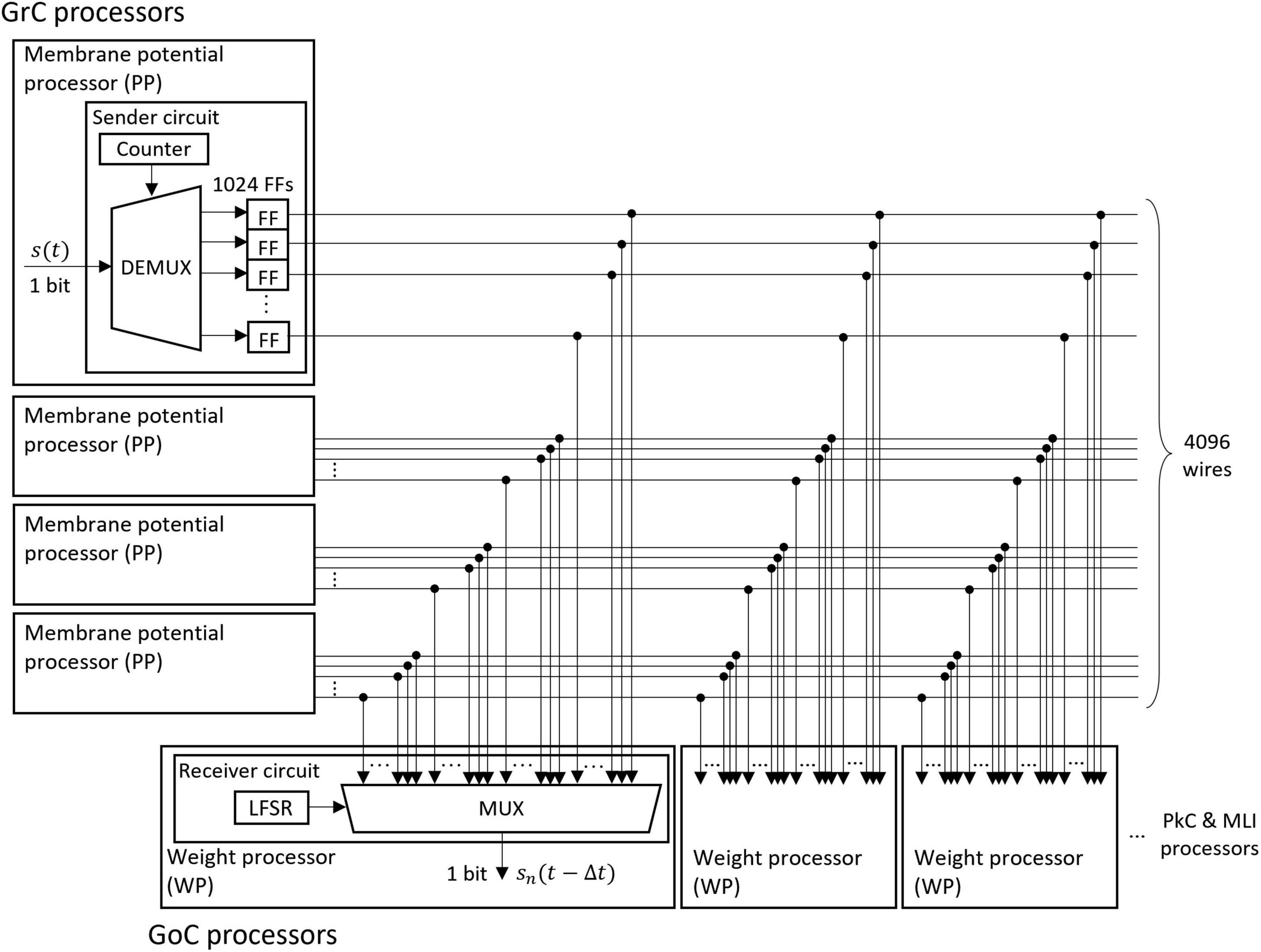

2.2.2.2 Fully coupled spike transfer circuit

The memory of the unit impulse δ(t) is frequently referenced by many processors. Short memory latency is required to take advantage of parallel processing. Therefore, we designed a parallel I/O interface composed of sender circuits and receiver circuits. We used this interface for the transmission of impulses between all neurons. An example of the interface is the transmission circuit from GrC to GoC shown in Figure 5. The interface consists of sender circuits in the processor of GrC, which is the presynaptic neuron, and receiver circuits in the processor of GoC, which is the postsynaptic neuron.

Figure 5. Structure of the fully coupled spike transfer circuit. The unit impulse δ(t) calculated by the membrane potential processor (PP) is stored in the flip-flop (FF). The outputs of all FFs of the presynaptic neurons are connected to the multiplexer (MUX) of all weight processors (WP) of the postsynaptic neuron. By receiving the output of the linear feedback shift register (LFSR), the MUX randomly selects a presynaptic neuron and outputs the impulse.

A sender circuit is composed of a counter, a demultiplexer (DEMUX), and flip-flops (FFs). The number of FFs corresponds to the number of model neurons simulated in the PP of GrC; the number is 1024 in this case. When the membrane potential processing ends and the impulse is generated in the PP, the DEMUX receives the impulse and stores the event in one of the FFs depending on the counter output, which represents the neuron identifier (ID) of the impulse. The total number of impulses handled by this interface is 4096 because four sender circuits work in parallel. Here, the ON/OFF state of one wire of the FF output represents an impulse (whether spiked or not) of one neuron in a certain 1 ms.

A receiver circuit is composed of an LFSR and a multiplexer (MUX), which selects one signal from its 4096 input signals depending on the LFSR output. The LFSR outputs pseudo-random numbers, each of which represents the ID of the presynaptic neuron connected to the postsynaptic neuron being processed at that moment. The output of the LFSR is updated every clock cycle. Each GoC (postsynaptic neuron) processor simulates 123 GoCs, and the three GoC processors simulate 369 GoCs in total. Each GoC is connected to 100 presynaptic GrC neurons, and the IDs of the connected GrC neurons are determined by the LFSR. Each receiver circuit repeats the spike read procedure 100 × 123 times. When the circuit completes one cycle of the procedure, the LFSR is reset to its initial value, which differs for each receiver circuit, so that impulses are read from the same presynaptic neuron IDs in the next time step. By branching receiver circuits, the reading procedure of impulses is processed in parallel.

2.2.2.3 Pseudo-random number generator to represent neural connections

In order to reduce memory usage, we adopted a pseudo-random number generator in place of a memory that stores information on neural connections. When implementing the artificial cerebellum on an FPGA, it is necessary to store the neuron IDs of presynaptic and postsynaptic neurons. As shown in Figure 3C, the ID is used by the MUX to output impulses of a desired neuron group. The memory capacity required for storing the IDs is the product of the number of presynaptic neurons, the number of postsynaptic neurons, and the convergence rate, which is huge (approx. 40 million bytes). However, the capacity of the internal RAM, which is the RAM embedded in an FPGA and provides a much wider bandwidth than the external RAM, is very limited. The effective use of the internal RAM is the key factor for implementing many neurons in an FPGA. A large amount of internal RAM should be used for storing differential equation variables, not for the IDs of neural connections. Because the neural connections in our model are defined by random numbers that remain unchanged throughout an operation, we used an LFSR to generate the uniform random numbers that define neural connections. Since an LFSR is composed only of XORs and FFs, internal RAMs are unnecessary. Similarly, synaptic weights that do not undergo synaptic plasticity were generated by the LFSR.
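The idea of regenerating connectivity instead of storing it can be sketched as below for the GrC-to-GoC example (4096 presynaptic GrCs, 123 GoCs per processor, 100 inputs per GoC). The function names, the seeds, the tap mask 0x80200003, and the use of the low 12 bits of the register as the neuron ID are assumptions of this sketch; the source does not specify them.

```python
def lfsr32_step(state):
    """One step of a 32-bit Galois LFSR (tap mask is an assumed choice)."""
    lsb = state & 1
    state >>= 1
    if lsb:
        state ^= 0x80200003
    return state


def presynaptic_ids(seed, n_post=123, fan_in=100, id_bits=12):
    """Regenerate the presynaptic IDs read by one receiver circuit.

    The register is advanced once per read, and the low id_bits bits
    select one of 2**id_bits presynaptic neurons (4096 for id_bits=12).
    Restarting from the same seed reproduces the same wiring, so no
    connection table has to be stored.
    """
    mask = (1 << id_bits) - 1
    state = seed
    table = []
    for _ in range(n_post):
        row = []
        for _ in range(fan_in):
            state = lfsr32_step(state)
            row.append(state & mask)
        table.append(row)
    return table


# Usage: the wiring is identical in every time step for a given seed,
# and a different seed gives a different receiver its own wiring.
step_t = presynaptic_ids(0x12345678)
step_t_plus_1 = presynaptic_ids(0x12345678)
other_receiver = presynaptic_ids(0x9ABCDEF0)
```

In this sketch the whole 123 × 100 table is replaced by a 32-bit register and its seed. One property of this approach, at least as sketched here, is that the same presynaptic ID may be drawn more than once for a given postsynaptic neuron.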

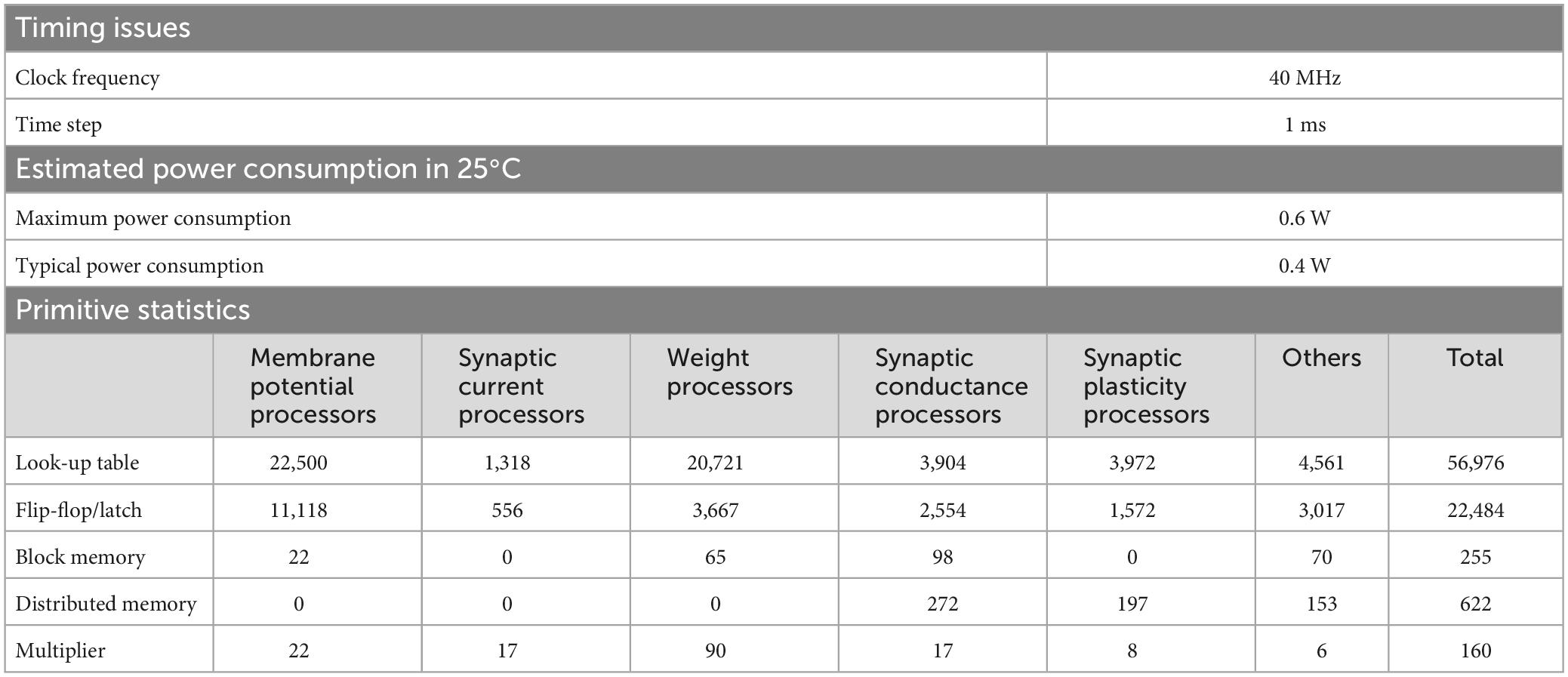

3 Results

3.1 Specifications

The neural network constituting the artificial cerebellum contains 9,504 neurons (including MFs and CFs) with 240,484 synapses. The hardware resources used for the artificial cerebellum construction are shown in Table 3. Block memory and Multiplier refer to the internal RAM and the DSP slices in the Spartan-6 FPGA chip, respectively. Distributed memory is RAM that uses look-up tables. At the FPGA clock frequency of 40 MHz, the calculation time for each hemisphere of the artificial cerebellum is 0.40 ms. The entire control circuit, including both hemispheres, completes all the computation within a 1 ms time interval. Considering that the maximum firing rate of neurons in the cerebellum is about 500 spikes/s (Ito, 2012), a 1 ms time step is short enough for simulating the cerebellum. Therefore, real-time operation of the artificial cerebellum with this configuration is possible. The maximum power consumption at a clock frequency of 40 MHz and a device temperature of 25°C was estimated to be 0.6 W by the Xilinx Power Estimator.
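The timing figures above can be related to clock cycles with a few lines of arithmetic. The sketch below only restates numbers given in the text; the comparison with the 100 × 123 spike reads of one GoC receiver assumes one read per clock cycle, which is an assumption of this sketch suggested by the LFSR being updated every clock.

```python
CLOCK_HZ = 40_000_000        # FPGA clock frequency
TIME_STEP_S = 1e-3           # simulation time step
HEMISPHERE_TIME_S = 0.40e-3  # reported calculation time per hemisphere
MAX_RATE_HZ = 500            # approximate maximum cerebellar firing rate

cycles_per_step = round(CLOCK_HZ * TIME_STEP_S)
cycles_per_hemisphere = round(CLOCK_HZ * HEMISPHERE_TIME_S)

# Spike reads of one GoC receiver per time step (100 inputs x 123 GoCs),
# assuming one read per clock cycle.
goc_reads = 100 * 123

# Number of 1 ms steps within the shortest inter-spike interval at 500 spikes/s.
steps_per_min_isi = round((1.0 / MAX_RATE_HZ) / TIME_STEP_S)
```

Under these assumptions, the 12,300 reads of a GoC receiver fit within the 16,000 cycles available per hemisphere, and the shortest inter-spike interval spans two time steps.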

Table 3. Specifications of the artificial cerebellum.

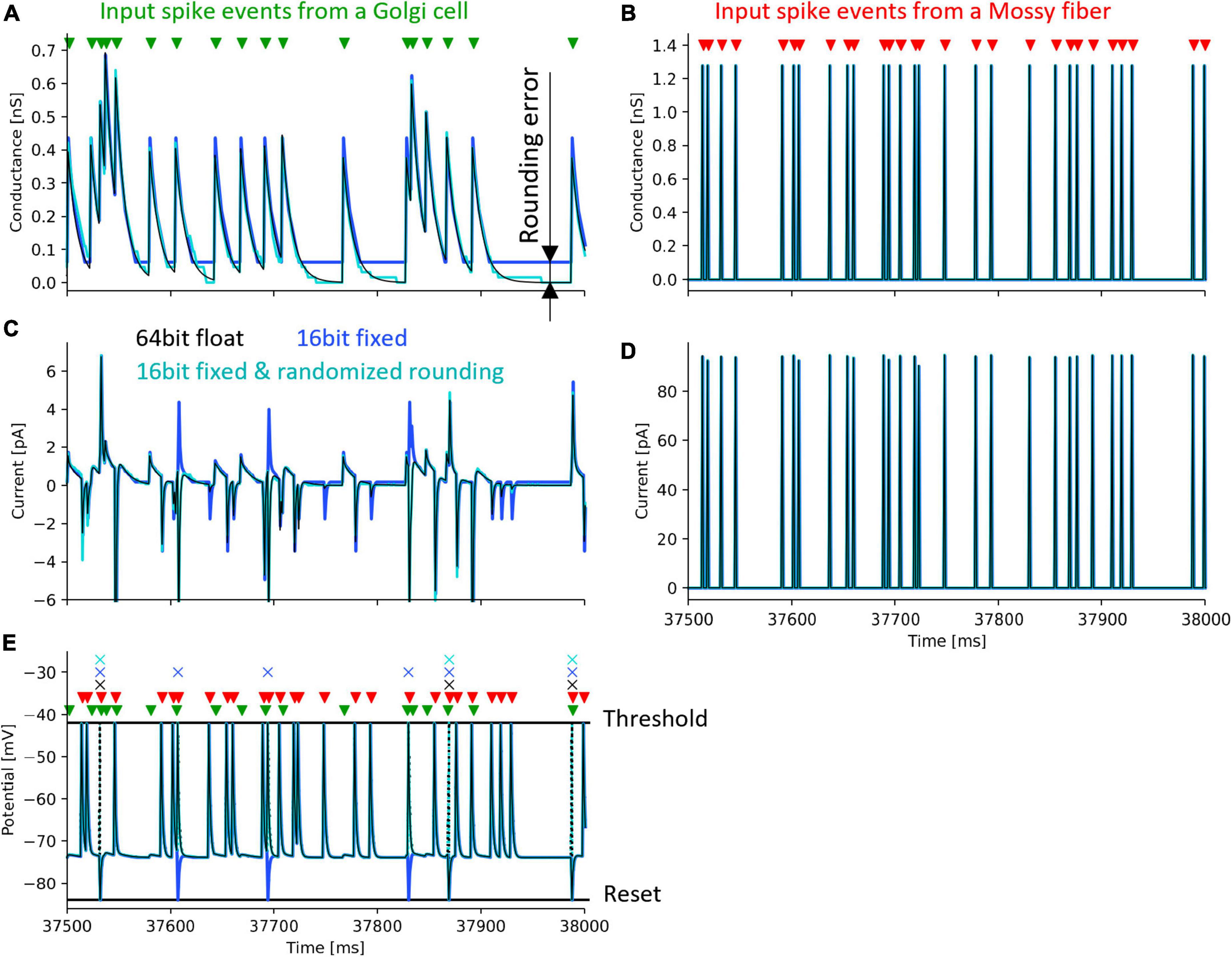

3.2 Simulation to evaluate the randomized rounding

To evaluate the effect of rounding error, we calculated one neuron model with the following three methods: (1) Python with 64-bit floating-point numbers and half-up rounding, (2) Xilinx ISE Simulator with 16-bit fixed-point numbers and half-up rounding, and (3) Xilinx ISE Simulator with 16-bit fixed-point numbers and randomized rounding. For the high-accuracy reference simulation, we used Python’s float type, which is a 64-bit floating-point number, and performed the simulation on a personal computer.

We simulated one GrC receiving inputs from one GoC and one MF. The input signals sent from GoC and the MF are impulses whose firing time is determined by uniform random numbers generated by an LFSR. The simulation was performed under the condition that the GoC fires at an average of 31 spikes/s and the MF fires at an average of 62 spikes/s so that the effects of rounding error can be easily evaluated. In this simulation, the weight between GrC and GoC was set to 93.8 pS, and that between GrC and MF was set to 320.0 pS.

Figure 6 shows the simulation results. The black lines in the figure plot the results simulated with a 64-bit floating-point number. Simulations with 16-bit fixed-point numbers cause rounding errors when computing the differential equations of synaptic conductance and membrane potential due to the limited number of digits. As a result, the synaptic conductance computed with 16-bit fixed-point numbers and half-up rounding does not converge to 0 nS (Figure 6A, blue line), resulting in the positively biased membrane potential, and the increased spike frequency (Figures 6C, E, blue line and cross mark, respectively). In contrast, using randomized rounding, the synaptic conductance converges to 0 nS (Figure 6A, light cyan line), the membrane potential is not biased, and the frequency of spike occurrence is almost the same as that computed with 64-bit floating-point numbers (Figures 6C, E, light cyan line and cross mark). The synaptic conductance computed with a 64-bit floating-point and that computed with a 16-bit fixed-point number and randomized rounding are approximately equal. Even if an error occurs in the computation of synaptic conductance, the conductance computed with a 16-bit fixed-point number and randomized rounding converges to 0 nS while no spike comes to the neuron, so the accumulated error can be canceled. In the simulation computed with 64-bit floating-point numbers, the mean firing rate of GrCs during 50 s was 6.58 spikes/s. The mean firing rate difference between the simulation with 64-bit floating-point numbers and that with 16-bit fixed-point numbers was 1.64 spikes/s. The mean firing rate difference between the simulation with 64-bit floating-point numbers and that with 16-bit fixed-point numbers and randomized rounding was 0.030 spikes/s. These results assure that the calculation accuracy can be maintained by using randomized rounding when computing differential equations that describe a neuron model with fixed-point numbers.

Figure 6. Simulation of a granule cell to verify the accuracy of randomized rounding. Black lines and cross marks depict a simulation result using 64-bit floating-point numbers and half-up rounding, calculated in Python. Blue lines and cross marks represent a simulation result using 16-bit fixed-point numbers and half-up rounding, calculated in the Xilinx ISE Simulator. Cyan lines and cross marks show a simulation result using 16-bit fixed-point numbers and randomized rounding, calculated in the Xilinx ISE Simulator. Green and red triangles denote the spike timing of input to the GrC from the GoC and the MF. Cross marks illustrate the spike timing of the output of the GrC. (A) Postsynaptic conductance between the GrC and the GoC. (B) Postsynaptic conductance between the GrC and the MF. (C) Postsynaptic current between the GrC and the GoC. (D) Postsynaptic current between the GrC and the MF. (E) Membrane potential of the GrC. Dotted lines represent changes in membrane potential during spikes, which were not stored in the FPGA.

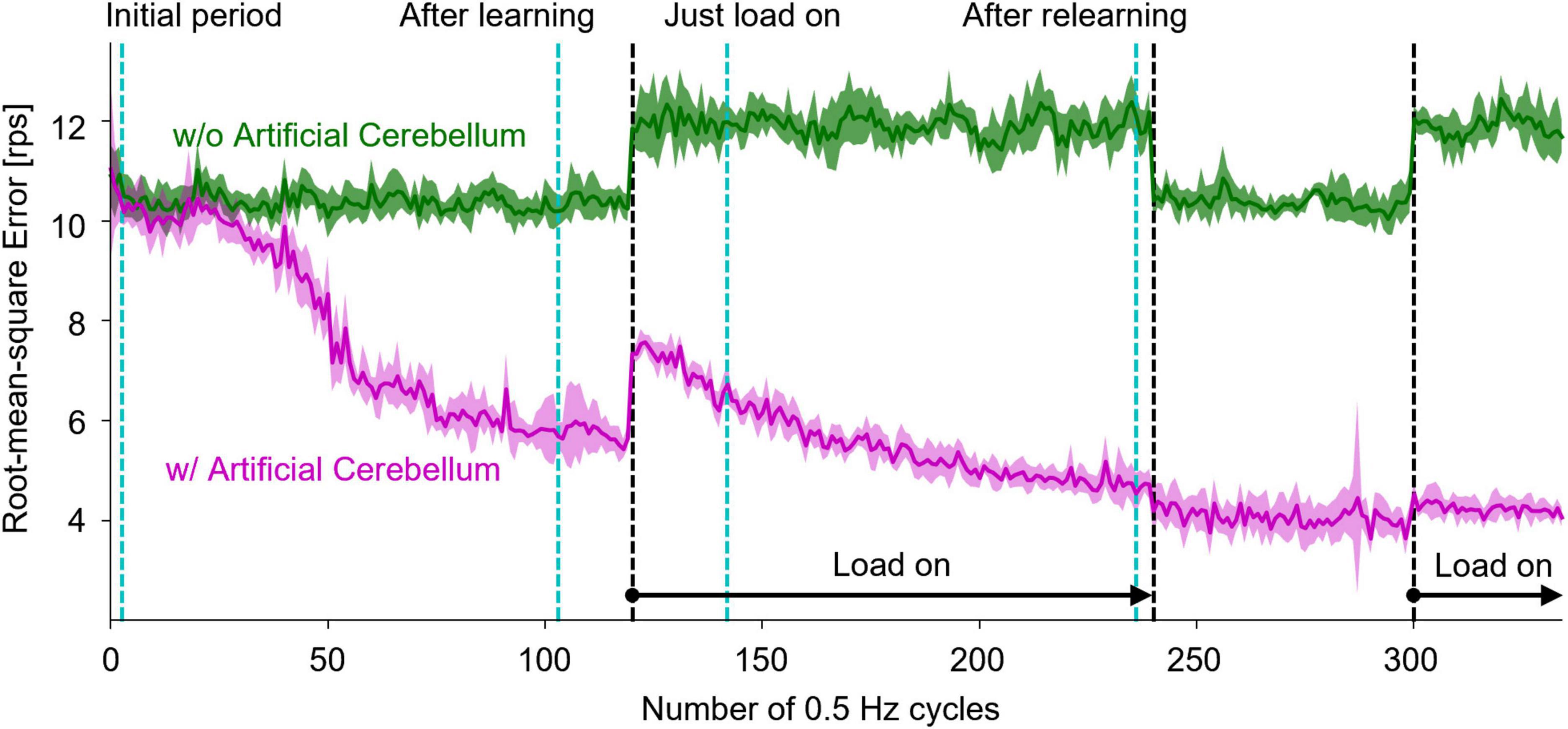

3.3 Real-world adaptive machine control

To evaluate the capability of the artificial cerebellum for adaptive actuator control in a real-world environment, we used a DC motor and imposed a load that varies in intensity over time. As shown in Figure 2, the FPGA controls the DC motor via an inverter in the control circuit. The rotation speed of the controlled object was fed back to the FPGA by a Hall effect sensor. The rotation speed error, calculated by subtracting the measured speed from the target speed, was input to the MFs and CFs of the artificial cerebellum.

Another DC motor was connected to the shaft of the controlled motor to impose a load. During the experiment, the switch in the circuit was opened to produce the Load-Off state and closed to produce the Load-On state. The resistance of the switch was 13.5 Ω. The switch was opened and closed by a signal sent from the FPGA. The target time course of the rotation speed was a sine wave with an amplitude of 32 rotations per second (rps) and a period of 2.048 s. The load was turned on in the 120th cycle, turned off in the 240th cycle, and turned on again in the 300th cycle, by which time the control by the artificial cerebellum had become stable.
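The experimental protocol can be summarized in a short sketch that computes the target speed, the load state for a given cycle, and the speed error fed to the artificial cerebellum. The function names are hypothetical, and the zero phase, zero offset, 1-based cycle numbering, and load switching at cycle boundaries are assumptions made for the example.

```python
import math

# Sketch only: names, zero phase/offset, and 1-based cycle numbering
# are assumed for illustration.
PERIOD_S = 2.048        # period of the target sine wave (s)
AMPLITUDE_RPS = 32.0    # amplitude of the target sine wave (rps)

def target_speed(t):
    """Target rotation speed (rps) at time t (s)."""
    return AMPLITUDE_RPS * math.sin(2.0 * math.pi * t / PERIOD_S)

def cycle_number(t):
    """1-based index of the sine-wave cycle that contains time t."""
    return int(t // PERIOD_S) + 1

def load_is_on(cycle):
    """Load schedule: on from cycle 120, off from cycle 240, on from cycle 300."""
    return 120 <= cycle < 240 or cycle >= 300

def speed_error(target, measured):
    """Rotation speed error: measured speed subtracted from target speed."""
    return target - measured

t = 119 * PERIOD_S + 0.512  # a quarter period into the 120th cycle
print(cycle_number(t), load_is_on(cycle_number(t)), target_speed(0.512))
```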

Figure 7 shows the results of motor control experiments repeated 10 times each with and without the artificial cerebellum. Because the initial weights of the synapses between PFs and PkCs were set to 0, the error at the beginning of the experiment was the same with the artificial cerebellum as with the PD controller alone. Adaptation did not start immediately because of noise in the real-world environment. The error started to decrease from around the 50th cycle as a result of motor learning in the artificial cerebellum. When the load was imposed in the 120th cycle, the error rose sharply but then gradually decreased as the artificial cerebellum adapted to the load. By contrast, the error increased by a much smaller amount at Load-Off in the 240th cycle and at Load-On in the 300th cycle, demonstrating generalization in adaptation to both the Load-Off and Load-On states.

Figure 7. Results of real-world motor control with and without the artificial cerebellum. The magenta and green lines represent the results with and without the artificial cerebellum, respectively. The solid line and the shaded area indicate the mean and plus-minus 1 standard deviation, respectively, over 10 experiments. The black dashed lines indicate the periods where the load was imposed. The cyan dashed lines denote the periods evaluated in Figure 8.

Figure 8 shows the rotation speed of the controlled DC motor and the activities of representative neuron models in the following four periods: initial period, after learning, immediately after load-on, and after relearning, each corresponding to a triangle marked on the horizontal axis in Figure 7. In the top panels of Figure 8, the black, green, and magenta lines plot the target speed, the measured speed controlled without the artificial cerebellum, and the measured speed controlled with the artificial cerebellum, respectively. In the initial period (top left panel), the measured speed controlled with the artificial cerebellum (magenta) was smaller than and delayed relative to the target speed (black). This situation was similar to the
