The brain mechanisms involved in decision-making have been extensively studied in the last decades [reviewed in (Gold and Shadlen, 2007; Wang, 2008)]. Many studies focused on characterizing the neural dynamics of reward processing (Padoa-Schioppa, 2011; Wallis and Kennerley, 2011; Gluth et al., 2014), visual discrimination (Shadlen and Newsome, 1996; Shadlen and Newsome, 2001; Roitman and Shadlen, 2002), and other aspects of option assessment during value-based decision-making (Pastor-Bernier and Cisek, 2011; Wallis, 2011; Cai and Padoa-Schioppa, 2019; Carroll et al., 2019). Other tasks were developed to study decisions in the context of short-term memory (Siegel et al., 2009), and cost-risk trade-off (Kahneman and Tversky, 1979; Birnbaum, 2008; Eichberger and Pasichnichenko, 2021). In most of these contexts, choice outcomes are immediately experienced. This feature makes calculating costs and benefits straightforward, as all the necessary information is directly and immediately available to the decision maker for calculation (Kurniawan et al., 2013; Skvortsova et al., 2014; Apps et al., 2015; Thura and Cisek, 2016). However, a complete account of value-based choice behavior requires understanding the brain mechanisms underlying the detection and computation of non-immediate consequences of choices, and of the use of this information to guide subsequent decision strategies. Despite the rich literature in cognitive decision-making and the fact that long-term consequence is a critical concern in our daily decision-making processes, the dynamics of its operation are not fully understood, and have not been incorporated into state-of-the-art models of decision-making (Brunel and Wang, 2001; Wong and Wang, 2006; Wong et al., 2007). Most previous models work only for independent trials by considering value and/or accumulation of evidence about choice alternatives (Drugowitsch et al., 2012). They often do not, however, take into consideration the memory of recent past or the long-term effects of decisions in the context of brain dynamics. By contrast, studies on hierarchical decision-making show that when choices are repeatedly made along nodes of the same decision-tree, they tend to integrate elements of subsequent nodes (Hyafil and Moreno-Bote, 2017). In other words, the assessment of options during decisions incorporates elements of subsequent branching points. However, for these decisions to be informed, exploration and ultimately knowledge about future nodes is required.

Here we are interested in formalizing the brain mechanisms underlying how this exploration leads to information gain when the strategy is non-obvious. In other words, which are the brain operations involved in considering the consequence of choices during sequences of decisions. In this scenario, the case when the immediate most rewarding choice leads to lower long-term reward is of particular interest, as participants must anticipate that the cost of choosing lower value options results in increased delayed reward and higher cumulative reward overall. Moreover, if this relationship is covert, what are the cognitive mechanisms that enable us to learn the optimal strategy? Furthermore, how does the learning occur in the absence of explicit performance feedback?

To answer these questions, we developed the consequential task. Consecutive perceptual decision-making trials were organized into groups of dependent trials, where the choice made in one trial had a consequence on the next by determining the available choice options. How does the complexity of a perceptual decision-making task augment when combined with consequence assessment? First, consequence-based decisions (i.e., decisions in which optimal performance can only be achieved after acquiring knowledge of future nodes) require an increased temporal span of consideration, and, consequently, involve a more uncertain and broader set of factors to examine. This typically results in more computationally demanding option evaluation (Trommershäuser et al., 2008; Nagengast et al., 2011; O’Brien and Ahmed, 2015; Kirchler et al., 2017), longer deliberation, and often poorer decision accuracy (Schuck-Paim and Kacelnik, 2007; Drugowitsch et al., 2016). Second, making decisions based on gauging choice consequence involves a range of cognitive processes broader than those involved in immediate sensory-motor decisions (Cisek et al., 2009; Donner et al., 2009), with particular emphasis on value integration (Cisek and Kalaska, 2005; Park et al., 2011), metacognitive processing (Klaes et al., 2011; Goodwin et al., 2012) and long-term working memory (Cavanagh et al., 2018; Barbosa et al., 2020). Though long-term consequence assessment may be viewed as a time extended version of immediate action outcome evaluation, significant doubts remain regarding the core cognitive and neural processes underlying this ability (Balasubramani and Hayden, 2018).

To investigate the cognitive processes underlying consequence-based decision-making, we carried out a combined experimental and theoretical study. In the first part of this work, we designed a decision-making task, the consequential task, to characterize consequence-based option assessment. In brief, in the consequential task, consequence takes the form of increases/decreases in future reward value options as a function of participants’ choices. The nature of this inter-trial dependence was not disclosed in the instructions given to the participants, and no explicit performance feedback was provided. The absence of explicit learning cues was intended to force the participants to rely on their own subjective performance feedback to infer the delayed consequence of their decisions.

In the second part of our study, we provided a theoretical framework of the cognitive and neural processes required for consequence-based decision-making, including the patterns of inhibition and of far-sighted consequence assessment required to acquire the most reward across trials. The model was organized in three layers. The bottom layer, in line with the Amari, Wilson-Cowan and Wong-Wang models (Wilson and Cowan, 1972; Soltani et al., 2006; Wong and Wang, 2006; Webb et al., 2011; Marcos et al., 2013; Hertäg et al., 2014), described the neural dynamics of binary decision-making by means of two populations of neurons. The middle and top layers modeled an oversight mechanism for the assessment of consequence across groups of trials and the learning mechanism as a function of reward value across trials. This model reproduced the full range of behavioral observations across the different participants accurately while predicting a plausible neural implementation of the processes underlying the learning of consequence-based decision-making. In particular, our model described how the metacognitive assessment of consequence extends from short to long-term value prediction through an oversight mechanism that monitors predicted performance.

In this section we describe the consequential task and, more specifically, how it is designed to tap into the cognitive mechanisms involved in learning delayed consequences in the absence of explicit performance feedback. In this task, 28 healthy participants were instructed to choose one of two stimuli presented left and right on a screen. The stimuli represented partially filled containers of water and reward value was directly proportional to the amount of water in each container. The participants reported their choices by moving the computer mouse’s cursor from the central cue to the chosen stimulus (see Figure 1 and Materials and Methods for a thorough description). Participants were only paid a show-up fee and were, thus, not monetarily incentivized to perform well.

Since consequence depends on a predictive assessment of future contexts, the task was organized into two block types. In the first, trials required one-shot decisions, purely independent of one another. Similar to most decision-making tasks, the reward value in this block type could be maximized by picking the stimulus associated with the most reward value in each trial, i.e., choosing the larger of the two blue bars. However, in the second block type, trials were grouped into pairs or triads of dependent trials. We called each group of consecutive trials an episode to signify the boundary of dependence between them, and defined the notion of horizon (nH) as a metric for the depth of consequence to be expected for that episode. In other words, nH equaled the number of dependent trials following the first trial of the episode. For example, for nH = 1 an episode consisted of 2 trials, with the second depending on the first. The nature of the dependence between trials of an episode was such that the mean reward values of the stimuli in the second/third trial were systematically increased or decreased based on the participant’s choice in the preceding trial. Choosing the larger stimulus value led to a reduction of stimuli values in the subsequent trial whereas choosing the smaller stimulus in the first trial led to an increase (Figure 1B). The increment/reduction amount (G) was a constant and chosen such that selecting the larger stimulus in the first trial could never compensate for the loss in future reward value. In other words, acquiring the maximum cumulative reward value in each episode required choosing “big” in single trial episodes (horizon nH = 0), and choosing “small” in all trials of nH = 1 and nH = 2 episodes except the last, in which “big” should be chosen.

The consequential task design enables investigation into the role of perceived consequence during sequential decision-making. Consequence, in this context, refers to the influence of a choice on the stimuli values in the trial next. The post-decision stimuli heights function as a form of feedback which participants must learn to interpret in order to become aware of and evaluate the consequences associated with particular choices. Performance feedback, however, is absent from the task in that participants are never presented with cues indicating whether they are behaving optimally. This absence required participants to evaluate their own performance based on their experience during task execution. Importantly, participants were not informed of the nature of the inter-trial dependence and had to discover it on their own via exploration. Explicit performance feedback might have had the undesirable effect of participants focusing on finding the specific sequence of choices yielding optimal performance feedback, without having to learn the dependence between their decisions and the subsequent trials. In other words, an explicit measure of performance might have reduced the task to an explicit trial-and-error test in which participants would experiment with different sequences of choices (“big-small,” “small-big,” etc.) until finding the sequence leading to maximum performance, rather than learning to evaluate each option’s consequence in terms of their prediction of future reward. In contrast, the absence of performance feedback obligated participants to create an internal sense of assessment, which could only rely on two mechanisms: the sensory perception of the systematic stimuli changes in the subsequent trial after each choice, and the exploration of option choices at each trial during the earlier part of each block. The resulting task essentially becomes a measure of learning about delayed consequences associated with each option in the absence of explicit performance feedback.

In summary, for the participants to be able to perform the task, they were informed of the episode-based organization of trials at each block, i.e., the horizon. The instruction to the participant was to find the strategy leading to the most cumulative reward value for each episode and to actively explore their choices. Learning the optimal policy was challenging due to several factors. First, perceptual discrimination was difficult in some trials since the height difference between stimuli could be as low as 1% the height of the container. Second, although participants were informed that their choices may affect future trials within the episode, the nature of this dependency was not specified. This means that from the perspective of the participants, the value of the stimuli offers might at first appear random. Third, explicit performance feedback was omitted from the task after each episode, requiring participants to discover the nature of the inter-trial dependencies via exploration. Further details are shown in the Methods section, and in Figure 1.

Several metrics were extracted from the participants’ behavioral data: performance (PF), reported choices (CH), reaction time (RT), and visual discrimination (VD) sensitivity. PF was extracted from each episode and assumed values between 0 (worst) and 1 (best). PF was calculated as the percentage of the maximum possible reward value acquired in each episode and is normalized such that PF = 0 in episodes wherein the participant acquired the minimum possible reward value. CH was the choice made by the participant in each trial and could take one of two values: small (i.e., smaller stimulus), or large (i.e., larger stimulus). RT was calculated as the time difference between the simultaneous presentation of both stimuli (the GO signal), and the onset of the movement. VD is a measure of each participants’ ability to visually discriminate between stimuli, i.e., identifying which stimulus is bigger/smaller (see Methods for further details). As shown below, when the difference between stimuli (ΔS) is the smallest, participants were not able to accurately distinguish between stimuli. The ΔS varies between 1 and 20% of the size of the container. Note that for horizon nH = 0, a trial with ΔS = 0.01 is perceptually difficult, but if chosen wrong, the difference in the final reward would be small (1%). However, for horizon nH = 1 or 2, choosing the wrong stimulus due to perceptual discrimination has a large impact on the final performance, since it leads to a decrease of the available stimuli in the next trials.

The absence of explicit performance-related feedback at the end of each episode made the task more difficult, and, consequently, not all participants were able to find the optimal strategy. For horizon nH = 0, 26 of the 28 participants learned and applied the optimal strategy, i.e., repeatedly selecting the larger stimulus. In contrast, only 22 participants learned the optimal strategy during horizon nH = 1, 2 blocks, i.e., selecting the larger stimulus in the last trial only.

We analyzed the exploratory strategies the participants employed. In particular, we tested whether participants only considered the size of the stimuli (small/big), or if they also tested other hypotheses involving the order of presentation of the stimuli (first/s) or the location (left/right) of the stimuli. The result of this analysis can be found in the Supplementary Figure S1. In brief, participants’ choices overwhelmingly depended on stimuli size and there was little evidence other factors such as order of presentation or location were seriously considered in the decision-making process. Most participants who did not learn the optimal strategy for nH = 1,2 repeatedly chose the larger stimulus for all trials.

In Materials and Methods (subsection Consequential Decision-Making task), we described how the task was structured, and we mentioned that we randomized the order in which participants performed the horizons. This means that, for example, some participants performed nH = 2 before nH = 0. We wondered if the order of execution of the horizons had an influence on learning. To address this, we performed an analysis comparing learning times for different orders of horizon presentation. The results of this investigation can be found in the Supplementary Figures S2, S3. In brief, we discovered that once the optimal strategy was understood in nH = 1 or 2, participants generalized the rule and, by abstraction, applied it to the horizon performed afterwards. For this reason, we defined a single learning time per session. We defined learning time (tL) as the number of episodes that occurred before the optimal strategy was assimilated. We considered the optimal strategy to be assimilated if the participant employed it in at least 9 out of the following 10 episodes, and 75% of the remaining episodes until the end of the block. To account for perceptual discrimination errors (during low VD), we excluded the most difficult episodes in terms of ΔS to calculate the learning time.

Figure 2 shows the summary results for all 28 participants. In Panel (a), we show the histogram of their learning times in terms of episodes (E). The last histogram bar in Figure 2A (shown as NL – No Learning) represents the 6 participants who never learned the optimal strategy. We divided participants into 4 groups as a function of their learning speed: slow, medium, fast, and those who never learned the optimal strategy.

Figure 2B shows the VD, for all difficult trials (smallest ΔS) and participants, where VD was calculated as the percentage of correct choices over the last 80 episodes in the horizon nH = 0 block. On average, stimuli were discriminated correctly in 71% of the most difficult trials. This indicates that most participants continued making errors after learning the optimal strategy due to low VD. This is reported in Figure 2C which shows the grand average and standard error of the PF across subjects as a function of the difficulty level for all episodes following each participant’s learning time (p = 10−12, F-stat = 59). Note that, in Figure 2D, RT increased as a function of VD (p = 10−25, F-stat = 160).

The dependence of PF and RT on VD together with the other variables had to be established statistically. To assess the learning process, we quantified the relationship of PF and RT with horizon nH, trial within episode TE, and episode E. To obtain consistent results, we adjusted these variables as follows. The trial within episode was reversed chronologically, because the optimal choice for the last TE (large) is the same regardless of the horizon number. Furthermore, regarding the model of PF, we made a per trial adaptation of PF (PF was originally calculated per episode), i.e., the probability of choosing the optimal choice Poc . Finally, to assess differences between learning groups, we introduced the categorical variable L that identified the group of participants that learned the optimal strategy and the ones who did not (as seen in Figure 2A). We then used a generalized linear mixed effects model (Verbeke and Molenberghs, 2009; Gałecki and Burzykowski, 2013) to predict PF and RT. The independent variables for the fixed effects are horizon nH, trial within episode TE , the passage of time expressed in terms of episodes E, and ΔS. We set the random effects for the intercept and the episodes grouped by participant p; we write the random effects as (E|p) . The resulting models are: Poc~L·E+L·ΔS+L·nH·TE+(E|p) and RT~L·E+L·ΔS+L·nH·TE+(E|p). The regression coefficients, along with their respective group significance, are shown in Figures 2E,F. The detailed results of the statistical analysis are reported in Section 5.5. In panel (e), Poc increases with TE , suggesting that the first trial(s) within the episode are less likely to be guessed right, i.e., choosing the smaller stimuli. This makes sense, since only the early trials within episode required inhibition. Moreover, looking at the amplitude of the regression coefficients, we can see that this effect is even stronger in the no-learning case. The same argument can be made for the dependence with nH. A strong difference between learning and no-learning can be appreciated when considering the time dependence: for the learners group Poc increases as time goes by, i.e., E increases, while it is not significant for the group that did not learn the optimal strategy. The two learning groups are globally statistically different (p = 10−7). In panel (f), RT shows converse effect directions between learning and no-learning groups for both dependencies on TE and nH. The participants who learned the optimal strategy exhibited longer RT for the earlier trials within the episode, consistent with the need to inhibit the selection of the larger stimulus. Also, the larger the horizon, the longer the RT, opposite to the no-learning group. As expected, RT increases with decreasing ΔS for both groups. The two learning groups are globally statistically different (p = 10−17).

Figure 3 depicts the data from 3 sample participants. In particular we show their PFs, CHs, and RTs metrics, and the order of execution of the different blocks and horizons. Each column corresponds to a participant and each row to a different horizon level. Note that all three participants performed the nH = 0 task correctly (Figures 3A,B). The first 2 participants also performed nH = 1 correctly, while participant 3 did not learn the correct strategy until executing nH = 2. Note that participants 1 and 2 performed nH = 1 before nH = 2 and were able to apply what they learned in nH = 1 to nH = 2. Because of this, a very fast learning process can be seen during the first nH = 2 block. In Figure 3C, note that some RTs are negative. In these cases, the participant did not wait for the presentation of the GO signal to start the movement.

In this section we describe our mathematical formalization of consequential decision-making which incorporates a variable foresight mechanism and adapts to the distribution of reward value across trials. The formalization takes the form of a three-layer neural model. In brief, the bottommost layer is a mean-field model for binary decision-making. The mean-field is driven by a strategy learning layer which then dictate the choices to the decision-making process.

We feel this novel approach yields several advantages over more classical models (i.e., reinforcement learning, drift-diffusion, urgency-gating, etc.). In brief, we aim to provide a formalization of the neural processes involved in reward-driven, delayed-value, multi-step decisions in a context in which attaining reward is contingent on learning the covert effect of actions on the environment. In other words, learning must operate in the absence of explicit performance feedback. Another unique aspect of our approach is the incorporation of a foresight mechanism which adapts to the covert relationship between actions and their effect on the environment as well as to the distribution of reward value across the trials of an episode. We expand on the reasoning behind the creation of our novel formalization in the Discussion section.

To describe the neural dynamics at each trial, we used a mean-field approximation of a biophysically based binary decision-making model (Wilson and Cowan, 1972; Brunel and Wang, 2001; Wang, 2002; Thura et al., 2022). This approximation is often used to analyze neuronal dynamics in contexts where mean population activity is relevant. It has been shown that even simple mean-field approximations leveraging as little as two internal variables could reproduce most features of the underlying spiking neuron model (Wong and Wang, 2006).

The core of the model consists of two populations of excitatory neurons: one sensitive to the stimulus on the left-hand side of the screen (L), and the other to the stimulus on the right (R). The intensity of the evidence is the size of each stimulus, which is directly proportional to the amount of reward displayed. In the model this is captured by the parameters λL, λR, respectively. Though distinguishing between the bigger and smaller stimulus values is critical in our task, in the model it is convenient to characterize stimuli based on their position, i.e., left/right. The reason being that the information regarding target size is already conveyed by the respective stimuli values, i.e., the parameters λL, λR. Moreover, this allows us to introduce an extra degree of freedom in the model without increasing the number of variables. The equations

vs. for participant 2). Such disadvantageous initial conditions combined with a weak learning rate was not enough for the strategy to be learned in a block of 50 episodes.Figure 9D shows the goodness of fit for the two main behavioral metrics we aimed to reproduce: the reaction time (RT) and the performance in terms of initial performance (PFi) and learning time (tL). To measure the goodness of fit while remaining consistent with our fitting procedure, we used the following metrics: KSD for RT, mean-square error for PFi, and the difference between the participant’s data and the model’s mean divided by the total number of episodes for tL.

To summarize, we first found the best fit for the RT and VD by varying τ and β in Eq. 3. Then, we calculated the subjective initial bias ϕ0. Finally, while holding the aforementioned parameters fixed, we found the best fit for the learning rate k.

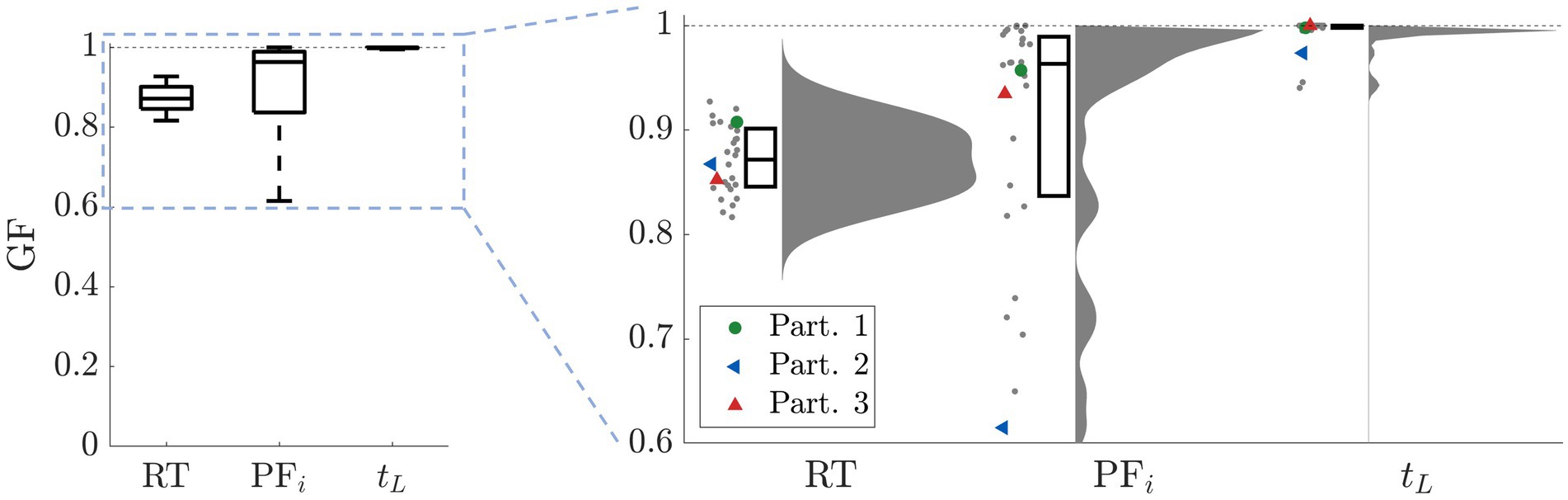

To illustrate that the model can capture the full range of behavior, Figure 10 shows the goodness of fit for the RT, initial performance PFi, and learning time tL for all 28 participants. For all three metrics, we show the scatter plot including each participant, the respective distribution, and the boxplot depicting the median and the 25th/75th percentiles. For reference, we superposed colored markers to indicate three sample participants shown in the previous figure.

Figure 10. Goodness of fit. For RT we calculated KSD, for PFi we evaluated the mean-square error, and for tL we took the difference between the participant’s data and the model’s mean divided by the total number of episodes. For all three metrics, we show the scatter plot of each single participant, the corresponding distribution, and the boxplot depicting the median and the 25/75 percentiles. For reference, the superposed colored markers indicate the three participants shown in the previous figure.

In summary, we fit the model to each of the participant’s behavioral metrics. We first used the RT distribution and VD of each participant to fit the parameters in Eq. 3. Once these parameters were fixed, we moved on to calculate the initial bias before running simulations of the model. Finally, we compared the results of the simulations with the performance of the participants and found the best fit for the behavioral parameters, i.e., the learning rate and decisional uncertainty.

3 DiscussionIn this study we analyzed how the consideration of consequence influences learning in value-based decision-making, and provided an account of the underlying neural processes. To this end, we examined how human participants learned to make sequences of decisions between value-based stimuli in the consequential task. This is a novel experimental task in which initial knowledge about environment was minimal, and explicit performance cues were absent. Consequence refers to the effect choices exert on the value of stimuli in the next trial. This was designed to promote small value choices during the early trials of each episode, and a large value one in the last trial. The instruction to each participant was to explore and to find the strategy leading to the highest cumulative reward value. The absence of explicit performance cues was meant to promote the development of a subjective assessment of performance based on relating the size of the stimuli in the current trial to the choice in the previous one. Our results show that decisions involving the computation of future consequence took longer to perform than those with no further consequence (i.e., the last choice of each episode), suggesting a more involved decision-making process when future consequence is to taken into account. Most participants eventually learned the optimal strategy, although with significant differences in their learning times.

Based on these observations and on previous evidence, we introduced a mathematical model of a set of plausible cognitive processes for consequence-based decision-making. The model is organized in three layers. The bottom layer describes the average dynamics of two neural populations representing the preference for each option. The populations compete against each other until their difference in activity crosses a threshold. The middle layer illustrates the participant’s preference for choosing the bigger or smaller stimulus at each trial (the so-called intended decision). The top layer describes the strategy learning process which oversees the model’s performance, adapts by reinforcement to maximize the cumulative reward value, and drives the intended decision layer. This oversight mechanism, combined with the modulation of preference, accurately reproduced an internal process of consequence assessment and subsequent policy update. The model was validated by fitting its parameters to reproduce each participant’s behavioral data (i.e., reaction time distribution, visual discrimination, initial bias, and performance). The model faithfully reproduced the participants’ behavior despite its varied nature. Importantly, this model also provides a plausible account of the neural processes required for gauging options as a function of their associated consequence (measured in terms of reward), and of how these processes are involved in decision-making.

3.1 Justification of the consequential taskReal world decisions are rarely accompanied by immediate feedback, there is often a conflict between short and long-term reward, consequences are often long-lasting, reward is often difficult to quantify, and state-action spaces often require exploration to define (as opposed to being known a priori). Several of these characteristics generate uncertainty and complicate performance assessment. The consequential task combined features common to both hierarchical decision-making (Lorteije et al., 2015; Zylberberg et al., 2017; Zylberberg, 2022) and delay discounting paradigms (Hayden and Platt, 2007; Kim et al., 2008; Hwang et al., 2009; Alexander and Brown, 2010; Hayden, 2016) to examine how this kind of decision-making unfolds. Moreover, the absence of cued performance feedback during the task made our paradigm particularly suitable for studying how learning optimal strategies may extend from immediate perceptual decision-making to a more complex process involving predictions of future states. Unlike standard hierarchical decision-making and partially observable Markov decision processes (Smallwood and Sondik, 1973; Kaelbling et al., 1998), participants in the consequential task were not aware of the underlying relationship between actions and their consequences. Participants were told only that the choice they made in one trial might influence the next. In this way, participants had to explore and observe the consequences of their choices to deduce that an inter-trial dependence existed. Moreover, participants could never be certain if they found the optimal solution, i.e., picked the correct sequence of decisions to maximize cumulative reward value. This is in sharp contrast to delay discounting tasks which largely focus on the principle of inhibitory short-term control where the presence of explicit cues helps overcome impulsive behavior, such as in the farming on Mars task (Gureckis and Love, 2009) (see below).

3.2 Cognitive hypothesis based on behavioral resultsThe purpose of our study was to understand how participants learned how their choices influenced the decision context, as opposed to assessing whether reward value varied with time. In other words, the absence of explicit cues was intended to force the participants to rely on their own subjective assessment to infer the delayed consequence of their decisions across groups of successive trials, and whether their strategy was being successful. This inner assessment had to be driven by the participant’s probing of patterns of action/decision effects. Complementary to this, we believe that participants had to go through a hypothesis testing process, until the eureka moment of realizing that one specific strategy was better than the others. Consequently, to find the optimal strategy, participants had to first realize that choosing the smaller option lead to more rewarding options (the eureka moment). Explicitly, this implies identifying the specific feature of the stimuli to be considered, having nothing else than the observance of their choice/action effects on the environment (the stimuli in the next trial). Then, they had to confirm their criterion based on the global effect of their choices on the stimuli size across episodes.

3.3 Rule-based vs. Far-sighted assessment of consequenceThe strategy to attain the highest possible cumulative reward value may be operationalized as a sequence of decision rules: choose small, then big in horizon 1 episodes; choose small, then small, then big, in horizon 2 episodes. Though we expected the participants’ choices to abide by these rules once the learning was complete and the optimal decision strategy was established, the focus of this study is on how consequence-based assessment forms and influences the learning of that optimal strategy. Because of this, it was crucial that the consequential task were devoid of any cued performance feedback, which could potentially inform the participant of his/her performance after each episode and, ultimately, promote a rule-based strategy.

For the same purpose, and to promote exploration, the participants were left with the uncertainty of neither having a criterion to follow to make decisions nor the knowledge about which aspect of the stimuli to attend to while making decisions. Note that, in addition to the bar heights (proportional to reward value), the stimuli at each trial were presented on the right and left of the screen, they were shown sequentially, randomly alternating their order of presentation across trials. Both the position and order of presentation of the stimuli increased the uncertainty with respect to the relevant stimuli dimensions. Under these conditions, participants had to perceive the relationship between their choices and the values of the stimuli presented in subsequent trials. If noticed, this observation could then be used to predict the consequence associated with choosing each option at each trial within episode. In other words, participants had to identify the relevant aspects of the stimuli for the goal at hand and rely on their own subjective perception of performance. This derived from their observations of the stimuli presented after each decision and by their own internal assessment criterion which itself was based on their ability to estimate the sum of water (reward value) throughout the trials of each episode.

To summarize, cued performance feedback could have reduced task to simple rule-based learning. Although the optimal strategy consists of a rule-based sequence, the crucial element of the task is that the participant must undergo a phase of exploration in which learning is driven by exploration and assessment of the reward-based consequence associated with each option.

3.4 Computational model of consequenceDrift-diffusion models (DDM) have been used to describe how sensory decisions unfold as a function of evidence accumulation (Ratcliff and McKoon, 2008). Likewise, urgency-gating model (Cisek et al., 2009) emphasize the contribution of the passage of time to make sensory based decisions in dynamic environments. Extended versions of the DDM have also been used to describe how evidence relates to value-based decisions via informative saccades (Krajbich et al., 2010; Krajbich and Rangel, 2011), extending into hybrid models that can adapt their parameters over time (Fontanesi et al., 2019; Boelts et al., 2022) via reinforcement learning (Sutton and Barto, 1981). However, these formulations fall short to describe the complexity of brain population dynamics during decision-making and of inhibitory processes therein. Furthermore, they do not capture how action effects and rewards are subjectively perceived and merged in contexts in which these are delayed and must be first perceived and learned, as it occurs in the consequential task. In brief, here we intended a formalization of the neural processes underlying reward-driven, delayed-value, multi-step decisions in a context in which attaining reward is contingent on learning the covert effect of actions on the environment. In this way, learning must operate in the absence of explicit performance feedback, and in the absence of knowledge of the target strategy itself, which is unlike previous RL-based formulations. By contrast, if the purpose of the present study were to merely provide an estimate of the participants’ decisions and learning process, an RL formulation could have been used to solve the credit assignment problem (Minsky, 1961) and learn the behavioral strategy. However, these models fall short of the aforementioned aspects of neuronal dynamics, competition and inhibition that we targeted in this study.

Learning in our model is operationalized by a reinforcement comparison algorithm (Amari, 1998; Brunel and Wang, 2001; Roxin and Ledberg, 2008; Krajbich et al., 2010; Cos et al., 2013; Shahar et al., 2019), scaled by the difference between predicted vs. obtained reward value (Sutton and Barto, 1981; Dayan, 1992), measured accordingly to the participant’s subjectively perceived scale. For simplicity, we assumed a fixed function across participants to quantify reward value [R(T) function in Eq. 4]. Furthermore, to provide the necessary flexibility for the model to capture the full range of participants’ learning dynamics, the model included two free parameters, the learning rate and the decisional uncertainty, to be fit to each participant’s behavior. The result is a model that could faithfully reproduce the full range of behaviors of each participant: RT distribution, pattern of decision-making, and learning time.

The model is organized in three layers. The lower neural dynamics layer represents the average activity of two neural populations competing for selection, each sensitive to one of the two stimuli at each trial. The commitment for an option is made when the difference in firing rate between the two populations crosses a given threshold (Amari, 1998; Brunel and Wang, 2001; Marcos et al., 2013). A similar architecture, with small variations, has been used to model decision-making in a broad set of tasks (Wong and Wang, 2006; Marcos et al., 2013; Marcos and Genovesio, 2016; Lam et al., 2022) and can describe most types of single-trial, binary decision-making, including value-based and perceptual paradigms. Importantly, our model does not provide a clear delineation between deliberation and commitment as DDMs do, but rather a neuron-like unselective ramp-up representation of options that diverge until a commitment is made. Like accumulation-to-bound models, attractor-based models can also account for speed-accuracy trade-offs during decision-making. We chose this kind of formalism because attractor models are more biologically realistic than the abstract accumulation-to-bound ones, and possibly provide a more promising avenue for unifying theories of brain and behavior. This was necessary for our model to provide a plausible explanation for the neural competition and inhibition known to operate in premotor and prefrontal cortical areas. Moreover, our model weighs inputs with recurrent activity during sequences of decisions and projects this formulation for a neighbor neurophysiological study. Note that this layer of the model can be derived analytically from a network of spiking neurons used for making binary decisions (Wang, 2002). Beyond the scope of this study, this model could also subserve probing into working memory (Deco and Rolls, 2005; Wong and Wang, 2006); a transient input could bring the system from the resting state to one of the two stimulus-selective persistent activity states, to be internally maintained across a delay period.

In addition to binary population competition, we claim that modeling consequence-based decision-making requires at least two additional mechanisms. The first one is needed to prioritize a specific policy to guide the decisions; the second one to create an internal mechanism of performance to evaluate these criteria, based on the difference between predicted and obtained reward value. Accordingly, the role of the middle layer (intended decision) is to implement those criteria which in our case depend on the relative value of the stimuli and on the number of trial within episode. Finally, the top layer (strategy learning) carries out learning by reinforcement comparison (Sutton and Barto, 2018) and temporal difference (Sutton and Barto, 1981; Houk et al., 1995).

Altogether, our model introduces a plausible implementation of the neurocognitive processes involved in consequence-based decision-making. Each part of the model is essential to describe decision-making, inhibition, and learning. For the neural dynamics layer, the set of equations corresponds to the most simplified version of a network of brain neurons during binary decision-making (Wong and Wang, 2006); it makes use of only two populations of neurons and a minimal set of parameters. The middle layer consists of one equation (with only one free parameter) and makes use of the simplest possible form of a two-attractor dynamical system (with the addition of a noise component). Finally, the top layer follows a reinforcement comparison algorithm, and adds a single free parameter to the model: the learning rate. Each of these elements is indispensable for a biologically plausible theoretical formalization of consequence-based decision-making. Without the first layer we would not have a biologically plausible decision-making model, without the middle layer we could not describe policy changes, and without the top layer we would not have learning.

Previous research describes models of learning processes during decision-making, for the most part implemented via RL (Sutton and Barto, 2018). Although our paradigm could also be modeled with RL, the clear advantage of our model is that it does not only describe the behavioral patterns of learning for each individual participant, but provides a biophysically plausible description of the underlying brain processes when predicting RTs. Moreover, our model is directly grounded on the neural substrate dynamics, since the mean-field approximation has been derived analytically from networks of spiking neurons (Wang, 2002).

The results and predictions depicted in the model show that the dynamics of the three layers combined can accurately reproduce the behavior of each single participant, including those who did not attain the optimal strategy. The low number (4) of equations in the model, together with the low number of free parameters (7, of which only 3 are used for fitting), makes this model a simple, yet powerful tool able to reproduce a large variety of behaviors. Moreover, unlike the basic RL agents or models for evidence accumulation, our model is biologically plausible and predicting individual behavioral metrics, such as RT, initial bias, and visual discrimination. Note that, for the behavioral part of the model, only one free parameter is used, i.e., learning rate. A larger number of free parameters (at least 3) is needed for classical reinforcement learning algorithms, e.g., Q-learning.

The comprehensive formulation of the model makes it possible to explain and fit various scenarios. We have already mentioned the differences in learning speeds, and that the model could fit any of them, even when there was no learning. Another example is the difference in the order of execution of the blocks. Namely, most participant were able to take the optimal strategy learned in one horizon and generalize it to the other horizon block, making the learning much faster (see Supplementary materials). In our model, this is captured mainly by the initial bias which is calculated for each block individually. As third example, potentially, a characteristic that our model could fit is the difference in RT between trials within episodes and horizons (see Figure 2F). In this manuscript, for simplicity, we decided to perform a single fit for the neural dynamics’ equations, finding one set of parameters per participants. To explain the differences between horizons and trials within episodes, the same fit should be done for each condition. Moreover, even if it is not the case of this specific task, the model is able to adapt in case of a sudden change of strategy. Nevertheless, if this would be the case, it would be advisable to adopt a more realistic adaptation mechanism. Namely, it seems reasonable to assume that, after learning, once a participant realizes that the optimal strategy used so far is not working anymore, he would reset his strategy instead of gradually change it. However, even though it is an interesting topic, this is work for future investigation.

4 Conclusion and future workIn this manuscript we have introduced a minimalistic formalism of the brain dynamics of consequence-based decision-making and its associated learning process. We validated this formalism with the behavioral data gathered from 28 human participants, which the model could accurately reproduce. By extending classic, single-trial binary decision-making, we designed a oversight mechanism based on the assessment of the effect of decisions on subsequent stimuli, and a reinforcement rule to modify behavioral preferences. We also designed the consequential task, an experimental framework in which acquiring the most reward value required learning to assess the consequence associated with each option during the decision-making process. Both the experimental results and the model predictions describe consequence-based decision-making as an extended version of value-based decision-making in which the computation of predicted reward value may extend over several trials. The formalism introduces the necessary notions of oversight of the current strategy and of adaptive reinforcement, as the minimal requirements to learn consequence-based decision-making.

Although our model has been designed and tested in the consequential task described here, we argue that its generalization to similar paradigms in which optimal decisions require assessing the consequence associated to the presented options, or sequences of multiple decisions, may be relatively straightforward. Specifically, we envision three possible future extensions to facilitate its generalization. First, the model could incorporate several preference criteria (either simultaneously or combinations thereof) into the intended decision layer: left vs. right or first vs. second, instead of small vs. big, to be determined in a dynamical fashion. This could be achieved with a multi-dimensional attractor model, with as many basins of attraction as the number of preference criteria to be considered.

The second future extension is the re-definition of the reward function R(T) according to the subjective criterion of preference. Namely, a reward value can be perceived differently by different participants, i.e., people operate optimally according to their own subjective perception of the reward value. Because of this, a possible extension is to incorporate an individual reward value function per participant (R(T) in Eq. 4). For simplicity, in this manuscript we set R(T) to be fixed and to be the objective reward value function. In case a participant did not perceive what was the optimal reward value, he/she performed sub-optimally according to objective reward function, and the model responded by allowing the learning constant k to be zero. This holds since the optimal strategy was never reached, and the fitting of the participant’s performance was correct. Nevertheless, it remains a standing work of significant interest to investigate different subjective reward mechanisms and their implementation in the model.

Finally, the third enhancement we propose for our model is making the learning rate time dependent, i.e., k(E). This would facilitate reproducing learning processes starting at different times throughout the session. For example, it is possible that participants initiate the session having in mind a possible (incorrect) strategy and they stick to it without looking for clues, and therefore without learning the optimal policy. Nevertheless, after many trials they may change their mind and begin to explore different strategies. In this case, the learning rate k(E) would be set to zero for all the initial trials when indeed there is no learning.

Again, we want to emphasize that even if this model is built for the consequential task, it contains all the elements and processes to reproduce behavior from other tasks involving sequential consequence-based decision-making. Note that the strategy learning mechanism is already general enough to adapt to tasks where the optimal policy is not fixed throughout the experiment. In the case of a policy reversal, for example, the learning mechanism would be able to detect a change and adapt accordingly. Finally, we want to stress that our model could be applied to other decision-making paradigms, such as a version of the consequential random-dot task (Britten et al., 1993) or other multiple-option paradigms.

5 Materials and methods 5.1 ParticipantsA total of 28 participants (15 males, 13 females; age range 18–30 years; all right hand dominant) participated in the experimental task. All participants were neurologically healthy, had normal or corrected to normal vision, were naive as to the purpose of the study, and gave informed consent before participating. The study was approved by the local Clinical Research Ethics Committee (CEIm Ref. #2021/9743/I) and was conducted in accordance with relevant guidelines and regulations. Participants were paid a €10 show-up fee.

5.2 Experimental setupParticipants were situated in the laboratory room at the Facultat de Matemàtiques i Informàtica, Universitat de Barcelona, where the task was performed. The participants were seated in a chair, facing the experimental table, with their chest approximately 10 cm from the table edge and their right arm resting on its surface. The table defined the plane where reaching movements were to be performed by sliding a light computer mouse (Logitech Inc). On the table, approximately 60 cm away from the participant’s sitting position, we placed a vertically-oriented, 24” Acer G245HQ computer screen (1920×1080). This monitor was connected to an Intel i5 (3.20GHz, 64-bit OS, 8 GB RAM) portable computer that ran custom-made scripts, programmed in MATLAB with the help of the MonkeyLogic toolbox, to control task flow (NIMH MonkeyLogic, NIH, United States; https://monkeylogic.nimh.nih.gov). The screen was used to show the stimuli at each trial and the position of the mouse in real time.

As part of the experiment, the participants had to respond by performing overt movements with their arm along the table plane while holding the computer mouse. Their movements were recorded with a Mouse (Logitech, Inc), sampled at 1 kHz, which we used to track hand position. Given that the monitor was placed upright on the table and movements were performed on the table plane (horizontally, approximately from the center of the table to the left or right target side), the plane of movement was perpendicular to that of the screen, where the stimuli and finger trajectories were presented. Data analyses were performed with custom-built MATLAB scripts (The Mathworks, Natick, MA), licensed to the Universitat de Barcelona.

5.3 Consequential decision-making taskThis section describes the consequential decision-making task, designed to assess the role of consequence on decision-making while promoting prefrontal inhibitory control (Wessel and Aron, 2017). Since consequence depends on a predictive evaluation of future contexts, we designed a task in which trials were grouped together into episodes (groups of one, two or three consecutive trials), establishing the horizon of consequence for the decision-making problem within that block of trials.

The number of trials per episode equals the horizon nH plus 1. In brief, within an episode, a decision in the initial trial influences the stimuli to be shown in the next trial(s) in a specific fashion, unbeknown to our participants. Although a reward value is gained by selecting one of the stimuli presented in each trial, the goal is not to gain the largest amount as possible per trial, but rather per episode.

Each participant performed 100 episodes for each horizon nH = 0, 1, and 2. In the interest of comparing results, we have generated a list of stimuli for each nH and used it for all participants. To avoid fatigue and keep the participants focused, we divided the experiment into 6 blocks, to be performed on the same day, each consisting of approximately 100 trials. More specifically, there was 1 block of nH = 0 with 100 trials, 2 blocks of nH = 1 each with 100 trials, and 3 blocks of nH = 2 with two of them of 105 trials and one of 90. Finally, we have randomized the order in which participants performed the horizons.

Figure 1 shows the timeline of one nH = 1 episode (2 consecutive trials). The episode consists of two dependent trials. At the beginning of the trial, the participant was required to move the cursor onto a central target. After a fixation time (500 ms), the two target boxes were shown one after the other (for 500 ms each) to the left and right of the screen, in a random order. Targets were rectangles filled in blue by a percentage corresponding to the reward value associated with each stimulus (analogous to water containers). Next, both targets were presented together. This served as the GO signal for the participant to choose one of them (within an interval of 4 s). Participants had to report their choice by making a reaching movement with the computer mouse from the central target to the target of their choice (right or left container). If the participant did not make a choice within 4 s, the trial was marked as an error trial. Once one of the targets had been reached for and the participant had held that position (500 ms), the selection was recorded, and a yellow dot appeared above the selected target, indicating successful selection and reward value acquisition. In case of horizons larger than 0, the second trial started following the same pattern, although with a set of stimuli that depended on the previous decision (see next section). A progress bar at the bottom of the screen indicates the current trial within the episode (for nH = 1, 50% during the first trial, 100% during the second trial).

At the beginning of the session, participants were given instructions on how to perform the task. Specifically, using some sample trials, we demonstrated them how to select a stimulus by moving the mouse. Step by step we showed that a target appears in the center of the screen indicating the start of an episode. We told them that they had 4 s to move the cursor to the central cross. After moving the cursor to the central cross, two bars appear, one after the other, and once both appear together/simultaneously, they had 4 s to make their decision by moving the cursor over one of the two bars. At that point a yellow dot appears over the bar indicating their selection. After that, the central target appears again indicating the beginning of a new trial. After explaining how to technically execute the task, we focused on explaining the task goal. We showed them a schematic of the task, much like the one in Figure 1A illustrating the structure of trials and episodes. We told them that the goal is to get as much reward (water) as possible in each episode, and that for episodes with more than 1 trial each, the choice in a trial may have an effect on what appears in the next trial in the same episode. We encouraged them to explore in order to try to figure out what that effect might be, while keeping in mind that their goal is always to maximize the total reward in each episode. Finally, we told them that they will be presented with a series of episodes in a row, each episode is independent, meaning that their decisions in one episode have no effect on subsequent ones.

5.4 Episode structureThe participants were instructed to maximize the cumulative reward value throughout each episode, namely the sum of water contained by the selected targets across the trials of the episode. If trials within an episode were independent, the optimal choice would be to always choose the largest stimulus. Since one of the major goals of our study was to investigate delayed consequence assessment involving adaptive choices, we deliberately created dependent trial contexts in which making incentive decisions (selecting the larger stimulus) would not lead to the most cumulative reward value within episode.

To promote inhibitory choices, the inter-trial relationship was designed such that selecting the small (large) stimulus in a trial, yielded an increase (decrease) in the mean value of the options presented in the next trial. As explained below, because of the parameters choice we made, always choosing the larger stimulus

留言 (0)