Autonomous driving technology exemplifies a crucial application of robotics theories and techniques, aiming to ensure safe and efficient self-driving in real-world traffic environments (Zhang et al., 2024). Within autonomous driving systems, decision-making and planning algorithms are pivotal, tasked with generating driving behaviors and planning trajectories. Broadly, decision-making and planning in autonomous driving encompass two phases: behavior decision-making and motion planning. Behavior decision-making addresses responses to transient events, such as abnormal driving behaviors of other vehicles, sudden pedestrian crossings, and yielding to emergency vehicles (Xu et al., 2024; Wang T. et al., 2024). In this phase, the decision system must be highly adaptable and able to anticipate potential future scenarios, allowing quick adjustments such as lane changes, acceleration, or deceleration based on real-time conditions (Yang et al., 2024; Feng et al., 2024). Motion planning addresses a more granular aspect of autonomous driving, generating detailed trajectories from the current vehicle state and the outputs of behavior decision-making (Li Z. et al., 2023). Motion planning ensures the smoothness and comfort of the vehicle's trajectory while respecting dynamic constraints such as speed and acceleration limits. Because safe and flexible interaction remains a significant challenge, behavior decision-making and motion planning have become critical focal points in autonomous driving research, and they are the primary subject of this paper.

The decision-making and motion planning algorithms for autonomous vehicles integrate theories from multiple disciplines, including machine learning, pattern recognition, intelligent optimization, and nonlinear control (Lu et al., 2024). Deep learning techniques, for example, improve a model's ability to encode features (Bidwe et al., 2022). Together, these technologies provide the foundation for safe interactions between autonomous vehicles and other road users on public roads. Furthermore, decision-making and planning algorithms must consider ethical and legal responsibilities, ensuring adherence to socially accepted moral standards and compliance with traffic regulations during emergencies (Zheng et al., 2024; Gao et al., 2024). Current research on decision-making and planning focuses on improving robustness, enhancing stability and safety in unforeseen situations, and predicting the surrounding environment and the behavior of other traffic participants more accurately (Wen et al., 2023; Wang W. et al., 2023; Zhai et al., 2023). Additionally, efforts are being made to reduce computational cost and improve algorithmic efficiency so that systems can respond rapidly under resource-constrained conditions.

To pursue these objectives, researchers primarily employ knowledge-driven and data-driven approaches to construct decision-making and motion planning systems. The knowledge-driven approach simulates human decision-making by encoding expert knowledge and logical rules. By integrating information such as road characteristics, traffic regulations, and historical behavior data, these approaches can search for the optimal driving path or optimize a specific objective function, thereby achieving safe and efficient driving strategies (Jia et al., 2023; Chen L. et al., 2023; Aoki et al., 2023). Concurrently, data-driven approaches have risen to prominence, propelled by advances in machine learning and statistical analysis. Unlike knowledge-driven methods, data-driven approaches do not require explicit, predefined rules. Instead, they improve decision accuracy and adaptability by training and optimizing decision models on large amounts of real driving data. Particularly in complex and dynamic traffic environments, data-driven strategies can effectively learn and emulate the behaviors and decision-making processes of human drivers (Wang T. H. et al., 2023), which also improves their generalizability across different environments. However, relying solely on data-driven methods has limitations: these methods typically require large volumes of labeled data for training and often have poor interpretability, making it difficult to guarantee consistent and safe decisions.

In industry, meanwhile, the deployment of autonomous vehicles (AVs) is gradually expanding, particularly in the commercial sector. AVs currently on the market rely on a few key decision-making and planning methodologies: rule-based systems, state transition models such as Markov Decision Processes (MDPs) and Partially Observable Markov Decision Processes (POMDPs), and game-theoretic approaches. These vehicles use sophisticated algorithms to process the road environment and vehicle states, optimizing state transitions to select the best available decisions.

AVs are currently being tested and operated in specific geographic areas and under certain traffic conditions, with Waymo and Tesla as prominent examples. Waymo offers autonomous taxi services in Phoenix, Arizona, and is expanding its service area; reports indicate that it plans to extend its services to Miami, Florida, to gain an edge in an increasingly competitive market. Moreover, Waymo has partnered with the automotive financing company Moove, which will manage Waymo's fleet operations in Phoenix, including maintenance of the autonomous taxis and management of charging infrastructure. Waymo currently has approximately 200 autonomous vehicles deployed in Phoenix. Tesla, on the other hand, collects data through its fleet learning program to improve its autonomous driving systems. Although Tesla's Autopilot and Full Self-Driving (FSD) systems represent significant advances in autonomous technology, a considerable gap remains before they achieve true Level 4 (L4) autonomous driving capabilities. Tesla employs an end-to-end (E2E) deep learning strategy, integrating neural networks and reinforcement learning in an attempt to raise the intelligence of its autonomous driving. Its Robotaxi effort faces challenges including safety and reliability issues, regulatory and licensing hurdles, and market acceptance and operational difficulties. These deployments illustrate both the applicability and the challenges of autonomous technology under real-world conditions, and they show how industry leaders test and refine their technologies in specific geographic and traffic settings. As the technology matures and regulatory environments adapt, AV deployment is expected to become more widespread and more deeply integrated.

Despite their immense potential, AVs still face limitations in decision-making and planning, including interaction with human drivers in mixed traffic, responses to unexpected situations, and adaptability in complex traffic environments. AV decision-making and planning systems must also meet the ethical and legal responsibilities noted above, adhering to socially accepted moral standards and complying with traffic regulations during emergencies.

Neurorobotic approaches, which combine neural networks and robotics, offer new possibilities for AV decision-making and planning. By learning decision models from extensive real-world driving data, these methods can improve decision accuracy and adaptability, emulating human driving behavior in complex, dynamic traffic and generalizing across environments. They nonetheless inherit the limitations of purely data-driven methods discussed above: a need for large volumes of labeled training data and poor interpretability, which make consistent and safe decisions difficult to guarantee.

Thus, an increasing number of researchers are attempting to combine knowledge-driven and data-driven methods to complement each other. In this paper, we refer to these combined methods as hybrid methods. Hybrid methods harness the advantages of both approaches: the data-driven component improves the system's adaptability to complex environments and the accuracy of predictions by extracting patterns and behaviors from extensive driving data; meanwhile, the knowledge-driven component ensures decisions comply with traffic regulations and safety standards, providing systematic constraints and guidance within a well-defined framework. This combination allows for more flexible, robust, and interpretable decision planning (Singh, 2023). Although hybrid methods aim to integrate the strengths of knowledge-driven and data-driven approaches, they also present certain limitations and potential challenges in development. The design and implementation of hybrid methods are complex, requiring precise integration of two fundamentally different techniques. This not only demands strong theoretical knowledge from algorithm designers but also necessitates continuous tuning and optimization in practice to achieve optimal performance. By thoroughly discussing and comparing these algorithms, we aim to understand their respective advantages and limitations and explore effective ways to integrate these methods to tackle complex decision-making and motion planning problems in autonomous driving.
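As a minimal sketch of the hybrid idea, assuming a learned policy that scores candidate maneuvers and a hand-written rule check (both hypothetical here), the knowledge-driven component can act as a filter that vetoes rule-violating actions before the data-driven ranking is applied. The function and action names are illustrative and are not taken from any cited system.

```python
from typing import Callable, Dict


def shielded_action(
    scores: Dict[str, float],           # data-driven part: e.g. learned policy scores (hypothetical)
    is_allowed: Callable[[str], bool],  # knowledge-driven part: rule/safety check (hypothetical)
    fallback: str,                      # conservative action used when every candidate is vetoed
) -> str:
    """Return the highest-scoring action that passes the rule check, else the fallback."""
    allowed = [a for a in scores if is_allowed(a)]
    if not allowed:
        return fallback
    return max(allowed, key=lambda a: scores[a])


# Usage: the learned scores prefer a left lane change, but a rule forbids it
# because the left lane is occupied, so the best remaining legal action is chosen.
scores = {"keep_lane": 0.2, "change_lane_left": 0.7, "accelerate": 0.1}
left_lane_occupied = True


def rules(action: str) -> bool:
    return not (action == "change_lane_left" and left_lane_occupied)


print(shielded_action(scores, rules, fallback="decelerate"))  # keep_lane
```

This hard-veto design keeps the rule layer simple to audit; softer couplings (for example, rule violations as penalties in the learned objective) trade some of that transparency for flexibility.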

1.2 Paper structure

This review provides a comprehensive overview of decision-making and planning technologies in autonomous driving systems. The following sections detail the research progress in each aspect.

Introduction: The introduction reviews the essential role of decision-making and planning in autonomous driving systems, outlining the historical applications and unique advantages and limitations of knowledge-driven, data-driven, and hybrid methods. It provides a detailed comparative analysis of these methods, discusses their effectiveness in various scenarios, and summarizes the article's structure to offer a comprehensive understanding of the advancements in the field.

Knowledge-driven decision and planning methods: This section explores knowledge-driven decision-making and planning methods, focusing on the decision process and the path planning process. It covers rule-based systems, state-transition systems including Markov Decision Processes (MDPs), game-theory-based decision models, and path planning methods such as search-based algorithms (A* and Dijkstra), sampling-based algorithms (RRT and PRM), and optimization-based techniques.

Data-driven decision and planning methods: This section explores data-driven decision-making and planning methods, covering imitation learning, reinforcement learning, inverse reinforcement learning, and their associated challenges. It discusses training systems by observing expert behavior, learning optimal strategies through interaction with the environment, and inferring reward functions from demonstrations, emphasizing their application and real-world challenges in autonomous driving.

Hybrid decision and planning methods: This section examines hybrid decision-making and planning methods that merge knowledge-driven and data-driven approaches to improve accuracy and efficiency. It discusses the integration of expert knowledge with techniques like imitation and reinforcement learning, highlighting both the benefits of this combination and the technical and practical challenges in implementation.

Experiment platform: This section explores two pivotal resources for autonomous driving research, datasets and simulation platforms. It details the sources, composition, and applications of datasets and how they support the development of decision-making algorithms, and it evaluates simulation platforms, examining their features and their role in testing algorithms and simulating complex traffic environments, both crucial for advancing autonomous driving technology.

Challenges and future perspectives: This section addresses current challenges in autonomous driving decision-making and planning, including environmental perception uncertainties, unpredictability of traffic participants, and limitations of data-driven algorithms, and examines industry and academic responses. It also looks to future trends, such as multi-sensor fusion, deep learning, behavior prediction models, scenario simulation, reinforcement learning, synthetic data, continuous learning, and explainable AI.

1.3 Significance and contributions

The autonomous driving industry has been progressing rapidly, yet there is a notable gap in the systematic analysis and synthesis of decision-making and planning methods. Our review aims to bridge this gap by providing a comprehensive, systematic categorization, comparison, and analysis of the state of the art in autonomous driving decision-making and planning. Through this examination, we identify a clear trend and a compelling direction for future research: the integration of knowledge-driven and data-driven approaches into hybrid methods.

The lack of systematic analysis in the field has led to fragmented development and a lack of clarity on the most effective strategies for advancing autonomous driving systems. Our review responds to the need for a structured evaluation of the various methodologies, encompassing rule-based, state-transition-based, game-theory-based, search-based, sampling-based, and optimization-based methods. Through a thorough comparative analysis, we elucidate the strengths and limitations of each approach and how they complement one another.

Our systematic summary and synthesis have led us to conclude that the future of autonomous driving decision-making and planning lies in hybrid methods. This conclusion is not merely a promotion of a particular approach but is grounded in the recognition that no single methodology can address the multifaceted challenges of autonomous driving. Hybrid methods offer a balanced and comprehensive framework that leverages the strengths of both knowledge-driven and data-driven strategies, thereby enhancing the adaptability, safety, and interpretability of autonomous vehicles.

We advocate for hybrid methods as the future research direction because they hold the potential to:

Improve Adaptability: By incorporating data-driven learning, hybrid methods can adapt to dynamic and complex traffic scenarios that exceed the capabilities of traditional rule-based systems.

Enhance Safety and Reliability: Knowledge-driven components provide a safety net, ensuring that decisions comply with predefined rules and ethical standards, which is critical for public trust and regulatory compliance.

Ensure Interpretability: The combination of data-driven flexibility with knowledge-driven structure allows for greater transparency in decision-making processes, which is essential for debugging, optimization, and building user trust.

In conclusion, our systematic analysis and synthesis of autonomous driving decision-making and planning methods provide a clear premise and direction for the field. We believe that the hybrid approach, informed by our comprehensive review, is not only a promising direction but also a necessary evolution in the development of autonomous driving systems. Our review serves as a roadmap for researchers and practitioners, guiding the industry toward a future where autonomous vehicles can operate with enhanced safety, efficiency, and reliability. The contributions of this paper can be summarized as follows:

This paper provides a comprehensive overview of automated decision-making and planning methods and classifies them into three categories: knowledge-driven methods, data-driven methods, and hybrid methods. For knowledge-driven methods, we highlight the efficiency and accuracy of expert systems in handling specific decision problems through rule-system design and state management, explore the role of game theory in strategy formation, and discuss how search and optimization algorithms plan paths. For data-driven methods, we analyze decision-making and planning based on reinforcement learning, imitation learning, and inverse reinforcement learning, covering their theoretical foundations, current applications, and challenges. This analysis highlights the strengths and limitations of the various methods and provides direction for future research and technological improvement. Moreover, we examine hybrid methods, emphasizing their potential to combine the advantages of data-driven and knowledge-driven approaches in autonomous driving decision systems.

Furthermore, we detail various virtual simulation platforms and physical experimental facilities necessary for testing and validating algorithms, emphasizing their crucial role in transitioning algorithms from theory to real-world applications.

Finally, we discuss industry challenges and prospects, clarifying future research directions and the integration potential of emerging technologies in the field of automated decision-making and planning.

Overall, this paper enriches academic research in automated decision-making and planning and guides practitioners in the field, contributing positively to technological advancements in this domain.

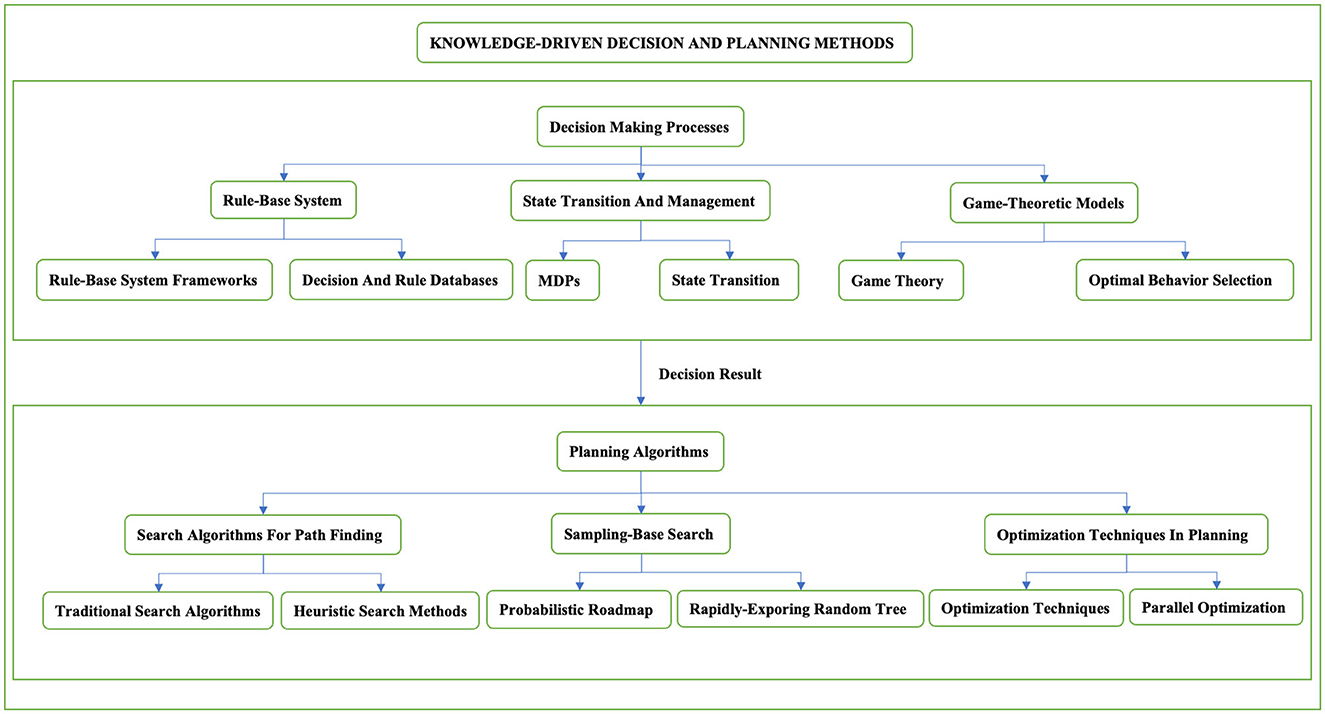

2 Knowledge-driven decision and planning methods

The knowledge-driven method typically separates the decision-making phase from the path planning phase to enhance modularity, manageability, and efficiency. This separation allows the decision module to focus on high-level strategies, such as overtaking or following other vehicles, while the path planning module translates these strategies into specific driving paths. The overall classification structure of knowledge-driven methods is shown in Figure 1.

Figure 1. Classification structure of knowledge-driven methods. The figure classifies knowledge-driven decision-making and planning methods by stage: the decision-making process and the planning process. Section 2 discusses each method in both stages in detail.

The advantage of knowledge-driven planning is that it keeps the vehicle in a safe state within the bounds set by its predefined rules. However, the approach also has drawbacks, such as overly conservative decisions and increased time complexity as rules accumulate. Trajectory post-processing helps ensure the smoothness and safety of the vehicle's trajectory, but planning delays persist. The following sections discuss knowledge-driven methods in detail, covering both the decision-making and planning stages and examining their benefits, limitations, and potential improvements.

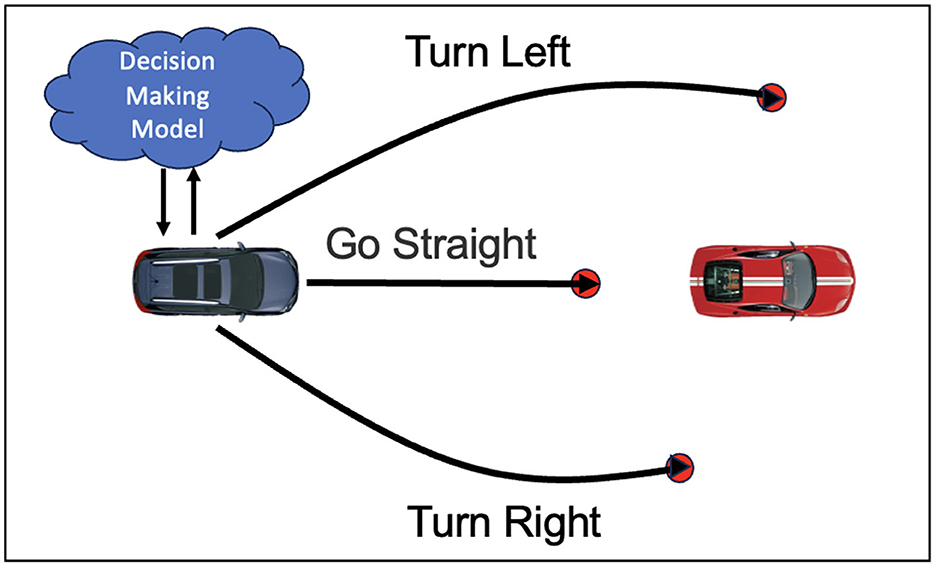

2.1 Decision-making processes

The decision module provides initial coarse-grained decisions for the knowledge-driven decision and planning model, as shown in Figure 2. Decision-making methods can be categorized into rule-based, state-transition-based, and game-theory-based approaches. Rule-based methods use predefined traffic rules and driving strategies, making decisions with conditional logic and reasoning. State-transition methods, such as Markov Decision Processes (MDPs) and Partially Observable Markov Decision Processes (POMDPs), represent the driving environment and vehicle states as a state space and choose actions that optimize the expected outcome of state transitions. Game-theory-based approaches treat autonomous driving as a multi-agent system, using game theory to analyze and predict the behaviors of other traffic participants and to form cooperative or competitive driving strategies. The following sections describe these decision-making methods in detail.

Figure 2. Schematic diagram of knowledge driven decision-making. The figure describes the possible decisions that the vehicle may make when encountering an obstacle vehicle, including going straight, turning left, and turning right. The vehicle plans the optimal trajectory based on the decision-planning model.

2.1.1 Rule-based decision systems

Rule-based systems make decisions with predefined rules and logic, relying on expert knowledge and experience to build their decision logic. Zhao et al. (2021) propose a rule-based system that uses if-then rules to process perception information from the traffic environment and generate corresponding driving behaviors; its decision logic selects the optimal driving strategy based on surrounding traffic conditions and a potential-risk assessment. The design of the rule database is another crucial component of rule-based systems. Pellkofer and Dickmanns (2002) propose a behavior decision module that executes task plans produced by task-planning experts from a rule database. Hillenbrand et al. (2006) introduce a multi-level collision mitigation system that decides whether to intervene by evaluating the remaining reaction time (TTR). The system employs a time-based decision-making approach and provides a flexible trade-off between potential benefits and risks while maintaining product liability protection and driver acceptance. Dam et al. (2022) propose an advanced predictive mechanism that analyzes the current state of traffic flow and vehicle behavior patterns, using probabilistic models and machine learning techniques to anticipate potential upcoming situations.
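To make the if-then structure concrete, the sketch below encodes a few ordered rules around a constant-velocity time-to-collision (TTC) check. TTC is used here only as a simple stand-in for time-based criteria such as the remaining reaction time discussed above; the `Scene` fields, thresholds, and maneuver names are hypothetical and not taken from any of the cited systems.

```python
from dataclasses import dataclass


@dataclass
class Scene:
    ego_speed: float       # m/s
    lead_gap: float        # m, gap to the vehicle ahead in the ego lane
    lead_speed: float      # m/s
    left_lane_free: bool   # hypothetical perception flags
    right_lane_free: bool


# Hypothetical thresholds in seconds; real systems calibrate these per vehicle and scenario.
TTC_EMERGENCY = 1.5
TTC_CAUTION = 4.0


def time_to_collision(gap: float, ego_speed: float, lead_speed: float) -> float:
    """Constant-velocity TTC; infinite when the ego vehicle is not closing in."""
    closing_speed = ego_speed - lead_speed
    return gap / closing_speed if closing_speed > 0.0 else float("inf")


def decide(scene: Scene) -> str:
    """Ordered if-then rules: the first rule whose condition holds determines the maneuver."""
    ttc = time_to_collision(scene.lead_gap, scene.ego_speed, scene.lead_speed)
    if ttc < TTC_EMERGENCY:
        return "emergency_brake"
    if ttc < TTC_CAUTION:
        if scene.left_lane_free:
            return "change_lane_left"
        if scene.right_lane_free:
            return "change_lane_right"
        return "decelerate"
    return "keep_lane"


print(decide(Scene(20.0, 30.0, 10.0, True, False)))   # TTC = 3.0 s -> change_lane_left
print(decide(Scene(20.0, 10.0, 10.0, True, True)))    # TTC = 1.0 s -> emergency_brake
print(decide(Scene(20.0, 50.0, 25.0, False, False)))  # not closing -> keep_lane
```

Here rule order encodes priority; as rules accumulate, keeping such orderings consistent becomes harder, which is one source of the growing complexity of rule-based systems.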

In highly complex traffic environments, maintaining system reliability and effectiveness is a critical issue. Zhang T. et al. (2023) address this problem by proposing several solutions, including enhanced perception capabilities, real-time traffic data analysis, adaptive rule adjustment, multimodal decision fusion, safety strategies, redundancy design, and human-machine interaction optimization. Koo et al. (2015) discuss how to enhance the transparency of rule based systems, making their decision-making processes easier to understand and verify. Noh and An (2017) define a decision-making framework for highway environments, capable of reliably and robustly assessing collision probabilities under current traffic conditions and automatically determining appropriate driving strategies. This framework consists of two main components: situation assessment and strategy decision-making. The situation assessment component uses multiple complementary “threat metrics” and Bayesian networks to calculate “threat levels” at both vehicle and lane levels, assessing collision probabilities in specific highway traffic conditions. The strategy decision-making component automatically determines suitable driving strategies for given highway scenarios, aiming for collision-free, goal-oriented behavior.

We argue that trajectory prediction and multi-criteria trajectory quality evaluation should be integrated into rule-based decision-making systems. Doing so would allow drivable trajectories for future time steps to be planned more quickly and accurately while maintaining driving safety. Above all, such systems must ensure the safety of all agents in complex environments.

2.1.2 State transition and management models

State transition and management models describe how a vehicle moves between different states, and state management involves making decisions based on the current state and environmental information. Markov Decision Processes (MDPs) are particularly significant here, providing an effective mathematical framework for state management. Galesloot et al. (2024) introduce a novel online planning algorithm to address challenges in multi-agent partially observable Markov decision processes (MPOMDPs). They integrate weighted particle filtering into sample-based online planners and exploit the locality of agent interactions to develop new online planning algorithms that operate on a Sparse Particle Filter Tree. Sheng et al. (2023) introduce a safe online POMDP planning approach that computes shields to restrict unsafe actions that would violate reach-avoid specifications. These shields are integrated into the POMDP algorithm, and the authors present four methods for computing and integrating them, including a decomposed variant aimed at enhancing scalability. Furthermore, Barenboim and Indelman (2024) propose an online POMDP planning method that provides deterministic guarantees relating the solution of a simplified problem to the theoretical optimum. They derive tight deterministic upper and lower bounds for selecting observation subsets at each posterior node of the tree, and the method simultaneously constrains subsets of the state and observation spaces to support comprehensive belief updates. Ulfsjöö and Axehill (2022) combine POMDP and scenario model predictive control (SCMPC) in a two-step planning method to address uncertainty in highway planning for autonomous vehicles. Huang Z. et al. (2024) propose an online learning-based behavior prediction model and an efficient planner for autonomous driving, using a transformer-based model integrated with recurrent neural memory to dynamically update latent belief states and infer the intentions of other traffic participants. They also employ an option-based Monte Carlo Tree Search (MCTS) planner that reduces computational complexity by searching over action sequences. Schörner et al. (2019) develop a hierarchical framework for autonomous vehicles in multi-interaction environments. This framework addresses decision-making under occlusion by computing the vehicle's observation range, and it considers current and predicted environments to anticipate potentially hidden traffic participants. Lev-Yehudi et al. (2024) introduce a novel POMDP planning approach for target object search in partially unknown environments. Liu et al. (2015) present a trajectory planning method that uses a POMDP to handle scenarios with hidden road users. Chen and Kurniawati (2024) propose a context-aware decision-making algorithm for urban autonomous driving, modeling the decision problem as a POMDP and solving it online.
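Most of the POMDP methods above maintain a belief, a probability distribution over hidden states, and update it after each action and observation. For a discrete state space this update is a Bayes filter: the new belief b'(s') is proportional to O(o | s', a) times the sum over s of T(s' | s, a) b(s). The minimal sketch below implements that update; the two-state "yield"/"go" intention model and its probabilities are invented for illustration.

```python
from typing import Callable, Dict

Belief = Dict[str, float]


def belief_update(
    belief: Belief,
    action: str,
    observation: str,
    transition: Callable[[str, str, str], float],  # T(s_next | s, a), called as transition(s, a, s_next)
    observe: Callable[[str, str, str], float],     # O(o | s_next, a), called as observe(s_next, a, o)
) -> Belief:
    """Discrete Bayes filter: b'(s') is proportional to O(o | s', a) * sum_s T(s' | s, a) * b(s)."""
    unnormalized = {}
    for s_next in belief:
        predicted = sum(transition(s, action, s_next) * p for s, p in belief.items())
        unnormalized[s_next] = observe(s_next, action, observation) * predicted
    total = sum(unnormalized.values())
    if total == 0.0:
        raise ValueError("observation has zero probability under this model")
    return {s: p / total for s, p in unnormalized.items()}


# Hypothetical model: another driver's hidden intention is "yield" or "go".
def transition(s: str, a: str, s_next: str) -> float:
    return 0.9 if s == s_next else 0.1  # intentions usually persist between steps


def observe(s_next: str, a: str, o: str) -> float:
    p_decel = 0.8 if s_next == "yield" else 0.3  # yielding drivers tend to slow down
    return p_decel if o == "decelerating" else 1.0 - p_decel


b = belief_update({"yield": 0.5, "go": 0.5}, "wait", "decelerating", transition, observe)
print({s: round(p, 3) for s, p in b.items()})  # {'yield': 0.727, 'go': 0.273}
```

Particle-filter approaches such as those cited above approximate this same update with weighted samples when the state space is too large to enumerate.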

The use of Markov Decision Processes (MDPs) and their extensions in autonomous driving has greatly improved decision-making and strategic planning, particularly in environments that are partially observable or involve multiple interacting agents. In real-world driving, vehicles often face situations in which not all variables are fully visible or predictable, such as obstacles occluded from sensors or dynamic traffic patterns. MDPs provide a structured framework for these uncertainties by letting autonomous systems evaluate candidate actions against probabilistic models of their outcomes, and POMDPs extend this to hidden state by planning over beliefs. In multi-agent environments that require interaction with other vehicles, pedestrians, and traffic systems, extensions such as Multi-agent MDPs (MMDPs) support coordinated strategies for safe and efficient navigation. These models enable autonomous vehicles to anticipate and respond to the actions of other agents, leading to more reliable and intelligent driving behavior.
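For small, fully observable problems, the MDP framework described above is commonly solved by value iteration, which repeatedly applies the Bellman optimality backup V(s) = max over a of the sum over s' of T(s' | s, a) [R(s, a, s') + gamma V(s')]. The sketch below runs it on a deliberately tiny, hypothetical road-segment MDP (five cells, an "advance" action that succeeds with probability 0.8); realistic driving MDPs are far larger and are typically solved approximately or online.

```python
from typing import Callable, Dict, List, Tuple

State = int
Action = str


def value_iteration(
    states: List[State],
    actions: Callable[[State], List[Action]],
    transition: Callable[[State, Action], List[Tuple[State, float]]],  # [(next_state, prob), ...]
    reward: Callable[[State, Action, State], float],
    gamma: float = 0.95,
    tol: float = 1e-10,
) -> Tuple[Dict[State, float], Dict[State, Action]]:
    """In-place (Gauss-Seidel) value iteration followed by greedy policy extraction."""
    V = {s: 0.0 for s in states}

    def q(s: State, a: Action) -> float:
        return sum(p * (reward(s, a, s2) + gamma * V[s2]) for s2, p in transition(s, a))

    while True:
        delta = 0.0
        for s in states:
            best = max(q(s, a) for a in actions(s))
            delta = max(delta, abs(best - V[s]))
            V[s] = best
        if delta < tol:
            break
    policy = {s: max(actions(s), key=lambda a: q(s, a)) for s in states}
    return V, policy


# Hypothetical toy MDP: cells 0..4 along a road; cell 4 is an absorbing goal.
GOAL = 4


def acts(s: State) -> List[Action]:
    return ["stay"] if s == GOAL else ["stay", "advance"]


def trans(s: State, a: Action) -> List[Tuple[State, float]]:
    if s == GOAL or a == "stay":
        return [(s, 1.0)]
    return [(s + 1, 0.8), (s, 0.2)]  # advancing fails 20% of the time


def rew(s: State, a: Action, s2: State) -> float:
    return 0.0 if s == GOAL else -1.0  # -1 per step until the goal is reached


V, pi = value_iteration(list(range(5)), acts, trans, rew)
print(pi)  # {0: 'advance', 1: 'advance', 2: 'advance', 3: 'advance', 4: 'stay'}
```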

2.1.3 Game-theoretic models

Game theory plays a critical role in decision-making by analyzing the strategies and potential actions of different participants, enabling autonomous driving systems to optimize their behavior in interactive and competitive environments. Fisac et al. (2019) propose a hierarchical dynamic game-theoretic planning algorithm that handles the complex interactions between autonomous vehicles and human drivers by decomposing the dynamic game into a long-term strategic game and a short-term tactical game. Sankar and Han (2020) employ adaptive robust game-theoretic decision strategies within a hierarchical game-theoretic framework to manage vehicle interactions on highways. This strategy allows autonomous vehicles to adjust their behavior to other drivers' actions, reducing collision rates and increasing lane-change success. Li et al. (2020), Cheng et al. (2019), and Li et al. (2018) use non-cooperative game theory to address decision-making at unsignalized intersections. In these methods, each vehicle is treated as an independent decision-maker that seeks to minimize its travel time or enhance its safety without necessarily cooperating with other vehicles. Martin et al. (2023), Tian et al. (2022), and Fang et al. (2024) explore cooperative game theory to improve the efficiency and safety of interactions among autonomous vehicles. In cooperative game theory, participants form coalitions and share information or resources to achieve common goals, such as reducing overall travel time or increasing system-wide safety. The cooperative driving framework allows vehicles to share their position and speed and to predict the intentions and behaviors of others, leading to more coordinated and safer decisions in complex road environments. For autonomous vehicle control at roundabouts, Tian et al. (2018) demonstrate the effectiveness of adaptive game-theoretic decision algorithms that estimate opponent driver types online and adjust strategies accordingly, thereby managing multi-vehicle interactions in complex traffic environments.
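The non-cooperative intersection setting above can be illustrated with a two-vehicle, two-action game. The sketch below enumerates pure-strategy Nash equilibria, that is, action pairs from which neither vehicle gains by deviating alone. The payoff values are hypothetical and chosen only to encode "both going is dangerous, both yielding wastes time"; the cited works use richer, dynamic formulations.

```python
from itertools import product
from typing import Dict, List, Sequence, Tuple

Payoffs = Dict[Tuple[str, str], Tuple[float, float]]  # (action_A, action_B) -> (utility_A, utility_B)


def pure_nash_equilibria(
    actions_a: Sequence[str], actions_b: Sequence[str], payoff: Payoffs
) -> List[Tuple[str, str]]:
    """Action pairs where neither vehicle can gain by unilaterally changing its action."""
    equilibria = []
    for a, b in product(actions_a, actions_b):
        u_a, u_b = payoff[(a, b)]
        a_is_best = all(payoff[(alt, b)][0] <= u_a for alt in actions_a)
        b_is_best = all(payoff[(a, alt)][1] <= u_b for alt in actions_b)
        if a_is_best and b_is_best:
            equilibria.append((a, b))
    return equilibria


# Hypothetical payoffs for two vehicles at an unsignalized intersection.
ACTIONS = ["go", "yield"]
payoff: Payoffs = {
    ("go", "go"): (-10.0, -10.0),    # conflict: high collision risk
    ("go", "yield"): (3.0, 1.0),
    ("yield", "go"): (1.0, 3.0),
    ("yield", "yield"): (0.0, 0.0),  # both wait: no progress
}
print(pure_nash_equilibria(ACTIONS, ACTIONS, payoff))  # [('go', 'yield'), ('yield', 'go')]
```

The two equilibria show why coordination matters: the game alone does not say which vehicle should go first, which is one motivation for the information-sharing and cooperative frameworks discussed above.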

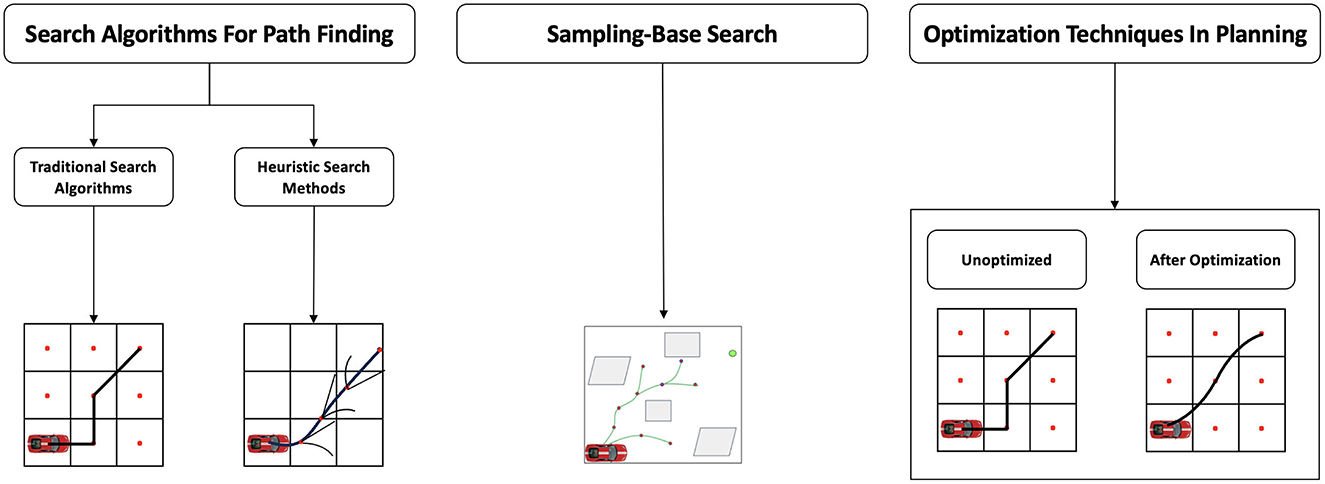

2.2 Planning algorithms

Path planning methods typically operate in two stages. The first stage includes search-based methods, such as A* and Dijkstra, which systematically explore a discretized search space to find the optimal solution for well-defined and relatively simple problems. It also includes sampling-based methods, such as Rapidly-Exploring Random Trees (RRT) and Probabilistic Roadmaps (PRM), which generate candidate paths through random sampling and are suited to high-dimensional and complex planning spaces. The second stage primarily employs optimization techniques, designing objective functions and constraints that model the path optimization goals. The result is a planned path that meets requirements for smoothness, safety, and efficiency. Figure 3 depicts the architectures of the different planning algorithms.
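The second, optimization-based stage can be illustrated with a simple quadratic smoothing objective: keep each point y_i close to its raw waypoint x_i while penalizing the squared second difference y_{i-1} - 2 y_i + y_{i+1}, a discrete proxy for curvature. This objective, its weights, and the plain gradient-descent solver are assumptions for illustration; practical planners add constraints such as obstacle clearance, curvature bounds, and vehicle dynamics.

```python
import numpy as np


def smooth_path(waypoints, w_data: float = 1.0, w_smooth: float = 5.0,
                step: float = 0.005, iters: int = 5000) -> np.ndarray:
    """Gradient descent on J(y) = w_data*sum||y_i - x_i||^2 + w_smooth*sum||y_{i-1} - 2y_i + y_{i+1}||^2.

    Endpoints are held fixed. `step` must be small enough for stability
    (below 2 / (2*w_data + 32*w_smooth) is sufficient for this objective).
    """
    x = np.asarray(waypoints, dtype=float)  # raw waypoints, shape (n, 2)
    y = x.copy()
    for _ in range(iters):
        d = np.zeros_like(y)
        d[1:-1] = y[:-2] - 2.0 * y[1:-1] + y[2:]  # second differences at interior points
        grad = 2.0 * w_data * (y - x)
        # Each d_j touches y_{j-1} (+1), y_j (-2) and y_{j+1} (+1).
        g_s = np.zeros_like(y)
        g_s[:-2] += d[1:-1]
        g_s[1:-1] += -2.0 * d[1:-1]
        g_s[2:] += d[1:-1]
        grad += 2.0 * w_smooth * g_s
        grad[0] = 0.0   # endpoints stay at the start ...
        grad[-1] = 0.0  # ... and at the goal
        y -= step * grad
    return y


raw = [(0, 0), (1, 1), (2, 0), (3, 1), (4, 0), (5, 0)]  # zig-zag output of a coarse planner
smoothed = smooth_path(raw)
print(np.round(smoothed, 2))
```

Because this particular objective is quadratic, it could also be minimized exactly with a single sparse linear solve; gradient descent is shown only because it extends to non-quadratic costs.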

Figure 3. The knowledge-driven planning framework is divided into three parts: search-based, sampling-based, and optimization-based methods. As the figure shows, the effect of hybrid search is most pronounced among the search-based methods, and the optimization-based method produces smoother trajectories.

2.2.1 Search algorithms for path finding

Traditional search algorithms such as A* and Dijkstra systematically explore the path space to find the optimal route from a start point to an end point. As path-planning environments have become increasingly complex, diverse heuristic and meta-heuristic search methods have emerged to improve computational efficiency and path quality. Ferguson and Stentz (2006) explore the Field D* algorithm, which uses linear interpolation to compute path cost estimates and thereby generates paths with continuous headings. Daniel et al. (2010) find shorter paths on grids without restricting path directions to grid edges. Wang J. et al. (2019) combine A* with neural networks to incorporate rich contextual information and learn user movement patterns. Meanwhile, Melab et al. (2013) demonstrate the efficiency and practicality of ParadisEO-MO-GPU, a framework for GPU-based parallel local search meta-heuristics that implements parallel iterative search models on graphics processing units. Stochastic search algorithms and their variants are also applied to autonomous vehicle path planning to handle dynamic obstacles and complex scenarios. Kuffner and LaValle (2000) solve single-query path planning problems by growing trees from both the start and end points. Yu et al. (2024a) improve planning efficiency in dynamic environments by combining dual-tree search with efficient collision detection. Huang and Lee (2024) achieve asymptotically optimal path planning in narrow corridors through adaptive information sampling and tree growth strategies.
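For reference, a minimal A* on a 4-connected occupancy grid is sketched below; the grid encoding ('#' for blocked cells) and unit step costs are assumptions. Setting the heuristic to zero turns the same loop into Dijkstra's algorithm, which is why the two are often presented together: with an admissible heuristic such as Manhattan distance, A* returns a path of the same optimal cost while typically expanding fewer cells.

```python
import heapq
from typing import Dict, List, Optional, Tuple

Cell = Tuple[int, int]


def astar(grid: List[str], start: Cell, goal: Cell, use_heuristic: bool = True) -> Optional[List[Cell]]:
    """Shortest 4-connected path on a grid ('#' = blocked); Dijkstra when use_heuristic=False."""
    rows, cols = len(grid), len(grid[0])

    def h(c: Cell) -> int:
        # Manhattan distance is admissible for unit-cost 4-connected moves.
        return abs(c[0] - goal[0]) + abs(c[1] - goal[1]) if use_heuristic else 0

    frontier = [(h(start), 0, start)]  # entries: (f = g + h, g, cell)
    g: Dict[Cell, int] = {start: 0}
    parent: Dict[Cell, Optional[Cell]] = {start: None}
    while frontier:
        _, cost, cell = heapq.heappop(frontier)
        if cell == goal:
            path: List[Cell] = []
            node: Optional[Cell] = cell
            while node is not None:  # walk back through parents
                path.append(node)
                node = parent[node]
            return path[::-1]
        if cost > g[cell]:
            continue  # stale entry superseded by a cheaper one
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] != "#":
                new_cost = cost + 1
                if new_cost < g.get(nxt, float("inf")):
                    g[nxt] = new_cost
                    parent[nxt] = cell
                    heapq.heappush(frontier, (new_cost + h(nxt), new_cost, nxt))
    return None


grid = ["....",
        ".##.",
        "...."]
print(len(astar(grid, (0, 0), (2, 3))) - 1)                        # 5 moves (A*)
print(len(astar(grid, (0, 0), (2, 3), use_heuristic=False)) - 1)   # 5 moves (Dijkstra)
```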

2.2.2 Sampling algorithms for path finding

Sampling-based methods address path planning problems in complex and dynamic environments by randomly sampling the planning space to generate candidate paths. Rapidly-exploring Random Trees (RRT) and their variants are representative of these methods. Specifically, Wang Z. et al. (2024) demonstrate that introducing guided paths and dynamically adjusting weights effectively reduces planning time and path curvature. Similarly, Dong et al. (2020) propose a knowledge-biased sampling-based path planning method for Automatic Parking (AP). This approach improves the algorithm's completeness and feasibility in three ways: it introduces reverse RRT tree growth, uses Reeds-Shepp curves to connect tree branches directly, and employs RRT seeds biased by standardized parking-space and vehicle knowledge. Chen et al. (2022) propose a strategy combining the RRT and Dijkstra algorithms to adapt to semi-structured roads. The method first narrows the planning area using an RRT-based guideline planner, then translates the path planning problem into a discrete multi-source cost optimization problem. The final path is obtained by applying an optimizer to a discrete cost evaluation function that accounts for obstacles, lanes, vehicle kinematics, and collision avoidance performance. Huang H. et al. (2024) apply the principle of least action to generate optimal trajectories for autonomous vehicles, offering a way to simulate driver behavior for safer and more efficient trajectory planning. Beyond RRT algorithms, the Probabilistic Roadmap (PRM) algorithm and its improved versions have shown advantages in planning through narrow passages. Huang Y. et al. (2024) enhance the PRM method by combining uniform sampling with Gaussian sampling, increasing the success rate and efficiency of path planning in narrow passages. Zhang Z. et al. (2023) transform a grid map into a formal context of concepts that maps the relative positional relationships between rectangular areas. They then convert these relationships into partial order relationships within a rectangular region graph based on concept lattices.
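The sketch below shows the basic RRT loop that the variants above extend. It assumes a point robot in a square 2D workspace with circular obstacles, a fixed steering step, and a small goal bias. Parameter names and values are illustrative. None of the cited improvements (guided paths, reverse growth, Reeds-Shepp connections, Gaussian sampling) are included, and the nearest-neighbor search is a plain linear scan.

```python
import math
import random


def segment_hits_circle(p, q, center, radius):
    """True if the segment p-q comes within `radius` of `center`."""
    (px, py), (qx, qy), (cx, cy) = p, q, center
    dx, dy = qx - px, qy - py
    seg_len_sq = dx * dx + dy * dy
    # Parameter of the point on the segment closest to the center, clamped to [0, 1]
    t = 0.0 if seg_len_sq == 0.0 else max(0.0, min(1.0, ((cx - px) * dx + (cy - py) * dy) / seg_len_sq))
    return math.hypot(cx - (px + t * dx), cy - (py + t * dy)) <= radius


def rrt(start, goal, obstacles, bounds=(0.0, 10.0), step=0.5,
        goal_tol=0.5, goal_bias=0.1, max_iters=5000, seed=0):
    """Basic RRT for a point robot; obstacles are (center, radius) circles.
    Returns waypoints from start to a node within goal_tol of goal, or None."""
    rng = random.Random(seed)
    lo, hi = bounds
    nodes = [start]
    parent = [None]  # parent[i] is the index of node i's parent in the tree
    for _ in range(max_iters):
        # 1. Sample: the goal itself with probability goal_bias, else a uniform point
        if rng.random() < goal_bias:
            sample = goal
        else:
            sample = (rng.uniform(lo, hi), rng.uniform(lo, hi))
        # 2. Nearest tree node (linear scan; a k-d tree is the usual choice at scale)
        i_near = min(range(len(nodes)), key=lambda i: math.dist(nodes[i], sample))
        near = nodes[i_near]
        dist = math.dist(near, sample)
        if dist == 0.0:
            continue
        # 3. Steer at most `step` toward the sample
        s = min(1.0, step / dist)
        new = (near[0] + s * (sample[0] - near[0]), near[1] + s * (sample[1] - near[1]))
        # 4. Keep the edge only if it is collision-free
        if any(segment_hits_circle(near, new, c, r) for c, r in obstacles):
            continue
        nodes.append(new)
        parent.append(i_near)
        # 5. Stop once the goal region is reached and backtrack to the root
        if math.dist(new, goal) <= goal_tol:
            path, i = [], len(nodes) - 1
            while i is not None:
                path.append(nodes[i])
                i = parent[i]
            return path[::-1]
    return None


obstacles = [((5.0, 5.0), 1.5)]  # one circular obstacle blocking the straight line
path = rrt((1.0, 1.0), (9.0, 9.0), obstacles)
print(len(path), path[-1])
```

The returned path is feasible but typically jagged and far from optimal. This is why sampling-based planners are usually followed by an optimization or smoothing stage.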

2.2.3 Optimization techniques in planning

Optimization techniques are broadly applied in planning and are critical to it: selecting and applying suitable optimization methods can significantly improve the performance and efficiency of planning systems. Xu et al. (2012) introduce a real-time motion planner that achieves efficient path planning through trajectory optimization. Similarly, Zhang et al. (2020) decompose the path planning process into two stages: generating smooth driving guidance lines and then optimizing the path within the Frenet frame. Werling et al. (2010) combine long-term goals (such as speed keeping, merging, following, and stopping) with reactive collision avoidance. The method is validated in typical highway scenarios, where it generates trajectories that adapt to the traffic flow. Zhang Y. et al. (2023) and Gulati et al. (2013) employ nonlinear constrained optimization to compute trajectories that satisfy kinematic constraints. These methods account for factors such as continuous acceleration, obstacle avoidance, and boundary conditions to achieve motion that humans find comfortable.
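Frenet-frame trajectory generation, in the spirit of Werling et al. (2010), commonly represents the lateral offset d(t) as a quintic polynomial. Given position, velocity, and acceleration at both ends, the quintic is the trajectory that minimizes integrated squared jerk. The sketch below solves for such a polynomial for a hypothetical 3.5 m lane change over 4 s. It deliberately omits the longitudinal profile, the cost-based selection among many candidate end states and durations, and collision checking.

```python
import numpy as np


def quintic_coeffs(d0, v0, a0, dT, vT, aT, T):
    """Coefficients c0..c5 of d(t) = c0 + c1 t + ... + c5 t^5 that match
    position, velocity and acceleration at t = 0 and t = T."""
    A = np.array([
        [1, 0, 0, 0, 0, 0],
        [0, 1, 0, 0, 0, 0],
        [0, 0, 2, 0, 0, 0],
        [1, T, T**2, T**3, T**4, T**5],
        [0, 1, 2 * T, 3 * T**2, 4 * T**3, 5 * T**4],
        [0, 0, 2, 6 * T, 12 * T**2, 20 * T**3],
    ], dtype=float)
    b = np.array([d0, v0, a0, dT, vT, aT], dtype=float)
    return np.linalg.solve(A, b)


def eval_poly(c, t, deriv=0):
    """Value (deriv=0), velocity (1) or acceleration (2) of the polynomial at time t."""
    p = c[::-1]  # np.polyval / np.polyder expect the highest-order coefficient first
    if deriv:
        p = np.polyder(p, deriv)
    return np.polyval(p, t)


# Hypothetical lane change: lateral offset 0 m -> 3.5 m in 4 s, laterally at rest at both ends
c = quintic_coeffs(0.0, 0.0, 0.0, 3.5, 0.0, 0.0, T=4.0)
for t in np.linspace(0.0, 4.0, 5):
    print(f"t={t:.1f}s  d={eval_poly(c, t):.3f} m  d'={eval_poly(c, t, 1):.3f} m/s")
```

In a full planner, many such candidates with different end offsets and durations would be generated and scored, and the cheapest collision-free candidate would be kept.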

In practical applications, the efficiency and feasibility of implementing optimization algorithms are critical. Stellato et al. (2020) propose the OSQP solver, which efficiently solves convex quadratic programming problems using the Alternating Direction Method of Multipliers (ADMM), making it suitable for real-time applications. High-performance nonlinear optimization has also been realized through a domain-specific language (DSL) that uses GPU acceleration to improve solving efficiency (Yu et al., 2024b). Furthermore, Huang X. et al. (2023) apply the Levenberg-Marquardt optimization algorithm for nonlinear systems to the control of continuous stirred-tank reactors, achieving faster convergence and stronger disturbance rejection.
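The ADMM iteration at the heart of OSQP can be illustrated with a bare-bones dense implementation. It handles problems of the form min 0.5·x'Px + q'x subject to l ≤ Ax ≤ u. This is a teaching sketch, not OSQP. It uses a fixed penalty rho and a dense linear solve at every iteration. It omits over-relaxation, adaptive rho, solution polishing, and infeasibility detection, all of which the real solver provides. The function name and default parameters are assumptions.

```python
import numpy as np


def admm_qp(P, q, A, l, u, rho=1.0, sigma=1e-6, iters=500):
    """Basic ADMM for  min 0.5 x'Px + q'x  s.t.  l <= Ax <= u  (P positive semidefinite).
    Splits the problem as z = Ax with z constrained to the box [l, u]."""
    n, m = P.shape[0], A.shape[0]
    x, z, y = np.zeros(n), np.zeros(m), np.zeros(m)
    # With rho and sigma fixed, the x-update matrix never changes
    K = P + sigma * np.eye(n) + rho * A.T @ A
    for _ in range(iters):
        # x-update: minimize the augmented Lagrangian (plus a small proximal term) in x
        x = np.linalg.solve(K, sigma * x - q + A.T @ (rho * z - y))
        # z-update: project onto the constraint box
        z = np.clip(A @ x + y / rho, l, u)
        # dual update for the consensus constraint Ax = z
        y = y + rho * (A @ x - z)
    return x


# Example: min (x1 - 1)^2 + (x2 - 2)^2  subject to  0 <= x1, x2 <= 1.5
P = 2.0 * np.eye(2)
q = np.array([-2.0, -4.0])
A = np.eye(2)
x = admm_qp(P, q, A, l=np.zeros(2), u=np.full(2, 1.5))
print(np.round(x, 4))
```

The attraction for real-time planning is that each iteration needs only a linear solve with a fixed matrix and a cheap projection. A real implementation factorizes that matrix once and reuses the factorization.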

In summary, optimization techniques play a pivotal role in the efficiency and effectiveness of planning systems, particularly in autonomous driving and other real-time applications. Carefully chosen methods, such as trajectory optimization and nonlinear constrained optimization, improve both path quality and the system's response to dynamic environmental factors. Solvers such as OSQP for convex quadratic programming, together with domain-specific languages that exploit GPU acceleration, make such high-performance planning practical. These approaches can handle complex scenarios while respecting kinematic constraints and ensuring comfortable, safe motion, which underscores their importance in both theory and practice.

3 Data-driven decision and planning methods

3.1 Imitation learning algorithms

Imitation Learning (IL) has become a pivotal methodology in the development of Autonomous Vehicles (AVs). It leverages expert demonstrations to sidestep the complexities and hazards inherent in driving. Imitation Learning for autonomous vehicles is categorized into three main approaches: Behavioral Cloning (BC), Inverse Reinforcement Learning (IRL), and Generative Adversarial Imitation Learning (GAIL).

Behavioral Cloning (BC) is a straightforward approach that directly mimics human driving behavior. The approach offers several advantages, including simplicity, ease of training, and effective performance when there is a substantial amount of high-quality human driving data available. However, BC encounters difficulties when confronted with unfamiliar road scenarios, is susceptible to noise in the training data, and does not consider the long-term implications of decisions. BC is typically employed in relatively simple and structured environments, such as highways or known routes. Inverse reinforcement learning (IRL) offers the advantage of understanding the underlying intent of human drivers by inferring a reward function, thereby capturing complex driving strategies. This makes it an advantageous approach in diverse and complex scenarios. It demonstrates effective adaptation to novel environments; however, it is associated with considerable computational complexity, necessitating substantial resources and well-designed features and models. IRL is frequently utilized in scenarios that necessitate the comprehension of intricate decision-making processes, such as urban driving. Generative Adversarial Imitation Learning (GAIL) integrates the strengths of generative adversarial networks to enhance model robustness and generalization through adversarial training. GAIL can imitate complex behaviors without explicit reward functions, thereby providing better adaptability to unknown situations. However, its training process can be unstable, involves complex tuning, and demands high-quality and diverse training data. GAIL is suitable for uncertain driving environments that require high robustness and adaptability, such as dynamic urban traffic. An overview of these approaches will be presented in this section.
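Because BC reduces to supervised regression, its simplest form fits in a few lines. The sketch below assumes a linear policy over two hypothetical state features, lateral offset and heading error. The "expert" steering is synthetic, generated by a made-up linear rule plus small noise. Least squares then minimizes the mean squared action error. Real systems use deep networks and logged human driving, and this toy setup cannot exhibit the distribution-shift problems noted above.

```python
import numpy as np


def fit_bc_linear(states, expert_actions):
    """Behavior cloning with a linear policy a = W^T [s, 1], fitted by least squares,
    i.e. by minimizing the mean squared error between predicted and expert actions."""
    X = np.hstack([states, np.ones((len(states), 1))])  # append a bias feature
    W, *_ = np.linalg.lstsq(X, expert_actions, rcond=None)
    return W


def bc_policy(W, states):
    """Apply the cloned linear policy to a batch of states."""
    X = np.hstack([states, np.ones((len(states), 1))])
    return X @ W


def mse(pred, target):
    """Mean over samples of the squared L2 action error."""
    return float(np.mean(np.sum((pred - target) ** 2, axis=1)))


# Synthetic demonstrations: hypothetical features [lateral offset, heading error]
# and a made-up "expert" steering rule with small noise.
rng = np.random.default_rng(0)
states = rng.normal(size=(500, 2))
true_w = np.array([[-0.5], [-1.2]])
expert_actions = states @ true_w + 0.01 * rng.normal(size=(500, 1))

W = fit_bc_linear(states, expert_actions)
print(np.round(W.ravel(), 3), mse(bc_policy(W, states), expert_actions))
```

The fitted weights recover the synthetic rule closely only because the training states cover the whole region the policy is evaluated on. BC offers no such guarantee once the policy drifts into states the expert never visited.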

3.1.1 Imitation learning problem formulation

The imitation learning approach exploits the vast repository of human driving data to train policies that emulate expert behavior. The fundamental IL problem for AVs is to derive a policy $\pi^*$ that closely matches the expert's policy $\pi_E$. This is done by minimizing the discrepancy between their state-action distributions across a dataset $D$ of demonstrated trajectories. Each trajectory $\tau_i$ in $D$ consists of sequential state-action pairs $(s_t^i, a_t^i)$, where $a_t^i$ is the action executed by the expert in state $s_t^i$ according to $\pi_E$. The optimization framework can be formalized as:

$$\pi^* = \arg\min_{\pi} L(\pi_E, \pi) \tag{1}$$

where $L$ is a divergence measure that quantifies the dissimilarity between the expert's policy and the learned policy.
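Eq. (1) leaves the divergence L unspecified. For discrete actions, one common choice, assumed here purely for illustration, is the Kullback-Leibler divergence between the expert's and the learner's action distributions, averaged over the states in the dataset. The function name, the two-state example, and the action labels are hypothetical.

```python
import numpy as np


def mean_kl(pi_expert, pi_learned, eps=1e-12):
    """Average over states of KL(pi_E(.|s) || pi(.|s)) for discrete actions.
    Each row holds one state's action probabilities; eps guards against log(0)."""
    p = np.asarray(pi_expert, dtype=float)
    q = np.asarray(pi_learned, dtype=float)
    kl_per_state = np.sum(p * (np.log(p + eps) - np.log(q + eps)), axis=1)
    return float(np.mean(kl_per_state))


# Two states, three hypothetical actions (keep lane, change left, change right)
pi_E = np.array([[0.8, 0.1, 0.1], [0.2, 0.7, 0.1]])
pi_learned = np.array([[0.6, 0.2, 0.2], [0.2, 0.7, 0.1]])
print(mean_kl(pi_E, pi_learned))
```

The divergence is zero only when the two policies agree in every state, and it is not symmetric. Different choices of L therefore penalize different kinds of mismatch.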

BC simplifies the IL problem by transforming it into a supervised learning problem. It learns a policy $\pi_\theta$ that minimizes the loss function $L$ over the dataset $D$ of state-action tuples, thereby replicating the expert's behavior:

$$\pi_{\theta}^* = \arg\min_{\theta} \mathbb{E}_{(s, a_E) \sim P_E(s \mid \pi_E)} \big[ L\big(a_E - \pi_{\theta}(s)\big) \big] \tag{2}$$

where $P_E(s \mid \pi_E)$ is the state distribution induced by the expert policy, and $L$ is a loss function that measures how closely the learned policy imitates the expert's actions. Commonly, $L$ is the L1 loss (mean absolute error) or the L2 loss (mean squared error). Taking the L2 loss as an example, over $T$ state-action pairs the loss can be written as:

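$$\text{Loss} = \frac{1}{T} \sum_{j=1}^{T} \left\| a_j - a_{E,j} \right\|_2^2 \tag{3}$$

where $a_j = \pi_\theta(s_j)$ is the learned policy's action and $a_{E,j}$ is the expert's action in state $s_j$. The problem of IRL in autonomous driving revolves around inferring a reward function $r^*$ from expert demonstrations. Given a set of state-action pairs sampled under the expert policy $\pi_E$, IRL attempts to learn a reward function $r^*$ under which the expert behavior is optimal, so that the policy maximizing the expected reward recovers the expert's behavior: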

$$\pi^* = \arg\max_{\pi} \mathbb{E}_{\pi}\big[r^*(s, a)\big] \tag{4}$$

Generative Adversarial Imitation Learning (GAIL) is a framework that extracts expert driving policies directly from demonstrations. It neither explicitly recovers a reward function nor runs a full reinforcement learning loop inside every IRL iteration. It combines imitation learning with Generative Adversarial Networks (GANs), setting up a contest between a generator and a discriminator. The generator is a policy $\pi_\theta$ parameterized by $\theta$. It emulates expert maneuvers by matching the distribution of state-action pairs observed in the demonstrations. Meanwhile, the discriminator $D_\omega$ outputs values in the interval $(0, 1)$ and serves as a surrogate reward (cost) signal that quantifies how closely the generated behavior resembles the expert behavior. At the core of GAIL is a min-max optimization objective:

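$$\min_{\pi_\theta} \max_{D_\omega} \; \mathbb{E}_{\pi_\theta}\big[\log D_\omega(s, a)\big] + \mathbb{E}_{\pi_E}\big[\log\big(1 - D_\omega(s, a)\big)\big] - \lambda H(\pi_\theta) \tag{5}$$

This objective pits the generator against the discriminator. The discriminator maximizes the two expectation terms, learning to output values near 1 for generated state-action pairs and near 0 for expert pairs. The generator minimizes $\mathbb{E}_{\pi_\theta}[\log D_\omega(s, a)]$, which pushes it to produce state-action pairs that the discriminator cannot distinguish from the expert's. The term $-\lambda H(\pi_\theta)$, weighted by $\lambda \ge 0$, introduces entropy regularization to promote policy exploration. Here, $H(\pi_\theta)$ denotes the entropy of the policy $\pi_\theta$, a measure of randomness in action selection that keeps learning flexible. The gradients guiding the updates of both components are: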

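$$\nabla_\theta J(\theta) = \mathbb{E}_{\pi_\theta}\big[\nabla_\theta \log \pi_\theta(a \mid s)\, Q(s, a)\big] - \lambda \nabla_\theta H(\pi_\theta) \tag{6}$$

$$\nabla_\omega J(\omega) = \mathbb{E}_{\pi_\theta}\big[\nabla_\omega \log D_\omega(s, a)\big] + \mathbb{E}_{\pi_E}\big[\nabla_\omega \log\big(1 - D_\omega(s, a)\big)\big] \tag{7}$$

where $Q(s, a)$ is the expected cumulative surrogate cost $\log D_\omega$ obtained by taking action $a$ in state $s$ and following $\pi_\theta$ thereafter. The generator descends along Eq. (6), while the discriminator ascends along Eq. (7).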
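The interplay in Eq. (5) and the generator gradient of Eq. (6) can be seen in a deliberately tiny setting. There is a single decision step with three discrete actions (hypothetically keep lane, change left, change right), a tabular discriminator D(a) = sigmoid(omega[a]), and a softmax policy. Expectations are computed exactly over the three actions rather than estimated from rollouts. As a result, the score-function gradient reduces to a closed form, and Q(s, a) is simply log D(a). The expert distribution, learning rates, iteration counts, and function names are assumptions chosen for illustration. This is not the configuration of any cited work.

```python
import numpy as np


def softmax(z):
    e = np.exp(z - np.max(z))
    return e / e.sum()


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def tabular_gail(pi_expert, lam=0.01, outer=2000, inner=50, lr_pi=0.5, lr_d=2.0):
    """Toy GAIL for one decision step with discrete actions.
    Expectations are exact sums over actions instead of rollout estimates."""
    pi_expert = np.asarray(pi_expert, dtype=float)
    theta = np.zeros_like(pi_expert)  # generator (policy) logits
    omega = np.zeros_like(pi_expert)  # discriminator logits, D(a) = sigmoid(omega[a])
    for _ in range(outer):
        pi = softmax(theta)
        # Discriminator: gradient ascent on E_pi[log D] + E_E[log(1 - D)], the inner max of Eq. (5)
        for _ in range(inner):
            d = sigmoid(omega)
            omega += lr_d * (pi * (1.0 - d) - pi_expert * d)
        # Generator: descent on E_pi[Q] - lam * H(pi) with cost Q(a) = log D(a), cf. Eq. (6).
        # For softmax logits, grad log pi(a) = onehot(a) - pi, giving the closed form below.
        cost = np.log(sigmoid(omega))
        g = cost + lam * np.log(pi)
        theta -= lr_pi * pi * (g - pi @ g)
    return softmax(theta), sigmoid(omega)


pi_E = np.array([0.7, 0.2, 0.1])  # hypothetical expert action frequencies
pi, D = tabular_gail(pi_E)
print(np.round(pi, 3), np.round(D, 3))
```

Near equilibrium the discriminator outputs roughly 0.5 for every action, meaning it can no longer tell the two policies apart. The learned policy approximately matches the expert, with a small shift toward uniform caused by the entropy term. With function approximators and sampled rollouts, this alternating scheme becomes much less stable, which is the training difficulty noted for GAIL above.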
