Molecules such as proteins or nucleic acids make up the building blocks of life. Living organisms contain a plethora of molecules that often comprise thousands of atoms. Biomolecules change their conformation (i.e., shape) to fulfill important biological functions such as enzymatic reactions or cellular communication. Understanding the conformational heterogeneity of biomolecules is crucial for deciphering their functional mechanisms and designing targeted interventions. Cryo-Electron Microscopy (cryo-EM) has emerged as a powerful technique for visualizing molecular structures at high resolution. Recent advancements in computational cryo-EM have demonstrated the potential of latent variable models to capture the diverse conformations adopted by biomolecules (reviewed in Donnat et al., 2022). However, interpreting these learned latent spaces and extracting biologically meaningful information from them remains a significant challenge.

In this paper, we propose an approach to unravel the complexities of conformational latent spaces in cryo-EM by framing their analysis as an Independent Component Analysis (ICA) problem. In its original linear formulation, the high-level goal of ICA is to discover linear projections of the data that are as statistically independent as possible (Hyvärinen and Oja, 2000). A common observation in ICA applications is that these linear projections recover underlying factors of variation in the data that give insight into the underlying processes. A few prior works have tested the application of linear ICA to molecular imaging (Borek et al., 2018; Gao et al., 2020), finding more meaningful separation of molecular conformation changes. However, the transformation between meaningful factors and the data is inherently nonlinear in cryo-EM. Therefore, we need theory and models that work for the nonlinear models used in modern cryo-EM (e.g., Zhong et al., 2021a). Building on recent theoretical work in identifiable nonlinear ICA (Hyvärinen et al., 2024) and on disentanglement models and their benchmarks in machine learning (Locatello et al., 2019), we suggest a path to bridging the gap between theoretical advancements and practical applications in cryo-EM research. We argue that nonlinear ICA methods have the potential to provide a powerful framework for disentangling the latent representations of biomolecular conformations from cryo-EM datasets, overcoming the limitations of traditional volume visualization approaches and ultimately allowing us to delve deeper into the structural dynamics of biomolecules. Moreover, we argue that the establishment of benchmarks and metrics specific to cryo-EM disentanglement models is of paramount importance. Adapting and extending existing benchmarks from the machine learning field should allow us to objectively evaluate the performance of different disentanglement approaches and track progress in the development of interpretable cryo-EM methods. Ultimately, this interdisciplinary approach will enhance our understanding of complex biological processes and open up new avenues for therapeutic interventions and drug discovery.

The paper is structured as follows. We first provide a general background on the cryo-EM computational problem (Section 2) and how it can be framed as an ICA problem (Section 2.1). We then discuss the two fundamental challenges associated with cryo-EM: firstly, separating the conformation and the pose representations (Section 2.2) and, secondly, finding the right (disentangled) representation of conformations (Section 2.3). We then go into more detail on both challenges by providing quantitative metrics to measure progress and modeling suggestions to improve current frameworks. To disentangle poses and shapes, we propose intervention based metrics (Section 3.1) and training schemes (Section 3.2) that can be added to existing models. For the larger problem of disentangling conformation representations (Section 4.1), we discuss existing disentanglement benchmarks and metrics (Locatello et al., 2019). We then discuss three potential approaches for solving this problem (Section 4.2), based on temporal information (Section 4.2.1), temperature control (Section 4.2.2) and atomic models (Section 4.2.3). Finally, we discuss the path forward and the broader implications for this framework to take computational approaches from neural network based curve fitting to actual understanding of the mechanisms in nature.

2 Interpretable heterogeneous reconstruction–a disentanglement problem

Heterogeneous cryo-EM reconstruction methods aim to model the different conformations that a molecule may assume (Donnat et al., 2022). For instance, we can think of a molecule with a fixed central structure and two adjustable “arms” (see Figure 1). Clearly, any conformation that this molecule may assume can be described by providing the position of both arms. Thus, these independently moving parts may be thought of as the fundamental degrees of freedom of this molecule’s conformations.

Figure 1. Overview. What does it mean to have a disentangled representation of molecular conformations? (A) The example (left) shows a simple molecule with two degrees of freedom 1. and 2. for changing its conformation. An entangled model (right, top) represents mixtures of both movements on each of its latent dimensions z1 ∼ 1. + 2. and z2 ∼ 1. − 2. A disentangled model (right, bottom) represents pure movements on each of its latent dimensions z1 ∼ 1. and z2 ∼ 2.; actually, z2 = −2. but the sign flip, incorporated in ∼, does not compromise interpretability. Note that disentangling conformations from cryo-EM measurements requires additional information (e.g., time, temperature or physics), as discussed in section 4. (B) Training a VAE with separate pose ϕ and conformation z latent spaces on cryo-EM particle images, without any intervention. (C) Interpreting the learned latent space of a model. An axis traversal (blue) results in a complex motion of both arms, i.e., fails at disentangling the two degrees of freedom. A simple transformation, moving only the left arm, corresponds to a curved trajectory.

We can parameterize these degrees of freedom by a two-dimensional latent variable z ∈ Z, where Z ≔ ℝ² and each coordinate encodes the degree of movement of one arm. The volume that the molecule occupies in three-dimensional space can be thought of as a function v ∈ V, defined on ℝ³ × Z, that is parameterized by z and indicates for any position in space (ℝ³) whether it is part of the molecular volume or not; this is known as an implicit representation of the volume (Sitzmann et al., 2020; Donnat et al., 2022). That means, for different values of z, vz = v(., z) would describe a different volume. Crucially, this function is not known, and it is a central goal in heterogeneous cryo-EM reconstruction to learn and study it. For instance, one approach would be to train a neural network vθ to approximate the true volume function, vθ ≈ v* (Zhong et al., 2021a).

Furthermore, in cryo-EM we typically see a projection π: V × Φ → ℝ^N to a gray-scale pixel image (represented, to keep notation uncluttered, as a vector with N entries). This projection depends on the pose parameters ϕ ∈ Φ = SO(3), so we will also refer to π as the pose function (Table 1). The pose parameters may also need to be inferred. (Typically, the cryo-EM image formation model would also include camera parameters such as the microscope defocus; we skip those for simplicity.) That means, for different values of ϕ, πϕ = π(., ϕ) would describe a different projection. Importantly, the function π does not have to be learned because we know the physics (i.e., the optics) behind this projection. Thus, the projection in our model must be the same as the ground truth projection, $\pi^*_\phi = \pi_\phi$.

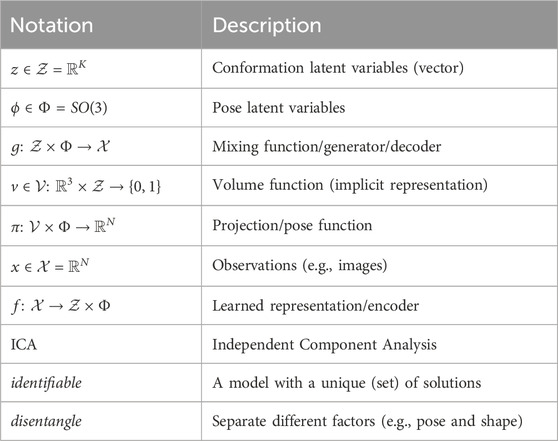

Table 1. Glossary. Whenever a distinction is necessary in a given context, we use a ∗ (e.g., g*) to highlight that we are referring to the ground truth model (g*) or ground truth latent variables (z*, ϕ*). For instance, we have the ground truth generator g* of the data, in contrast to the learned generator g (i.e., decoder) from our model of the data.

Putting this together we can write the combined cryo-EM generative model (i.e., the abstract process that yields the data we observe). That is, the observed data x is modeled as being generated by the ground truth model as x = g*(z*, ϕ*), which is, crucially, a function of the ground truth latent quantities (z*, ϕ*)

$$g^*: \mathcal{Z} \times \Phi \to \mathcal{X}, \qquad g^*(z^*, \phi^*) := \pi(v^*_{z^*}, \phi^*) = (\pi_{\phi^*} \circ v^*)(z^*) \in \mathbb{R}^N. \tag{1}$$

Usually, we would be measuring very noisy signals x = g*(z*, ϕ*) + ϵ, where the noise can be modeled as additive Gaussian ϵ ∼ N(0, σ²) in image space. The essential problem of heterogeneous reconstruction in computational cryo-EM can now be stated as follows.

Given only noisy observations of x = g*(z*, ϕ*) + ϵ, can we recover v*?

This would be the true volume function v* that shows us how the independent degrees of freedom change the molecule’s conformation. Many cryo-EM models actually learn a probabilistic p(x|z, ϕ) representation of the observed data x conditioned on the latent variables. Thus, it becomes necessary to perform inference such as maximum a posteriori estimation of the latents, conditioned on some observed data p(z, ϕ|x). Alternatively, it is common to approximate the posterior distribution itself with an amortized variational method such as a variational autoencoder (VAE) (Kingma and Welling, 2013). In this work we will be agnostic about the inference procedure (maximum a posteriori probability (MAP), or mean of the amortized variational posterior) and just assume that there exists a mapping f:X→Z from data to latent variables.
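To make the generative model of Eq. 1 concrete, the following is a minimal NumPy sketch under strong simplifying assumptions. The "molecule" is a handful of Gaussian blobs (a core plus two arm tips), the pose is built from only two angles rather than a full SO(3) parameterization, and the projection ignores all microscope effects. The names `volume_points`, `rotation`, `project` and `generate` are hypothetical and chosen for illustration only; they do not come from any cryo-EM package.

```python
import numpy as np

def volume_points(z):
    """Toy stand-in for v*(., z): a fixed core plus two arm tips at angles z[0], z[1]."""
    core = np.array([[0.0, 0.0, 0.0], [0.0, 0.4, 0.0], [0.0, -0.4, 0.0]])
    left_arm = np.array([[-np.cos(z[0]), np.sin(z[0]), 0.0]])
    right_arm = np.array([[np.cos(z[1]), np.sin(z[1]), 0.0]])
    return np.vstack([core, left_arm, right_arm])  # (5, 3) blob centres

def rotation(phi):
    """Pose as a rotation matrix from two angles (assumption: a full SO(3)
    pose would need three parameters; two keep the sketch short)."""
    a, b = phi
    rot_z = np.array([[np.cos(a), -np.sin(a), 0.0],
                      [np.sin(a), np.cos(a), 0.0],
                      [0.0, 0.0, 1.0]])
    rot_y = np.array([[np.cos(b), 0.0, np.sin(b)],
                      [0.0, 1.0, 0.0],
                      [-np.sin(b), 0.0, np.cos(b)]])
    return rot_y @ rot_z

def project(points, rot, n_pix=16, width=0.2):
    """pi(v, phi): rotate the volume, integrate along the viewing axis,
    and return an image flattened to N = n_pix**2 entries."""
    # Dropping the third coordinate integrates isotropic Gaussian blobs
    # along the viewing axis (up to a constant factor).
    xy = (points @ rot.T)[:, :2]
    axis = np.linspace(-1.5, 1.5, n_pix)
    gx, gy = np.meshgrid(axis, axis)
    image = np.zeros((n_pix, n_pix))
    for px, py in xy:
        image += np.exp(-((gx - px) ** 2 + (gy - py) ** 2) / (2.0 * width ** 2))
    return image.ravel()

def generate(z, phi, sigma=0.1, rng=None):
    """x = g*(z*, phi*) + eps with additive Gaussian pixel noise."""
    rng = np.random.default_rng(0) if rng is None else rng
    clean = project(volume_points(z), rotation(phi))
    return clean + sigma * rng.normal(size=clean.shape)

# One noisy observation for a given conformation and pose.
x = generate(z=np.array([0.3, 0.8]), phi=np.array([0.5, 1.0]))
```

In this sketch only `volume_points` would be unknown in a real reconstruction problem; `rotation` and `project` play the role of the known pose function π.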

2.1 Heterogeneous reconstruction in Cryo-EM is an ICA problem

Let us compare this to a standard independent component analysis (ICA) setting (Comon, 1994). In ICA we assume that there are K > 1 independent variables collected in the random vector z = (z1, …, zK). As an example to illustrate this, we may think of a public space where K different speakers proclaim their prophecies zi, completely independently of one another, p(zi, zj) = p(zi)p(zj), ∀i ≠ j. However, we do not observe those z directly. Instead, we observe K linear combinations of those variables

$$x = Az + \epsilon,$$

with A ∈ ℝ^{K×K} some unknown full-rank matrix and, again, with additive Gaussian noise ϵ ∼ N(0, σ²). In our example, this may correspond to K microphones placed in the space, each recording some linear combination $A_i^T z$ of the speech signals. This scenario is also called blind source separation, the term “blind” referring to the idea that we know almost nothing about the “sources” zi, apart from some general statistical properties. In linear ICA, the function g: Z → X, g(z) = Az, that maps from sources z to observations x is called the mixing function. This basic case of linear ICA has been well studied in the machine learning and signal processing literature (Hyvärinen and Oja, 2000). Briefly, under the simple assumption that at most one of the sources zi follows a Gaussian distribution, we can find an unmixing function f: X → Z that approximately inverts the mixing function, in practice up to $(f \circ g)(z) \sim_C z$, i.e., up to some simple equivalence class ∼C such as permutations and scalings.¹
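As an illustration of linear ICA, the sketch below mixes two non-Gaussian sources with a fixed matrix and unmixes them with a small symmetric FastICA iteration (tanh nonlinearity) written in plain NumPy. The mixing matrix, source distributions, noise level and the function names `whiten` and `fast_ica` are assumptions made for this example; a tested library implementation would normally be preferred in practice.

```python
import numpy as np

def whiten(x):
    """Centre the data and transform it to identity covariance."""
    x = x - x.mean(axis=0)
    evals, evecs = np.linalg.eigh(np.cov(x, rowvar=False))
    return x @ evecs @ np.diag(evals ** -0.5) @ evecs.T

def fast_ica(x, n_iter=200, seed=0):
    """Symmetric FastICA with a tanh nonlinearity; returns estimated sources."""
    rng = np.random.default_rng(seed)
    xw = whiten(x)
    k = xw.shape[1]
    w = np.linalg.qr(rng.normal(size=(k, k)))[0]  # random orthogonal start
    for _ in range(n_iter):
        y = xw @ w.T
        g = np.tanh(y)
        g_prime = 1.0 - g ** 2
        # Fixed-point update for each row w_i: E[x g(w_i^T x)] - E[g'(w_i^T x)] w_i
        w_new = (g.T @ xw) / len(xw) - np.diag(g_prime.mean(axis=0)) @ w
        # Symmetric decorrelation: project back onto orthogonal matrices
        u, _, vt = np.linalg.svd(w_new)
        w = u @ vt
    return xw @ w.T

rng = np.random.default_rng(0)
n = 5000
# Two independent, non-Gaussian sources (Laplace and uniform)
z = np.column_stack([rng.laplace(size=n), rng.uniform(-1.0, 1.0, size=n)])
A = np.array([[1.0, 0.5], [0.3, 1.0]])            # mixing matrix, unknown to the method
x = z @ A.T + 0.01 * rng.normal(size=(n, 2))     # x = A z + eps, one sample per row

z_hat = fast_ica(x)
# Absolute correlations between true (rows) and recovered (columns) sources
cross = np.abs(np.corrcoef(z, z_hat, rowvar=False)[:2, 2:])
```

If the unmixing works, each row of `cross` should contain one entry close to 1: the sources are recovered only up to permutation, sign and scale, which is the equivalence class ∼C mentioned in the text.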

If g*(z*, ϕ*) in Eq. 1 were linear in z and ϕ, then the cryo-EM reconstruction problem would amount to a simple linear ICA problem with the (extended) sources (z‖ϕ), where ‖ denotes concatenation. Unfortunately, the cryo-EM mixing function g(z, ϕ) in Eq. 1 is nonlinear. This can be easily appreciated, e.g., by noting that scaling a latent z by a factor α does not produce an equally scaled image: g(αz, ϕ) ≠ αg(z, ϕ), since αg(z, ϕ) would be just the same image with changed brightness. In the case of a nonlinear mixing function g, Hyvärinen and Pajunen (1999) showed that it is possible to construct many functions f: X → Z that turn the data into independent variables. However, most of these independent variables have no intelligible relationship with the true sources z. This problem is called the lack of identifiability of the model, which in general mathematical terms means lack of uniqueness of the solution.

For cryo-EM models this would mean that we can learn latent spaces whose individual dimensions have no principled relationship with the true degrees of freedom in molecular conformations. As an example, we may end up with a representation of the simple two dimensional molecule from above where traversing any single dimension in the latent space of our model corresponds to complex combinations of the two arm movements (Figure 1). This would, likely, bias our interpretation of how they are articulated together to carry out their function. Thus, without further restrictions on our model, we would fail to discover the simple and elegant structure where the molecule just changes conformation along two independent degrees of freedom, i.e., left and right arm. In the modern machine learning context, finding a latent space that separates the underlying factors of variation is often called disentanglement (Bengio et al., 2013), but it has to be noted that the meaning of that term is quite vague.
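The lack of identifiability discussed above can be illustrated with a standard construction, sketched here for Gaussian sources for simplicity (analogous constructions exist for other source distributions). Each sample is rotated by an angle that depends on its own radius. Because the standard Gaussian is rotationally symmetric, the result is again a standard Gaussian with independent components, yet its axes bear almost no relationship to the original sources. The factor 6.0 in the rotation angle is an arbitrary choice for this example.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 50_000
z = rng.normal(size=(n, 2))  # independent standard Gaussian sources

# Invertible nonlinear map: rotate each sample by an angle set by its radius.
radius = np.linalg.norm(z, axis=1)
theta = 6.0 * radius
cos_t, sin_t = np.cos(theta), np.sin(theta)
z_mixed = np.column_stack([cos_t * z[:, 0] - sin_t * z[:, 1],
                           sin_t * z[:, 0] + cos_t * z[:, 1]])

# The new variables are again uncorrelated with unit variance ...
cov_mixed = np.cov(z_mixed, rowvar=False)
# ... but they are nearly uncorrelated with every true source.
cross_corr = np.corrcoef(z, z_mixed, rowvar=False)[:2, 2:]
```

Both `z` and `z_mixed` are valid "independent component" representations of the same data, which is why independence alone cannot single out the true degrees of freedom once the mixing is nonlinear.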

Fortunately, recent advances propose ways to solve this problem with nonlinear ICA (Hyvärinen et al., 2024). For example, Khemakhem et al. (2020) add conditioning (“auxiliary”) variables u that change the source distributions p(zi|u). Such a u could represent extra measurements by another modality, or it could be defined by interventions. The model then becomes identifiable if u modulates the distribution of z strongly enough. This is possible because the zi are then conditionally independent for any u, which provides much stronger constraints than the mere (unconditional) independence of the zi in the basic ICA framework. Khemakhem et al. (2020) further propose to estimate this model using variational methods, leading to an algorithm that is a variant of VAEs. An alternative approach is possible by assuming temporal dependencies of the source time series (Hyvarinen and Morioka, 2017; Klindt et al., 2020; Hälvä et al., 2021); spatial dependencies can also be used (Hälvä et al., 2024). In this case, independence of the components over time lags leads, again, to more constraints, and thus to identifiability under some conditions. A very different approach can be developed by constraining the nonlinear function g, parameterizing it with such a small number of parameters that identifiability is obtained (Hyvärinen et al., 2024; Section 5.4); for example, if we know the physics underlying g (i.e., pose transformations and projections) we may also be able to obtain identifiability. Finally, we point out that the independence assumption can be relaxed (Träuble et al., 2021); even causal relationships between the independent components have been modeled, but this requires further constraints and assumptions (Träuble et al., 2021; Morioka and Hyvärinen, 2023; Yao et al., 2023). Any such learning is easier if interventions on the system are possible (Ahuja et al., 2023) or if it is assumed that the system undergoes sparse, discrete state changes as in robotics experiments (Locatello et al., 2020b), but the theory mentioned above is specifically unsupervised and thus does not necessarily require interventions.

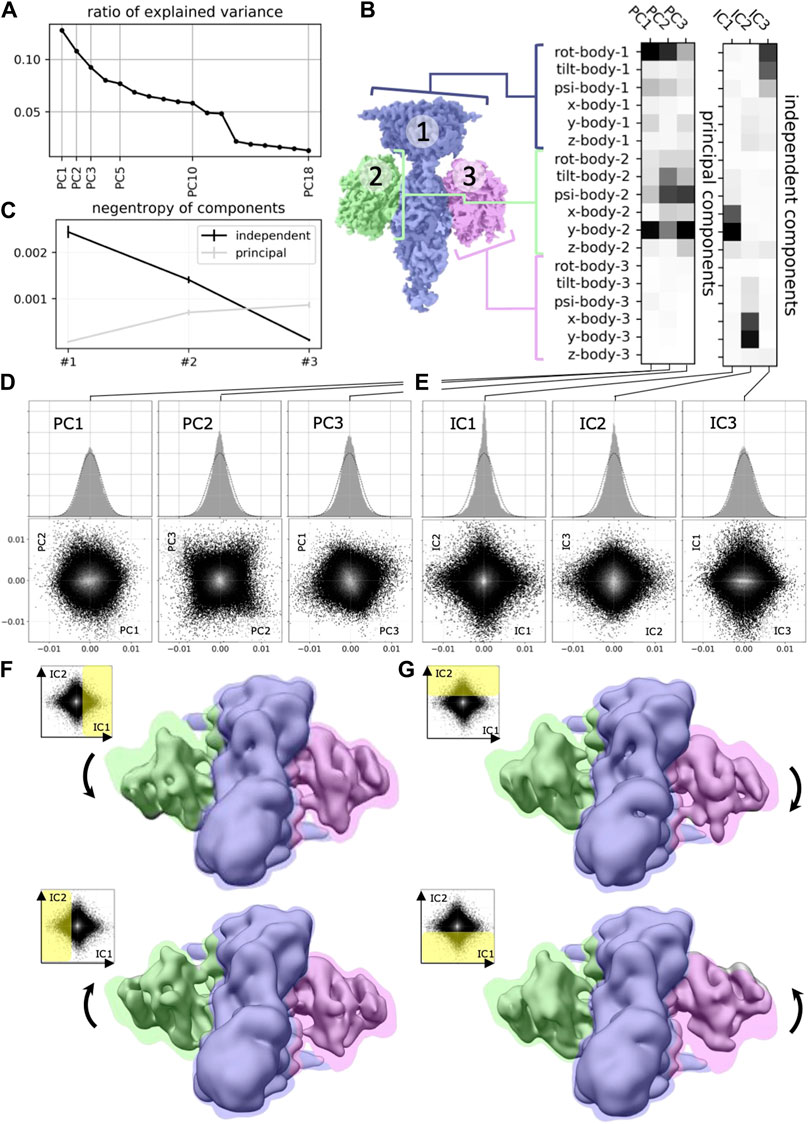

In this work, we will argue that the heterogeneous reconstruction problem in cryo-EM should be framed as a nonlinear ICA problem to help us build better and more interpretable models that separate the independent degrees of freedom with which molecules change conformation in nature. A few prior works have applied linear ICA to molecular imaging (Borek et al., 2018; Gao et al., 2020), finding more meaningful separation of molecular conformation changes (Figure 2). However, to the best of our knowledge, none of the nonlinear ICA approaches mentioned above have so far been applied to the field of computational cryo-EM. Below we will propose three promising candidate approaches for solving the nonlinear ICA problem in cryo-EM.

Figure 2. Application of ICA to cryo-EM data (reproduced with permission from Gao et al., 2020). Original caption: (A) Principal component analysis of the 18 multi-body parameters refined for each particle image yields 18 principal components (PC) displayed here in decreasing order of explained variance. The first three components explain more than 30% of the variability in the particle images. (B) (left) Definition of the multi-body segmentation: the central PDE6 stalk in blue corresponds to Body 1, while the 2 GαT⋅GTP subunits correspond to Bodies 2 and 3. (right) The motion of each body is parameterized with three translational parameters and three rotational parameters. Each of the 18 principal and three independent components is a linear combination of the resulting 18 rigid-body parameters, and their weights are shown here for the first three principal components (from negligible to larger weight as the shade of grey becomes darker). (C) Negentropy (i.e., reverse entropy) of the first three principal and independent components. (D) (resp. (E)): (top) histogram of the projection of all image particle parameters on the first three principal (resp. independent) components PC1, PC2 and PC3 (resp. IC1, IC2 and IC3). (bottom) 2D histograms of the projections of all image particle parameters on all pairs of the first three principal (resp. independent) components. (F) (resp. (G)): Maps illustrating the motions carried by IC1 (resp. IC2). (top) map reconstructed from the particles whose projections belong to the last bin along IC1 (resp. IC2). (bottom) map reconstructed from the particles whose projections belong to the first bin along IC1 (resp. IC2). All maps are shown overlaid on the consensus map, with threshold set at a lower density value, colored according to the scheme in (B).

2.2 Disentangling pose and conformation

The first challenge in computational cryo-EM is that of separating the pose ϕ of a molecule from its conformation z. Again, looking at the cryo-EM mixing function (Eq. 1), x = g*(z*, ϕ*) + ϵ, this means we want to find a representation

$$f: \mathcal{X} \to \mathcal{Z} \times \Phi, \qquad f(x) = (f_z(x) \,\|\, f_\phi(x)) = (z \,\|\, \phi),$$

that separates the estimated conformation z and the pose ϕ.² In other words, as a first step, we could find just two latent subspaces without specifying the individual components, or the bases, inside those subspaces. Again, if g* were linear, we might use the well-developed methods of independent subspace analysis, or subspace ICA, to approach this problem (Hyvärinen and Hoyer, 2000; Theis, 2006). This might also help with the topology of the pose variables, which is typically not Euclidean. For instance, a circular pose variable ϕi ∈ S¹ that lives on a circle and encodes rotations around one axis could not be represented by a single dimension in a typical latent variable model that maps to real-valued scalars, f: X → ℝ^K. However, some subspace variants of ICA provide exactly such a transformation into spherical coordinates (Hyvärinen et al., 2009, Ch. 10).

Many cryo-EM models use separate latent spaces to represent conformation and pose (Donnat et al., 2022). However, that does not mean that models learn, during nonlinear optimization, to actually use those separate spaces in the intended way. Recent work by Edelberg and Lederman (2023) demonstrated that this is a problem in popular cryo-EM models such as CryoDRGN (Zhong et al., 2021a). In particular, they showed that a 90° rotation of an image causes a different prediction in the space of conformation latent variables, even though those should be invariant to pose transformations (see Section 3.1).

2.3 Disentangling independent factors of conformations

A more fundamental challenge is that of separating the independent degrees of freedom of a molecule. Specifically, we want to find a representation fz of the molecular conformation that inverts the ground truth generative model up to some equivalence class ∼C, such as permutations and scalings (see above): $(f_z \circ g^*)(z^*, \phi^*) = z \sim_C z^*$.

A popular approach (see CryoDRGN tutorial) consists in fitting a nonlinear model to cryo-EM data (Figure 1B) followed by manual investigation of the learned latent space that represents conformational heterogeneity (Zhong et al., 2021a) (Figure 1C), thus limiting our ability to quantitatively compare models. Here, we propose a possible remedy in the shape of benchmarks where we simulate data using the generative model (Eq. 1) to assess how close different methods get to the correct (i.e., up to ∼C) representation of conformational latent spaces. This taps into a rich, recent literature in nonlinear ICA methods (Hyvärinen et al., 2024) including benchmarks and metrics for model comparisons (Locatello et al., 2020a).

Once we have benchmarks and metrics, we can measure quantitative progress. However, none of the existing heterogeneous reconstruction approaches in computational cryo-EM are identifiable, mirroring the state of the disentangled representation machine learning field in 2019 (Locatello et al., 2019). To actually make progress, in this perspective, we propose three potential approaches to apply nonlinear ICA methods for the unsupervised discovery of molecular conformational changes:

1. Time-resolved single particle imaging. Observing conformational changes over time, such as a sparse change in a single conformational degree of freedom, provides valuable information; this relies on nonlinear ICA methods that use temporal autocorrelations of the sources (Section 4.2.1).

2. Boltzmann ICA. It may be possible to disentangle conformational degrees of freedom by sampling at different temperatures; this relies on nonlinear ICA methods that use additional conditioning variables u, like temperature (Section 4.2.2).

3. Atomic models. Building constrained models, with knowledge of the physical mechanism, may exclude faulty solutions; this relies on reducing the hypothesis class provided by the (typically over-parameterized) neural network models (Section 4.2.3).

3 Disentangling pose and conformation

In this section we will first discuss the problem of separating pose and conformation in cryo-EM latent variable models. Recent experiments by Edelberg and Lederman (2023) demonstrated that this desired disentanglement is, unfortunately, violated in the case of CryoDRGN (Zhong et al., 2021a). We start by proposing more systematic evaluations and metrics to measure progress on this task (Section 3.1). Note that those are not metrics in a strict mathematical sense, but rather indices that allow us to measure progress. These metrics inspire simple supervised intervention experiments that can be executed in simulation and added to existing training pipelines to disentangle pose and conformation in cryo-EM latent spaces (Section 3.2).

3.1 Evaluating disentanglement of pose and conformation

We are interested in the cryo-EM ground truth generative function $g^*(z^*, \phi^*) = \pi_{\phi^*}(v^*_{z^*})$ (Eq. 1), which consists of a known pose function $\pi_{\phi^*} = \pi(., \phi^*)$, an unknown volume function $v^*_{z^*} = v^*(., z^*)$, an unknown pose ϕ* and an unknown conformation z*. Now, for any specific image, we have full control over the pose function $\pi_{\phi^*}$ but do not know the pose ϕ*; however, for the conformation we have neither control over the volume function $v^*_{z^*}$ nor knowledge of the true conformation z* (Shannon et al., 1959). Consequently, in this section and in Section 3.2 we leverage the fact that we have complete knowledge about the pose function, to measure and constrain the flexibility of the conformation z and volume function vz that we learn in our model.

Put simply, what we want is that, for a molecule with fixed conformation, our model predicts the same conformation even if we change the pose of the molecule. That is, we want the conformation representation fz to be invariant to pose changes. Additionally, we want the pose representation fϕ to be invariant to conformation changes. Mathematically, the requirements of invariance can be written as

$$(f_z \circ g)(z, \phi) = z \quad \text{and} \quad (f_\phi \circ g)(z, \phi) = \phi,$$

for all possible poses ϕ ∈ Φ and conformations z ∈ Z. When we train a model, this can go wrong either in our encoder f(x) (if it fails to separate pose and conformation) or in our decoder g(z, ϕ) = π(vz, ϕ) (if the volume function vz learns to represent pose changes). Moreover, it may be necessary to add observation noise to the generated images, g(z, ϕ) + ϵ, to mitigate domain shift between the training data and these simulations. To measure progress in this challenge, we can turn this into six different evaluation metrics. We introduce those six in Appendix A. In practice, a single metric (Alg. 1, Eq. 2) seems to suffice, as we will discuss in the next section.

3.2 Correcting disentanglement of pose and conformation

Algorithm 1. Interventions for Pose and Conformation Disentanglement.

1. Encode a batch (xi), i = 1, …, N, of images into conformations and poses (zi ‖ ϕi) = f(xi)

2. Detach all zi and ϕi from the computation graph.ᵃ

3. Randomly shuffle the poses, $\phi'_i = \phi_{\sigma(i)}$.ᵇ

4. Decode and encode the new conformation and pose pairs into $(\hat{z}_i \,\|\, \hat{\phi}_i) = (f_{\theta_1} \circ g_{\theta_2})(z_i, \phi'_i)$

5. Measure the distances d(., .) to the original latentsᶜ

$$L(f_{\theta_1}, g_{\theta_2}) = \frac{1}{N} \sum_{i=1}^{N} \left[ d(z_i, \hat{z}_i) + d(\phi'_i, \hat{\phi}_i) \right] \tag{2}$$

6. Optimize the encoder and decoder $(f_{\theta_1}, g_{\theta_2})$ along the derivatives $(\partial L / \partial \theta_1, \partial L / \partial \theta_2)$

7. Repeat 1. to 6. until convergence; or add $L(f_{\theta_1}, g_{\theta_2})$ to the total loss function in regular training

ᵃ We treat these as given latents and do not differentiate with respect to their initial computation.

ᵇ Using a random permutation σ instead of a perturbation δϕ ensures that we stay within the posterior distribution p(ϕ|x) of poses.

ᶜ In the conformation space, this could just be Euclidean; in pose space we would have to compute, e.g., the geodesic distance in SO(3).
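Steps 1 to 5 of Algorithm 1 can be sketched in a few lines of NumPy. This is only an illustration of how the loss in Eq. 2 is computed, under several assumptions: the pose is a single angle on the circle rather than an element of SO(3), the "images" are toy feature vectors, and the encoders and decoder (`encode_clean`, `encode_leaky`, `decode`) are hypothetical hand-written functions rather than trained networks. The gradient step (step 6) is omitted; in an automatic differentiation framework, the first encoding would additionally be detached from the computation graph (step 2).

```python
import numpy as np

def angle_distance(a, b):
    """Geodesic distance on the circle (stand-in for the SO(3) geodesic distance)."""
    return np.abs(np.arctan2(np.sin(a - b), np.cos(a - b)))

def intervention_loss(encode, decode, x, rng):
    """Pose-shuffling consistency loss of Eq. 2 for a batch of images x."""
    z, phi = encode(x)                                 # steps 1-2: latents treated as constants
    phi_shuffled = phi[rng.permutation(len(phi))]      # step 3: permute poses within the batch
    z_hat, phi_hat = encode(decode(z, phi_shuffled))   # step 4: decode, then re-encode
    d_z = np.linalg.norm(z - z_hat, axis=1)            # Euclidean distance in conformation space
    d_phi = angle_distance(phi_shuffled, phi_hat)      # angular distance in pose space
    return np.mean(d_z + d_phi)                        # step 5: average over the batch

def decode(z, phi):
    """Toy decoder: 'image' = (z1, z2, cos(phi), sin(phi))."""
    return np.column_stack([z, np.cos(phi), np.sin(phi)])

def encode_clean(x):
    """Encoder that separates conformation and pose perfectly."""
    return x[:, :2], np.arctan2(x[:, 3], x[:, 2])

def encode_leaky(x):
    """Encoder whose conformation output is contaminated by the pose."""
    z, phi = encode_clean(x)
    return z + 0.5 * x[:, 2:4], phi

rng = np.random.default_rng(0)
z_true = rng.normal(size=(128, 2))
phi_true = rng.uniform(-np.pi, np.pi, size=128)
x = decode(z_true, phi_true)

loss_clean = intervention_loss(encode_clean, decode, x, rng)
loss_leaky = intervention_loss(encode_leaky, decode, x, rng)
```

In this toy setting the disentangled encoder yields a loss of essentially zero, whereas the leaky encoder is penalized because its conformation estimate changes when only the pose is changed.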

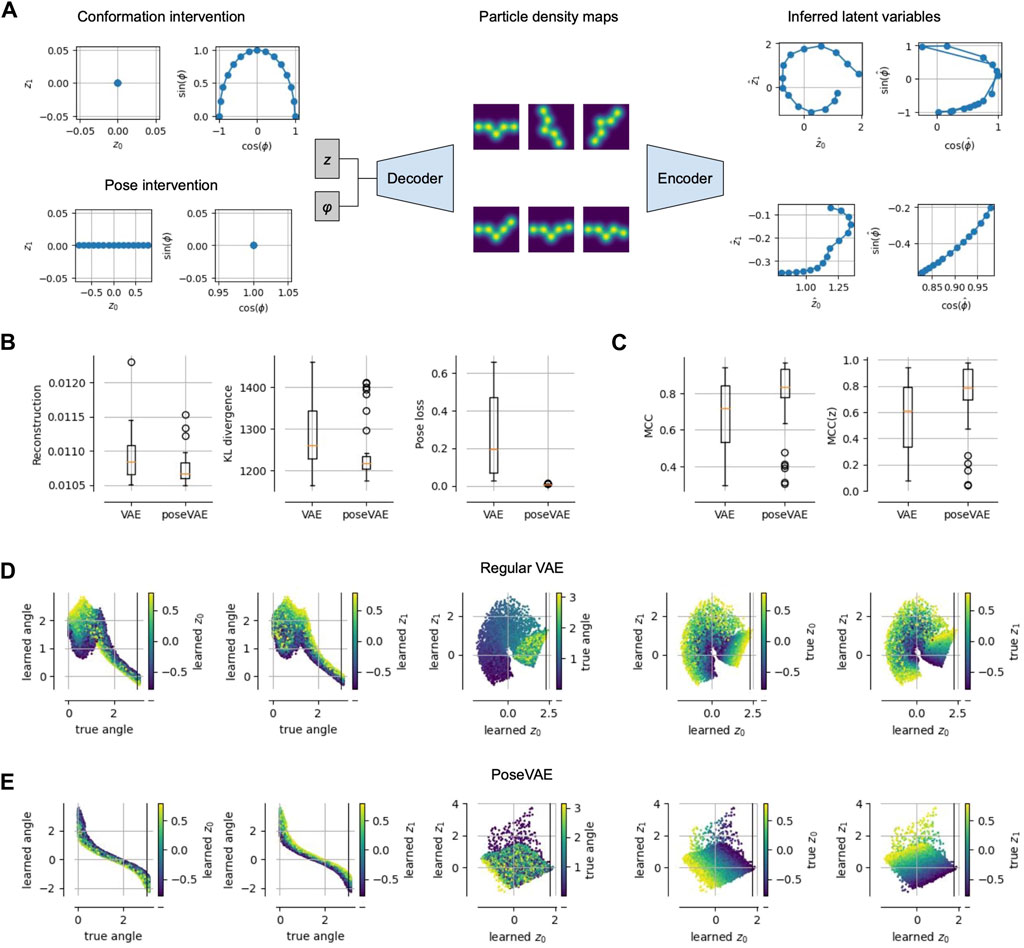

Based on the ideas proposed in the previous section and the metrics in App. 5.1, we are now going to propose a simple penalty term that can be added to existing cryo-EM models to disentangle pose and conformation. The logic behind these intervention experiments is illustrated in Figure 3. This procedure relies on the physics-based decoder g with an explicit pose representation πϕ. Typically, the representation fθ1 and the generator gθ2 are parameterized as neural networks with learnable parameters θ1 and θ2 (Kingma and Welling, 2013; Zhong et al., 2021a). Clearly, we can compute the gradients of all metrics with respect to those parameters. In practice, we observed that good results can be achieved simply by following Algorithm 1. Note that this is a straightforward, supervised learning objective that is a relatively standard problem in modern machine learning and should present little difficulty. Thus, we can just add L(fθ1,gθ2) (Algorithm 1) as an additional penalty term to the loss function of any of the existing models with separate pose and conformation representation to encourage disentanglement.

Figure 3. Physics-based Pose and Conformation Disentanglement. (A) We perform an intervention, i.e., changing only the pose (conformation) of a latent pair; these changed latents are decoded and encoded again to measure the consistency and invariance of our model. (B) Compared to a vanilla VAE, a model PoseVAE trained with interventions (i.e., Alg. 1) achieves lower reconstruction error, lower KL divergence to the prior and a lower pose disentanglement loss (Eq. 2). (C) Disentanglement, measured as mean correlation coefficient (MCC; Hyvarinen and Morioka, 2017), increases not only between pose and conformation variables (left), but also among the conformation variables (right). (D) Visualizations of the learned latents for the vanilla VAE model, showing that the learned angle is not perfectly representing the true angle (plots one and two from the left); the three plots on the right show the learned conformation latents, representing mixtures of true conformation and pose. (E) Same as (D) but for PoseVAE, showing a perfect monotonic relationship between learned and true angle; also the conformation latents contain little information about the true angle (third plot) and disentangle the true conformation variables up to a 45° rotation.

Importantly, we are only able to write this approach in such a concise and easy form because of the physics-based decoder g. By this we mean the fact that we know the physics (i.e., the optics) behind the projection πϕ in the image formation model (Eq. 1). We could imagine a different cryo-EM generative model where both the conformation and the pose change are modeled by an implicit volume representation v defined on ℝ³ × Z′ with some extended latent space Z′. Or, in even more general terms, we could just train a standard VAE (Kingma and Welling, 2013) on cryo-EM images to learn a neural network encoder f: X → Z″ and decoder g: Z″ → X back to image space with some, potentially, even more abstract latent space Z″ (Miolane et al., 2020). However, such abstract models would not have the built-in physics of objects in space, their poses ϕ and their projections πϕ onto a two-dimensional image, which we assume a priori in our standard cryo-EM decoder (Eq. 1). In other words, such more abstract models would lack the architectural distinction between z and ϕ which we need in our intervention experiment to disentangle pose and conformation. Thus, we would not be able to manipulate distinct parts of the extended latent spaces (Z′ or Z″), knowing that those represent distinct physical manipulations of the image.

For models using an implicit representation of the volume, the reason we have to use this interventional approach to disentangle pose and conformation in the first place is that the implicit representation v(z) is a highly flexible neural network that can easily model pose changes (Sitzmann et al., 2020). Only by combining this with the constraints of physics (i.e., the image formation optics) are we able to disentangle the pose and conformation representations. This is akin to disentanglement approaches that use the assumption of sparse manipulations, i.e., pairs of data points where only subsets of the latents are modified (Locatello et al., 2020b). Those models have been demonstrated to solve the nonlinear ICA problem theoretically and practically. Thus, whenever we know something about the physics of the world, it makes our representation learning task much simpler if we can run intervention experiments that test the causal dependencies between our latent variables (Ahuja et al., 2023; Squires et al., 2023).

We performed a small proof-of-concept experiment to test these predictions and report the results in Figure 3. We train a standard VAE (with separate pose and implicit volume representations) on pseudo cryo-EM data and compare it to the same model with the additional training step in Algorithm 1. We refer to the latter model as PoseVAE. In Figure 3B, we see that the additional penalty term in PoseVAE does, indeed, succeed at lowering the pose disentanglement metric (Eq. 2). Inspecting the latent representations (Figure 3E, middle), we observe that the pose is now fully confined to the pose variable. Moreover, we observe that the conformation space z is, itself, becoming more disentangled (Figure 3E, right). Intuitively, this makes sense because less can go wrong when the conformation space has to encode two instead of three variables. Quantitatively, this observation is confirmed by standard disentanglement metrics showing that PoseVAE achieves a higher mean correlation coefficient (MCC) both across all latents (Figure 3C, left) and within the conformation latents z alone (Figure 3C, right). This is encouraging for the next task of disentangling the conformations.

4 Disentangling conformations

Analogously to the previous section, in a second step, we propose a theoretical framework with metrics and benchmarks concerning the further disentanglement of the individual components inside the conformation vector z (Figure 1). This addresses the essential challenge of interpretable cryo-EM conformational representations for heterogeneous reconstruction (Section 4.1). These benchmarks will help us measure true progress in the budding field of computational cryo-EM (June 2023: Cryo-EM Heterogeneity Challenge). Lastly, we discuss different methods to leverage recent developments in nonlinear ICA that have the potential to build the next generation of cryo-EM models that get closer to the true answer (Section 4.2). These models may require future technological advancements such as temperature-dependent cryo-EM (Bock and Grubmuller, 2022) or time-resolved single particle (X-ray) imaging (Shenoy et al., 2023a; b).

4.1 Evaluating disentanglement of independent factors of conformations

We consider the disentanglement of the individual components or dimensions inside the conformation space z. We propose a metric, in the form of a computational procedure, to evaluate whether the independent components of conformational variations are disentangled in the latent space. Essentially, this proposal relies on simulated data that exactly fulfills the generative model (Eq. 1) which we assume for cryo-EM data. Having full control over the generative model is important, not only to measure progress, but also to simulate extended datasets (e.g., time-resolved imaging), because we know that only those future datasets with additional assumptions will provably allow progress in disentangling conformations. Otherwise, this challenge is hopeless (Locatello et al., 2019). Thus, in the ideal case, we have access to a good cryo-EM simulator g*, which we can use directly.

However, if we do not have a good cryo-EM simulator, we can use an existing state-of-the-art model and test whether its latents can be recovered. More precisely, we proceed as follows. First, train a regular cryo-EM model with the additional training loss (Algorithm 1) that ensures that pose and conformation are disentangled. Then, check that the model is approximately invertible. This is a common assumption in ICA theory (Hyvärinen et al., 2024) that makes the task of recovering the sources well-defined. Concretely, we want to make sure that no two distinct points in conformation latent space, z1 ≠ z2, lead to the same volume representation, v(z1) = v(z2). Once this is approximately validated, we can use the model as a cryo-EM simulator. Intuitively, we now treat this first model as the ground truth generator g*≔g and test whether we can recover its latents z*≔z.
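One way to approximately validate this invertibility condition is a numerical spot check on sampled latents. The sketch below is an illustration under our own assumptions, not a procedure specified in the text: `volume_fn` is a hypothetical stand-in for the trained volume decoder v(·), and the pairwise-ratio criterion is one heuristic among many. It reports the smallest ratio ‖v(z1) − v(z2)‖ / ‖z1 − z2‖ over all sampled pairs; a value near zero flags two distinct conformations that decode to (nearly) the same volume.

```python
import numpy as np


def min_expansion_ratio(volume_fn, z_samples):
    """Smallest ||v(z1) - v(z2)|| / ||z1 - z2|| over all pairs of samples.

    volume_fn: hypothetical decoder mapping (n, K) latents to (n, ...) volumes.
    z_samples: array of shape (n, K) with sampled conformation latents.
    """
    z = np.asarray(z_samples, dtype=float)
    v = np.asarray(volume_fn(z), dtype=float).reshape(len(z), -1)
    # Pairwise Euclidean distances in latent space and in volume space.
    dz = np.linalg.norm(z[:, None, :] - z[None, :, :], axis=-1)
    dv = np.linalg.norm(v[:, None, :] - v[None, :, :], axis=-1)
    # Keep each unordered pair once and skip exact duplicates in z.
    iu = np.triu_indices(len(z), k=1)
    dz, dv = dz[iu], dv[iu]
    keep = dz > 0
    return float(np.min(dv[keep] / dz[keep]))


# Toy usage: z -> z**2 maps z and -z to the same "volume", so it is not injective.
rng = np.random.default_rng(0)
z_half = rng.normal(size=(50, 2))
z_sym = np.concatenate([z_half, -z_half])
print("identity map:", min_expansion_ratio(lambda z: z, z_sym))
print("squaring map:", min_expansion_ratio(lambda z: z ** 2, z_sym))
```

A ratio bounded away from zero on a dense sample is only evidence for invertibility, not a proof; the check can miss collisions between points that were never sampled.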

The procedure to evaluate and benchmark heterogeneous cryo-EM latent variable models would then be to assess how well they learn the same (up to equivalences) conformation latent space as the original model. Thus, we would sample a ground truth conformation z* and some random pose ϕ* and feed them into the ground truth model to obtain an image x = g*(z*, ϕ*) + ϵ. We then process this image with a candidate model fz(x) = z to obtain the learned conformation representation z. Consequently, we have to compare the two vector representations z* and z. Depending on the equivalence class (∼C) that we are interested in, there are many different metrics to assess how well z* is disentangled in z. Intuitively, we want a one-to-one correspondence between the two representations, where changing a single entry in z* corresponds to changing a single entry in z, and vice versa. Fortunately, this problem has been studied extensively in the machine learning subfield of disentangled representation learning (Bengio et al., 2013), with many proposed metrics and standardized benchmarks (Locatello et al., 2020a). We can build on those advances to obtain better quantitative measures of progress in heterogeneous cryo-EM reconstruction than volume-based comparisons.
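The sampling step of this procedure can be sketched as follows. This is a minimal illustration with toy stand-ins, not a cryo-EM simulator: `toy_generator` and `toy_encoder` are hypothetical placeholders for g* and fz, the standard normal prior on z* is an assumption, and the pose is reduced to a single in-plane angle (real poses are 3D rotations plus shifts).

```python
import numpy as np


def make_benchmark_set(generator, n_samples, latent_dim, noise_std, rng):
    """Sample (z*, phi*) and render noisy images x = g*(z*, phi*) + eps."""
    # Ground-truth conformation latents (standard normal prior is an assumption).
    z_star = rng.normal(size=(n_samples, latent_dim))
    # Toy pose: one angle per image, drawn uniformly from [0, 2*pi).
    phi_star = rng.uniform(0.0, 2.0 * np.pi, size=n_samples)
    clean = generator(z_star, phi_star)
    # Additive Gaussian noise eps with standard deviation noise_std.
    x = clean + noise_std * rng.normal(size=clean.shape)
    return z_star, phi_star, x


def toy_generator(z, phi):
    """Hypothetical nonlinear 'renderer' producing 16-pixel images."""
    w = np.random.default_rng(0).normal(size=(z.shape[1], 16))  # fixed weights
    return np.tanh(z @ w) + 0.1 * np.cos(phi)[:, None]


def toy_encoder(x):
    """Hypothetical candidate model f_z; here it simply reads two pixels."""
    return x[:, :2]


rng = np.random.default_rng(42)
z_star, phi_star, x = make_benchmark_set(toy_generator, 500, 2, 0.05, rng)
z_learned = toy_encoder(x)  # learned representation to compare against z_star
print(z_star.shape, x.shape, z_learned.shape)
```

The pair (z_star, z_learned) is what a disentanglement metric then takes as input; keeping the generator and the encoder as plain callables lets the same harness score different candidate models against one fixed ground truth.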

As an example metric measuring disentanglement, we will discuss the Mean Correlation Coefficient (MCC) (Hyvarinen and Morioka, 2017). Intuitively, we want each learned latent variable to be perfectly correlated (or anti-correlated, since sign flips do not compromise interpretability) with a single source variable. To measure this, we compute the absolute correlation coefficient between all ground truth latents z* and all learned latents z. To account for permutations, we have to solve a linear sum assignment problem over permutations σ: {1, …, K} → {1, …, K}, which finds the best matching zσ(i)* for each zi. The MCC is then simply the mean over those matches

$$\mathrm{MCC}(z, z^*) = \max_{\sigma} \frac{1}{K} \sum_{i=1}^{K} \left| \mathrm{corr}\left(z_i, z^*_{\sigma(i)}\right) \right|$$

with corr() denoting correlation. Other metrics focus on decodability, or informational independence (Locatello et al., 2019) and there is no agreed-upon consensus on the optimal disentanglement metric. Thus, we simply report scores across all metrics; these can be further grouped by rank ordering to get overall model comparison scores (see Klindt et al., 2020).
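The MCC described above can be computed in a few lines. The sketch below is one possible implementation, assuming both representations have the same number K of dimensions and are stored as arrays of shape (n_samples, K); the function name is ours. It uses NumPy's `corrcoef` for the correlations and SciPy's `linear_sum_assignment` to find the best permutation.

```python
import numpy as np
from scipy.optimize import linear_sum_assignment


def mean_correlation_coefficient(z_learned, z_true):
    """Return (MCC, sigma), where sigma[i] indexes the true latent matched to z_i."""
    z_learned = np.asarray(z_learned, dtype=float)
    z_true = np.asarray(z_true, dtype=float)
    k = z_learned.shape[1]
    # With rowvar=False, columns are variables; the result is a (2K, 2K) matrix.
    corr = np.corrcoef(z_learned, z_true, rowvar=False)
    # Upper-right block: rows are learned latents, columns are true latents.
    abs_corr = np.abs(corr[:k, k:])
    # Permutation that maximizes the summed absolute correlation.
    rows, sigma = linear_sum_assignment(abs_corr, maximize=True)
    return float(abs_corr[rows, sigma].mean()), sigma


# Toy check: a permuted, rescaled, sign-flipped copy of the sources.
rng = np.random.default_rng(0)
z_true = rng.normal(size=(2000, 3))
z_learned = z_true[:, [2, 0, 1]] * np.array([-1.0, 2.0, 0.5])
mcc, sigma = mean_correlation_coefficient(z_learned, z_true)
print(mcc, sigma)
```

Because the score takes absolute correlations and optimizes over permutations, it is invariant to reordering, rescaling, and sign flips of the learned latents, which matches the equivalences discussed above. It only captures linear dependence, so a learned latent that is a nonlinear function of a single source can still score below 1.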

4.2 Correcting disentanglement of independent factors of conformations

Let us now discuss the hardest task, i.e., finding the independent degrees of freedom that determine the conformation of a molecule (Figure 1). This is a hard problem in the sense that, for a nonlinear function g (Eq. 1), without any additional assumption it has been known for the last two decades that this is practically impossible (Hyvärinen and Pajunen, 199
